Warm-up + CosineAnnealing


CosineAnnealingLR

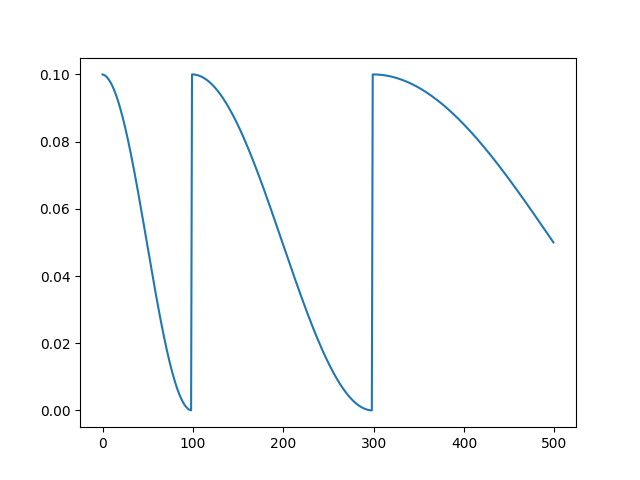

torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0)

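where:

T_max is the number of scheduler updates (calls to scheduler.step()) it takes the cosine function to complete half a period, that is, to decay from the initial learning rate to eta_min.

eta_min is the minimum learning rate, reached at the end of that half period.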
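The roles of T_max and eta_min can be checked against the closed-form schedule given in the PyTorch documentation, η_t = η_min + ½(η_max − η_min)(1 + cos(π·t / T_max)). The following is a minimal sketch in plain Python, not the library's implementation; the helper name `cosine_annealing_lr` is hypothetical, and the values 0.1, 0.05 and 500 are assumed to match the settings used in this post's CosineAnnealingLR example.

```python
import math


def cosine_annealing_lr(step, base_lr, T_max, eta_min=0.0):
    """Closed-form cosine annealing value after `step` scheduler updates.

    Hypothetical helper for illustration; it is not part of PyTorch.
    """
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * step / T_max))


# Assumed settings: base_lr=0.1, eta_min=0.05, T_max=500 updates.
lr_start = cosine_annealing_lr(0, base_lr=0.1, T_max=500, eta_min=0.05)
lr_middle = cosine_annealing_lr(250, base_lr=0.1, T_max=500, eta_min=0.05)
lr_end = cosine_annealing_lr(500, base_lr=0.1, T_max=500, eta_min=0.05)
print(lr_start, lr_middle, lr_end)
```

The learning rate starts at base_lr, passes the midpoint between base_lr and eta_min halfway through, and reaches eta_min after exactly T_max updates.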

import matplotlib.pyplot as plt
import torch
from torch.optim.lr_scheduler import CosineAnnealingLR, CosineAnnealingWarmRestarts, LambdaLR
from torchvision.models import resnet18

epochs = 5
steps = 100  # batches per epoch
learning_rate = 0.1

model = resnet18(weights=None)  # randomly initialized, no pretrained weights
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# The scheduler is stepped once per batch, so T_max is the total number of batches.
T_max = steps * epochs
scheduler = CosineAnnealingLR(optimizer, T_max, eta_min=0.05)

cur_lr_list = []
for epoch in range(epochs):
    for batch in range(steps):
        optimizer.step()  # a real loop would run forward/backward before this
        scheduler.step()
        cur_lr = optimizer.param_groups[-1]['lr']
        cur_lr_list.append(cur_lr)

# Plot the learning rate against the update index.
x_list = list(range(len(cur_lr_list)))
plt.figure()
plt.plot(x_list, cur_lr_list)
plt.show()

CosineAnnealingWarmRestarts

torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0=100, T_mult=2)

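where:

T_0, like T_max, is the number of updates the cosine function takes to complete half a period; here it sets the length of the first cycle, after which the learning rate restarts.

T_mult is the factor by which the cycle length is multiplied after each restart. Validation accuracy tends to be best at the low points of the learning rate and to drop somewhat when the learning rate climbs back up. To end training at a better convergence point it therefore helps to set T_mult > 1: the cycles get longer as training proceeds, so in the late stage the learning rate no longer jumps back up and instead keeps decreasing until training ends (provided the total number of steps coincides with the end of a cycle).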
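To make the effect of T_0 and T_mult concrete, the sketch below computes where the restarts fall and what the learning rate is around them. It is a plain-Python approximation of the documented schedule, not PyTorch's own code; the helper names `restart_steps` and `warm_restarts_lr` are hypothetical, and a base learning rate of 0.1 with eta_min = 0 is assumed.

```python
import math


def restart_steps(T_0, T_mult, num_restarts):
    """Step indices at which the learning rate jumps back to its initial value."""
    steps, cycle_len, boundary = [], T_0, 0
    for _ in range(num_restarts):
        boundary += cycle_len
        steps.append(boundary)
        cycle_len *= T_mult
    return steps


def warm_restarts_lr(step, base_lr, T_0, T_mult=1, eta_min=0.0):
    """Cosine annealing with warm restarts, evaluated at an integer `step`."""
    cycle_len, t_cur = T_0, step
    while t_cur >= cycle_len:  # skip over the cycles that are already finished
        t_cur -= cycle_len
        cycle_len *= T_mult
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * t_cur / cycle_len))


boundaries = restart_steps(T_0=100, T_mult=2, num_restarts=3)
print(boundaries)  # [100, 300, 700]
print(warm_restarts_lr(299, base_lr=0.1, T_0=100, T_mult=2))  # close to 0, end of the second cycle
print(warm_restarts_lr(300, base_lr=0.1, T_0=100, T_mult=2))  # back to 0.1, start of the third cycle
```

With T_0 = 100 and T_mult = 2, a run of 700 steps ends exactly at the bottom of the third cycle, so its last 400 steps are one uninterrupted decay. A run of 500 steps would instead stop partway through that cycle, at a learning rate still well above the minimum.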

Custom implementation of Warm-up + CosineAnnealing

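import math

T_max = steps * epochs
warm_up_iter = 100
lr_max, lr_min = learning_rate, 0

def lambda0(cur_iter):
    # LambdaLR multiplies the initial learning rate by this return value.
    if cur_iter < warm_up_iter:
        return cur_iter / warm_up_iter  # linear warm-up from 0 to 1
    # Cosine annealing from lr_max down to lr_min over the remaining iterations.
    progress = (cur_iter - warm_up_iter) / (T_max - warm_up_iter)
    return (lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(progress * math.pi))) / learning_rate

scheduler = LambdaLR(optimizer, lambda0)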
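LambdaLR multiplies the optimizer's initial learning rate by whatever the function returns, so the schedule above can be sanity-checked without PyTorch by evaluating the multiplier at a few steps. The sketch below restates the same formula as a standalone function; the name `warmup_cosine_factor` and its keyword arguments are hypothetical, and the defaults assume this post's settings (learning rate 0.1, 500 total steps, 100 warm-up steps).

```python
import math


def warmup_cosine_factor(cur_iter, warm_up_iter=100, total_iter=500,
                         lr_max=0.1, lr_min=0.0, base_lr=0.1):
    """Multiplier applied to base_lr: linear warm-up, then cosine decay."""
    if cur_iter < warm_up_iter:
        return cur_iter / warm_up_iter
    progress = (cur_iter - warm_up_iter) / (total_iter - warm_up_iter)
    lr = lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(progress * math.pi))
    return lr / base_lr


# Print the resulting learning rate at the start, mid warm-up, peak, mid decay and end.
for it in (0, 50, 100, 300, 500):
    print(it, 0.1 * warmup_cosine_factor(it))
```

The multiplier is 0 at step 0, so the very first optimizer update runs with a learning rate of exactly zero. If that matters for a given run, one option is to use (cur_iter + 1) / warm_up_iter in the warm-up branch.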

Warm-up + CosineAnnealing with SequentialLR

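from torch.optim.lr_scheduler import LinearLR, SequentialLR

warm_up_iter = 100
# Linear warm-up from 1% of the base learning rate to the full value.
scheduler1 = LinearLR(optimizer, start_factor=0.01, end_factor=1, total_iters=warm_up_iter)
# Cosine annealing over the remaining iterations.
scheduler2 = CosineAnnealingLR(optimizer, steps * epochs - warm_up_iter)
# Switch from scheduler1 to scheduler2 after warm_up_iter steps.
scheduler = SequentialLR(optimizer, schedulers=[scheduler1, scheduler2], milestones=[warm_up_iter])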

Achieving a similar effect with OneCycleLR

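from torch.optim.lr_scheduler import OneCycleLR

T_max = steps * epochs
# pct_start=0.2: the first 20% of the steps increase the learning rate towards max_lr.
scheduler = OneCycleLR(optimizer, max_lr=0.1, pct_start=0.2, total_steps=T_max)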
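OneCycleLR is similar but not identical to the warm-up plus cosine schedules in the previous sections. According to the PyTorch documentation, its defaults are div_factor=25, final_div_factor=1e4 and anneal_strategy='cos', so the warm-up phase is cosine-shaped rather than linear, starts from max_lr / div_factor, and the run ends far below that starting value. The arithmetic below is a small sketch of those derived quantities, assuming the defaults are left unchanged; the variable names are illustrative only.

```python
# Assumed settings from this post plus OneCycleLR's documented defaults.
max_lr = 0.1
total_steps = 500
pct_start = 0.2
div_factor = 25.0        # default: initial_lr = max_lr / div_factor
final_div_factor = 1e4   # default: min_lr = initial_lr / final_div_factor

initial_lr = max_lr / div_factor
min_lr = initial_lr / final_div_factor
warm_up_steps = round(pct_start * total_steps)

print(initial_lr)     # starting learning rate
print(min_lr)         # learning rate at the very end
print(warm_up_steps)  # approximate length of the increasing phase
```

Note also that cycle_momentum defaults to True, so OneCycleLR adjusts the optimizer's momentum as well, unless cycle_momentum=False is passed.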

