Comparing the two ways to save a model in PyTorch

torch.save(model, "model.pt")

'''

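This saves the following three things:

Model structure: the module hierarchy (layers and submodules) is pickled together with a reference to the model class (its module path and class name). The class source code itself is not stored in the file.

Model weights: the values of all parameters and buffers.

Model state: attributes of the object, such as the train/eval mode flag (model.training).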

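Loading:

model = torch.load("model.pt", weights_only=False)

(Since PyTorch 2.6, torch.load defaults to weights_only=True, which refuses to unpickle a full model object. Pass weights_only=False only for files you trust.)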

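Characteristics:

1. Saves the whole model object, so the loading script does not have to construct the model itself. The class definition must still be importable at load time under the same module path, because pickle stores only a reference to the class, not its source.

2. Depends on the environment, so it suits a packaged (frozen) environment. PyTorch's internal implementation changes between versions; if the saved model object relies on those internal attributes, loading can fail.

3. To switch models, you only change the model file name, not the code.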

'''
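
As a minimal sketch of the whole-object approach described above, the following round-trips a small model through `torch.save` and `torch.load`. The stand-in `nn.Sequential` model and the temporary file path are assumptions for illustration; a custom class such as the UNet shown later would also have to be importable at load time.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Hypothetical stand-in model built only from torch.nn classes,
# so unpickling needs no user-defined source file.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
model.eval()  # the train/eval flag travels with the pickled object

path = os.path.join(tempfile.mkdtemp(), "model.pt")
torch.save(model, path)  # pickles the entire nn.Module object

# weights_only=False permits arbitrary unpickling: use it only on trusted files.
loaded = torch.load(path, weights_only=False)

x = torch.randn(3, 4)
with torch.no_grad():
    same_output = torch.equal(model(x), loaded(x))

print(type(loaded).__name__, loaded.training, same_output)
```

The loaded object is ready to use without calling a constructor, and it comes back in eval mode because `model.training` was `False` when it was saved.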

state_dict = {"net": model.state_dict(), "optimizer": optimizer.state_dict(), "epoch": epoch}

torch.save(state_dict, "model.pth")

'''

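model.state_dict() saves only:

The model weights (all parameters, plus persistent buffers such as the running mean and variance of batch normalization layers)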

Loading:

model = MyModel()

checkpoint = torch.load("model.pth")

model.load_state_dict(checkpoint["net"])

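Characteristics:

1. Loading requires the model source code, because the model must be instantiated before its weights can be restored.

2. Far less dependent on the PyTorch version, since the file holds only tensors and plain Python containers rather than a pickled module object.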

'''
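
The checkpoint workflow above can be sketched end to end as follows. The tiny `MyModel` class, the SGD settings, and the temporary path are hypothetical placeholders, not the network used in this post.

```python
import os
import tempfile

import torch
import torch.nn as nn


class MyModel(nn.Module):
    """Hypothetical tiny model standing in for the real network."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm2d(4)

    def forward(self, x):
        return torch.relu(self.bn(self.conv(x)))


model = MyModel()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
epoch = 5

# Save weights, optimizer state, and the epoch counter in one dictionary.
checkpoint = {"net": model.state_dict(), "optimizer": optimizer.state_dict(), "epoch": epoch}
path = os.path.join(tempfile.mkdtemp(), "model.pth")
torch.save(checkpoint, path)

# Resume: rebuild the objects from source, then restore their state.
restored = MyModel()
restored_opt = torch.optim.SGD(restored.parameters(), lr=0.01, momentum=0.9)
ckpt = torch.load(path, map_location="cpu", weights_only=True)
restored.load_state_dict(ckpt["net"])
restored_opt.load_state_dict(ckpt["optimizer"])
start_epoch = ckpt["epoch"] + 1

# Every parameter and buffer should match the original model exactly.
weights_match = all(
    torch.equal(v, restored.state_dict()[k]) for k, v in model.state_dict().items()
)
print(start_epoch, weights_match)
```

Because the file contains only tensors and plain containers, it can be read with `weights_only=True`, which avoids executing arbitrary pickled code.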

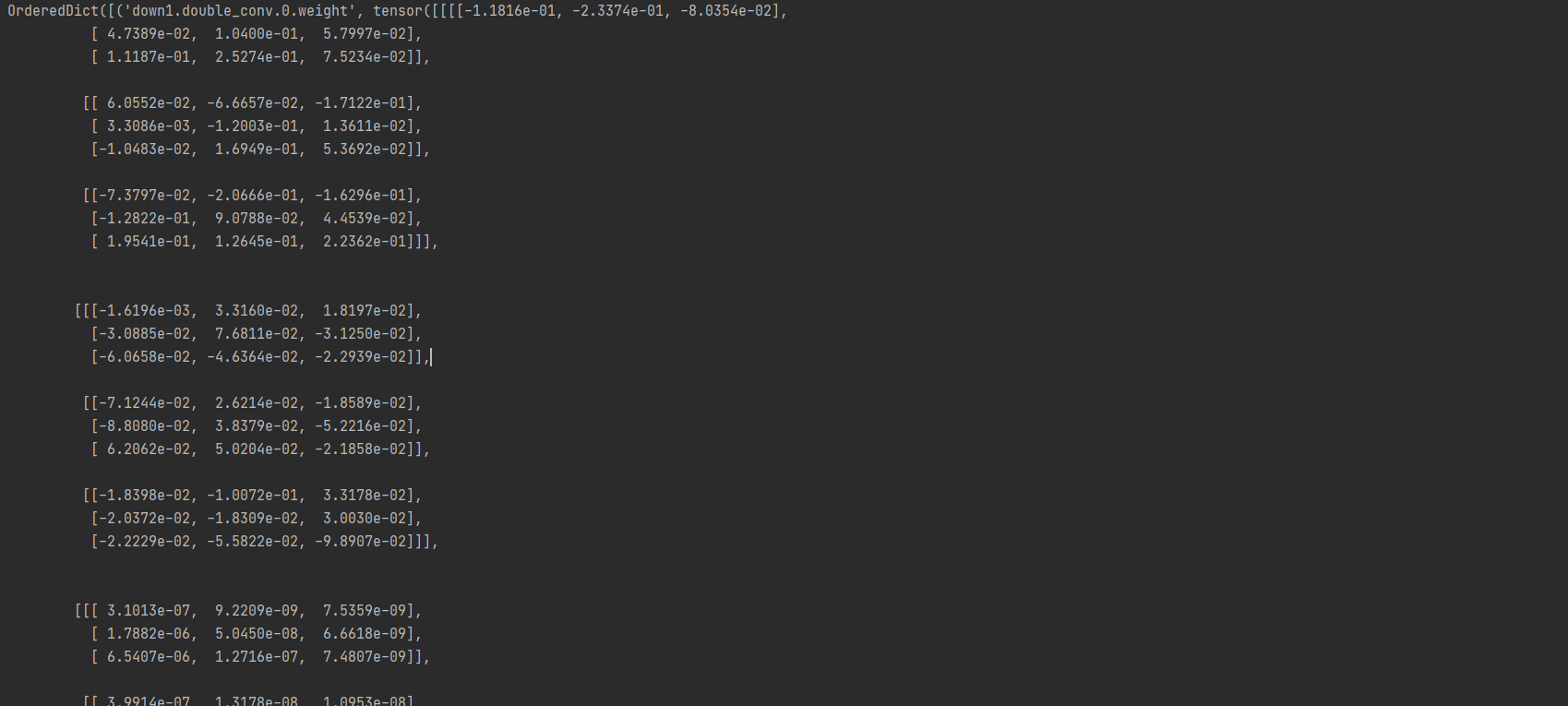

Model weights:

Model structure:

UNet(
  (time_emb): Sequential(
    (0): TimePositionEmbedding()
    (1): Linear(in_features=256, out_features=256, bias=True)
    (2): ReLU()
  )
  (cls_emb): Embedding(10, 32)
  (enc_convs): ModuleList(
    (0): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(1, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=64, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=64, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=64, bias=True)
        (norm1): LayerNorm((64,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=64, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=64, bias=True)
        )
        (norm2): LayerNorm((64,), eps=1e-05, elementwise_affine=True)
      )
    )
    (1): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=128, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=128, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=128, bias=True)
        (norm1): LayerNorm((128,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=128, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=128, bias=True)
        )
        (norm2): LayerNorm((128,), eps=1e-05, elementwise_affine=True)
      )
    )
    (2): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=256, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=256, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=256, bias=True)
        (norm1): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=256, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=256, bias=True)
        )
        (norm2): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      )
    )
    (3): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=512, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=512, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=512, bias=True)
        (norm1): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=512, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=512, bias=True)
        )
        (norm2): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
      )
    )
    (4): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=1024, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=1024, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=1024, bias=True)
        (norm1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=1024, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=1024, bias=True)
        )
        (norm2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)
      )
    )
  )
  (maxpools): ModuleList(
    (0): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (deconvs): ModuleList(
    (0): ConvTranspose2d(1024, 512, kernel_size=(2, 2), stride=(2, 2))
    (1): ConvTranspose2d(512, 256, kernel_size=(2, 2), stride=(2, 2))
    (2): ConvTranspose2d(256, 128, kernel_size=(2, 2), stride=(2, 2))
    (3): ConvTranspose2d(128, 64, kernel_size=(2, 2), stride=(2, 2))
  )
  (dec_convs): ModuleList(
    (0): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(1024, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=512, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=512, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=512, bias=True)
        (norm1): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=512, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=512, bias=True)
        )
        (norm2): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
      )
    )
    (1): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=256, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=256, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=256, bias=True)
        (norm1): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=256, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=256, bias=True)
        )
        (norm2): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      )
    )
    (2): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=128, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=128, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=128, bias=True)
        (norm1): LayerNorm((128,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=128, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=128, bias=True)
        )
        (norm2): LayerNorm((128,), eps=1e-05, elementwise_affine=True)
      )
    )
    (3): ConvBlock(
      (seq1): Sequential(
        (0): Conv2d(128, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (time_emb_linear): Linear(in_features=256, out_features=64, bias=True)
      (relu): ReLU()
      (seq2): Sequential(
        (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
      )
      (crossattn): CrossAttention(
        (w_q): Linear(in_features=64, out_features=16, bias=True)
        (w_k): Linear(in_features=32, out_features=16, bias=True)
        (w_v): Linear(in_features=32, out_features=16, bias=True)
        (softmax): Softmax(dim=-1)
        (z_linear): Linear(in_features=16, out_features=64, bias=True)
        (norm1): LayerNorm((64,), eps=1e-05, elementwise_affine=True)
        (feedforward): Sequential(
          (0): Linear(in_features=64, out_features=32, bias=True)
          (1): ReLU()
          (2): Linear(in_features=32, out_features=64, bias=True)
        )
        (norm2): LayerNorm((64,), eps=1e-05, elementwise_affine=True)
      )
    )
  )
  (output): Conv2d(64, 1, kernel_size=(1, 1), stride=(1, 1))
)
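
To connect the printed structure with the saved weights, here is a small sketch that lists the `state_dict()` keys for a block shaped like the first `seq1` above. The UNet source is not shown in this post, so the standalone `nn.Sequential` below is only an assumed equivalent of that one sub-block.

```python
import torch.nn as nn

# Assumed stand-in for enc_convs[0].seq1: Conv2d -> BatchNorm2d -> ReLU
seq1 = nn.Sequential(
    nn.Conv2d(1, 64, kernel_size=3, stride=1, padding=1),
    nn.BatchNorm2d(64),
    nn.ReLU(),
)

state = seq1.state_dict()
keys = list(state.keys())

# Parameters are learned by the optimizer; buffers are tracked statistics.
param_names = [name for name, _ in seq1.named_parameters()]
buffer_names = [name for name, _ in seq1.named_buffers()]

for key in keys:
    print(key, tuple(state[key].shape))
```

Each key is the dotted path of module names followed by the tensor name, so in the full model the same BatchNorm statistic would presumably appear under a key such as `enc_convs.0.seq1.1.running_mean`. ReLU contributes no entries because it has neither parameters nor buffers.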
