Machine Learning (Linear Regression)

Linear Model

Loss Function

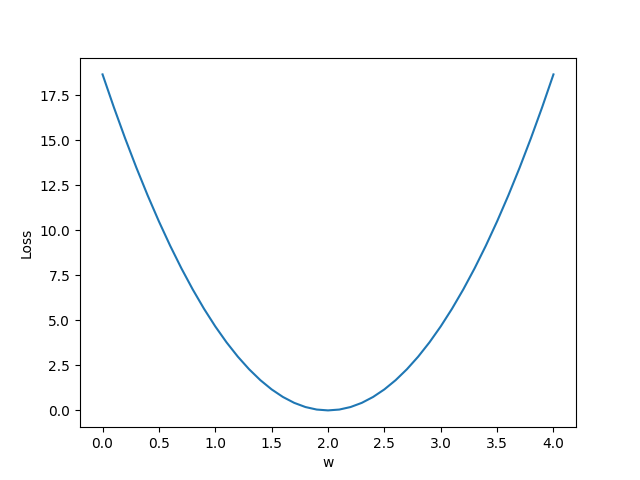

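How it works: given the original x and y values, sweep the weight w over the range [0.0, 4.0] in steps of 0.1. For each w, use the linear prediction function y_pred = x * w, compute the loss between the predictions and the original y values, and finally plot the mean loss against w.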
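Written out as a formula, the quantity being swept looks like this. The symbols \hat{y}_i and N are notation introduced here for illustration; the post itself only gives the code.

$$
\mathrm{loss}_i(w) = (\hat{y}_i - y_i)^2 = (x_i w - y_i)^2,
\qquad
\mathrm{MSE}(w) = \frac{1}{N}\sum_{i=1}^{N} (x_i w - y_i)^2
$$

For the data used in this post, x = (1, 2, 3) and y = (2, 4, 6), every sample satisfies y_i = 2 x_i, so the sum simplifies:

$$
\mathrm{MSE}(w) = \frac{1}{3}\sum_{i=1}^{3} x_i^2 (w - 2)^2 = \frac{1^2 + 2^2 + 3^2}{3}(w - 2)^2 = \frac{14}{3}(w - 2)^2
$$

This is a parabola in w: it is zero at w = 2 and equals 14/3 at w = 3.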

| x | y | y_pred (w = 3) | loss |
|---|---|---|---|
| 1 | 2 | 3 | 1 |
| 2 | 4 | 6 | 4 |
| 3 | 6 | 9 | 9 |
| | | | mean = 14/3 |
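The rows of the table can be reproduced with a few lines of Python. This is a minimal sketch that assumes the table was computed with w = 3 (the predictions are 3x); the names `rows` and `mse` are chosen here for illustration.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
w = 3.0  # assumed weight: the table's predictions equal 3 * x

rows = []
for x, y in zip(x_data, y_data):
    y_pred = x * w                    # prediction for this sample
    sq_err = (y_pred - y) ** 2        # squared error for this sample
    rows.append((x, y, y_pred, sq_err))

# Mean of the per-sample squared errors
mse = sum(r[3] for r in rows) / len(rows)

for r in rows:
    print(*r)
print("MSE =", mse)
```

The squared errors come out as 1, 4 and 9, whose mean is 14/3.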

Source Code

import numpy as np
import matplotlib.pyplot as plt

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# Our model for the forward pass; w is the global set by the sweep loop below
def forward(x):
    return x * w

# Loss function: squared error for a single sample
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)

# Lists that collect each weight and its MSE
w_list = []
mse_list = []

for w in np.arange(0.0, 4.1, 0.1):
    # Print the weight and initialize the loss sum
    print("w=", w)
    l_sum = 0
    for x_val, y_val in zip(x_data, y_data):
        # For each input/output pair, compute the prediction and its loss,
        # then add the loss to the running total
        y_pred_val = forward(x_val)  # predicted value
        l = loss(x_val, y_val)  # squared error
        l_sum += l
        print("\t", x_val, y_val, y_pred_val, l)
    # Compute the mean squared error (MSE) for this weight
    # and record the weight/MSE pair from this run
    mse = l_sum / len(x_data)
    print("MSE=", mse)
    w_list.append(w)
    mse_list.append(mse)

# Plot MSE against w
plt.plot(w_list, mse_list)
plt.ylabel('Loss')
plt.xlabel('w')
plt.show()

Result

The loss reaches its minimum at w = 2, which is therefore the optimal weight.
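Instead of reading the minimum off the plot, it can also be located numerically. The sketch below is a vectorized variant of the sweep, not the post's original loop; `w_grid`, `best` and `best_w` are names introduced here, and `np.linspace` is used in place of `np.arange` so the grid has exactly 41 points.

```python
import numpy as np

x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

w_grid = np.linspace(0.0, 4.0, 41)  # 0.0, 0.1, ..., 4.0

# Broadcasting: (41, 1) * (3,) -> (41, 3), one row of predictions per w
y_pred = w_grid[:, None] * x_data

# Mean squared error per candidate weight, shape (41,)
mse = ((y_pred - y_data) ** 2).mean(axis=1)

best = np.argmin(mse)     # index of the smallest MSE
best_w = w_grid[best]
print("best w =", best_w, "MSE =", mse[best])
```

`np.argmin` returns the index of the smallest entry, so `w_grid[best]` is the weight at the bottom of the curve.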

Optimization

When the dataset is very large, visdom can be used to plot the results in real time.

Exercise

Problem

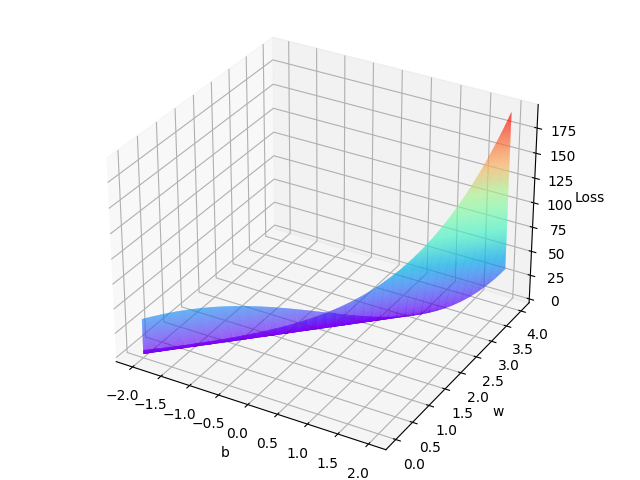

- Try to use the model on the right-hand side, and draw the cost graph.
- Tips:
  - You can read the material on how to draw 3D graphs.
  - The function np.meshgrid() is popular for drawing 3D graphs; read the [docs] and make use of vectorized calculation.
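As a small illustration of the second tip, the sketch below shows what `np.meshgrid` returns and how a single vectorized expression evaluates the model at every grid point. The tiny arrays and the value `x = 3.0` are made up for the example.

```python
import numpy as np

w = np.array([0.0, 1.0, 2.0])   # 3 candidate weights
b = np.array([-1.0, 0.0])       # 2 candidate biases

# With the default indexing='xy', both outputs have shape (len(b), len(w)):
# W repeats w along the rows, B repeats b along the columns.
W, B = np.meshgrid(w, b)
print(W.shape, B.shape)

# Vectorized evaluation: x * w + b at every (w, b) pair, no Python loop
x = 3.0
y_pred = x * W + B
print(y_pred)
```

Each entry `y_pred[i, j]` is the prediction for bias `b[i]` and weight `w[j]`, which is the layout `plot_surface` expects for its 2-D inputs.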

Linear Model

Source Code

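import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # registers the 3D projection on older Matplotlib

# Training data, assumed to be the same y = 2x samples as in the first example
x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

# Candidate weights and biases, combined into a 2-D grid
w = np.arange(0.0, 4.1, 0.1)
b = np.arange(-2.0, 2.1, 0.1)
W, B = np.meshgrid(w, b)

# Our model for the forward pass
def forward(x, w, b):
    return x * w + b

# Loss function: squared error for one sample at every (w, b) on the grid
def loss(x, y, w, b):
    y_pred = forward(x, w, b)
    return (y_pred - y) * (y_pred - y)

# Mean squared error over the dataset for every (w, b) pair
mse = np.zeros_like(W)
for x_val, y_val in zip(x_data, y_data):
    mse += loss(x_val, y_val, W, B)
mse /= len(x_data)

figure = plt.figure()
ax = figure.add_subplot(projection='3d')
ax.plot_surface(B, W, mse, rstride=1, cstride=1, cmap='rainbow')
ax.set_xlabel('b')
ax.set_ylabel('w')
ax.set_zlabel('Loss')
plt.show()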

Result

The plot shows that the error is smallest at w = 2, b = 0.
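The location of the minimum can also be checked numerically rather than by eye. This is a sketch under the assumption that the exercise uses the same y = 2x data as the first example; the grid resolution and the names `best_w` and `best_b` are choices made here.

```python
import numpy as np

x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])  # assumed: same data as the first example

w = np.linspace(0.0, 4.0, 41)
b = np.linspace(-2.0, 2.0, 41)
W, B = np.meshgrid(w, b)            # both have shape (41, 41)

# Add a trailing axis so the grid broadcasts against the 3 samples: (41, 41, 3)
y_pred = W[..., None] * x_data + B[..., None]
mse = ((y_pred - y_data) ** 2).mean(axis=-1)   # back to shape (41, 41)

# argmin works on the flattened array; unravel_index recovers the 2-D position
i, j = np.unravel_index(np.argmin(mse), mse.shape)
best_w, best_b = W[i, j], B[i, j]
print("best w =", best_w, "best b =", best_b, "MSE =", mse[i, j])
```

On this grid the smallest MSE falls at the grid point for w = 2 and b = 0, in agreement with the surface plot.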

Posted on 2020-10-27 22:25 by doubleqing