1. Installation environment:

Oracle version: 12.1.0.2

Cluster mode: RAC + ASM + udev

Storage: shared iSCSI

Platform: VMware + CentOS 7.9

Grid Infrastructure installation package: Oracle Database Grid Infrastructure 12.1.0.2.0 for Linux x86-64

Database installation package: Oracle Database 12.1.0.2.0 for Linux x86-64

Download: https://edelivery.oracle.com/osdc/faces/SoftwareDelivery

2. IP address plan

# Public

192.168.0.66 ol7-121-rac1.localdomain ol7-121-rac1

192.168.0.67 ol7-121-rac2.localdomain ol7-121-rac2

# Private

1.1.1.1 ol7-121-rac1-priv.localdomain ol7-121-rac1-priv

1.1.1.2 ol7-121-rac2-priv.localdomain ol7-121-rac2-priv

# Virtual

192.168.0.68 ol7-121-rac1-vip.localdomain ol7-121-rac1-vip

192.168.0.69 ol7-121-rac2-vip.localdomain ol7-121-rac2-vip

# SCAN

192.168.0.70 ol7-121-scan.localdomain ol7-121-scan

3. Virtual machine configuration

Prepare three virtual machines: rac1, rac2, and a storage server for the RAC cluster

4. Install the dependency packages

yum install binutils -y

yum install compat-libstdc++-33 -y

yum install compat-libstdc++-33.i686 -y

yum install gcc -y

yum install gcc-c++ -y

yum install glibc -y

yum install glibc.i686 -y

yum install glibc-devel -y

yum install glibc-devel.i686 -y

yum install ksh -y

yum install libgcc -y

yum install libgcc.i686 -y

yum install libstdc++ -y

yum install libstdc++.i686 -y

yum install libstdc++-devel -y

yum install libstdc++-devel.i686 -y

yum install libaio -y

yum install libaio.i686 -y

yum install libaio-devel -y

yum install libaio-devel.i686 -y

yum install libXext -y

yum install libXext.i686 -y

yum install libXtst -y

yum install libXtst.i686 -y

yum install libX11 -y

yum install libX11.i686 -y

yum install libXau -y

yum install libXau.i686 -y

yum install libxcb -y

yum install libxcb.i686 -y

yum install libXi -y

yum install libXi.i686 -y

yum install make -y

yum install sysstat -y

yum install unixODBC -y

yum install unixODBC-devel -y

yum install zlib-devel -y

yum install zlib-devel.i686 -y
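The 38 separate commands above can probably be collapsed into a single yum transaction. The following is a minimal sketch under that assumption; `PKGS` and the `DRY_RUN` switch are names introduced here for illustration and are not part of the original procedure. By default the script only prints the command it would run.

```shell
# Sketch: install every package listed above in one yum transaction.
# DRY_RUN is a hypothetical safety switch for this example: with the default
# value 1 the command is only printed; set DRY_RUN=0 (as root) to install.
PKGS="binutils compat-libstdc++-33 compat-libstdc++-33.i686
gcc gcc-c++
glibc glibc.i686 glibc-devel glibc-devel.i686
ksh
libgcc libgcc.i686
libstdc++ libstdc++.i686 libstdc++-devel libstdc++-devel.i686
libaio libaio.i686 libaio-devel libaio-devel.i686
libXext libXext.i686 libXtst libXtst.i686
libX11 libX11.i686 libXau libXau.i686
libxcb libxcb.i686 libXi libXi.i686
make sysstat unixODBC unixODBC-devel
zlib-devel zlib-devel.i686"

# $PKGS is left unquoted on purpose so the shell splits it into one
# argument per package name.
if [ "${DRY_RUN:-1}" = "1" ]; then
  echo yum install -y $PKGS
else
  yum install -y $PKGS
fi
```

Running it once per node with `DRY_RUN=0` should have the same effect as the individual commands, with dependency resolution done in one pass.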

4. Configure the kernel parameters

# vi /etc/sysctl.conf

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

Apply the changes: /sbin/sysctl -p
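The two shared-memory settings use different units: kernel.shmall is counted in pages, while kernel.shmmax is in bytes. The short sketch below checks how the two values above relate. `PAGE_SIZE=4096` is an assumption (the usual x86-64 page size); the real value can be read with `getconf PAGE_SIZE`.

```shell
# Sketch: compare kernel.shmall (pages) with kernel.shmmax (bytes).
# PAGE_SIZE is an assumed value, not read from the running system.
PAGE_SIZE=4096
SHMALL_PAGES=1073741824      # kernel.shmall from sysctl.conf above
SHMMAX_BYTES=4398046511104   # kernel.shmmax from sysctl.conf above

# Total shared memory allowed system-wide, converted to bytes.
SHMALL_BYTES=$((SHMALL_PAGES * PAGE_SIZE))
echo "shmall covers $SHMALL_BYTES bytes"

if [ "$SHMALL_BYTES" -ge "$SHMMAX_BYTES" ]; then
  echo "shmall is large enough to hold one segment of shmmax bytes"
else
  echo "shmall is smaller than shmmax; review the values"
fi
```

With a 4 KiB page, both settings work out to the same 4 TiB ceiling, so the two values are at least consistent with each other.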

5. Set the system resource limits

# vi /etc/security/limits.conf

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft nproc 16384

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768

oracle hard memlock 134217728

oracle soft memlock 134217728
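A quick sanity check on entries like these is that no soft limit exceeds its hard limit. The sketch below runs that check over a copy of the lines above; the `LIMITS` and `BAD` variables are introduced here only for illustration, and the check does not touch /etc/security/limits.conf itself.

```shell
# Sketch: report any (user, item) pair whose soft limit exceeds the hard limit.
# LIMITS is a copy of the entries above, held in a variable for the demo.
LIMITS='oracle soft nofile 1024
oracle hard nofile 65536
oracle soft nproc 16384
oracle hard nproc 16384
oracle soft stack 10240
oracle hard stack 32768
oracle hard memlock 134217728
oracle soft memlock 134217728'

BAD=$(printf '%s\n' "$LIMITS" | awk '
  $2 == "soft" { soft[$1 " " $3] = $4 }
  $2 == "hard" { hard[$1 " " $3] = $4 }
  END {
    for (k in soft)
      if ((k in hard) && soft[k] + 0 > hard[k] + 0)
        print k
  }')

if [ -z "$BAD" ]; then
  echo "all soft limits are within their hard limits"
else
  echo "soft limit exceeds hard limit for: $BAD"
fi
```

Since this guide also creates a separate grid user, the same kind of entries may be needed for grid; that is an assumption to verify against your own installation plan.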

II. Pre-installation preparation

1. Edit /etc/selinux/config

# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config; setenforce 0 ; cat /etc/selinux/config | grep -i SELINUX= | grep -v "^#"

SELINUX=disabled

2. Disable the firewall

systemctl stop firewalld; systemctl disable firewalld

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

3. Add the groups and users

groupadd -g 54321 oinstall; groupadd -g 54322 dba; \

groupadd -g 54323 oper; groupadd -g 54324 backupdba; \

groupadd -g 54325 dgdba; groupadd -g 54326 kmdba; \

groupadd -g 54327 asmdba; groupadd -g 54328 asmoper; \

groupadd -g 54329 asmadmin; groupadd -g 54330 racdba; \

useradd -u 54321 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle; \

useradd -u 54322 -g oinstall -G asmadmin,asmdba,asmoper,dba grid

# passwd oracle

# passwd grid

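4. Edit the hosts file (the sample addresses below differ from the IP plan in section 2; use your own planned addresses)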

127.0.0.1 localhost.localdomain localhost

# Public

192.168.56.101 ol7-121-rac1.localdomain ol7-121-rac1

192.168.56.102 ol7-121-rac2.localdomain ol7-121-rac2

# Private

192.168.1.101 ol7-121-rac1-priv.localdomain ol7-121-rac1-priv

192.168.1.102 ol7-121-rac2-priv.localdomain ol7-121-rac2-priv

# Virtual

192.168.56.103 ol7-121-rac1-vip.localdomain ol7-121-rac1-vip

192.168.56.104 ol7-121-rac2-vip.localdomain ol7-121-rac2-vip

# SCAN

#192.168.56.105 ol7-121-scan.localdomain ol7-121-scan

5. Create the directories

mkdir -p /u01/app/12.1.0.2/grid

mkdir -p /u01/app/oracle/product/12.1.0.2/db_1

chown -R oracle:oinstall /u01

chmod -R 775 /u01/

6. Set the oracle user's environment variables

# Oracle Settings

vi /home/oracle/.bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=ol7-121-rac1.localdomain

export ORACLE_UNQNAME=CDBRAC

export ORACLE_BASE=/u01/app/oracle

export GRID_HOME=/u01/app/12.1.0.2/grid

export DB_HOME=$ORACLE_BASE/product/12.1.0.2/db_1

export ORACLE_HOME=$DB_HOME

export ORACLE_SID=cdbrac1

export ORACLE_TERM=xterm

export BASE_PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$BASE_PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

alias grid_env='. /home/oracle/grid_env'

alias db_env='. /home/oracle/db_env'

#-- Note: on the rac2 node, change ORACLE_SID to cdbrac2 (and ORACLE_HOSTNAME to ol7-121-rac2.localdomain)

vi /home/oracle/db_env

export ORACLE_SID=cdbrac1

export ORACLE_HOME=$DB_HOME

export PATH=$ORACLE_HOME/bin:$BASE_PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

7. Set the environment variables for the grid environment (grid_env)

vi /home/oracle/grid_env

export ORACLE_SID=+ASM1

export ORACLE_HOME=$GRID_HOME

export PATH=$ORACLE_HOME/bin:$BASE_PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

#-- Note: on the rac2 node, change ORACLE_SID to +ASM2

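8. Verify the oracle user's environment variables

After editing /home/oracle/.bash_profile, log in again as oracle (or source the file) and switch between the two environments: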

$ grid_env

$ echo $ORACLE_HOME

/u01/app/12.1.0.2/grid

$ db_env

$ echo $ORACLE_HOME

/u01/app/oracle/product/12.1.0.2/db_1

$

# shutdown -r now    (a reboot is recommended once the variables have been changed)
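The switching shown above works because grid_env and db_env are sourced into the current shell, so each one overwrites ORACLE_HOME and ORACLE_SID in place. The following sketch reproduces that behaviour in a throwaway directory; `DEMO_DIR`, `GRID_RESULT` and `DB_RESULT` are names invented for the demo, and the files are sourced with `.` directly because aliases are normally not expanded in non-interactive scripts.

```shell
# Sketch: show how sourcing grid_env / db_env switches ORACLE_HOME.
# DEMO_DIR is a temporary stand-in for /home/oracle.
DEMO_DIR=$(mktemp -d)
export ORACLE_BASE=/u01/app/oracle
export GRID_HOME=/u01/app/12.1.0.2/grid
export DB_HOME=$ORACLE_BASE/product/12.1.0.2/db_1

# Single quotes keep $GRID_HOME and $DB_HOME unexpanded until the file is sourced.
echo 'export ORACLE_SID=+ASM1; export ORACLE_HOME=$GRID_HOME' > "$DEMO_DIR/grid_env"
echo 'export ORACLE_SID=cdbrac1; export ORACLE_HOME=$DB_HOME' > "$DEMO_DIR/db_env"

. "$DEMO_DIR/grid_env"
GRID_RESULT=$ORACLE_HOME
. "$DEMO_DIR/db_env"
DB_RESULT=$ORACLE_HOME

echo "grid_env -> $GRID_RESULT"
echo "db_env   -> $DB_RESULT"
rm -rf "$DEMO_DIR"
```

Running a file as a child process instead of sourcing it would leave the calling shell unchanged, which is why the aliases in .bash_profile use `. /home/oracle/grid_env` rather than executing the file.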

III. Configure iSCSI shared storage

Building shared storage for Oracle 12c RAC with iSCSI

Disable the firewall

[root@ol7-122-rac1 ~]# systemctl stop firewalld

[root@ol7-122-rac1 ~]# systemctl disable firewalld

# If the firewall is left running instead, the port used by iSCSI must be opened

[root@ol7-122-rac1 ~]# firewall-cmd --permanent --add-port=3260/tcp

[root@ol7-122-rac1 ~]# firewall-cmd --reload

Edit /etc/selinux/config

# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# setenforce 0

# cat /etc/selinux/config | grep -i SELINUX= | grep -v "^#"

SELINUX=disabled

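Environment

server: 10.0.7.135

rac1: 10.0.7.110

rac2: 10.0.7.120

Here server is chosen as the iSCSI server, and its disks are shared to rac1 and rac2.

Note: either of the two RAC nodes, or any other machine, can act as the iSCSI server.

First add the disks to be shared, which will hold the RAC disk groups, on rac1. Here six disks are already present on server (the system disk plus five to share), planned as follows:

Ocrvotedisk: 3 disks, 4 GB each

Data: 1 disk, 40 GB

FRA_ARC: 1 disk, 20 GB

Note: the Data disk must be at least 40 GB

Install iSCSI on the server

First install the management tool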

# yum install -y targetcli

Start the iSCSI target service

# systemctl start target.service

# systemctl enable target.service

Created symlink from /etc/systemd/system/multi-user.target.wants/target.service to /usr/lib/systemd/system/target.service.

Check the disks on the server node

# fdisk -l

Disk /dev/vda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000ae6a1

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 2099199 1048576 83 Linux

/dev/vda2 2099200 83886079 40893440 8e Linux LVM

Disk /dev/mapper/rhel-root: 37.6 GB, 37576769536 bytes, 73392128 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/rhel-swap: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/vdb: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/vdc: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/vdd: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/vde: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/vdf: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

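The output above shows that the disks are attached to the server node; next they are shared to the rac1 and rac2 nodes over iSCSI

Configure the iSCSI server

a) Install scsi-target-utils (skip this step if it has already been installed with yum)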

# rpm -q epel-release

package epel-release is not installed

# yum install -y epel-release

# rpm -q epel-release

epel-release-7-11.noarch

# yum --enablerepo=epel -y install scsi-target-utils libxslt

b) Configure targets.conf by appending the following to the end of the file

# cat >> /etc/tgt/targets.conf << EOF

<target iqn.2019-09.com.oracle:rac>

backing-store /dev/vdb

backing-store /dev/vdc

backing-store /dev/vdd

backing-store /dev/vde

backing-store /dev/vdf

initiator-address 10.0.7.0/24

write-cache off

</target>

EOF

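The IQN name can be chosen freely

initiator-address restricts access to a client address range or a specific IP

write-cache off turns the write cache off

Note: the backing-store entries are the disks to be shared to rac1 and rac2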
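When the disk list changes, the stanza can be generated rather than typed by hand. The sketch below prints the same block as the heredoc above from an IQN, an allowed address range, and a list of devices. `make_target_stanza` is a hypothetical helper written for this example; it is not part of scsi-target-utils, and it only prints text without modifying /etc/tgt/targets.conf.

```shell
# Sketch: print a tgt <target> stanza for a list of backing devices.
# make_target_stanza is a hypothetical helper, not a tgt command.
make_target_stanza() {
  iqn=$1
  allowed=$2
  shift 2
  echo "<target $iqn>"
  # One backing-store line per device passed on the command line.
  for dev in "$@"; do
    echo "    backing-store $dev"
  done
  echo "    initiator-address $allowed"
  echo "    write-cache off"
  echo "</target>"
}

STANZA=$(make_target_stanza iqn.2019-09.com.oracle:rac 10.0.7.0/24 \
  /dev/vdb /dev/vdc /dev/vdd /dev/vde /dev/vdf)
echo "$STANZA"
```

After reviewing the printed text, it could be appended to /etc/tgt/targets.conf in the same way as the heredoc in step b).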

c) Start tgtd

# /bin/systemctl restart tgtd.service

# systemctl restart target.service

# systemctl enable tgtd

Created symlink from /etc/systemd/system/multi-user.target.wants/tgtd.service to /usr/lib/systemd/system/tgtd.service.

# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2019-09.com.oracle:rac

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

SWP: No

Thin-provisioning: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 4295 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

SWP: No

Thin-provisioning: No

Backing store type: rdwr

Backing store path: /dev/vdb

Backing store flags:

LUN: 2

Type: disk

SCSI ID: IET 00010002

SCSI SN: beaf12

Size: 4295 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

SWP: No

Thin-provisioning: No

Backing store type: rdwr

Backing store path: /dev/vdc

Backing store flags:

LUN: 3

Type: disk

SCSI ID: IET 00010003

SCSI SN: beaf13

Size: 4295 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

SWP: No

Thin-provisioning: No

Backing store type: rdwr

Backing store path: /dev/vdd

Backing store flags:

LUN: 4

Type: disk

SCSI ID: IET 00010004

SCSI SN: beaf14

Size: 21475 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

SWP: No

Thin-provisioning: No

Backing store type: rdwr

Backing store path: /dev/vde

Backing store flags:

LUN: 5

Type: disk

SCSI ID: IET 00010005

SCSI SN: beaf15

Size: 21475 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

SWP: No

Thin-provisioning: No

Backing store type: rdwr

Backing store path: /dev/vdf

Backing store flags:

Account information:

ACL information:

10.0.7.0/24

3. Configure the iSCSI client (all RAC nodes)

a) Install iscsi-initiator-utils, the iSCSI client software

# yum install -y iscsi-initiator-utils

# rpm -qa | grep iscsi

iscsi-initiator-utils-iscsiuio-6.2.0.874-10.el7.x86_64

libiscsi-1.9.0-7.el7.x86_64

iscsi-initiator-utils-6.2.0.874-10.el7.x86_64

## Restart the client service

# systemctl restart iscsid.service

b) Configure initiatorname.iscsi

# vim /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-09.com.oracle:rac

Note: here InitiatorName is set to the same IQN that is configured in /etc/tgt/targets.conf on the server

c) Start iscsi

# systemctl restart iscsi

# systemctl enable iscsi.service

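Note: if iscsi-initiator-utils was already installed together with the operating system, skip the installation and go straight to the configuration.

4. Shared storage (all RAC nodes)

a) Discover which targets are exported on port 3260 (10.0.7.110 is the portal address used in this transcript; substitute the address of your own iSCSI server):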

# iscsiadm -m discovery -tsendtargets -p 10.0.7.110:3260

10.0.7.110:3260,1 iqn.2019-09.com.oracle:rac

# iscsiadm -m node -T iqn.2019-09.com.oracle:rac -p 10.0.7.110:3260

# BEGIN RECORD 6.2.0.874-10

node.name = iqn.2019-09.com.oracle:rac

node.tpgt = 1

node.startup = automatic

node.leading_login = No

iface.hwaddress = <empty>

iface.ipaddress = <empty>

iface.iscsi_ifacename = default

...

b) Log in to the shared storage:

# iscsiadm -m node -T iqn.2019-09.com.oracle:rac -p 10.0.7.110:3260 -l

Logging in to [iface: default, target: iqn.2019-09.com.oracle:rac, portal: 10.0.7.110,3260] (multiple)

Login to [iface: default, target: iqn.2019-09.com.oracle:rac, portal: 10.0.7.110,3260] successful.
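Each line of discovery output has the form `portal,tpgt target-name`, and the login command reuses the portal and the target name. The following sketch splits one such line with plain shell parameter expansion; `LINE` is copied from the transcript above, and the other variable names are introduced here for illustration.

```shell
# Sketch: split one line of sendtargets discovery output into its parts.
# LINE is the discovery result shown in step a).
LINE="10.0.7.110:3260,1 iqn.2019-09.com.oracle:rac"

PORTAL=${LINE%%,*}   # text before the first comma: address and port
REST=${LINE#*,}      # text after the first comma
TPGT=${REST%% *}     # target portal group tag, before the first space
IQN=${REST#* }       # target name, after the first space

# Print (not run) the login command built from the parsed fields.
echo "iscsiadm -m node -T $IQN -p $PORTAL -l"
```

The printed command matches the login command used in step b), which makes this kind of parsing convenient when several targets or portals are discovered at once.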

c) Probe the shared disks:

# partprobe

# fdisk -l

Disk /dev/vda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000ae6a1

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 2099199 1048576 83 Linux

/dev/vda2 2099200 83886079 40893440 8e Linux LVM

Disk /dev/mapper/rhel-root: 37.6 GB, 37576769536 bytes, 73392128 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/rhel-swap: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

d) Log out of the shared storage:

# iscsiadm -m node -T iqn.2019-09.com.oracle:rac -p 10.0.7.110:3260 --logout

Logging out of session [sid: 1, target: iqn.2019-09.com.oracle:rac, portal: 10.0.7.110,3260]

Logout of [sid: 1, target: iqn.2019-09.com.oracle:rac, portal: 10.0.7.110,3260] successful.

# fdisk -l    (the shared disks are no longer listed)

Disk /dev/vda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000ae6a1

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 2099199 1048576 83 Linux

/dev/vda2 2099200 83886079 40893440 8e Linux LVM

Disk /dev/mapper/rhel-root: 37.6 GB, 37576769536 bytes, 73392128 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/rhel-swap: 4294 MB, 4294967296 bytes, 8388608 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

e) Delete the shared storage record:

# systemctl restart iscsi.service

# iscsiadm -m node -T iqn.2019-09.com.oracle:rac -p 10.0.7.110:3260 --logout

# iscsiadm -m node -T iqn.2019-09.com.oracle:rac -p 10.0.7.110:3260 -o delete

f) Restart the service, rediscover the target, and log in again:

# systemctl restart iscsi.service

# iscsiadm -m discovery -t sendtargets -p 10.0.7.110:3260

# iscsiadm -m node -T iqn.2019-09.com.oracle:rac -p 10.0.7.110:3260 -l

IV. Bind the shared disks with udev (rac1 and rac2)

Note: the shared disks must be added in the same order on both nodes

1. Create the rules file

# touch /etc/udev/rules.d/99-oracle-asmdevices.rules; cd /etc/udev/rules.d; ll

-rw-r--r--. 1 root root 709 8月 24 2016 70-persistent-ipoib.rules

-rw-r--r-- 1 root root 0 9月 10 20:16 99-oracle-asmdevices.rules

##### or #####

# touch /usr/lib/udev/rules.d/99-oracle-asmdevices.rules

2. Generate the rules

If the disks are not partitioned, run the following shell loop:

for i in a b c d e;

do

echo "KERNEL==\"sd*\", SUBSYSTEM==\"block\", PROGRAM==\"/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"`/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", SYMLINK+=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

done

# cat 99-oracle-asmdevices.rules

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="360000000000000000e00000000010001", SYMLINK+="asm-diska", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="360000000000000000e00000000010002", SYMLINK+="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="360000000000000000e00000000010003", SYMLINK+="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="360000000000000000e00000000010004", SYMLINK+="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="360000000000000000e00000000010005", SYMLINK+="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"
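The escaping in the echo loop is easy to get wrong, so the same rule line can also be built with printf and single quotes. This is a minimal sketch of that alternative; `asm_rule` is a hypothetical helper written for this example, and the WWID passed to it is the first RESULT value from the listing above.

```shell
# Sketch: build one udev rule line from a WWID and a disk suffix.
# asm_rule is a hypothetical helper, not part of udev.
asm_rule() {
  wwid=$1
  suffix=$2
  # Single quotes keep $name literal for udev; %s marks the two substitutions.
  printf 'KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", SYMLINK+="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$wwid" "$suffix"
}

RULE=$(asm_rule 360000000000000000e00000000010001 a)
echo "$RULE"
```

On a real node the WWID would come from `/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdX`, and the output would be appended to 99-oracle-asmdevices.rules as in the loop above.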

If sdb is partitioned, run the following shell loop instead (for reference only; no action needed)

for i in b1 b2

do

echo "KERNEL==\"sd$i\", SUBSYSTEM==\"block\", PROGRAM==\"/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/\$parent\", RESULT==\"`/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sd${i:0:1}`\", SYMLINK+=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

done;

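Note: use $name for unpartitioned disks and $parent for partitions

3. Format of the 99-oracle-asmdevices.rules file (for reference only; no action needed). This older form uses NAME=; udev on Linux 7 does not rename kernel device nodes, so the SYMLINK+= form from step 2 is the one to use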

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c2948ef9d9e4a7937bfc65888bc8", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"

Reload the partition tables manually

[root@ehs-rac-01 rules.d]# /sbin/partprobe /dev/sda; /sbin/partprobe /dev/sdb; /sbin/partprobe /dev/sdc; /sbin/partprobe /dev/sdd; /sbin/partprobe /dev/sde

Note: obtaining the RESULT value

On Linux 7, the following command can be used (for reference only; no action needed)

[root@ehs-rac-01 rules.d]# /usr/lib/udev/scsi_id -g -u /dev/sdb

360000000000000000e00000000010002

4. Test with udevadm

Note: udevadm test does not accept a device node name such as /dev/sdc; it needs the sysfs path, such as /sys/block/sdb.

[root@ehs-rac-01 rules.d]# /sbin/udevadm test /sys/block/sda; /sbin/udevadm test /sys/block/sdb; /sbin/udevadm test /sys/block/sdc; /sbin/udevadm test /sys/block/sdd; /sbin/udevadm test /sys/block/sde

[root@ehs-rac-01 rules.d]# udevadm info --query=all --path=/sys/block/sda; udevadm info --query=all --path=/sys/block/sdb; udevadm info --query=all --path=/sys/block/sdc; udevadm info --query=all --path=/sys/block/sdd; udevadm info --query=all --path=/sys/block/sde

[root@ehs-rac-01 rules.d]# udevadm info --query=all --name=asm-diska; udevadm info --query=all --name=asm-diskb; udevadm info --query=all --name=asm-diskc; udevadm info --query=all --name=asm-diskd; udevadm info --query=all --name=asm-diske

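5. Reload the udev rules (if the /dev/asm-* links do not appear afterwards, also run /sbin/udevadm trigger --type=devices --action=change)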

[root@ehs-rac-01 rules.d]# /sbin/udevadm control --reload-rules

[root@ehs-rac-01 rules.d]# systemctl status systemd-udevd.service

[root@ehs-rac-01 rules.d]# systemctl enable systemd-udevd.service

6. Check that the devices are bound correctly

[root@ehs-rac-01 rules.d]# ll /dev/asm*

lrwxrwxrwx 1 root root 3 9月 10 20:28 /dev/asm-diska -> sda

lrwxrwxrwx 1 root root 3 9月 10 20:30 /dev/asm-diskb -> sdb

lrwxrwxrwx 1 root root 3 9月 10 20:30 /dev/asm-diskc -> sdc

lrwxrwxrwx 1 root root 3 9月 10 20:30 /dev/asm-diskd -> sdd

lrwxrwxrwx 1 root root 3 9月 10 20:30 /dev/asm-diske -> sde

[root@ehs-rac-01 rules.d]#

[root@ehs-rac-01 rules.d]# ll /dev/sd*

brw-rw---- 1 grid asmadmin 8, 0 9月 10 20:28 /dev/sda

brw-rw---- 1 grid asmadmin 8, 16 9月 10 20:30 /dev/sdb

brw-rw---- 1 grid asmadmin 8, 32 9月 10 20:30 /dev/sdc

brw-rw---- 1 grid asmadmin 8, 48 9月 10 20:30 /dev/sdd

brw-rw---- 1 grid asmadmin 8, 64 9月 10 20:30 /dev/sde

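V. Set up SSH trust (rac1 and rac2)

Basic steps:

1) Generate RSA and DSA key pairs on rac1, then append both public keys to the authorized_keys file

2) Copy rac1's authorized_keys to rac2

3) Generate RSA and DSA key pairs on rac2 in the same way, then append rac2's public keys to the authorized_keys file

4) Copy rac2's authorized_keys file back to rac1, overwriting the earlier authorized_keys file

Note 1: after this, the authorized_keys files on rac1 and rac2 both contain each other's RSA and DSA public keys

Note 2: this must be done for both the grid and oracle users (the oracle user is used as the example here)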
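Because the key-append steps are repeated per node and per user, it is easy to append the same key twice. The sketch below shows one way to make the append idempotent. `add_key` is a hypothetical helper, and the key strings and paths are placeholders created in a temporary directory, not real keys.

```shell
# Sketch: append a public key to authorized_keys only if it is not there yet.
# add_key is a hypothetical helper; the demo files below are placeholders.
add_key() {
  pub=$1
  auth=$2
  touch "$auth"
  chmod 600 "$auth"
  # -x whole-line match, -F fixed string, -f take the pattern from the .pub file
  grep -qxFf "$pub" "$auth" || cat "$pub" >> "$auth"
}

DEMO=$(mktemp -d)
echo "ssh-rsa AAAAexample1 oracle@rac1" > "$DEMO/rac1_rsa.pub"
echo "ssh-rsa AAAAexample2 oracle@rac2" > "$DEMO/rac2_rsa.pub"

add_key "$DEMO/rac1_rsa.pub" "$DEMO/authorized_keys"
add_key "$DEMO/rac2_rsa.pub" "$DEMO/authorized_keys"
add_key "$DEMO/rac1_rsa.pub" "$DEMO/authorized_keys"   # repeated on purpose

KEY_COUNT=$(wc -l < "$DEMO/authorized_keys" | tr -d ' ')
echo "$KEY_COUNT keys in authorized_keys"
rm -rf "$DEMO"
```

In the real procedure the arguments would be ~/.ssh/id_rsa.pub (or id_dsa.pub) and ~/.ssh/authorized_keys for each of the oracle and grid users.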

1. rac1 server setup

(1) Generate the RSA and DSA keys:

# su - oracle

$ ssh-keygen -t rsa

$ ssh-keygen -t dsa

$ ll .ssh/

total 16

-rw------- 1 oracle oinstall 672 Sep 10 13:51 id_dsa

-rw-r--r-- 1 oracle oinstall 607 Sep 10 13:51 id_dsa.pub

-rw------- 1 oracle oinstall 1679 Sep 10 13:51 id_rsa

-rw-r--r-- 1 oracle oinstall 399 Sep 10 13:51 id_rsa.pub

(2) Append both the RSA and DSA public keys to the authorized_keys file:

$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys

$ cat .ssh/id_dsa.pub >> .ssh/authorized_keys

$ ll .ssh/

总用量 20

-rw-r--r-- 1 oracle oinstall 1006 9月 10 20:49 authorized_keys

-rw------- 1 oracle oinstall 668 9月 10 20:48 id_dsa

-rw-r--r-- 1 oracle oinstall 607 9月 10 20:48 id_dsa.pub

-rw------- 1 oracle oinstall 1675 9月 10 20:48 id_rsa

-rw-r--r-- 1 oracle oinstall 399 9月 10 20:48 id_rsa.pub

(3) Copy rac1's authorized_keys to rac2

$ cd /home/oracle/.ssh

$ scp authorized_keys 10.0.7.120:/home/oracle/.ssh/

2. rac2 server setup

(1) Generate the RSA and DSA keys:

# su - oracle

$ ssh-keygen -t rsa

$ ssh-keygen -t dsa

$ ll .ssh/

总用量 20

-rw-r--r-- 1 oracle oinstall 1006 9月 10 20:51 authorized_keys

-rw------- 1 oracle oinstall 668 9月 10 20:50 id_dsa

-rw-r--r-- 1 oracle oinstall 607 9月 10 20:50 id_dsa.pub

-rw------- 1 oracle oinstall 1679 9月 10 20:50 id_rsa

-rw-r--r-- 1 oracle oinstall 399 9月 10 20:50 id_rsa.pub

(2) Append rac2's RSA and DSA public keys to the authorized_keys file copied from rac1:

$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys

$ cat .ssh/id_dsa.pub >> .ssh/authorized_keys

(3) Copy rac2's authorized_keys file to rac1, overwriting the earlier authorized_keys file:

$ cd /home/oracle/.ssh

$ scp authorized_keys 10.0.7.110:/home/oracle/.ssh/

3. Test SSH

Test from both rac1 and rac2; each command should return the date without prompting:

$ ssh ehs-rac-01 date

Tue Sep 10 14:11:55 CST 2019

$ ssh ehs-rac-01-priv date

Tue Sep 10 14:11:59 CST 2019

$ ssh ehs-rac-02 date

Tue Sep 10 14:12:03 CST 2019

$ ssh ehs-rac-02-priv date

Tue Sep 10 14:12:08 CST 2019

$ ssh ehs-rac-01 date

Tue Sep 10 14:10:38 CST 2019

$ ssh ehs-rac-01-priv date

Tue Sep 10 14:10:43 CST 2019

$ ssh ehs-rac-02 date

Tue Sep 10 14:10:51 CST 2019

$ ssh ehs-rac-02-priv date

Tue Sep 10 14:10:56 CST 2019

Download Xmanager Power Suite 6 and use Xstart for the graphical installation

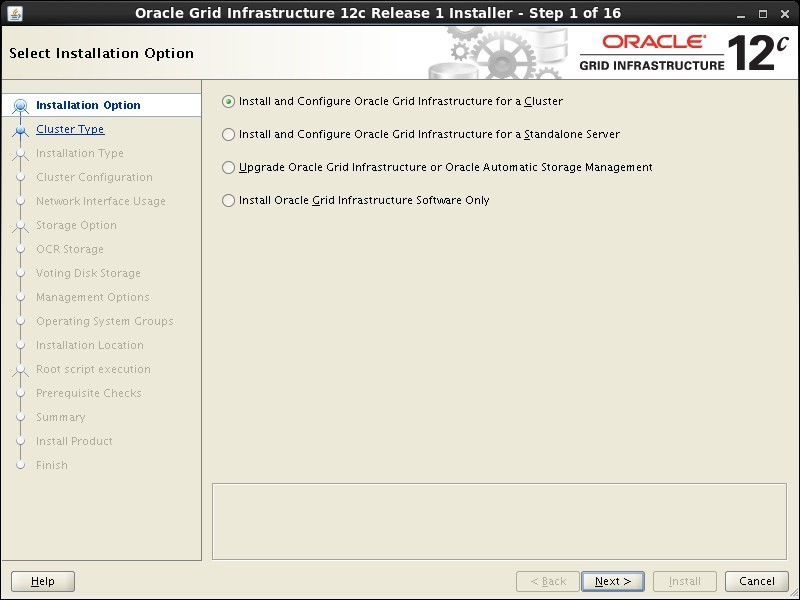

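VI. Install the Grid software (rac1)

Upload the Grid installation package to the /home/grid directory

Note: for the OSASM group, choose the group assigned in your udev rules (asmadmin in this guide); otherwise the disks are not visible

Run the corresponding *.sh scripts on both nodes when the installer prompts for them

After installation, check the cluster: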

$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE ol7-121-rac1 STABLE

ONLINE ONLINE ol7-121-rac2 STABLE

ora.LISTENER.lsnr

ONLINE ONLINE ol7-121-rac1 STABLE

ONLINE ONLINE ol7-121-rac2 STABLE

ora.asm

ONLINE ONLINE ol7-121-rac1 Started,STABLE

ONLINE ONLINE ol7-121-rac2 Started,STABLE

ora.net1.network

ONLINE ONLINE ol7-121-rac1 STABLE

ONLINE ONLINE ol7-121-rac2 STABLE

ora.ons

ONLINE ONLINE ol7-121-rac1 STABLE

ONLINE ONLINE ol7-121-rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE ol7-121-rac2 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE ol7-121-rac1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE ol7-121-rac1 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE ol7-121-rac1 169.254.255.49 192.1

68.1.101,STABLE

ora.cvu

1 ONLINE ONLINE ol7-121-rac1 STABLE

ora.mgmtdb

1 ONLINE ONLINE ol7-121-rac1 Open,STABLE

ora.oc4j

1 ONLINE ONLINE ol7-121-rac1 STABLE

ora.ol7-121-rac1.vip

1 ONLINE ONLINE ol7-121-rac1 STABLE

ora.ol7-121-rac2.vip

1 ONLINE ONLINE ol7-121-rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE ol7-121-rac2 STABLE

ora.scan2.vip

1 ONLINE ONLINE ol7-121-rac1 STABLE

ora.scan3.vip

1 ONLINE ONLINE ol7-121-rac1 STABLE

Configure ASM (rac1)

Configure the disk groups according to your own plan

$ asmca

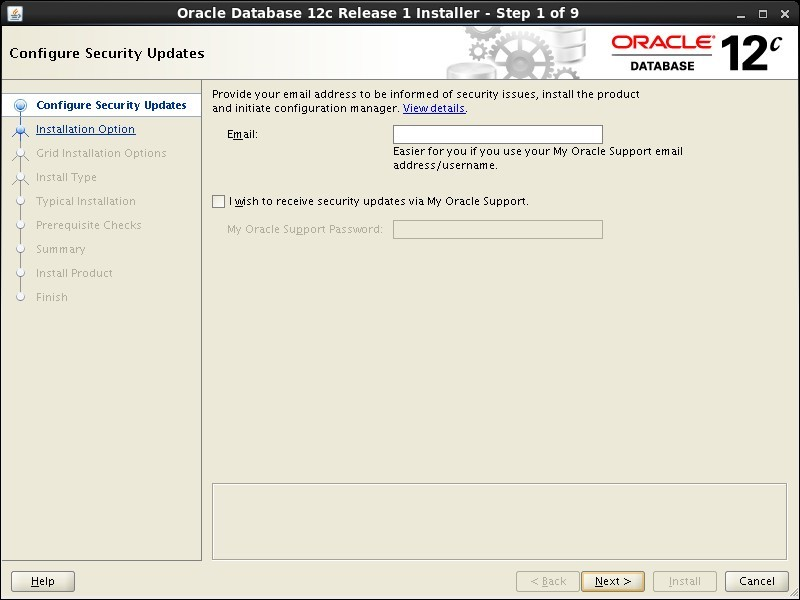

Install the Oracle software (rac1)

Unpack the Oracle installation package (the oracle user's home directory is fine)

./runInstaller

Keep the defaults for the following groups

Create the database

$ dbca

After installation, check the database:

$ srvctl config database -d cdbrac

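Common Oracle 12c RAC installation errors and solutions:

During the Oracle 12c RAC installation

The SCAN name is the host name that resolves to the SCAN IP; this IP must not already be in use

Error message: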

[INS-32016] The selected Oracle home contains directories or files.

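Solution:

Use a standard installation directory layout, and make sure the installation directory contains no other files

On Linux and UNIX, the Optimal Flexible Architecture (OFA) path for the Oracle Grid Infrastructure Oracle base is /u[00-99][00-99]/app/user, where user is the name of the Oracle software installation owner account. For example: /u01/app/grid.

On Linux and UNIX, the OFA path for the Oracle Grid Infrastructure Oracle home (Grid home), where the software resides, is /u[00-99][00-99]/app/release/grid, where release is the three-digit Oracle Grid Infrastructure release. For example: /u01/app/12.1.0/grid.

Error message:

The asmdisk* devices cannot be found when installing Grid

Solution:

When configuring udev, check the permissions (MODE) and the configured GROUP and OWNER

Error message: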

Failed to create keys in the OLR, rc = 127, Message:

/u01/11.2.0/grid/bin/clscfg.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory

Solution:

[root@node1 lib64]# cd /lib64

[root@node1 lib64]# ls -lrt libcap

libcap-ng.so.0 libcap-ng.so.0.0.0 libcap.so.2 libcap.so.2.16

[root@node1 lib64]# ls -lrt libcap.so.2

lrwxrwxrwx. 1 root root 14 12月 23 21:21 libcap.so.2 -> libcap.so.2.16

[root@node1 lib64]# ln -s libcap.so.2.16 libcap.so.1

[root@node1 lib64]# ls -lrt libcap*

-rwxr-xr-x. 1 root root 18672 Jun 25 2011 libcap-ng.so.0.0.0

-rwxr-xr-x. 1 root root 19016 Dec 8 2011 libcap.so.2.16

lrwxrwxrwx. 1 root root 14 Oct 9 11:14 libcap.so.2 -> libcap.so.2.16

lrwxrwxrwx. 1 root root 18 Oct 9 11:14 libcap-ng.so.0 -> libcap-ng.so.0.0.0

lrwxrwxrwx 1 root root 14 Nov 24 13:17 libcap.so.1 -> libcap.so.2.16

Then run the script again

[root@node1 lib64]# /u01/11.2.0/grid/root.sh

Error message:

PRCC-1108 : Invalid VIP address 192.168.0.68 because the specified IP address is reachable

2022/05/11 00:18:34 CLSRSC-180: An error occurred while executing the command 'add nodeapps -n ol7-121-rac1 -A ol7-121-rac1-vip/255.255.255.0/bond0 ' (error code 0)

2022/05/11 00:18:34 CLSRSC-286: Failed to add Grid Infrastructure node applications

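Solution:

The VIP address already responds on the network. Remove the network connection that is using it, so that the VIP is no longer reachable before the script runs

Error message:

[INS-30100] Insufficient disk space on the selected location

Solution:

Add disk space

Error message: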

[FATAL] [INS-32025] The chosen installation conflicts with software already installed in the given Oracle home.

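Solution:

Cause: this path was used for an earlier database installation, so the installer treats it as already in use and another path would otherwise have to be chosen

Fix: edit the Oracle home information in the inventory.xml file

On Linux, locate the file: cd /; locate inventory.xml

Go to that directory and open the file: vi inventory.xml

Delete the lines whose path matches the one reported in the installer error, then save and exit

Cancel the Oracle installation, then run ./runInstaller again

Error message: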

Swap Size

Cluster Verification Check "Swap Size" failed on node "ol7-121-rac2", expected value: 15.6119GB (1.637028E7KB) actual value: 2GB (2097148.0KB).

PRVF-7573 : Sufficient swap size is not available on node "ol7-121-rac2" [Required = 15.6119GB (1.637028E7KB) ; Found = 2GB (2097148.0KB)]

Cluster Verification Check "Swap Size" failed on node "ol7-121-rac1", expected value: 15.6119GB (1.637028E7KB) actual value: 2GB (2097148.0KB).

PRVF-7573 : Sufficient swap size is not available on node "ol7-121-rac1" [Required = 15.6119GB (1.637028E7KB) ; Found = 2GB (2097148.0KB)]

Cluster Verification check failed on nodes: ol7-121-rac2,ol7-121-rac1.

This is a prerequisite condition to test whether sufficient total swap space is available on the system.

Solution:

Add a disk and increase the swap space
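The amount of swap to add follows from the two figures in the PRVF-7573 message. The sketch below works it out with integer arithmetic; the variable names are introduced here for illustration, and the result is rounded up to whole gigabytes so the new swap fully covers the shortfall.

```shell
# Sketch: compute the swap shortfall from the PRVF-7573 figures above.
REQUIRED_KB=16370280   # 1.637028E7KB, the required value in the message
FOUND_KB=2097148       # 2097148.0KB, the value found on the node
KB_PER_GB=1048576      # 1024 * 1024

SHORT_KB=$((REQUIRED_KB - FOUND_KB))
# Integer ceiling division: round any remainder up to the next whole GB.
ADD_GB=$(( (SHORT_KB + KB_PER_GB - 1) / KB_PER_GB ))

echo "shortfall: ${SHORT_KB} KB, add at least ${ADD_GB} GB of swap"
```

The required figure appears to track the physical memory of the node, so the shortfall should be recomputed from the message reported on your own system.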

Error message:

Single Client Access Name (SCAN)

PRVF-4664 : Found inconsistent name resolution entries for SCAN name "ol7-121-scan"

PRVF-4664 : Found inconsistent name resolution entries for SCAN name "ol7-121-scan"

Cluster Verification check failed on nodes: ol7-121-rac2,ol7-121-rac1.

This test verifies the Single Client Access Name configuration.

Solution:

A single SCAN IP can be configured in the hosts file; if this check then reports an error, it can be skipped

Three SCAN IPs require a separate DNS server

Error message:

Generating "/run/initramfs/rdsosreport.txt"

[ 125.481135] Buffer I/O error on dev dm-2, logical block 17874928, async page read

[ 125.524847] Buffer I/O error on dev dm-2, logical block 17874928, async page read

Entering emergency mode. Exit the shell to continue.

Type "journalctl" to view system logs.

You might want to save "/run/initramfs/rdsosreport.txt" to a USB stick or /boot

after mounting them and attach it to a bug report.

Solution:

Unresolved. On a virtual machine, restore from a snapshot or image; on a physical server, reinstall the operating system

Error message:

ORA-29760: instance_number parameter not specified

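Solution:

Check that ORACLE_SID in .bash_profile is consistent with the instance on that node (for example cdbrac1 on rac1 and cdbrac2 on rac2)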

[root@node1 bin]# ./srvctl status database -d cdbrac
