爬虫

-

python连接测试URL

导入库

from requests import get

有关request库的函数

| 函数 | 说明 |

| get(url [, timeout=n]) | 对应HTTP的GET方式,设定请求超时时间为n秒 |

| post(url, data={'key':'value'}) | 对应HTTP的POST方式,字典用于传输客户数据 |

| delete(url) | 对应HTTP的DELETE方式 |

| head(url) | 对应HTTP的HEAD方式 |

| options(url) | 对应HTTP的OPTIONS方式 |

| put(url, data={'key':'value'}) | 对应HTTP的PUT方式,字典用于传输客户数据 |

设定url,,运用get函数请求页面

url = "https://hao.360.cn/" r = get(url, timeout=3) print("获得响应的状态码:", r.status_code) print("响应内容的编码方式:", r.encoding)

运行结果

获得响应的状态码: 200

响应内容的编码方式: ISO-8859-1

获取网页内容

r.encoding = "utf-8" url_text = r.text print("网页内容:", r.text) print("网页内容长度:", len(url_text))

运行结果

网页内容: <!DOCTYPE html> <!--STATUS OK--><html> <head> ... 意见反馈</a> 京ICP证030173号 <img src=//www.baidu.com/img/gs.gif> </p> </div> </div> </div> </body> </html>

网页内容长度: 2287

-

连接网站20次

在上面的基础上加上一个循环结构即可

""" Spyder Editor This is a temporary script file. """ import requests def getHTMLText(url): try: r=requests.get(url,timeout=30) r.raise_for_status() r.encoding='utf-8' return r.text except: return "F" url="https://www.google.com.hk/" print(getHTMLText(url)) for i in range(20): getHTMLText(url) print(i+1)

3.获取网页中的标签内容

选取一个网站用于演示

URL=https://www.baidu.com/

先获取网站内容

# -*- coding: utf-8 -*-

"""

Spyder Editor

"""

Spyder Editor

This is a temporary script file.

"""

"""

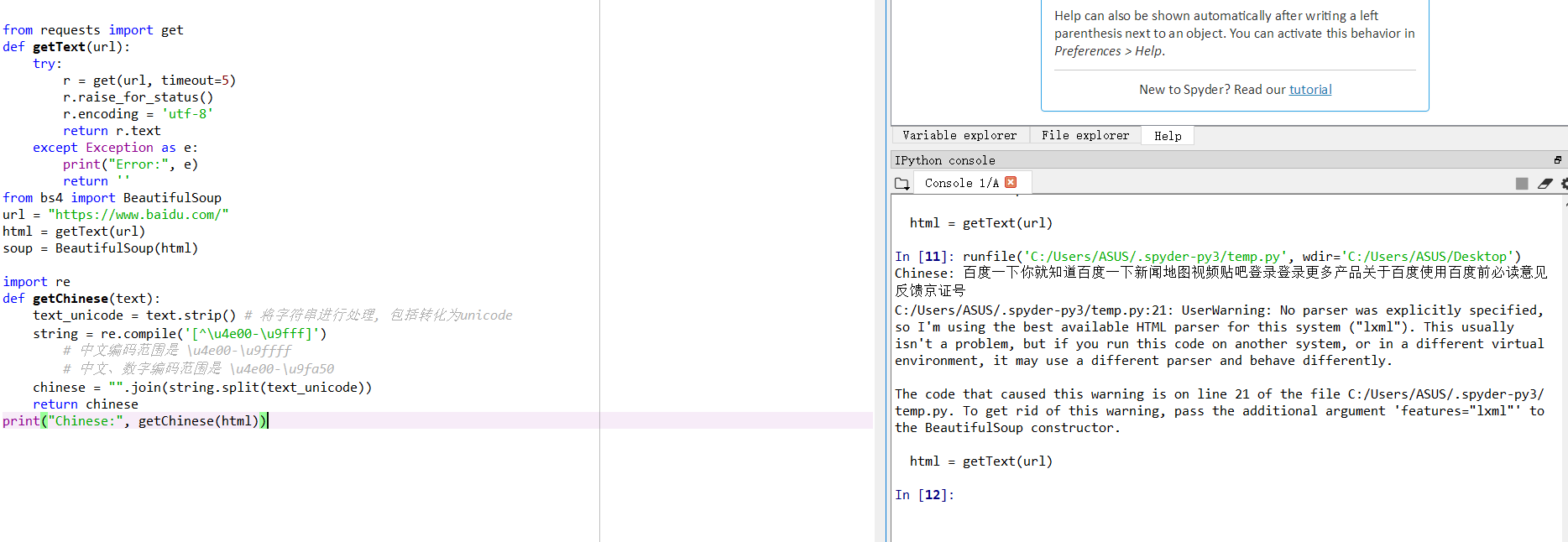

from requests import get def getText(url): try: r = get(url, timeout=5) r.raise_for_status() r.encoding = 'utf-8' return r.text except Exception as e: print("Error:", e) return ''

运用beautifulsoup库

from bs4 import BeautifulSoup

创建一个beautifulsoup对象

url = "https://www.baidu.com/" html = getText(url) soup = BeautifulSoup(html)

现在就可以来有选择的获取网站内容了

获取head

print("head:", soup.head) print("head:", len(soup.head))

获取title的标签和内容

print("title:", soup.title) print("title_string:", soup.title.string)

摘取所有的中文字符

import re def getChinese(text): text_unicode = text.strip() # 将字符串进行处理, 包括转化为unicode string = re.compile('[^\u4e00-\u9fff]') # 中文编码范围是 \u4e00-\u9ffff # 中文、数字编码范围是 \u4e00-\u9fa50 chinese = "".join(string.split(text_unicode)) return chinese

print("Chinese:", getChinese(html))

以上就是获取网站特定内容的方式了,我们也可以在其他网址使用。

4.爬取大学排名

# -*- coding: utf-8 -*- """ Spyder Editor zyp26 This is a temporary script file. """ import pandas import requests from bs4 import BeautifulSoup from requests import get# 获得网站数据 def getText(url): try: r = get(url, timeout=5) r.raise_for_status() r.encoding = 'utf-8' return r.text except Exception as e: print("Error:", e) return '' url=http://www.zuihaodaxue.com/zuihaodaxuepaiming2017.html# 选择2017年的大学排名 def fillTabelList(soup): # 获取表格的数据 tabel_list = [] # 存储整个表格数据 Tr = soup.find_all('tr') for tr in Tr: Td = tr.find_all('td') if len(Td) == 0: continue tr_list = [] # 存储一行的数据 for td in Td: tr_list.append(td.string) tabel_list.append(tr_list) return tabel_list # 可视化展示数据 def PrintTableList(tabel_list, num): # 输出前num行数据 print("{1:^2}{2:{0}^10}{3:{0}^5}{4:{0}^5}{5:{0}^8}".format(chr(12288), "排名", "学校名称", "省市", "总分", "生涯质量")) for i in range(num): text = tabel_list[i] print("{1:{0}^2}{2:{0}^10}{3:{0}^5}{4:{0}^8}{5:{0}^10}".format(chr(12288), *text)) #储存为csv格式 def saveAsCsv(filename, tabel_list): FormData = pandas.DataFrame(tabel_list) FormData.columns = ["排名", "学校名称", "省市", "总分", "生涯质量", "培养结果", "科研规模", "科研质量", "顶尖成果", "顶尖人才", "科研服务", "产学研合作", "成果转化"] FormData.to_csv(filename) if __name__ == "__main__": url = "http://www.zuihaodaxue.cn/zuihaodaxuepaiming2017.html" html = getHTMLText(url) soup = BeautifulSoup(html, features="html.parser") data = fillTabelList(soup) PrintTableList(data, 10) # 输出前10行数据 saveAsCsv("D:\\University_Rank.csv", data

浙公网安备 33010602011771号

浙公网安备 33010602011771号