Setting up a highly available Kafka cluster

The cluster is built on three machines:

192.168.0.191

192.168.0.110

192.168.0.122

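On each machine, pull the ZooKeeper image with docker pull zookeeper:3.4. The docker-compose.yml files below reference zookeeper:latest, so pull whichever tag you actually intend to run.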

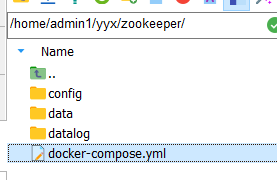

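On each machine, create the directories that will be mounted into the container.

On each machine, open up the permissions on those directories: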

chmod +777 /home/admin1/yyx/zookeeper/

chmod +777 /home/admin1/yyx/zookeeper/config

chmod +777 /home/admin1/yyx/zookeeper/data

chmod +777 /home/admin1/yyx/zookeeper/datalog
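The directory preparation above can also be scripted so that it is identical on all three machines. The following is a minimal sketch, not part of the original setup: the function name `prepare_zookeeper_dirs` is hypothetical, and it assumes the same config, data and datalog layout under a base directory that the chmod commands use.

```python
import os
from pathlib import Path

# Sub-directories mounted into the ZooKeeper container in this guide.
SUBDIRS = ("config", "data", "datalog")


def prepare_zookeeper_dirs(base):
    """Create base and its sub-directories, then set mode 777 on each.

    Hypothetical helper; it mirrors the chmod commands listed above.
    """
    base = Path(base)
    prepared = []
    for path in (base, *(base / name for name in SUBDIRS)):
        path.mkdir(parents=True, exist_ok=True)
        os.chmod(path, 0o777)  # os.chmod is not affected by the umask
        prepared.append(path)
    return prepared


# Example call on a host (path taken from the commands above):
# prepare_zookeeper_dirs("/home/admin1/yyx/zookeeper")
```

Mode 777 matches what the notes do; on a shared host a tighter mode owned by the container user would be the more cautious choice.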

Machine 192.168.0.191

ZooKeeper

docker-compose.yml contents:

    version: '3'
    services:
      zookeeper:
        image: zookeeper:latest
        restart: always
        hostname: zoo
        container_name: zookeeper
        ports:
          - 2181:2181
          - 2888:2888
          - 3888:3888
          - 8080:8080
        volumes:
          - /home/admin1/yyx/zookeeper/config/zoo.cfg:/conf/zoo.cfg
          - /home/admin1/yyx/zookeeper/data:/data
          - /home/admin1/yyx/zookeeper/datalog:/datalog
        environment:
          ZOO_MY_ID: 1

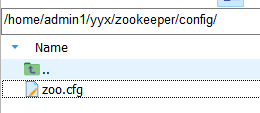

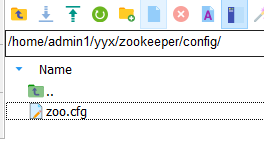

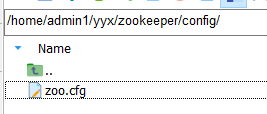

zoo.cfg contents:

    dataDir=/data
    dataLogDir=/datalog
    tickTime=2000
    initLimit=5
    syncLimit=2
    clientPort=2181
    autopurge.snapRetainCount=3
    autopurge.purgeInterval=0
    maxClientCnxns=60
    standaloneEnabled=true
    admin.enableServer=false
    4lw.commands.whitelist=*
    server.1=0.0.0.0:2888:3888
    server.2=192.168.0.110:2888:3888
    server.3=192.168.0.122:2888:3888

Machine 192.168.0.110

ZooKeeper

docker-compose.yml contents:

    version: '3'
    services:
      zookeeper:
        image: zookeeper:latest
        restart: always
        hostname: zoo
        container_name: zookeeper
        ports:
          - 2181:2181
          - 2888:2888
          - 3888:3888
          - 8080:8080
        volumes:
          - /home/admin1/yyx/zookeeper/config/zoo.cfg:/conf/zoo.cfg
          - /home/admin1/yyx/zookeeper/data:/data
          - /home/admin1/yyx/zookeeper/datalog:/datalog
        environment:
          ZOO_MY_ID: 2

zoo.cfg contents:

    dataDir=/data
    dataLogDir=/datalog
    tickTime=2000
    initLimit=5
    syncLimit=2
    clientPort=2181
    autopurge.snapRetainCount=3
    autopurge.purgeInterval=0
    maxClientCnxns=60
    standaloneEnabled=true
    admin.enableServer=true
    4lw.commands.whitelist=*
    server.1=192.168.0.191:2888:3888
    server.2=0.0.0.0:2888:3888
    server.3=192.168.0.122:2888:3888
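In this zoo.cfg the local node (server.2) is listed as 0.0.0.0 while its peers are listed by IP address, and the ID matches ZOO_MY_ID in docker-compose.yml. The sketch below generates the server.N lines for any node following that pattern. The `NODES` mapping and the function name `server_lines` are illustrative assumptions, not something the original setup uses.

```python
# Node ID -> host IP, as used throughout this guide.
NODES = {1: "192.168.0.191", 2: "192.168.0.110", 3: "192.168.0.122"}


def server_lines(my_id, nodes=NODES, quorum_port=2888, election_port=3888):
    """Return the server.N lines for the zoo.cfg of node `my_id`.

    The local node is written as 0.0.0.0 so that the process inside the
    container listens on all interfaces; peers are written by IP.
    """
    if my_id not in nodes:
        raise ValueError(f"unknown node id: {my_id}")
    lines = []
    for node_id in sorted(nodes):
        host = "0.0.0.0" if node_id == my_id else nodes[node_id]
        lines.append(f"server.{node_id}={host}:{quorum_port}:{election_port}")
    return lines


# Example: print the lines for the machine whose ZOO_MY_ID is 2.
# print("\n".join(server_lines(2)))
```

Generating the lines from one mapping keeps the three files from drifting apart when an address changes.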

Machine 192.168.0.122

ZooKeeper

docker-compose.yml contents:

    version: '3'
    services:
      zookeeper:
        image: zookeeper:latest
        restart: always
        hostname: zoo
        container_name: zookeeper
        ports:
          - 2181:2181
          - 2888:2888
          - 3888:3888
          - 8080:8080
        volumes:
          - /home/admin1/yyx/zookeeper/config/zoo.cfg:/conf/zoo.cfg
          - /home/admin1/yyx/zookeeper/data:/data
          - /home/admin1/yyx/zookeeper/datalog:/datalog
        environment:
          ZOO_MY_ID: 3

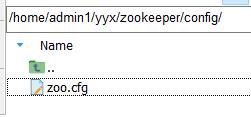

zoo.cfg contents:

    dataDir=/data
    dataLogDir=/datalog
    tickTime=2000
    initLimit=5
    syncLimit=2
    clientPort=2181
    autopurge.snapRetainCount=3
    autopurge.purgeInterval=0
    maxClientCnxns=60
    standaloneEnabled=true
    admin.enableServer=false
    admin.serverPort=10086
    4lw.commands.whitelist=*
    server.1=192.168.0.191:2888:3888
    server.2=192.168.0.110:2888:3888
    server.3=0.0.0.0:2888:3888
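Because every zoo.cfg sets 4lw.commands.whitelist=*, each node should answer ZooKeeper's four-letter-word commands on its client port once the containers are running. The sketch below sends `srvr` and extracts the `Mode:` line (leader, follower or standalone), which is a quick way to confirm that the ensemble has formed. The helper names `four_letter_word` and `parse_mode` are hypothetical, and port 2181 is assumed from clientPort.

```python
import socket


def four_letter_word(host, command="srvr", port=2181, timeout=5.0):
    """Send a four-letter-word command and return the raw text reply.

    ZooKeeper closes the connection after replying, so we read until EOF.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(srvr_output):
    """Return the value of the 'Mode:' line from srvr output, or None."""
    for line in srvr_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


# Example against the three hosts of this guide:
# for host in ("192.168.0.191", "192.168.0.110", "192.168.0.122"):
#     print(host, parse_mode(four_letter_word(host)))
```

In a healthy three-node ensemble one would expect one node to report leader and the other two follower; a node reporting standalone has not joined the quorum.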

======================== ZooKeeper configuration complete; Kafka configuration follows ==========================

Kafka runs on the same three machines: 192.168.0.191, 192.168.0.110 and 192.168.0.122.

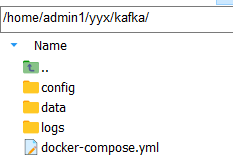

Directory layout

Machine 192.168.0.191

Kafka

docker-compose.yml contents:

    version: '3'
    services:
      kafka:
        image: bitnami/kafka:latest
        restart: always
        hostname: kafka-node-1
        container_name: kafka
        ports:
          - 9092:9092
          - 9999:9999
        volumes:
          - ./logs:/opt/bitnami/kafka/logs
          - ./data:/bitnami/kafka/data
          - ./config/server.properties:/opt/bitnami/kafka/config/server.properties

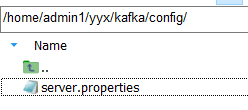

server.properties contents:

    broker.id=1
    listeners=PLAINTEXT://:9092
    advertised.listeners=PLAINTEXT://192.168.0.191:9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/bitnami/kafka/data
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    zookeeper.connect=192.168.0.191:2181,192.168.0.110:2181,192.168.0.122:2181
    zookeeper.connection.timeout.ms=18000
    group.initial.rebalance.delay.ms=0
    auto.create.topics.enable=true
    max.partition.fetch.bytes=1048576
    max.request.size=1048576
    sasl.enabled.mechanisms=PLAINTEXT,SCRAM-SHA-256,SCRAM-SHA-512
    sasl.mechanism.inter.broker.protocol=
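Only a few keys in this server.properties are specific to the host: broker.id must be unique within the cluster and advertised.listeners must carry the address clients use to reach this broker, while zookeeper.connect is the same everywhere. The sketch below renders those host-specific lines for a given broker. The function names are hypothetical, and the broker IDs 2 and 3 used in the example comment are assumptions, since the notes only show broker.id=1.

```python
# Shared by all brokers; taken from zookeeper.connect above.
ZOOKEEPER_CONNECT = "192.168.0.191:2181,192.168.0.110:2181,192.168.0.122:2181"


def broker_overrides(broker_id, host, port=9092):
    """Return the host-specific server.properties settings for one broker."""
    return {
        "broker.id": str(broker_id),
        "listeners": f"PLAINTEXT://:{port}",
        "advertised.listeners": f"PLAINTEXT://{host}:{port}",
        "zookeeper.connect": ZOOKEEPER_CONNECT,
    }


def render_properties(settings):
    """Render a dict as key=value lines in Java properties style."""
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


# Example (broker IDs 2 and 3 are assumed, not taken from the notes):
# print(render_properties(broker_overrides(2, "192.168.0.110")))
# print(render_properties(broker_overrides(3, "192.168.0.122")))
```

The remaining keys (thread counts, retention, replication factors) can be copied unchanged between brokers.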

Machine 192.168.0.110

The Kafka docker-compose.yml and server.properties on this machine follow the files shown for 192.168.0.191. The host-specific values must differ: broker.id has to be unique within the cluster (for example broker.id=2), and advertised.listeners has to point at this host (PLAINTEXT://192.168.0.110:9092). The container hostname is presumably kafka-node-2, by analogy with kafka-node-1.

Machine 192.168.0.122

The Kafka docker-compose.yml and server.properties on this machine again follow the files shown for 192.168.0.191, with a unique broker.id (for example broker.id=3) and advertised.listeners set to PLAINTEXT://192.168.0.122:9092. The container hostname is presumably kafka-node-3.

Once the files above are in place, run docker-compose up -d on each machine to create the containers.

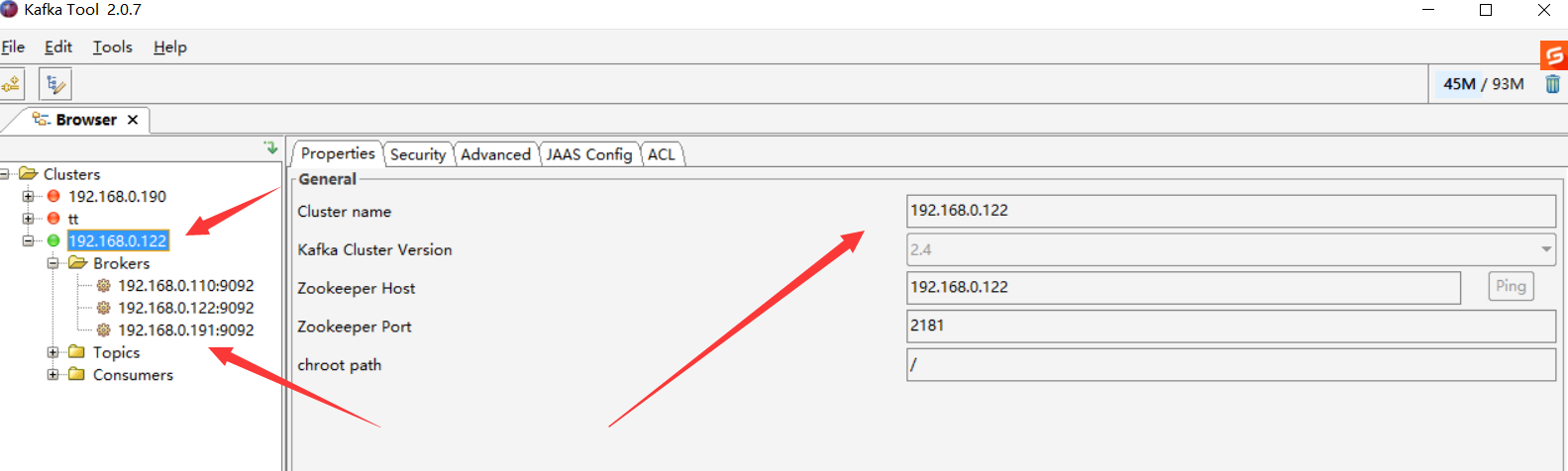

Connecting Kafka Tool to 192.168.0.191 shows that all three nodes have joined the cluster.
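Before opening a GUI client, a plain TCP check can confirm that each broker's advertised port is reachable from the client machine. This is only a sketch with hypothetical helper names; it assumes port 9092 as published in docker-compose.yml, and an open port shows that the listener is up, not that the broker has registered with the cluster.

```python
import socket

# Broker addresses as advertised in this guide.
BROKERS = [
    ("192.168.0.191", 9092),
    ("192.168.0.110", 9092),
    ("192.168.0.122", 9092),
]


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_brokers(brokers=BROKERS, timeout=3.0):
    """Map 'host:port' to a reachability flag for every broker."""
    return {f"{host}:{port}": is_reachable(host, port, timeout) for host, port in brokers}


# Example:
# for address, ok in check_brokers().items():
#     print(address, "reachable" if ok else "NOT reachable")
```

If a broker is reachable here but missing in the client, the advertised.listeners value on that broker is the first thing worth checking.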

Reference: Deploying a Kafka cluster across multiple servers with docker-compose (野生的大熊's blog, CSDN)

Reference: ZooKeeper: Because Coordinating Distributed Systems is a Zoo (apache.org)

Reference: Installing a ZooKeeper cluster with Docker (Carlos__z's blog, CSDN)

Reference: Building a ZooKeeper cluster with Docker Desktop on Windows 10 (InkYi, cnblogs.com)
