PyTorch Study Notes (6): Non-linear Activations in Neural Networks

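If each neuron's output is a linear function of its input, then nesting such functions still yields a linear function. Without some non-linear processing, the whole network remains a single linear function no matter how many layers it has. This is why non-linear activation functions have to be introduced into a neural network.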
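The collapse described above can be checked numerically. The following is a minimal sketch in plain Python, using one-dimensional "layers" with made-up weights (`w1`, `b1`, `w2`, `b2` are illustrative values, not taken from any model). The same algebra holds for weight matrices: W2(W1·x + b1) + b2 = (W2·W1)·x + (W2·b1 + b2).

```python
# Two stacked 1-D linear "layers": y = w1*x + b1, then z = w2*y + b2.
# All weights are made-up illustrative values.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def linear_stack(x):
    """Two linear layers with no activation in between."""
    return w2 * (w1 * x + b1) + b2


# The same map rewritten as ONE linear layer.
w, b = w2 * w1, w2 * b1 + b2


def single_linear(x):
    return w * x + b


def relu(x):
    return max(0.0, x)


def relu_stack(x):
    """The same two layers, but with ReLU inserted between them."""
    return w2 * relu(w1 * x + b1) + b2


xs = [-2.0, -0.5, 0.0, 1.0, 3.0]

# Without an activation, the stack matches the single layer at every test point.
collapsed = all(abs(linear_stack(x) - single_linear(x)) < 1e-12 for x in xs)

# With ReLU in between, the stack no longer matches that single linear layer.
relu_differs = any(abs(relu_stack(x) - single_linear(x)) > 1e-12 for x in xs)

print("two linear layers == one linear layer:", collapsed)
print("ReLU stack differs from that layer:   ", relu_differs)
```

Scalars are used only to keep the example short; the point is that depth alone adds no expressive power until a non-linearity sits between the layers.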

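Common non-linear activation functions include Sigmoid, tanh, ReLU and Leaky ReLU. They are described in detail in the post "Important Concepts in Neural Networks (Hyperparameters, Activation Functions, Loss Functions, Learning Rate, etc.)".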
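For quick reference, the four functions named above can be written out as a rough sketch in plain Python using their standard textbook definitions. The `negative_slope=0.01` value is an assumption chosen to mirror the default of `torch.nn.LeakyReLU`; the function names themselves are only illustrative.

```python
import math


def sigmoid(x):
    """Squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squashes any real number into (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Keeps positive values and clips negative values to 0."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs keep a small slope instead of becoming 0."""
    return x if x >= 0 else negative_slope * x


# Evaluate each function at a negative, zero and positive input.
for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  tanh={tanh(x):+.4f}  "
          f"relu={relu(x):.1f}  leaky_relu={leaky_relu(x):+.2f}")
```

In PyTorch these correspond to the `nn.Sigmoid`, `nn.Tanh`, `nn.ReLU` and `nn.LeakyReLU` modules, which apply the same formulas element-wise to a tensor.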

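As a rough rule of thumb in these notes, ReLU tends to work better for natural language and Sigmoid for images. Treat this as a heuristic rather than a rule: ReLU is also the usual default for hidden layers in image models, while Sigmoid is typically used where an output in (0, 1) is needed.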

(1) ReLU

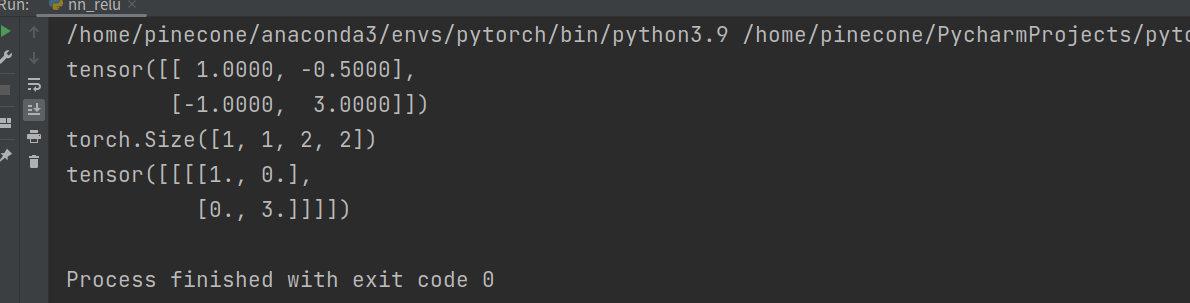

    import torch
    from torch import nn
    from torch.nn import ReLU

    input = torch.tensor([[1, -0.5],
                          [-1, 3]])
    print(input)

    # Reshape to (batch, channels, height, width); -1 lets PyTorch infer the batch size.
    input = torch.reshape(input, (-1, 1, 2, 2))
    print(input.shape)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.relu = ReLU()

        def forward(self, input):
            # ReLU is applied element-wise: negative entries become 0.
            output = self.relu(input)
            return output


    tudui = Tudui()
    output = tudui(input)
    print(output)

(2) Sigmoid

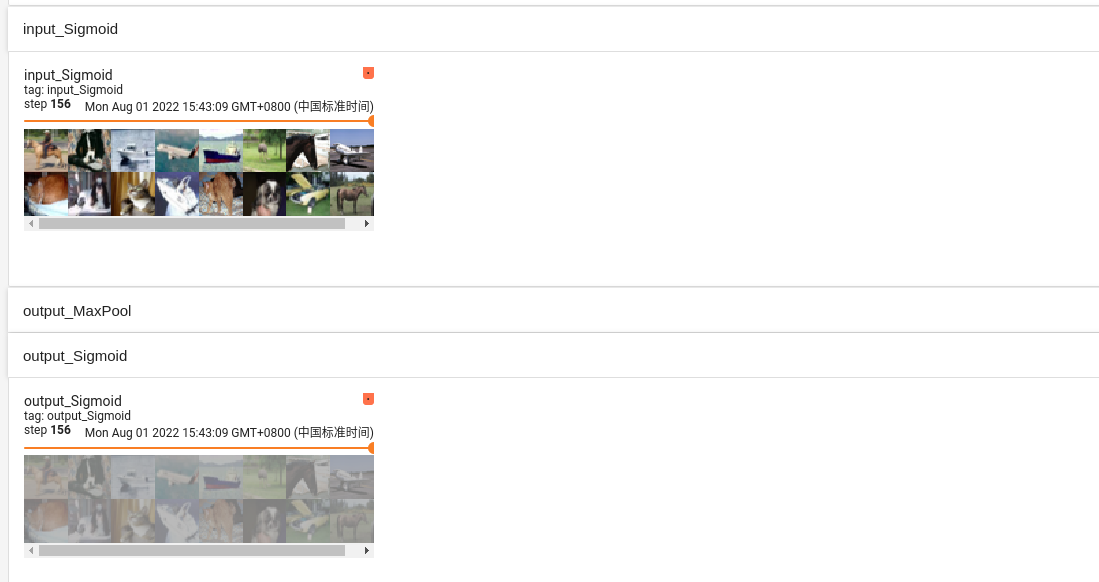

    import torch
    import torchvision.datasets
    from torch import nn
    from torch.nn import Sigmoid
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    # CIFAR10 test split; ToTensor converts each image to a float tensor in [0, 1].
    # Pass download=True if the dataset is not already present in ../dataset.
    dataset = torchvision.datasets.CIFAR10("../dataset", train=False,
                                           transform=torchvision.transforms.ToTensor())
    dataloader = DataLoader(dataset, batch_size=64)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.sigmoid1 = Sigmoid()

        def forward(self, input):
            output = self.sigmoid1(input)
            return output


    tudui = Tudui()

    # Log each batch before and after Sigmoid so both can be compared in TensorBoard.
    writer = SummaryWriter("../logs")
    step = 0
    for data in dataloader:
        imgs, target = data
        writer.add_images("input_Sigmoid", imgs, global_step=step)
        output = tudui(imgs)
        writer.add_images("output_Sigmoid", output, global_step=step)
        step = step + 1

    writer.close()
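To get a feel for what the Sigmoid step does to image data, here is a small sketch in plain Python. It assumes pixel intensities already scaled to [0, 1], as `ToTensor` produces; the sample values in `pixels` are hypothetical and not read from CIFAR10.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical pixel intensities after ToTensor scaling (0 = black, 1 = white).
pixels = [0.0, 0.25, 0.5, 0.75, 1.0]
squashed = [sigmoid(p) for p in pixels]

for p, s in zip(pixels, squashed):
    print(f"{p:.2f} -> {s:.4f}")

# Sigmoid is monotonic, so inputs in [0, 1] land in [sigmoid(0), sigmoid(1)].
print("output range:", min(squashed), "to", max(squashed))
```

Because inputs in [0, 1] are compressed into roughly [0.5, 0.73], the "output_Sigmoid" images are likely to look grayer and lower in contrast than the "input_Sigmoid" images when viewed in TensorBoard.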

