数据库高可用介绍

数据库中的高可用功能,主要是用于避免数据库服务或数据信息的损坏问题,其中数据损坏的类型有:

- 数据物理损坏:磁盘、主机、程序实例、数据文件误删除

- 数据逻辑损坏:drop update ...

数据库高可用技术的出现主要解决的是数据逻辑损坏问题,而主从架构技术主要解决的是数据物理损坏问题

数据库高可用解决方案选型依据

| 无故障率 | 故障时间 | 解决方案 |

|---|---|---|

| 99.9% | 0.1%(525.6min) | keepalived+双主架构,但需要人为干预 |

| 99.99% | 0.01%(52.56min) | MHA ORCH TMHA,具有自动监控,自动切换,自动数据补偿,但还是属于半自动化 比较适合非金融类互联网公司 eg: facebook taobao前端-TMHA-->polaradb |

| 99.999% | 0.001%(5.256min) | PXC MGR MGC,数据是高一致性 比较适合金融类互联网公司 |

| 99.9999% | 0.0001%(0.5256min) | 自动化、云计算化、平台化,仍然属于概念阶段 |

| 无故障率 | 故障时间 | 解决方案 |

|---|---|---|

| 99.9% | 0.1%(525.6min) | keepalived+双主架构,但需要人为干预 |

| 99.99% | 0.01%(52.56min) | MHA ORCH TMHA,具有自动监控,自动切换,自动数据补偿,但还是属于半自动化 比较适合非金融类互联网公司 eg: facebook taobao前端-TMHA-->polaradb |

| 99.999% | 0.001%(5.256min) | PXC MGR MGC,数据是高一致性 比较适合金融类互联网公司 |

| 99.9999% | 0.0001%(0.5256min) | 自动化、云计算化、平台化,仍然属于概念阶段 |

MHA介绍

MHA是一套优秀的作为MySQL高可用性环境下故障切换和主从提升的高可用软件 MySQL进行故障切换过程中,MHA能做到在0~30秒之内自动完成数据库的故障切换操作,并且在进行故障切换过程中; MHA能在最大程度上保证数据的一致性,以达到真正意义上的高可用 MHA主要有两部分组成: MHA Manager(管理节点) 可以单独部署在一台独立的机器上管理多个master-slave集群,也可以部署在一台slave上,不要部署在主节点上 MHA Node(数据节点) MHA Manager 会定时探测集群中的master节点,当master出现故障时,它可以自动将最新数据的slave提升为新的master; 然后将所有其他的slave重新指向新的master,整个故障转移过程对应用程序是完全透明的;

MHA软件结构介绍:(MHA中的所有组件就是perl语言编写的功能脚本)

| 节点信息 | 软件组件 | 作用介绍 |

|---|---|---|

| MHA Manager(管理节点) | masterha_manger | 用于启动MHA监控进程 |

| masterha_check_ssh | 检查MHA集群中各节点间的SSH免密登录配置是否正常(用于检查MHA的SSH配置互信状况) | |

| masterha_check_repl | 用于检查MySQL主从复制状态,以及配置信息 | |

| masterha_master_monitor | 用于检测master是否宕机 | |

| masterha_check_status | 用于检测当前MHA运行状态 | |

| masterha_master_switch | 用于主库切换(自动故障转移或者手动在线切换) | |

| masterha_conf_host | 动态的添加或删除配置文件中的server信息 | |

| MHA Node(数据节点) | save_binary_logs | 保存和复制master的二进制日志 |

| apply_diff_relay_logs | 识别差异的中继日志事件并将其差异的事件应用于其他slave | |

| purge_relay_logs | 清除中继日志(不会阻塞SQL线程) |

数据库服务高可用环境构建

MHA高可用架构基础环境(三台数据库+GTID复制)

| 主机角色 | 主机名称 | 地址信息 |

|---|---|---|

| 主库服务器 | 192.168.30.101 | 3306 |

| 从库服务器 | 192.168.30.102 | 3306 |

| 从库服务器 | 192.168.30.103(兼做管理节点) | 3306 |

- 清除原有环境的数据和日志(所有节点)

pkill mysqld rm -rf /data/3306/data/* rm -rf /data/3306/binlog/*

- 配置文件(所有节点)

cat /data/3306/my.cnf [mysql] prompt=db01 [\\d]> socket=/tmp/mysql.sock [mysqld] user=mysql basedir=/usr/local/mysql datadir=/data/3306/data socket=/tmp/mysql.sock server_id=51 port=3306 default_authentication_plugin=mysql_native_password log_bin=/data/3306/binlog/mysql-bin log_bin_index=/data/3306/binlog/mysql-bin.index binlog_format=row gtid-mode=on enforce-gtid-consistency=true log-slave-updates=1 autocommit=0

- 初始化、启动(所有节点)

mysqld --initialize-insecure --user=mysql --basedir=/usr/local/mysql \

--datadir=/data/3306/data

/usr/local/mysql/bin/mysqld_safe --defaults-file=/data/3306/my.cnf &

- 重建主从关系

# 主库操作 db01 [(none)]>create user repl@'10.0.0.%' identified with mysql_native_password by '123456'; db01 [(none)]>grant replication slave on *.* to repl@'10.0.0.%'; # 从库操作(db01、db02) stop slave; reset slave all; change master to master_host='10.0.0.51', master_user='repl', master_password='123456', master_auto_position=1; start slave;

MHA高可用软件安装部署

- 创建程序命令软连接(所有节点均配置)

ln -s /usr/local/mysql/bin/mysqlbinlog /usr/bin/mysqlbinlog ln -s /usr/local/mysql/bin/mysql /usr/bin/mysql -- 由于HMA加载数据库命令,默认会在/usr/bin/目录下面进行加载

- 配置各个节点互信(所有节点均配置)

ssh-keygen -t rsa -f /root/.ssh/id_rsa -P '' # 创建密钥 ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.0.0.x # 分发公钥 ssh 10.0.0.x date # 测试

- 安装mha软件包及其依赖

# 所有节点安装mha-Node软件及其依赖 yum install perl-DBD-MySQL -y # 让Perl脚本能够连接和操作mysql数据库的驱动(没有它Perl程序将无法访问MySQL) yum install -y mha4mysql-node-0.58-0.el7.centos.noarch.rpm # 管理安装mha-manager需要安装的包(db03) [root@db03 ~]# yum install -y perl-Config-Tiny epel-release perl-Log-Dispatch \ perl-Parallel-ForkManager perl-Time-HiRes [root@db03 ~]# yum install -y mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

- 在db01主库中创建mha监控用户

db01 [(none)]>create user mha@'10.0.0.%' identified with mysql_native_password by 'mha'; db01 [(none)]>grant all privileges on *.* to mha@'10.0.0.%';

- manager配置文件(db03)

[server default] manager_log=/var/log/mha/app01/manager manager_workdir=/var/log/app01 master_binlog_dir=/data/3306/binlog user=mha password=mha ping_interval=2 repl_user=repl repl_password=123456 ssh_user=root [server1] hostname=10.0.0.51 port=3306 [server2] hostname=10.0.0.52 port=3306 [server3] hostname=10.0.0.53 port=3306

[root@db03 ~]# mkdir -p /etc/mha # 创建配置文件目录 [root@db03 ~]# mkdir -p /var/log/mha/app01 # 创建日志目录(这个配置文件需要有写的权限) [root@db03 ~]# vim /etc/mha/app01.cnf [server default] # 全局默认配置块:适用于所有服务器节点 manager_log=/var/log/mha/app01/manager # MHA Manager进程的日志文件路径 manager_workdir=/var/log/app01 # MHA Manager的工作目录 master_binlog_dir=/data/3306/binlog # 主服务器上binlog日志的实际存放目录路径(当主库宕机但SSH还能连接时,MHA 从这个目录抢救binlog) user=mha # 监控用户 password=mha # 监控用户密码 ping_interval=2 # 心跳间隔秒数(MHA Manager会每隔2秒钟连接一次主库,判断主库是否存活) repl_user=repl # 配置主从复制的用户 repl_password=123456 # 主从复制用户密码 ssh_user=root # ssh连接用户(ssh互信用户:通过互信用户从主库scp获取binlog日志信息,便于从库进行数据信息补偿) [server1] # 各个MySQL服务器的配置块 hostname=10.0.0.51 # MySQL服务器的IP地址或主机名 port=3306 # MySQL服务的端口号 [server2] hostname=10.0.0.52 port=3306 [server3] hostname=10.0.0.53 port=3306

- ssh互信功能检查

# 在MHA管理节点,进行ssh互信功能检查(显示成功表示检查通过) [root@db03 ~]# masterha_check_ssh --conf=/etc/mha/app01.cnf All SSH connection tests passed successfully.

- MHA配置的MySQL主从复制集群是否正常

# 在MHA管理节点,全面检查MHA配置下的MySQL主从复制集群是否正常 [root@db03 ~]# masterha_check_repl --conf=/etc/mha/app01.cnf MySQL Replication Health is OK.

- 启动MHA监控(MHA管理节点db03)

MHA在完成主从切换后会自动停止监控进程,需要手动重启以继续监控新的主从拓扑(--ignore_last_failover)

nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 & nohup:让进程忽略挂断信号(即使终端关闭,进程也不会退出) masterha_manager:用于启动MHA监控进程 --conf=/etc/mha/app01.cnf:指定MHA配置文件路径 --remove_dead_master_conf:故障转移成功后,自动从配置文件中移除宕机的主库配置 --ignore_last_failover:忽略上一次故障转移的记录,避免因检测到旧纪录而拒绝启动 </dev/null:将进程的标准输入重定向到空设备,避免像输入密码一样等待输入 >/var/log/ha/app01/manager.log:将标准输出重定向到日志文件 2>&1:将标准错误重定向到标准输出,即错误信息也写入同一个日志文件

- 查看MHA状态

[root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf app01 (pid:5551) is running(0:PING_OK), master:10.0.0.51 # 显示以上提示信息,表示MHA基础环境搭建成功了,但还不能在生产环境使用,还需要有后续的操作配置

主库崩溃测试及恢复

# 模拟主库出现异常 [root@db01 ~]# kill mysqld # 监控管理节点进行查看MHA状态 [root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf app01 is stopped(2:NOT_RUNNING). # db02节点 show master status; # 显示对应的信息 show slave status\G # 显示为空 # db03节点 show slave status\G Master_Host: 10.0.0.52 Slave_IO_Running: Yes Slave_SQL_Running: Yes 现象:当在db02中插入相关数据时,db03就是不能同步数据 原因:MHA在完成主从切换后会自动停止监控进程,需要手动重启以继续监控新的主从拓扑(--ignore_last_failover) # 将db01进行恢复,并加入到当前主从结构(需要重启监控进程) vim /etc/mha/app01.cnf [server1] hostname=10.0.0.51 port=3306 stop slave; reset slave all; change master to master_host='10.0.0.52', master_user='repl', master_password='123456', master_auto_position=1; start slave;

nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 &

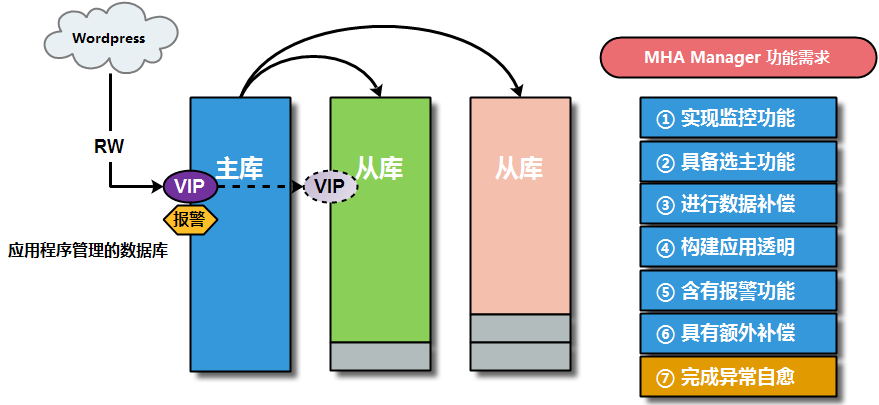

数据库服务高可用工作原理

应用高可用服务可以解决哪些痛点:

① 如何在高可用架构中,当主库宕机异常后,使之及时的发现主库服务程序产生了运行异常?

解决此痛点问题,需要实现高可用的监控需求;

② 如何在高可用架构中,当主库宕机异常后,可以找到可以替代主库的服务器主机进行切换?

解决此痛点问题,需要实现高可用的选主功能;(并且选择数据量越接近主库的从库成为新主)

③ 如何在高可用架构中,当主库宕机异常后,新的主库接管后可以保证与原有主库数据一致?

解决此痛点问题,需要实现高可用的数据补偿;

④ 如何在高可用架构中,当主库宕机异常后,将应用程序的读写请求对接切换到新的主库上?

解决此痛点问题,需要实现高可用的应用透明;(VIP技术)

⑤ 如何在高可用架构中,当主库宕机异常后,能够及时向管理员发起告知提醒使之进行修复?(MHA切换是一次性的)

解决此痛点问题,需要实现高可用的报警功能;

⑥ 如何在高可用架构中,当主库宕机异常后,当整体主库系统环境都异常时实现数据的补偿?

解决此痛点问题,需要实现高可用的额外补偿;

⑦ 如何在高可用架构中,当主库宕机异常后,根据主库服务器的异常情况进行原有主库修复?

解决此痛点问题,需要实现高可用的自愈功能;(待开发,只有云平台RDS具有此功能)

MHA软件启动

nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 &

根据以上启动命令,需要先调取MHA启动脚本文件masterha_manager ,然后在调取加载MHA软件的配置文件--conf\

=.../app01.cnf,会根据加载的MHA的配置文件不同,实现管理多个高可用架构环境,进行高可用业务的架构环境的区分;

--remove_dead_master_conf参数表示在主节点出现宕机情况时,将会从集群中被踢出,即从配置文件中删除掉故障节点; --ignore_last_failover 忽略上一次故障转移的记录,避免因检测到旧纪录而拒绝启动 (MHA设计了一个安全机制:成功完成一次故障转移后,他会在工作目录中创建一个状态文件。\

当MHA Manager再次启动时,会检查到这个状态文件存在,系统会认为刚刚完成了一次故障转移,\

现在立即启动监控不安全,会拒绝启动并报错) 最后将MHA启动运行的信息放入到日志文件中即可 /var/log/mha/app01/manager.log 2>&1

MHA实现监控

MHA启动脚本文件masterha_manager会自动调用监控脚本文件masterha_master_monitor,并且每隔配置文件指定的时间(ping_interval=2)进行脚本监控一次,从而判断主节点是否处于存活状态(如果短时间连续多次检查失败,则确认主库不可用,启动故障转移流程)

[root@db03 ~]# mysql -umha -pmha -h10.0.0.53 -e "select user();" mysql: [Warning] Using a password on the command line interface can be insecure. +----------+ | user() | +----------+ | mha@db03 |

+----------+

主节点存活的定义:MHA Manager能够用监控账户成功连接到主库并执行简单查询

MHA选主过程

在进行选主时,主要会关注竞选新主节点的日志量、以及是否设置candidate_master参数配置信息;

| 数组信息 | 简述 | 作用说明 |

|---|---|---|

| alive | 存活数组 | 主要用于探测存活的节点状态;当主库宕机后,探测的就是两个从库节点 |

| latest | 最新数组 | 表示获取日志最新的从库信息,即数据量最接近主库的从库(根据GTID信息 或 position信息) |

| pref | 备选数组 | 在数组中具有candidate_master参数判断条件,此参数可以放入配置文件节点中,便于节点优先选择为新主 |

| bad | 不选数组 | 如果设定了参数:no_master=1,表示相应节点不参与竞选主; 如果设定了参数:log_bin=0(二进制日志没开),表示相应节点不参与竞选主; 如何设定了参数:check_slave_delay,检查从库延迟主库100M数据信息日志量,表示节点不参与竞选主 |

选主策略简述表:

| 优先级 | alive数组 | latest数组 | pref数组 | bad数组 | 选主策略 | 多个选择情况 |

|---|---|---|---|---|---|---|

| 01 | 满足 |

满足 |

满足 |

不满足 | 优选选择 | 按照节点号码顺序选择 |

| 02 | 满足 |

满足 |

不满足 | 不满足 | 优选选择 | 按照节点号码顺序选择 |

| 03 | 满足 |

不满足 | 满足 |

不满足 | 优选选择 | 按照节点号码顺序选择 |

| 04 | 满足 |

不满足 | 不满足 | 不满足 | 优选活着节点 | 按照节点号码顺序选择 |

说明:在进行手工指定切换新主时,即应用了prio_new_master_host参数信息时,会最优先选择相应节点为新主;

MHA数据补偿

在进行数据补偿之前,需要让新主库与原有宕机主库进行对比,获悉需要补偿的数据量情况,即对比差的数据日志量信息;

然后可以从binlog日志中,进行补充数据信息的截取,随之进行数据信息补偿,但是有种特殊情况,若原有主库无法访问了;

# 环境:原主库SSH可连接(理想情况) MHA在故障转移时自动执行: save_binary_logs --command=save --start_file=mysql-bin.000123 --start_pos=456 --ssh_user=root 操作:直接从原主库的 master_binlog_dir 抢救最新的 binlog 优势:数据零丢失,最完整的补偿 # 环境:原主库SSH不可连接(服务器宕机) 比较各个从库的中继日志差异 apply_diff_relay_logs --server=新主库 --other_servers=其他从库 操作:从数据最新的从库的中继日志中生成差异补丁 风险:可能丢失原主库上已提交但未传输到任何从库的数据 # 预先配置 Binlog Server(最优方案) 提前部署了 Binlog Server 实时同步主库binlog vim /etc/mha/app01.cnf [binlog1] # 节点标识:定义这是一个Binlog Server节点(必须以binlog开头,后面跟数字) no_master=1 # 禁止选为主库 hostname=10.0.0.53 # 一台独立的服务器实时同步主库的binlog,即使原主库完全损坏无法SSH,也能从这台服务器获取binlog master_binlog_dir=/data/binlog_backup # 实时同步的二进制存放位置(该目录需要有写的权限) 优势:即使原主库完全损坏,也能保证数据零丢失 原理:Binlog Server 实时拉取主库binlog作为冗余备份

MHA业务切换(主从关系切换)

自动解除原有的主从关系,实现新的主从关系的建立;

# 所有从库自动解除主从关系操作 stop slave; reset slave; # 所有从库自动重构主从关系操作 change master to ...

MHA应用透明(利用vip功能)

利用vip功能,实现对前端的访问

进行MHA的VIP地址漂移时,只能在局域网环境进行漂移,不能实现跨网段的VIP地址漂移;

#!/usr/bin/env perl use strict; use warnings FATAL => 'all'; use Getopt::Long; use MHA::DBHelper; my ( $command, $ssh_user, $orig_master_host, $orig_master_ip, $orig_master_port, $new_master_host, $new_master_ip, $new_master_port, $new_master_user, $new_master_password ); my $vip = '10.0.0.50/24'; my $key = "1"; my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip"; my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down"; GetOptions( 'command=s' => \$command, 'ssh_user=s' => \$ssh_user, 'orig_master_host=s' => \$orig_master_host, 'orig_master_ip=s' => \$orig_master_ip, 'orig_master_port=i' => \$orig_master_port, 'new_master_host=s' => \$new_master_host, 'new_master_ip=s' => \$new_master_ip, 'new_master_port=i' => \$new_master_port, 'new_master_user=s' => \$new_master_user, 'new_master_password=s' => \$new_master_password, ); exit &main(); sub main { if ( $command eq "stop" || $command eq "stopssh" ) { my $exit_code = 1; eval { $exit_code = 0; }; if ($@) { warn "Got Error: $@\n"; exit $exit_code; } exit $exit_code; } elsif ( $command eq "start" ) { my $exit_code = 10; eval { print "Enabling the VIP - $vip on the new master - $new_master_host \n"; &start_vip(); &stop_vip(); $exit_code = 0; }; if ($@) { warn $@; exit $exit_code; } exit $exit_code; } elsif ( $command eq "status" ) { print "Checking the Status of the script.. OK \n"; `ssh $ssh_user\@$orig_master_host \" $ssh_start_vip \"`; exit 0; } else { &usage(); exit 1; } } sub start_vip() { `ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`; } # A simple system call that disable the VIP on the old_master sub stop_vip() { `ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`; } sub usage { print "Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n"; }

# 上传MHA所需的脚本文件 [root@db03 ~]# cd /usr/local/bin/ [root@db03 bin]# chmod +x /usr/local/bin/master_ip_failover # 修改MHA脚本文件的信息 [root@db03 bin]# vim master_ip_failover my $vip = '10.0.0.50/24'; my $key = "1"; my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip"; my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down"; # 修改配置文件 [root@db03 ~]# vim /etc/mha/app01.cnf master_ip_failover_script=/usr/local/bin/master_ip_failover # 手工在主库上添加VIP 确保所有的节点上都有ifconfig命令(yum update -y , yum install net-tools -y) ifconfig eth0:1 10.0.0.50/24 # 在主库节点手工添加vip地址信息(通过ip address查看)

# 重启MHA服务 [root@db03 ~]# masterha_stop --conf=/etc/mha/app01.cnf [root@db03 ~]# nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 & [root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf

# 进行VIP地址连接测试 -- 可以使用navcat软件,连接MHA的vip地址,查看所连主机信息是否为主节点,当故障转移后可以核实VIP地址是否持续连接;

MHA故障报警

邮件发送脚本/usr/local/bin/mha_notification

chmod +x /usr/local/bin/mha_notification

#!/usr/bin/env perl use strict; use warnings; my $smtp_server = 'smtp.qq.com'; my $smtp_user = '2573013863@qq.com'; my $smtp_password = 'qfpoqradxtvlecgf'; my $to_email = '2573013863@qq.com'; my ($event, $msg) = @ARGV; my $time = scalar(localtime); my %events = ( 'master_failover' => '[MHA告警] 主库切换完成', 'master_failover_start'=> '[MHA紧急] 开始切换', 'master_online' => '[MHA信息] 在线切换', 'error' => '[MHA错误] 监控异常', ); my $subject = $events{$event} || '[MHA通知] 未知事件'; my $body = "事件: $event\n时间: $time\n详情: $msg"; eval { require Mail::Sender; my $sender = Mail::Sender->new({ smtp => $smtp_server, port => 587, auth => 'LOGIN', authid => $smtp_user, authpwd => $smtp_password, from => $smtp_user, tls => 1 }); $sender->MailMsg({to => $to_email, subject => $subject, msg => $body}); print "邮件发送成功: $subject\n"; }; if ($@) { print "发送失败: $@\n" } open my $log, '>>', '/var/log/mha/notification.log'; print $log "$time - $event - $msg\n"; close $log;

# 上传MHA所需的脚本文件 [root@db03 ~]# cd /usr/local/bin/ [root@db03 ~]# chmod +x /usr/local/bin/mha_notification [root@db03 ~]# vim /usr/local/bin/mha_notification my $smtp_server = 'smtp.qq.com'; # QQ邮箱SMTP服务器 my $smtp_port = 587; # QQ邮箱端口 my $from_email = '2573013863@qq.com'; # 发件邮箱 my $smtp_user = '2573013863@qq.com'; # 邮箱账号 my $smtp_password = 'qfpoqradxtvlecgf'; # 邮箱授权码(不是密码!) $to_email ||= '2573013863@qq.com'; # 默认收件人(如果命令行没指定) # 修改配置文件 [root@db03 ~]# vim /etc/mha/app01.cnf report_script=/usr/local/bin/mha_notification

# 重启MHA服务 [root@db03 ~]# masterha_stop --conf=/etc/mha/app01.cnf [root@db03 ~]# nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 & [root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf

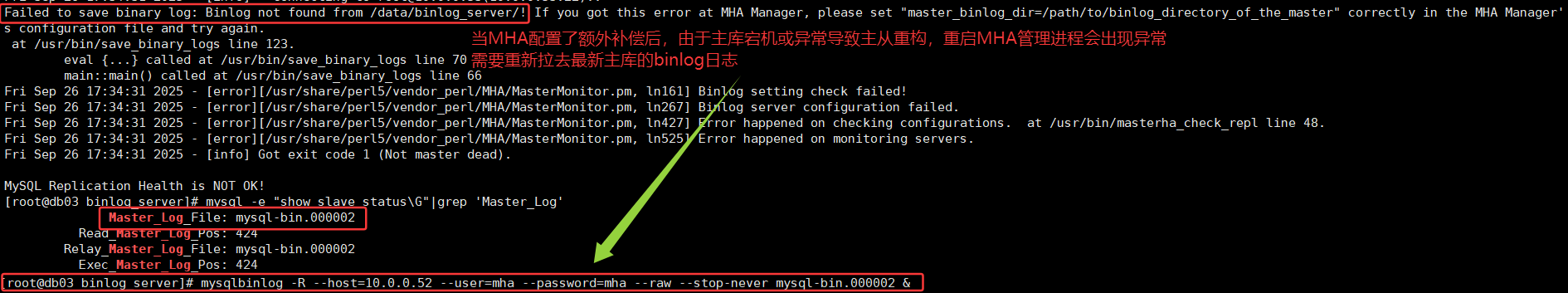

MHA额外补偿

利用binlog_server作为额外的日志补偿的冗余方案,即实时保存主库的bin_log日志文件到特定节点目录中;

# 创建日志存放目录 [root@db03 ~]# mkdir -p /data/binlog_server/ [root@db03 ~]# chown -R mysql.mysql /data/binlog_server/ [root@db03 ~]# mysql -e "show slave status\G"|grep 'Master_Log' Master_Log_File: mysql-bin.000001 Read_Master_Log_Pos: 1564 Relay_Master_Log_File: mysql-bin.000001 Exec_Master_Log_Pos: 1564 # 拉取日志的并指定主库当前的binlog日志位置作为起点拉去 [root@db03 data]# cd /data/binlog_server/ [root@db03 binlog_server]# mysqlbinlog -R --host=10.0.0.51 --user=mha \ --password=mha --raw --stop-never mysql-bin.000001 &

[root@db03 ~]# ps -ef |grep mysqlbinlog # 编写配置文件信息 [root@db03 ~]# vim /etc/mha/app01.cnf [binlog1] no_master=1 hostname=10.0.0.53 master_binlog_dir=/data/binlog_server/ # 重启MHA服务 [root@db03 ~]# masterha_stop --conf=/etc/mha/app01.cnf [root@db03 ~]# nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 & [root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf

ps -ef |grep mysqlbinlog

数据库服务高可用故障切换日志分析(实现MHA高可用切换六个步骤)

[root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf app01 (pid:8296) is running(0:PING_OK), master:10.0.0.52 [root@db02 ~]# pkill mysqld [root@db03 ~]# tail -f /var/log/mha/app01/manager

- 01 MHA健康检查报错,显示主数据库节点无法正常连接

[warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.0.0.52' (111)) [warning] Connection failed 2 time(s).. [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.0.0.52' (111)) [warning] Connection failed 3 time(s).. [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.0.0.52' (111)) [warning] Connection failed 4 time(s).. [warning] Master is not reachable from health checker! [warning] Master 10.0.0.52(10.0.0.52:3306) is not reachable! # 对故障主节点进行4次健康检查,主节点数据库服务仍旧无法连接,即判定主节点故障

- 02 MHA检测到主库宕机,正根据GTID复制进度自动选举数据最新的从库为新主

[info] Connecting to a master server failed. Reading configuration \ file /etc/masterha_default.cnf and /etc/mha/app01.cnf again, and trying to connect \ to all servers to check server status.. ... [info] * Phase 1: Configuration Check Phase.. ... [info] ** Phase 1: Configuration Check Phase completed.

- 03 MHA进行节点关闭,选择完新的主节点后会将原有主节点的VIP地址消除

[info] * Phase 2: Dead Master Shutdown Phase.. [info] Forcing shutdown so that applications never connect to the current master.. [info] Executing master IP deactivation script: [info] /usr/local/bin/master_ip_failover --orig_master_host=10.0.0.52 --orig_master_ip=10.0.0.52 --orig_master_port=3306 --command=stopssh --ssh_user=root [warning] shutdown_script is not set. Skipping explicit shutting down of the dead master. [info] * Phase 2: Dead Master Shutdown Phase completed.

- 04 MHA进行节点切换,在新的主节点上进行非同步数据信息的补偿

[info] * Phase 3: Master Recovery Phase.. [info] * Phase 3.1: Getting Latest Slaves Phase.. .... [info] * Phase 3.3: Determining New Master Phase.. [info] New master is 10.0.0.51(10.0.0.51:3306) [info] Starting master failover.. [info] * Phase 3.3: New Master Recovery Phase.. [info] Executing binlog save command: save_binary_logs --command=save --start_file=mysql-bin.000002 --start_pos=1201 --output_file=/var/tmp/saved_binlog_binlog1_20230102153233.binlog --handle_raw_binlog=0 --skip_filter=1 --disable_log_bin=0 --manager_version=0.58 --oldest_version=8.0.26 --binlog_dir=/data/binlog_server/ [info] Additional events were not found from the binlog server. No need to save. Enabling the VIP - 10.0.0.50/24 on the new master - 10.0.0.51[info] OK. [info] ** Finished master recovery successfully. [info] * Phase 3: Master Recovery Phase completed.

- 05 MHA进行主从重构,将从库连接到新的主库上

[info] * Phase 4: Slaves Recovery Phase.. [info] * Phase 4.1: Starting Slaves in parallel.. [info] -- Slave recovery on host 10.0.0.53(10.0.0.53:3306) started, pid: 8659. Check tmp log /var/log/app01/10.0.0.53_3306_20250926182323.log if it takes time.. [info] Log messages from 10.0.0.53 ... [info] Resetting slave 10.0.0.53(10.0.0.53:3306) and starting replication from the new master 10.0.0.51(10.0.0.51:3306).. [info] Executed CHANGE MASTER. [info] Slave started. [info] gtid_wait(a9977de8-9a01-11f0-8f55-000c29e3dc70:1-5, adfada3d-9a01-11f0-9024-000c290a3b5f:1-5) completed on 10.0.0.53(10.0.0.53:3306). Executed 0 events. [info] End of log messages from 10.0.0.53. [info] -- Slave on host 10.0.0.53(10.0.0.53:3306) started. [info] All new slave servers recovered successfully.

- 06 MHA切换完毕过程,架构故障转移切换完毕后做清理阶段,并进行最终汇报

[info] * Phase 5: New master cleanup phase.. ----- Failover Report ----- app01: MySQL Master failover 10.0.0.52(10.0.0.52:3306) to 10.0.0.51(10.0.0.51:3306) succeeded Master 10.0.0.52(10.0.0.52:3306) is down! Check MHA Manager logs at db03:/var/log/mha/app01/manager for details. [info] Sending mail.. 邮件发送成功: [MHA通知] 未知事件

数据库服务高可用维护操作

实现MHA高可用主节点在线切换(手工操作)

可以在主库没有故障的情况下,利用手工方式将主库业务切换到其它的从库节点上,从而解放原有主库节点(维护性操作时应用);

#!/usr/bin/env perl use strict; use warnings FATAL => 'all'; use Getopt::Long; use MHA::DBHelper; use MHA::NodeUtil; use Time::HiRes qw( sleep gettimeofday tv_interval ); use Data::Dumper; my $_tstart; my $_running_interval = 0.1; my ( $command, $orig_master_is_new_slave, $orig_master_host, $orig_master_ip, $orig_master_port, $orig_master_user, $orig_master_password, $orig_master_ssh_user, $new_master_host, $new_master_ip, $new_master_port, $new_master_user, $new_master_password, $new_master_ssh_user, ); # VIP配置 - 根据您的网络环境调整 my $vip = '10.0.0.50'; my $brdc = '10.0.0.255'; my $ifdev = 'eth0'; my $key = '1'; my $ssh_start_vip = "/usr/sbin/ip addr add $vip/24 brd $brdc dev $ifdev label $ifdev:$key;/usr/sbin/arping -q -A -c 1 -I $ifdev $vip;iptables -F;"; my $ssh_stop_vip = "/usr/sbin/ip addr del $vip/24 dev $ifdev label $ifdev:$key"; my $ssh_check_vip = "/usr/sbin/ip addr show dev $ifdev | grep -w $vip"; GetOptions( 'command=s' => \$command, 'orig_master_is_new_slave' => \$orig_master_is_new_slave, 'orig_master_host=s' => \$orig_master_host, 'orig_master_ip=s' => \$orig_master_ip, 'orig_master_port=i' => \$orig_master_port, 'orig_master_user=s' => \$orig_master_user, 'orig_master_password=s' => \$orig_master_password, 'orig_master_ssh_user=s' => \$orig_master_ssh_user, 'new_master_host=s' => \$new_master_host, 'new_master_ip=s' => \$new_master_ip, 'new_master_port=i' => \$new_master_port, 'new_master_user=s' => \$new_master_user, 'new_master_password=s' => \$new_master_password, 'new_master_ssh_user=s' => \$new_master_ssh_user, ); exit &main(); sub current_time_us { my ( $sec, $microsec ) = gettimeofday(); my $curdate = localtime($sec); return $curdate . " " . sprintf( "%06d", $microsec ); } sub sleep_until { my $elapsed = tv_interval($_tstart); if ( $_running_interval > $elapsed ) { sleep( $_running_interval - $elapsed ); } } sub get_threads_util { my $dbh = shift; my $my_connection_id = shift; my $running_time_threshold = shift; my $type = shift; $running_time_threshold = 0 unless ($running_time_threshold); $type = 0 unless ($type); my @threads; my $sth = $dbh->prepare("SHOW PROCESSLIST"); $sth->execute(); while ( my $ref = $sth->fetchrow_hashref() ) { my $id = $ref->{Id}; my $user = $ref->{User}; my $host = $ref->{Host}; my $command = $ref->{Command}; my $state = $ref->{State}; my $query_time = $ref->{Time}; my $info = $ref->{Info}; $info =~ s/^\s*(.*?)\s*$/$1/ if defined($info); next if ( $my_connection_id == $id ); next if ( defined($query_time) && $query_time < $running_time_threshold ); next if ( defined($command) && $command eq "Binlog Dump" ); next if ( defined($user) && $user eq "system user" ); next if ( defined($command) && $command eq "Sleep" && defined($query_time) && $query_time >= 1 ); if ( $type >= 1 ) { next if ( defined($command) && $command eq "Sleep" ); next if ( defined($command) && $command eq "Connect" ); } if ( $type >= 2 ) { next if ( defined($info) && $info =~ m/^select/i ); next if ( defined($info) && $info =~ m/^show/i ); } push @threads, $ref; } return @threads; } # 检查VIP是否已存在 sub check_vip_exists { my $host = shift; my $ssh_user = shift; my $result = `ssh $ssh_user\@$host \" $ssh_check_vip \"`; return $result ? 1 : 0; } # 启动VIP sub start_vip { print current_time_us() . " Checking if VIP $vip already exists on $new_master_host...\n"; if (check_vip_exists($new_master_host, $new_master_ssh_user)) { print current_time_us() . " VIP $vip already exists on $new_master_host, skipping.\n"; return 1; } print current_time_us() . " Enabling VIP $vip on new master: $new_master_host \n"; my $result = `ssh $new_master_ssh_user\@$new_master_host \" $ssh_start_vip \" 2>&1`; if ($? == 0) { print current_time_us() . " VIP enabled successfully.\n"; # 验证VIP是否真的启动了 sleep(1); if (check_vip_exists($new_master_host, $new_master_ssh_user)) { print current_time_us() . " VIP verification: VIP $vip is now active on $new_master_host.\n"; return 1; } else { print current_time_us() . " WARNING: VIP command succeeded but VIP is not visible on interface.\n"; return 0; } } else { print current_time_us() . " ERROR: Failed to enable VIP: $result\n"; return 0; } } # 停止VIP sub stop_vip { print current_time_us() . " Checking if VIP $vip exists on $orig_master_host...\n"; unless (check_vip_exists($orig_master_host, $orig_master_ssh_user)) { print current_time_us() . " VIP $vip not found on $orig_master_host, skipping.\n"; return 1; } print current_time_us() . " Disabling VIP $vip on old master: $orig_master_host \n"; my $result = `ssh $orig_master_ssh_user\@$orig_master_host \" $ssh_stop_vip \" 2>&1`; if ($? == 0) { print current_time_us() . " VIP disabled successfully.\n"; # 验证VIP是否真的停止了 sleep(1); unless (check_vip_exists($orig_master_host, $orig_master_ssh_user)) { print current_time_us() . " VIP verification: VIP $vip is now removed from $orig_master_host.\n"; return 1; } else { print current_time_us() . " WARNING: VIP stop command succeeded but VIP is still visible on interface.\n"; return 0; } } else { print current_time_us() . " ERROR: Failed to disable VIP: $result\n"; return 0; } } sub main { if ( $command eq "stop" ) { ## Gracefully killing connections on the current master my $exit_code = 1; eval { ## Setting read_only=1 on the new master (to avoid accident) my $new_master_handler = new MHA::DBHelper(); # args: hostname, port, user, password, raise_error(die_on_error)_or_not $new_master_handler->connect( $new_master_ip, $new_master_port, $new_master_user, $new_master_password, 1 ); print current_time_us() . " Set read_only on the new master.. "; $new_master_handler->enable_read_only(); if ( $new_master_handler->is_read_only() ) { print "ok.\n"; } else { die "Failed!\n"; } $new_master_handler->disconnect(); # Connecting to the orig master, die if any database error happens my $orig_master_handler = new MHA::DBHelper(); $orig_master_handler->connect( $orig_master_ip, $orig_master_port, $orig_master_user, $orig_master_password, 1 ); ## Waiting for N * 100 milliseconds so that current connections can exit my $time_until_read_only = 15; $_tstart = [gettimeofday]; my @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); while ( $time_until_read_only > 0 && $#threads >= 0 ) { if ( $time_until_read_only % 5 == 0 ) { printf "%s Waiting all running %d threads are disconnected.. (max %d milliseconds)\n", current_time_us(), $#threads + 1, $time_until_read_only * 100; if ( $#threads < 5 ) { print Data::Dumper->new( [$_] )->Indent(0)->Terse(1)->Dump . "\n" foreach (@threads); } } sleep_until(); $_tstart = [gettimeofday]; $time_until_read_only--; @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); } ## Setting read_only=1 on the current master so that nobody(except SUPER) can write print current_time_us() . " Set read_only=1 on the orig master.. "; $orig_master_handler->enable_read_only(); if ( $orig_master_handler->is_read_only() ) { print "ok.\n"; } else { die "Failed!\n"; } ## Waiting for M * 100 milliseconds so that current update queries can complete my $time_until_kill_threads = 5; @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); while ( $time_until_kill_threads > 0 && $#threads >= 0 ) { if ( $time_until_kill_threads % 5 == 0 ) { printf "%s Waiting all running %d queries are disconnected.. (max %d milliseconds)\n", current_time_us(), $#threads + 1, $time_until_kill_threads * 100; if ( $#threads < 5 ) { print Data::Dumper->new( [$_] )->Indent(0)->Terse(1)->Dump . "\n" foreach (@threads); } } sleep_until(); $_tstart = [gettimeofday]; $time_until_kill_threads--; @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); } ## 停止旧主库上的VIP &stop_vip(); ## Terminating all threads print current_time_us() . " Killing all application threads..\n"; $orig_master_handler->kill_threads(@threads) if ( $#threads >= 0 ); print current_time_us() . " done.\n"; $orig_master_handler->disconnect(); $exit_code = 0; }; if ($@) { warn "Got Error: $@\n"; exit $exit_code; } exit $exit_code; } elsif ( $command eq "start" ) { ## Activating master ip on the new master my $exit_code = 10; eval { my $new_master_handler = new MHA::DBHelper(); # args: hostname, port, user, password, raise_error_or_not $new_master_handler->connect( $new_master_ip, $new_master_port, $new_master_user, $new_master_password, 1 ); ## Set read_only=0 on the new master print current_time_us() . " Set read_only=0 on the new master.\n"; $new_master_handler->disable_read_only(); $new_master_handler->disconnect(); ## 在新主库上启动VIP if (&start_vip()) { $exit_code = 0; } else { $exit_code = 10; # VIP启动失败,但继续执行 } }; if ($@) { warn "Got Error: $@\n"; exit $exit_code; } exit $exit_code; } elsif ( $command eq "status" ) { # 检查VIP状态 print "VIP Status:\n"; print "Old Master ($orig_master_host): " . (check_vip_exists($orig_master_host, $orig_master_ssh_user) ? "VIP EXISTS" : "VIP NOT FOUND") . "\n"; print "New Master ($new_master_host): " . (check_vip_exists($new_master_host, $new_master_ssh_user) ? "VIP EXISTS" : "VIP NOT FOUND") . "\n"; exit 0; } else { &usage(); exit 1; } } sub usage { print "Usage: master_ip_online_change --command=start|stop|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --orig_master_user=user --orig_master_password=password --orig_master_ssh_user=sshuser --new_master_host=host --new_master_ip=ip --new_master_port=port --new_master_user=user --new_master_password=password --new_master_ssh_user=sshuser \n"; die; }

# 关闭MHA服务程序 masterha_stop --conf=/etc/mha/app01.cnf -- 关闭mha程序是保证手工切换时,不会受到mha自动切换的影响 # 执行MHA手工切换 masterha_master_switch --conf=/etc/mha/app01.cnf --master_state=alive \

--new_master_host=10.0.0.52 --orig_master_is_new_slave --running_updates_limit=10000 masterha_master_switch:MHA Manager 的主备切换工具 --master_state=alive:表名当前主库处于正常运行状态

--new_master_host=10.0.0.52:手动指定新的主库服务器地址

--orig_master_is_new_slave:在切换完成后,将原主库配置为新主库的从库

--running_updates_limit=10000:设置一个安全阈值,用于主从控制切换(主库上正在执行的并发线程数,超过该值会暂停或中止切换操作)

FLUSH NO_WRITE_TO_BINLOG TABLES

在不记录二进制日志的情况下刷新所有表,确保刷新操作不会复制到从库

刷新所有表的作用:为了确保所有表的缓存数据写入磁盘并关闭所有打开的表,保证数据一致性和操作安全性

It is better to execute FLUSH NO_WRITE_TO_BINLOG TABLES on the master before switching. \

Is it ok to execute on 10.0.0.51(10.0.0.51:3306)? (YES/no):

-- 询问是否同意在当前主库(10.0.0.51)上执行表刷新操作

Starting master switch from 10.0.0.51(10.0.0.51:3306) to 10.0.0.52(10.0.0.52:3306)? (yes/NO):

-- 询问是否开始从10.0.0.51切换到10.0.0.52的主从切换操作

master_ip_online_change_script is not defined. If you do not disable writes on the current \

master manually, applications keep writing on the current master. Is it ok to proceed? (yes/NO):

-- 问题:MHA发现master_ip_online_change_script参数没有正确定义或脚本文件不存在

-- 风险:如果没有VIP切换脚本,MHA无法自动转移虚拟IP地址

-- 后果:应用程序可能继续向旧的主库写入数据,导致数据不一致

# 出现的问题

mha进程关闭

vip的地址没有实现飘逸(需要重新启动mha进程,这会导致新从库的日志内容比旧从库的日志内容多)

# 重启MHA服务

[root@db03 ~]# masterha_stop --conf=/etc/mha/app01.cnf

[root@db03 ~]# nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \

--ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 &

[root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf

应用master_ip_online_change_script功能脚本

MHA在线切换脚本master_ip_online_change结合VIP-阿里云开发者社区

#!/usr/bin/env perl use strict; use warnings FATAL => 'all'; use Getopt::Long; use MHA::DBHelper; use MHA::NodeUtil; use Time::HiRes qw( sleep gettimeofday tv_interval ); use Data::Dumper; my $_tstart; my $_running_interval = 0.1; my ( $command, $orig_master_is_new_slave, $orig_master_host, $orig_master_ip, $orig_master_port, $orig_master_user, $orig_master_password, $orig_master_ssh_user, $new_master_host, $new_master_ip, $new_master_port, $new_master_user, $new_master_password, $new_master_ssh_user, ); # VIP配置 - 根据您的网络环境调整 my $vip = '10.0.0.50'; my $brdc = '10.0.0.255'; my $ifdev = 'eth0'; my $key = '1'; my $ssh_start_vip = "/usr/sbin/ip addr add $vip/24 brd $brdc dev $ifdev label $ifdev:$key;/usr/sbin/arping -q -A -c 1 -I $ifdev $vip;iptables -F;"; my $ssh_stop_vip = "/usr/sbin/ip addr del $vip/24 dev $ifdev label $ifdev:$key"; my $ssh_check_vip = "/usr/sbin/ip addr show dev $ifdev | grep -w $vip"; GetOptions( 'command=s' => \$command, 'orig_master_is_new_slave' => \$orig_master_is_new_slave, 'orig_master_host=s' => \$orig_master_host, 'orig_master_ip=s' => \$orig_master_ip, 'orig_master_port=i' => \$orig_master_port, 'orig_master_user=s' => \$orig_master_user, 'orig_master_password=s' => \$orig_master_password, 'orig_master_ssh_user=s' => \$orig_master_ssh_user, 'new_master_host=s' => \$new_master_host, 'new_master_ip=s' => \$new_master_ip, 'new_master_port=i' => \$new_master_port, 'new_master_user=s' => \$new_master_user, 'new_master_password=s' => \$new_master_password, 'new_master_ssh_user=s' => \$new_master_ssh_user, ); exit &main(); sub current_time_us { my ( $sec, $microsec ) = gettimeofday(); my $curdate = localtime($sec); return $curdate . " " . sprintf( "%06d", $microsec ); } sub sleep_until { my $elapsed = tv_interval($_tstart); if ( $_running_interval > $elapsed ) { sleep( $_running_interval - $elapsed ); } } sub get_threads_util { my $dbh = shift; my $my_connection_id = shift; my $running_time_threshold = shift; my $type = shift; $running_time_threshold = 0 unless ($running_time_threshold); $type = 0 unless ($type); my @threads; my $sth = $dbh->prepare("SHOW PROCESSLIST"); $sth->execute(); while ( my $ref = $sth->fetchrow_hashref() ) { my $id = $ref->{Id}; my $user = $ref->{User}; my $host = $ref->{Host}; my $command = $ref->{Command}; my $state = $ref->{State}; my $query_time = $ref->{Time}; my $info = $ref->{Info}; $info =~ s/^\s*(.*?)\s*$/$1/ if defined($info); next if ( $my_connection_id == $id ); next if ( defined($query_time) && $query_time < $running_time_threshold ); next if ( defined($command) && $command eq "Binlog Dump" ); next if ( defined($user) && $user eq "system user" ); next if ( defined($command) && $command eq "Sleep" && defined($query_time) && $query_time >= 1 ); if ( $type >= 1 ) { next if ( defined($command) && $command eq "Sleep" ); next if ( defined($command) && $command eq "Connect" ); } if ( $type >= 2 ) { next if ( defined($info) && $info =~ m/^select/i ); next if ( defined($info) && $info =~ m/^show/i ); } push @threads, $ref; } return @threads; } # 检查VIP是否已存在 sub check_vip_exists { my $host = shift; my $ssh_user = shift; my $result = `ssh $ssh_user\@$host \" $ssh_check_vip \"`; return $result ? 1 : 0; } # 启动VIP sub start_vip { print current_time_us() . " Checking if VIP $vip already exists on $new_master_host...\n"; if (check_vip_exists($new_master_host, $new_master_ssh_user)) { print current_time_us() . " VIP $vip already exists on $new_master_host, skipping.\n"; return 1; } print current_time_us() . " Enabling VIP $vip on new master: $new_master_host \n"; my $result = `ssh $new_master_ssh_user\@$new_master_host \" $ssh_start_vip \" 2>&1`; if ($? == 0) { print current_time_us() . " VIP enabled successfully.\n"; # 验证VIP是否真的启动了 sleep(1); if (check_vip_exists($new_master_host, $new_master_ssh_user)) { print current_time_us() . " VIP verification: VIP $vip is now active on $new_master_host.\n"; return 1; } else { print current_time_us() . " WARNING: VIP command succeeded but VIP is not visible on interface.\n"; return 0; } } else { print current_time_us() . " ERROR: Failed to enable VIP: $result\n"; return 0; } } # 停止VIP sub stop_vip { print current_time_us() . " Checking if VIP $vip exists on $orig_master_host...\n"; unless (check_vip_exists($orig_master_host, $orig_master_ssh_user)) { print current_time_us() . " VIP $vip not found on $orig_master_host, skipping.\n"; return 1; } print current_time_us() . " Disabling VIP $vip on old master: $orig_master_host \n"; my $result = `ssh $orig_master_ssh_user\@$orig_master_host \" $ssh_stop_vip \" 2>&1`; if ($? == 0) { print current_time_us() . " VIP disabled successfully.\n"; # 验证VIP是否真的停止了 sleep(1); unless (check_vip_exists($orig_master_host, $orig_master_ssh_user)) { print current_time_us() . " VIP verification: VIP $vip is now removed from $orig_master_host.\n"; return 1; } else { print current_time_us() . " WARNING: VIP stop command succeeded but VIP is still visible on interface.\n"; return 0; } } else { print current_time_us() . " ERROR: Failed to disable VIP: $result\n"; return 0; } } sub main { if ( $command eq "stop" ) { ## Gracefully killing connections on the current master my $exit_code = 1; eval { ## Setting read_only=1 on the new master (to avoid accident) my $new_master_handler = new MHA::DBHelper(); # args: hostname, port, user, password, raise_error(die_on_error)_or_not $new_master_handler->connect( $new_master_ip, $new_master_port, $new_master_user, $new_master_password, 1 ); print current_time_us() . " Set read_only on the new master.. "; $new_master_handler->enable_read_only(); if ( $new_master_handler->is_read_only() ) { print "ok.\n"; } else { die "Failed!\n"; } $new_master_handler->disconnect(); # Connecting to the orig master, die if any database error happens my $orig_master_handler = new MHA::DBHelper(); $orig_master_handler->connect( $orig_master_ip, $orig_master_port, $orig_master_user, $orig_master_password, 1 ); ## Waiting for N * 100 milliseconds so that current connections can exit my $time_until_read_only = 15; $_tstart = [gettimeofday]; my @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); while ( $time_until_read_only > 0 && $#threads >= 0 ) { if ( $time_until_read_only % 5 == 0 ) { printf "%s Waiting all running %d threads are disconnected.. (max %d milliseconds)\n", current_time_us(), $#threads + 1, $time_until_read_only * 100; if ( $#threads < 5 ) { print Data::Dumper->new( [$_] )->Indent(0)->Terse(1)->Dump . "\n" foreach (@threads); } } sleep_until(); $_tstart = [gettimeofday]; $time_until_read_only--; @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); } ## Setting read_only=1 on the current master so that nobody(except SUPER) can write print current_time_us() . " Set read_only=1 on the orig master.. "; $orig_master_handler->enable_read_only(); if ( $orig_master_handler->is_read_only() ) { print "ok.\n"; } else { die "Failed!\n"; } ## Waiting for M * 100 milliseconds so that current update queries can complete my $time_until_kill_threads = 5; @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); while ( $time_until_kill_threads > 0 && $#threads >= 0 ) { if ( $time_until_kill_threads % 5 == 0 ) { printf "%s Waiting all running %d queries are disconnected.. (max %d milliseconds)\n", current_time_us(), $#threads + 1, $time_until_kill_threads * 100; if ( $#threads < 5 ) { print Data::Dumper->new( [$_] )->Indent(0)->Terse(1)->Dump . "\n" foreach (@threads); } } sleep_until(); $_tstart = [gettimeofday]; $time_until_kill_threads--; @threads = get_threads_util( $orig_master_handler->{dbh}, $orig_master_handler->{connection_id} ); } ## 停止旧主库上的VIP &stop_vip(); ## Terminating all threads print current_time_us() . " Killing all application threads..\n"; $orig_master_handler->kill_threads(@threads) if ( $#threads >= 0 ); print current_time_us() . " done.\n"; $orig_master_handler->disconnect(); $exit_code = 0; }; if ($@) { warn "Got Error: $@\n"; exit $exit_code; } exit $exit_code; } elsif ( $command eq "start" ) { ## Activating master ip on the new master my $exit_code = 10; eval { my $new_master_handler = new MHA::DBHelper(); # args: hostname, port, user, password, raise_error_or_not $new_master_handler->connect( $new_master_ip, $new_master_port, $new_master_user, $new_master_password, 1 ); ## Set read_only=0 on the new master print current_time_us() . " Set read_only=0 on the new master.\n"; $new_master_handler->disable_read_only(); $new_master_handler->disconnect(); ## 在新主库上启动VIP if (&start_vip()) { $exit_code = 0; } else { $exit_code = 10; # VIP启动失败,但继续执行 } }; if ($@) { warn "Got Error: $@\n"; exit $exit_code; } exit $exit_code; } elsif ( $command eq "status" ) { # 检查VIP状态 print "VIP Status:\n"; print "Old Master ($orig_master_host): " . (check_vip_exists($orig_master_host, $orig_master_ssh_user) ? "VIP EXISTS" : "VIP NOT FOUND") . "\n"; print "New Master ($new_master_host): " . (check_vip_exists($new_master_host, $new_master_ssh_user) ? "VIP EXISTS" : "VIP NOT FOUND") . "\n"; exit 0; } else { &usage(); exit 1; } } sub usage { print "Usage: master_ip_online_change --command=start|stop|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --orig_master_user=user --orig_master_password=password --orig_master_ssh_user=sshuser --new_master_host=host --new_master_ip=ip --new_master_port=port --new_master_user=user --new_master_password=password --new_master_ssh_user=sshuser \n"; die; }

[root@db03 ~]# vim /usr/local/bin/mha_vip_online_change_script

[root@db03 ~]# chmod +x /usr/local/bin/mha_vip_online_change_script

[root@db03 ~]# vim /etc/mha/app01.cnf master_ip_online_change_script=/usr/local/bin/mha_vip_online_change_script

master_ip_failover_script:在故障切换时自动将VIP从故障主库转移到新主库的脚本。

master_ip_online_change_script:在计划内主库切换时自动将VIP从旧主库迁移到新主库的脚本。

# 重启MHA服务 [root@db03 ~]# masterha_stop --conf=/etc/mha/app01.cnf [root@db03 ~]# nohup masterha_manager --conf=/etc/mha/app01.cnf --remove_dead_master_conf \ --ignore_last_failover < /dev/null> /var/log/mha/app01/manager.log 2>&1 & [root@db03 ~]# masterha_check_status --conf=/etc/mha/app01.cnf

# 之后从新之心对应的在线切换操作

错误记录

MHA高可用:masterha_check_repl error 汇总_mha repl不过-CSDN博客

[root@db03 bin]# masterha_check_repl --conf=/etc/mha/app01.cnf Thu Sep 25 15:36:51 2025 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln208] Slaves settings check failed! Thu Sep 25 15:36:51 2025 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln416] Slave configuration failed. Thu Sep 25 15:36:51 2025 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln427] Error happened on checking configurations. at /usr/bin/masterha_check_repl line 48. Thu Sep 25 15:36:51 2025 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln525] Error happened on monitoring servers.

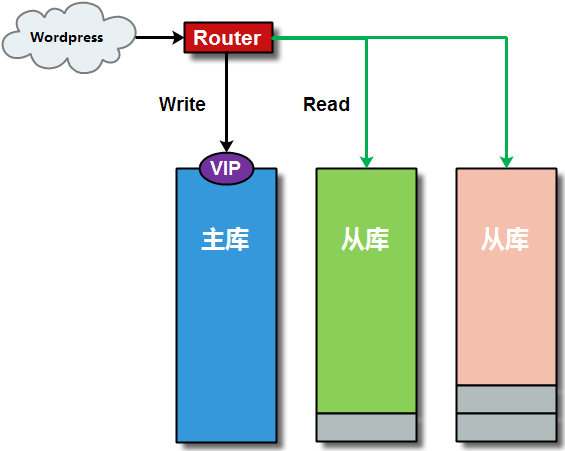

数据库服务读写分离

数据库服务读写分析原理分析

读写分离架构最终目的:实现业务写的请求到达主库,实现业务读的请求到达从库,从而减少主库的压力,实现不同请求的压力分担;

可以利用读写分离中间件实现以上的功能需求:atlas(360公司出品) proxySQL

利用读写分离中间件的设置,当业务请求有select查询时,将请求发送给从库,当业务请求有update insert等修改时,将请求发送给主库

proxySQL是基于MySQL的一款开源的中间件的产品,是一个灵活的MySQL代理层,可以实现读写分离:

- proxySQL数据库中间件支持Query路由功能;

- pxoxySQL数据库中间件支持动态指定某个SQL进行缓存;

- proxySQL数据库中间件支持动态加载配置信息(无需重启ProxySQL服务)

- proxySQL数据库中间件支持故障切换和SQL的过滤功能(安全)

ProxySQL的参考网站连接:

https://github.com/sysown/proxysql/releases

数据库服务读写分离架构搭建

步骤一:读写分离架构部署环境规划

为了实现读写分离架构构建,需要准备好三节点数据库+GTID复制环境+MHA环境(普通主从环境也可以构建);

| 主机角色 | 主机名称 | 地址信息 |

|---|---|---|

| 主库服务器 | 10.0.0.51 | 3306 |

| 从库服务器 | 10.0.0.52 | 3306 |

| 从库服务器 | 10.0.0.53 (兼做管理节点) |

3306 |

步骤二:读写分离架构软件下载安装

[root@db03 ~]# rpm -ivh proxysql-2.7.3-1-centos7.x86_64.rpm -i表示安装软件包,-v表示显示详细安装信息,-h表示以哈希符号显示安装进度条

[root@db03 ~]# ll /etc/systemd/system/proxysql* # 默认会自动安装到system目录下

-rw-r--r-- 1 root root 392 Mar 9 2025 /etc/systemd/system/proxysql-initial.service

-rw-r--r-- 1 root root 826 Mar 9 2025 /etc/systemd/system/proxysql.service

[root@db03 ~]# systemctl start proxysql

[root@db03 ~]# netstat -lntup |grep proxysql

tcp 0 0 0.0.0.0:6032 0.0.0.0:* LISTEN 10829/proxysql

tcp 0 0 0.0.0.0:6033 0.0.0.0:* LISTEN 10829/proxysql

# 启动生成的6032端口为管理端口,用于配置数据库中间件的功能信息连接此端口

# 启动生成的6033端口为访问端口,用于提供对外的业务访问此端口

步骤三:读写分离架构软件管理配置

在连接进入6032端口之后,表示进行proxysql的管理终端环境,终端环境中会加载五个重要的功能库:

| 库信息 | 配置信息 | 解释说明 |

|---|---|---|

main |

mysql_servers | 表示后端可以连接mysql服务器的列表 |

| mysql_users | 表示配置后端数据库的连接账号和监控账号 | |

| mysql_query_rules | 表示指定query路由到后端不同服务器的规则列表 | |

| mysql_replication_hostgroups | 表示节点分组配置信息,可以配置多个写或读节点到一个组中 | |

| disk | 表示持久化的磁盘配置信息 | |

stats |

表示统计信息的汇总 | |

monitor |

表示监控收集的信息,比如数据库的监控状态等 | |

| stats_history | 表示收集的有关软件内部功能的历史指标 |

说明:一般服务是通过配置文件保存功能配置信息,proxySQL是通过数据库中的表进行配置信息的存储设置;

[root@db03 ~]# mysql -uadmin -padmin -h127.0.0.1 -P6032 mysql> show databases; +-----+---------------+-------------------------------------+ | seq | name | file | +-----+---------------+-------------------------------------+ | 0 | main | | | 2 | disk | /var/lib/proxysql/proxysql.db | | 3 | stats | | | 4 | monitor | | | 5 | stats_history | /var/lib/proxysql/proxysql_stats.db | +-----+---------------+-------------------------------------+ mysql> show tables; +----------------------------------------------------+ | tables | +----------------------------------------------------+ | coredump_filters | | global_variables | | mysql_aws_aurora_hostgroups | | mysql_collations | | mysql_firewall_whitelist_rules | | mysql_firewall_whitelist_sqli_fingerprints | | mysql_firewall_whitelist_users | | mysql_galera_hostgroups | | mysql_group_replication_hostgroups | | mysql_hostgroup_attributes | | mysql_query_rules | | mysql_query_rules_fast_routing | | mysql_replication_hostgroups | | mysql_servers | | mysql_servers_ssl_params | | mysql_users | | proxysql_servers | | restapi_routes | | runtime_checksums_values | | runtime_coredump_filters | | runtime_global_variables | | runtime_mysql_aws_aurora_hostgroups | | runtime_mysql_firewall_whitelist_rules | | runtime_mysql_firewall_whitelist_sqli_fingerprints | | runtime_mysql_firewall_whitelist_users | | runtime_mysql_galera_hostgroups | | runtime_mysql_group_replication_hostgroups | | runtime_mysql_hostgroup_attributes | | runtime_mysql_query_rules | | runtime_mysql_query_rules_fast_routing | | runtime_mysql_replication_hostgroups | | runtime_mysql_servers | | runtime_mysql_servers_ssl_params | | runtime_mysql_users | | runtime_proxysql_servers | | runtime_restapi_routes | | runtime_scheduler | | scheduler | +----------------------------------------------------+

-- 表名以runtiem_开头的表示proxySQL服务中当前运行的配置内容,不能直接修改,不带runtime是下文图中mem相关的配置

Proxy SQL管理接口的多层配置关系

ProxySQL三层配置系统

# 第一层:RUNTIME: 代表proxySQL当前正在使用的配置,无法直接修改此配置,必须要从下一层(MEM层)load加载进来; # 第二层:MEMORY(主要修改的配置表) memory层上面连接runtime层,下面连接disk持久化存储层; 可以在线操作ProxySQL配置,随意进行修改,不会影响生产环境,确认正常之后再加载到runtime和持久化保存到磁盘 具体修改操作方法为:insert、update、delete、select; # 第三层:DISK/CFG FILE 持久化配置信息,重启时可以从磁盘快速加载回来;

ProxySQL不同层次间移动配置信息

user相关配置

LOAD MYSQL USERS TO RUNTIME; -- MEM加载到runtime SAVE MYSQL USERS TO MEMORY; -- RUNTIME保存至MEM LOAD MYSQL USERS FROM DISK; -- DISK加载到MEM SAVE MYSQL USERS TO DISK; -- MEM保存至DISK LOAD MYSQL USERS FROM CONFIG; -- CFG加载到MEM

server服务相关配置

LOAD MYSQL SERVERS TO RUNTIME; -- MEM加载到RUNTIME SAVE MYSQL SERVERS TO MEMORY; -- RUNTIME保存至MEM LOAD MYSQL SERVERS FROM DISK; -- DISK加载到MEM SAVE MYSQL SERVERS TO DISK; -- MEM保存至DISK LOAD MYSQL SERVERS FROM CONFIG; -- CFG加载到MEM

mysql query rules相关配置

LOAD MYSQL QUERY RULES TO RUNTIME; -- MEM加载到RUNTIME SAVE MYSQL QUERY RULES TO MEMORY; -- RUNTIME保存至MEM LOAD MYSQL QUERY RULES FROM DISK; -- DISK加载到MEM SAVE MYSQL QUERY RULES TO DISK; -- MEM保存至DISK LOAD MYSQL QUERY RULES FROM CONFIG; -- CFG加载到MEM

mysql variables相关配置

LOAD MYSQL VARIABLES TO RUNTIME; -- MEM加载到RUNTIME SAVE MYSQL VARIABLES TO MEMORY; -- RUNTIME保存至MEM LOAD MYSQL VARIABLES FROM DISK; -- DISK加载到MEM SAVE MYSQL VARIABLES TO DISK; -- MEM保存至DISK LOAD MYSQL VARIABLES FROM CONFIG; -- CFG加载到MEM

需要注意:只有load到runtime状态时才会验证配置,在保存到mem或disk时,都不会发生任何警告或错误;

当load到runtime时,如果出现了错误信息,将恢复为之前保存的状态,这时可以根据错误日志信息做检查;

总结:日常配置过程大部分时间是在mem中进行配置,然后load到runtime,或者save到disk中,对于cfg很少使用;

ProxySQL基于SQL语句进行读写分离实验配置

| 操作说明 | 涉及数据表信息 | 涉及操作信息 |

|---|---|---|

| 设置从库只读模式 | read_only=1 | |

| 添加主机组信息 | mysql_replication_hostgroups | |

| 添加主机组节点信息 | mysql_servers | |

| 添加用户信息(监控用户 应用用户) | global_variables mysql_users |

|

| 添加读写分离规则 | mysql_query_rules |

在mysql_replication_hostgroups表中配置读写组编号

proxySQL会根据server的read only的取值将服务器进行分组:

- read_only=0的server,即master会被分到编号为10的写组

- read_only=1的server,即slave会被分到编号为20的读组;(

所以需要将从库设置:set global read_only=1)

mysql> insert into mysql_replication_hostgroups(writer_hostgroup,reader_hostgroup,comment) values(10,20,'proxy'); mysql> select * from mysql_replication_hostgroups; +------------------+------------------+------------+---------+ | writer_hostgroup | reader_hostgroup | check_type | comment | +------------------+------------------+------------+---------+ | 10 | 20 | read_only | proxy | +------------------+------------------+------------+---------+ writer_hostgroup 定义写操作的主机组编号; reader_hostgroup 定义读操作的主机组编号; check_type 指定用于检测服务器读写状态的检查类型; comment 用于添加该配置的备注说明 mysql> save mysql servers to disk; mysql> load mysql servers to runtime;

添加主机到ProxySQL

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values(10,'10.0.0.50',3306); mysql> insert into mysql_servers(hostgroup_id,hostname,port) values(20,'10.0.0.52',3306); mysql> insert into mysql_servers(hostgroup_id,hostname,port) values(20,'10.0.0.53',3306); mysql> select * from mysql_servers; +--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+ | hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment | +--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+ | 10 | 10.0.0.50 | 3306 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | | | 20 | 10.0.0.52 | 3306 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | | | 20 | 10.0.0.53 | 3306 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | | +--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

mysql> save mysql servers to disk;

mysql> load mysql servers to runtime;

创建监控用户,并开启监控

利用监控用户对后端节点的运行情况进行监控数据同步,一旦后端节点出现数据同步异常,就不要再向故障节点发送相应业务请求;

# 主库创建监控用户或者在proxysql中修改variables表配置信息 db01 [(none)]> create user monitor@'%' identified with mysql_native_password by 'monitor'; db01 [(none)]> grant replication client on *.* to monitor@'%'; # 修改变量方法一 db03 [(none)]> set mysql-monitor_username='monitor'; db03 [(none)]> set mysql-monitor_password='monitor'; # 修改变量方法二 db03 [(none)]>update global_variables set variable_value='monitor' where variable_name='mysql-monitor_username'; db03 [(none)]>update global_variables set variable_value='monitor' where variable_name='mysql-monitor_password'; db03 [(none)]>load mysql variables to runtime; db03 [(none)]>save mysql variables to disk;

db03 [(none)]>select * from mysql_server_connect_log; -- 这张表记录了所有尝试连接MySQL服务器的成功和失败信息 +-----------+------+------------------+-------------------------+---------------+ | hostname | port | time_start_us | connect_success_time_us | connect_error | +-----------+------+------------------+-------------------------+---------------+ | 10.0.0.50 | 3306 | 1759114335172474 | 2147 | NULL | | 10.0.0.52 | 3306 | 1759114335911205 | 2047 | NULL | | 10.0.0.53 | 3306 | 1759114336649926 | 773 | NULL | +-----------+------+------------------+-------------------------+---------------+ db03 [(none)]> select * from mysql_server_ping_log; -- 这张表专门用于记录对集群中每个成员服务器的连通性检查结果 +-----------+------+------------------+----------------------+----------------------------------------------+ | hostname | port | time_start_us | ping_success_time_us | ping_error | +-----------+------+------------------+----------------------+----------------------------------------------+ | 10.0.0.53 | 3306 | 1759114514920244 | 438 | NULL | | 10.0.0.52 | 3306 | 1759114514919868 | 916 | NULL | | 10.0.0.50 | 3306 | 1759114514920164 | 651 | NULL | +-----------+------+------------------+----------------------+----------------------------------------------+ db03 [(none)]> select * from mysql_server_read_only_log; -- 这张表记录只复制集群中每个成员read_only状态变化(获取主库或从库主机信息) +-----------+------+------------------+-----------------+-----------+-------+ | hostname | port | time_start_us | success_time_us | read_only | error | +-----------+------+------------------+-----------------+-----------+-------+ | 10.0.0.53 | 3306 | 1759114650009963 | 874 | 1 | NULL | | 10.0.0.52 | 3306 | 1759114650010060 | 839 | 1 | NULL | | 10.0.0.50 | 3306 | 1759114650009842 | 1091 | 0 | NULL | +-----------+------+------------------+-----------------+-----------+-------+ db03 [(none)]> select * from mysql_server_replication_lag_log; Empty set (0.00 sec) -- 这张表记录组复制集群中每个成员上观察到的复制延迟

创建应用用户信息

创建数据库应用用户信息,利用应用用户,可以使proxySQL进行数据库节点的操作管理;

mysql_users:该表用于管理通过ProxySQL连接后端MySQL服务器的用户凭证和基本路由信息

# 主库创建应用用户 db01 [(none)]> create user root@'%' identified with mysql_native_password by 'root'; db01 [(none)]> grant all on *.* to root@'%'; # 在proxysql中添加数据库节点的管理用户信息 db03 [(none)]>insert into mysql_users(username,password,default_hostgroup) \

values('root','root',10); -- username db03 [(none)]>load mysql users to runtime; Query OK, 0 rows affected (0.00 sec) db03 [(none)]>save mysql users to disk; Query OK, 0 rows affected (0.00 sec) # 早期版本,需要开启事务的持续化(忽略) update mysql_users set transaction_persistent=1 where username='root'; load mysql users to runtime; save mysql users to disk; -- 事务路由分配持续性,同一个事务的语句不会被分配到不同的组

实用的读写规则配置

> insert into mysql_query_rules(rule_id,active,match_pattern,destination_hostgroup,apply) values (1,1,'^select.*for update$',10,1); > insert into mysql_query_rules(rule_id,active,match_pattern,destination_hostgroup,apply) values (2,1,'^select',20,1); -- 其余数据库操作语句信息,默认路由放置到主节点进行执行 > load mysql query rules to runtime; > save mysql query rules to disk;

select ... for update规则的rule_id必须要小于普通的select规则的rule_id,proxySQL是根据rule_id的顺序进行规则匹配的;

测试读写分离效果

[root@xiaoQ-03 ~]# mysql -uroot -p123 -P6033 -h127.0.0.1 -e "begin;select @@server_id;commit" +-----------------+ | @@server_id | +-----------------+ | 51 | +-----------------+ -- 非查询操作走的是主节点 [root@xiaoQ-03 ~]# mysql -uroot -p123 -P6033 -h127.0.0.1 -e "select @@server_id;" +-----------------+ | @@server_id | +-----------------+ | 52 | +-----------------+ [root@xiaoQ-03 ~]# mysql -uroot -p123 -P6033 -h127.0.0.1 -e "select @@server_id;" +-----------------+ | @@server_id | +-----------------+ | 53 | +-----------------+ -- 查询操作走的是从节点 >select * from stats_mysql_query_digest\G -- 这个表对于分析SQL语句至关重要,是分析语句性能、定制路由规则指标的最主要来源

读写分离架构软件配置扩展

基于端口进行读写分离路由

# 修改proxySQL监听SQL流量的端口号,监听多端口信息 db03 [(none)]> set mysql-interfaces='0.0.0.0:6033;0.0.0.0:6034' # 使监听端口配置信息生效 db03 [(none)]> save mysql variables to disk; [root@db03 ~]# systemctl restart proxysql # 设定相应读写分离路由规则 db03 [(none)]> delete from mysql_query_rules; -- 为了测试效果,先清空已有规则信息 db03 [(none)]> insert into mysql_query_rules(rule_id,active,proxy_port,\ destination_hostgroup,apply) values(1,1,6033,10,1),(2,1,6034,20,1); db03 [(none)]> load mysql query rules to runtime; db03 [(none)]> save mysql query rules to disk; -- 除了基于端口进行分离,还可以基于监听地址(修改字段proxy_addr即可),也可以基于客户端地址(修改字段client_addr字段即可)

基于用户进行读写分离路由

db03 [(none)]> insert into mysql_users(username,password,default_hostgroup) \ values ('write','123',10),('reader','123',20); db03 [(none)]> load mysql users to runtime; db03 [(none)]> save mysql users to disk; db03 [(none)]> delete from mysql_query_rules; -- 为了测试效果,先清空已有规则信息 db03 [(none)]> insert into mysql_query_rules(rule_id,active,username,\ destination_hostgroup,apply) values (1,1,'write',10,1),(2,1,'reader',20,1); db03 [(none)]> load mysql users to runtime; db03 [(none)]> save mysql users to disk;

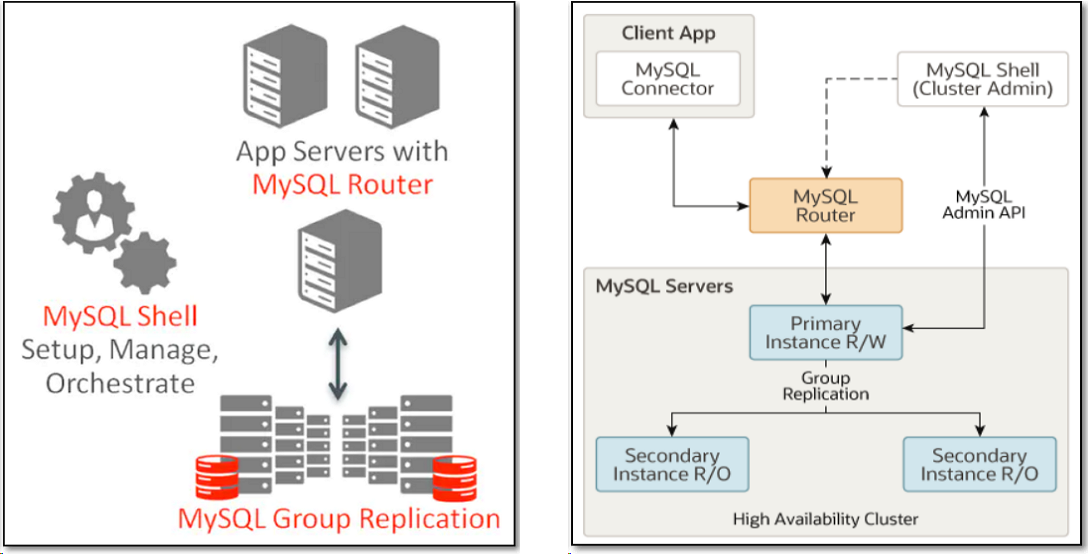

MIC(数据库高可用/读写分离集群)

MySQL8.0之后解决高可用、主从同步、读写分离原生方案

数据库服务架构集群概述

MySQLInnoDB集群提供了一个集成的,本地的,HA解决方案:MySQL InnoDB cluster;

MySQLInnoDB cluster是利用组复制的paxos协议,保障数据一致性,组复制支持单主模式和多主模式;

MySQLInnoDB cluster由以下几部分组成:

MySQL Servers with Group Replication:

向集群的所有成员复制数据,同时提供容错,自动故障转移和弹性伸缩;(MySQLServer5.7.17或更高的版本)

MySQL Router:

确保客户端请求是负载均衡的,并在任何数据库故障时,可以路由到正确的服务器;(MySQLRouter2.1.3或更高的版本)

MySQL shell:

通过内置的管理API创建管理Innodb集群,即统一管理MGR节点;(MySQLShell1.0.9或更高的版本)

数据库服务架构集群构建过程

构建架构主机规范说明

| IP | Hostname | Host role | install software |

| 10.0.0.51 | db01 | mic-master | mysql、mysqlsh |

| 10.0.0.52 | db02 | mic-slave01 | mysql、mysqlsh |

| 10.0.0.53 | db03 | mic-slave02 | mysql、mysqlsh |

| 10.0.0.54 | db04 | mix-manager | mysql、mysqldsh、mysql-router |

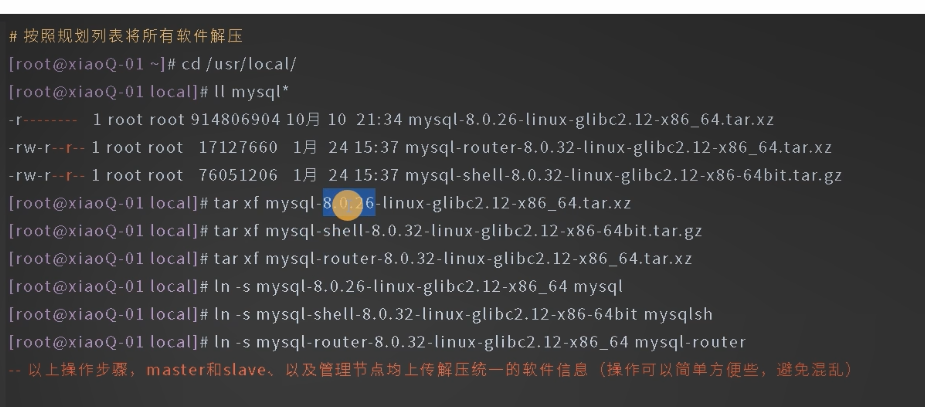

安装软件程序

配置环境变量

vim /etc/profile

export PATH=/usr/local/mysql/bin:/usr/local/mysqlsh/bin:/usr/local/mysql-router/bin:$PATH

source /etc/profile

将其进行域名解析

vim /etc/hosts

10.0.0.51 db01

10.0.0.52 db02

10.0.0.53 db03

10.0.0.54 db04

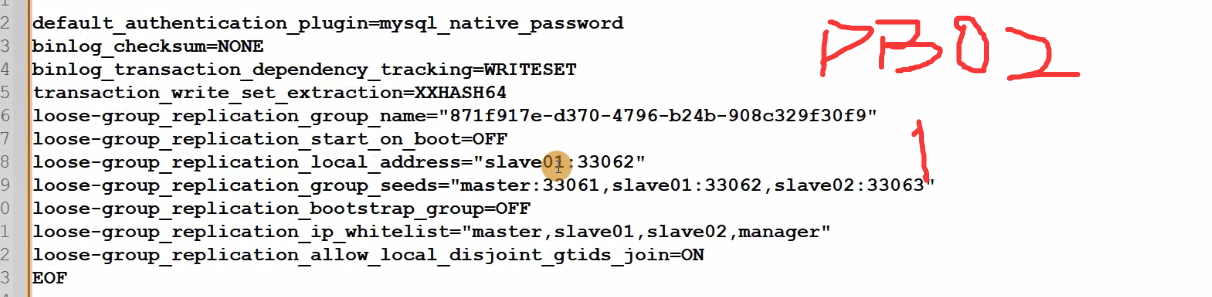

数据库初始化

pkill mysqld

rm -rf /data/3306/data/*

将三台主机进行初始化操作(此时还没有配置db04)

添加实例到mysqlsh中,使mysqlsh可以批量管理所有数据库节点

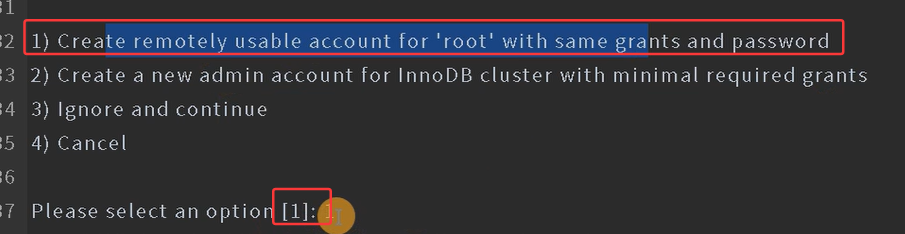

# 设置数据库本地root账户密码信息(所有数据节点均操作) db01 [(none)]>alter user root@'localhost' identified with mysql_nativee_password by 'root'; db02 [(none)]>alter user root@'localhost' identified with mysql_nativee_password by 'root'; db03 [(none)]>alter user root@'localhost' identified with mysql_nativee_password by 'root'; # 初始化配置集群节点实例(db01\db02\db03数据库节点均操作) mysqlsh > shell.connect('root@localhost:3306') ; -- 这是一个用于连接MySQL服务器实例的MySQL Shell命令,通过root用户连接到本地主机的3306端口 > dba.configureLocalinstance(); -- 快速配置当前连接的MySQL本地实例,使其满足MySQL Group Replication(组复制)的高可用集群要求

> dba.checkinstanceConfiguration("root@localhost:3306");

检查指定的MySQL实例当前的配置是否符合组建InnoDB Cluster(高可用集群)的要求(显示ok表示综上操作成功)

构建MGR主从架构

在现有数据库集群实例环境中,创建集群并添加节点(配置MGR-在管理节点上进行统一配置操控)

mysqlsh > shell.connect('root@10.0.0.51:3306') MySQL master:3306 ssl JS> -- 出现这个信息表示已经与主节点数据库服务建立连接 > var cluster=dba.createCluster('群组名') -- 创建MGR群组 等价于mgr中的 change master to master_user='repl',master_password='123' for channel '群组名' set global group_replication_bootstrap_group=ON; start group_replication; set global group_replication_bootstrap_group=OFF; 他会自动将第一个创建的连接的服务器作为引导节点 cluster.status() 查看群组信息 等价于MGR select * from performance_schema.replication_group_members; # 将从节点均加入到MGR集群中(如果断开了需要从新进入mysqlsh,并重新连接主节点) > var cluster=dba.getCluster('群组名'); -- 切换到指定的群组 > cluster.addinstance('root@10.0.0.52:3306'); -- 加入指定节点信息到群组中 Please select a recovery method [C]lone/[A]bort(default Abort):C 是否将主库的数据克隆到从库 > cluster.addinstance('root@10.0.0.53:3306'); -- 加入指定节点信息到群组中 Please select a recovery method [C]lone/[A]bort(default Abort):C 是否将主库的数据克隆到从库 cluster.status() 查看群组信息

构建MIC集群mysql-router中间件

# 注册router到集群架构中,生成myrouter目录,并生成启动程序和配置文件 [root@db04 ~]# cd /usr/local [root@db04 ~]# mysqlrouter --bootstrap root@db01:3306 -d myrouter --user=root 会在当前目录下生成对应的目录 Read/Write Connections: localhost:6446 Read/Only Connections: localhost:6447 # 启动mysql-router程序 cd /usr/loca/myrouter/ ./start.sh natstart -lntup |grep mysqld # 进行router代理程序连接测试 mysqlsh -uri root@localhost:6446 mysql -uroot -proot -h10.0.0.54 -P 6446 -- 读写分离测试 mysql -uroot -proot -h10.0.0.54 -P 6447

数据库服务架构集群管理命令

集群节点信息查询命令

# 连接主节点,并进行定义 mysqlsh > shell.connect('root@10.0.0.51:306'); > var cluster=dba.getCluster('群组名'); # 检查节点是否符合集群标准(是否加入集群) dba.checklnstanceConfiguration('root@master:3306'); # 集群结构信息描述 cluster.describe(); # 集群状态总览 cluster.status(); # 查看集群名字 dba.getCluster(); # 查看router信息 cluster.listRouters();

集群创建管理命令

# 创建集群节点 dba.createCluster() > var cluster=dba.createCluster('群组名'); # 添加集群节点 Cluster.addlinstance() > cluster.addinstance('root@slave01:3306'); # 移除集群节点 Cluster.removeinstance() > cluster.removeinstance('root@slave01:3306'); # 重新识别节点 Cluster.rejoininstance() > cluster.rejoininstance('root@slave01:3306'); -- 如果实例离开集群(丢失连接并且没有自动重新加入集群),可以通过Cluster.rejoininstance()方法将实例重新加入 # 在线切换实例 Cluster.setPrimaryinstance(instance) > cluster.setPrimaryinstance('root@slave01:3306'); > cluster.status(); > cluster.setPrimaryinstance('root@master:3306'); # 在线切换扩展 > cluster.switchToMultiPrimaryMode(); -- 切换为多主模式 > cluster.switchToSinglePrimaryMode('root@master:3306'); -- 切换为单主模式 -- 切换为多主模式后 6447端口(默认只读)接收读写,并且可通过该端口访问所有集群成员; 6446端口(默认读写),只能连接到其中一个成员(之前的primary成员)

集群节点故障处理操作

dba.rebootClusterFromCompleteOutage();

用于在集群完全断电后重新配置集群,如果以上操作失败,可以通过以下方式删除所有集群元素据

dba.dropMetadateSchema();

删除集群元数据,然后dba.createCluster()重建集群

cluster.removeRouterMetadata(router);

删除已经注册的router

浙公网安备 33010602011771号

浙公网安备 33010602011771号