Deploying Prometheus on K8s: A Hands-on Guide

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: original work; reproduction is not permitted, and violations will be pursued legally.


I. Deploying the Prometheus Operator environment

1. Prometheus Operator overview

1. What is Prometheus Operator

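Prometheus Operator (more precisely, the kube-prometheus stack built on it) is meant for K8S cluster monitoring, so it comes pre-configured to collect metrics from all Kubernetes components.

In addition, it ships a default set of dashboards and alerting rules.

Many of the useful dashboards and alerts come from the kubernetes-mixin project, which, like this project, provides composable jsonnet as a library that users can customize to their needs.

GitHub repository: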

https://github.com/prometheus-operator/kube-prometheus

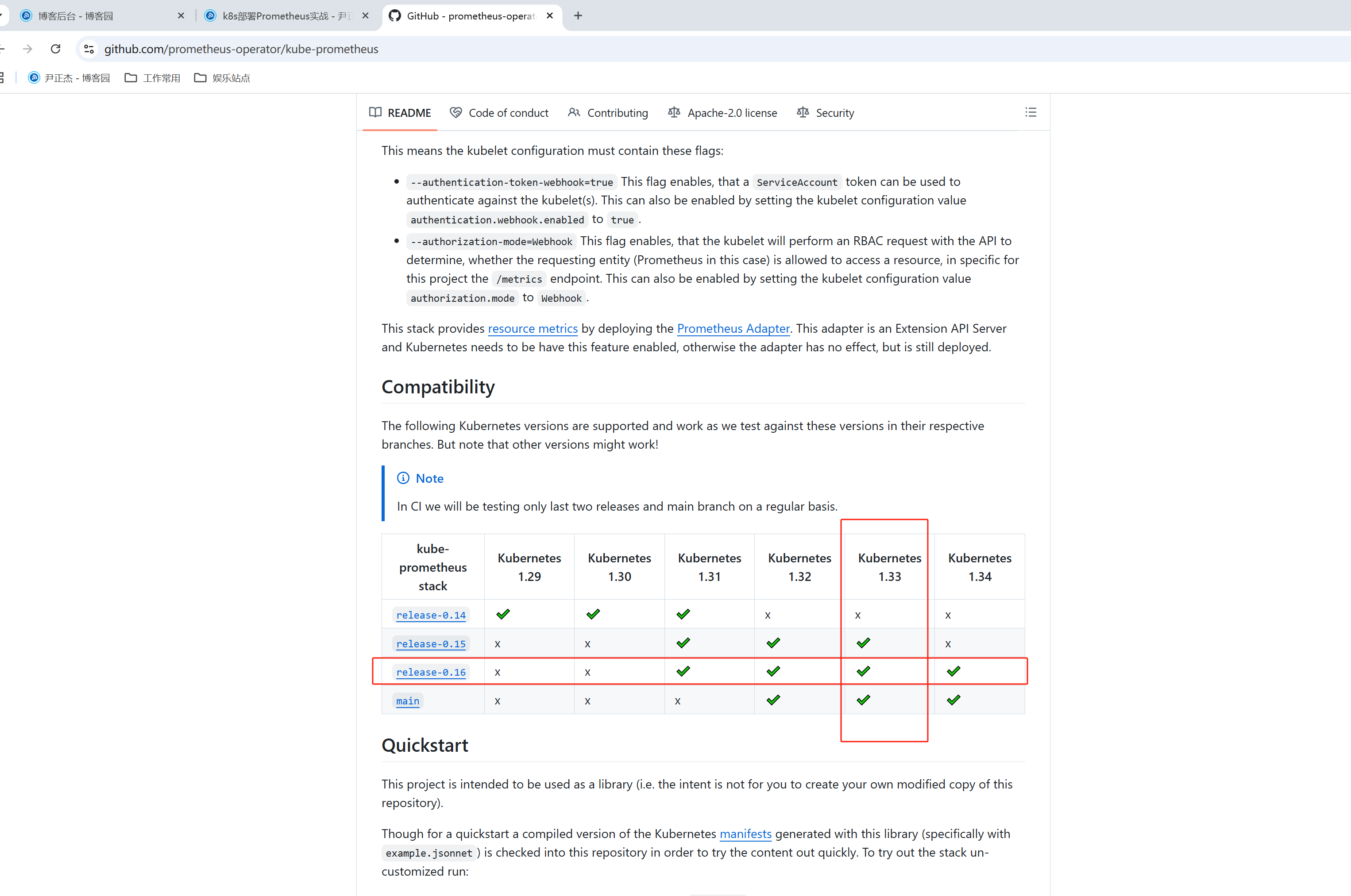

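2. Compatibility between Prometheus Operator (kube-prometheus) and K8S versions

As the figure above shows, pick a kube-prometheus release that the official compatibility matrix lists for your K8S version.

My K8S cluster runs '1.31.9', so I chose the latest kube-prometheus release, 'v0.16.0'.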

[root@master241 kube-prometheus-0.16.0]# kubectl get nodes -o wide
NAME        STATUS   ROLES           AGE    VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION       CONTAINER-RUNTIME
master241   Ready    control-plane   134d   v1.31.9   10.0.0.241    <none>        Ubuntu 22.04.4 LTS   5.15.0-144-generic   containerd://1.6.36
worker242   Ready    <none>          134d   v1.31.9   10.0.0.242    <none>        Ubuntu 22.04.4 LTS   5.15.0-144-generic   containerd://1.6.36
worker243   Ready    <none>          134d   v1.31.9   10.0.0.243    <none>        Ubuntu 22.04.4 LTS   5.15.0-144-generic   containerd://1.6.36
[root@master241 kube-prometheus-0.16.0]#

2. Deploying the Prometheus Operator environment

1. Download the source code

[root@master241 ~]# wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.16.0.tar.gz

2. Extract the archive

[root@master241 ~]# tar xf v0.16.0.tar.gz
[root@master241 ~]# cd kube-prometheus-0.16.0/
[root@master241 kube-prometheus-0.16.0]# ll
total 172
drwxrwxr-x 11 root root  4096 Aug 28 23:21 ./
drwx------ 12 root root  4096 Sep 27 16:18 ../
-rwxrwxr-x  1 root root   679 Aug 28 23:21 build.sh*
-rw-rw-r--  1 root root 17467 Aug 28 23:21 CHANGELOG.md
-rw-rw-r--  1 root root  2020 Aug 28 23:21 code-of-conduct.md
-rw-rw-r--  1 root root  3782 Aug 28 23:21 CONTRIBUTING.md
drwxrwxr-x  5 root root  4096 Aug 28 23:21 developer-workspace/
drwxrwxr-x  4 root root  4096 Aug 28 23:21 docs/
-rw-rw-r--  1 root root  2273 Aug 28 23:21 example.jsonnet
drwxrwxr-x  7 root root  4096 Aug 28 23:21 examples/
drwxrwxr-x  3 root root  4096 Aug 28 23:21 experimental/
drwxrwxr-x  4 root root  4096 Aug 28 23:21 .github/
-rw-rw-r--  1 root root   141 Aug 28 23:21 .gitignore
-rw-rw-r--  1 root root  1474 Aug 28 23:21 .gitpod.yml
-rw-rw-r--  1 root root  2246 Aug 28 23:21 go.mod
-rw-rw-r--  1 root root 16022 Aug 28 23:21 go.sum
drwxrwxr-x  3 root root  4096 Aug 28 23:21 jsonnet/
-rw-rw-r--  1 root root   400 Aug 28 23:21 jsonnetfile.json
-rw-rw-r--  1 root root  6584 Aug 28 23:21 jsonnetfile.lock.json
-rw-rw-r--  1 root root  1807 Aug 28 23:21 kubescape-exceptions.json
-rw-rw-r--  1 root root  4902 Aug 28 23:21 kustomization.yaml
-rw-rw-r--  1 root root 11325 Aug 28 23:21 LICENSE
-rw-rw-r--  1 root root  3565 Aug 28 23:21 Makefile
drwxrwxr-x  3 root root  4096 Aug 28 23:21 manifests/
-rw-rw-r--  1 root root   760 Aug 28 23:21 .mdox.validate.yaml
-rw-rw-r--  1 root root  8835 Aug 28 23:21 README.md
-rw-rw-r--  1 root root  5269 Aug 28 23:21 RELEASE.md
drwxrwxr-x  2 root root  4096 Aug 28 23:21 scripts/
drwxrwxr-x  3 root root  4096 Aug 28 23:21 tests/
[root@master241 kube-prometheus-0.16.0]#

3. Install Prometheus Operator

kubectl apply --server-side -f manifests/setup
kubectl wait \
    --for condition=Established \
    --all CustomResourceDefinition \
    --namespace=monitoring
kubectl apply -f manifests/

4. Check whether Prometheus was deployed successfully

[root@master241 kube-prometheus-0.16.0]# kubectl get pods -n monitoring -o wide
NAME                                  READY   STATUS    RESTARTS   AGE   IP               NODE        NOMINATED NODE   READINESS GATES
alertmanager-main-0                   2/2     Running   0          44m   10.100.207.28    worker243   <none>           <none>
alertmanager-main-1                   2/2     Running   0          44m   10.100.165.191   worker242   <none>           <none>
alertmanager-main-2                   2/2     Running   0          44m   10.100.207.50    worker243   <none>           <none>
blackbox-exporter-c867b65b9-6b25g     3/3     Running   0          49m   10.100.207.63    worker243   <none>           <none>
grafana-64f4bd5f67-99ffp              1/1     Running   0          49m   10.100.207.56    worker243   <none>           <none>
kube-state-metrics-7bf686dc45-4wxkr   3/3     Running   0          49m   10.100.165.168   worker242   <none>           <none>
node-exporter-24bc4                   2/2     Running   0          49m   10.0.0.243       worker243   <none>           <none>
node-exporter-dhznx                   2/2     Running   0          49m   10.0.0.241       master241   <none>           <none>
node-exporter-grc8j                   2/2     Running   0          49m   10.0.0.242       worker242   <none>           <none>
prometheus-adapter-784f566c54-979x9   1/1     Running   0          49m   10.100.165.131   worker242   <none>           <none>
prometheus-adapter-784f566c54-c4fwk   1/1     Running   0          49m   10.100.207.55    worker243   <none>           <none>
prometheus-k8s-0                      2/2     Running   0          44m   10.100.207.57    worker243   <none>           <none>
prometheus-k8s-1                      2/2     Running   0          44m   10.100.165.150   worker242   <none>           <none>
prometheus-operator-6df445c84-2jmfd   2/2     Running   0          49m   10.100.165.141   worker242   <none>           <none>
[root@master241 kube-prometheus-0.16.0]#

5. Modify the Grafana Service (svc)

[root@master231 kube-prometheus-0.16.0]# cat manifests/grafana-service.yaml
apiVersion: v1
kind: Service
metadata:
  ...
  name: grafana
  namespace: monitoring
spec:
  type: LoadBalancer  # Add this line; otherwise the Service type defaults to ClusterIP.
  ...
[root@master241 kube-prometheus-0.16.0]# kubectl apply -f manifests/grafana-service.yaml
service/grafana configured
[root@master241 kube-prometheus-0.16.0]# kubectl get svc -n monitoring grafana
NAME      TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
grafana   LoadBalancer   10.201.242.227   10.0.0.155    3000:24685/TCP   51m
[root@master241 kube-prometheus-0.16.0]#
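Editing manifests/grafana-service.yaml works, but the change has to be redone whenever the manifests are replaced by a newer release. A minimal alternative sketch, assuming you prefer to leave the upstream file untouched: keep only the changed field in a separate patch file (the file name grafana-svc-lb-patch.yaml is hypothetical) and apply it with `kubectl -n monitoring patch svc grafana --type merge --patch-file grafana-svc-lb-patch.yaml`.

```yaml
# grafana-svc-lb-patch.yaml (hypothetical file name)
# JSON merge patch: only the fields listed here are changed on the live Service.
spec:
  type: LoadBalancer
```

The rest of the Service (ports, selector) stays exactly as kube-prometheus generated it.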

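6. Access the Grafana WebUI

http://10.0.0.155:3000/

Default username and password: admin / admin

Known issue:

Grafana may be unreachable through the Service, although it can be reached through the NodePort of the node where the Grafana Pod is running.

If it is unreachable, use that NodePort: Grafana runs on worker243 here, so open "http://10.0.0.243:24685/login".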

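Postscript:

I tried to work around this with Traefik's Ingress and IngressRoute, but that did not help either: the Service itself is unreachable, and Traefik relies on the Service to find the backend Pods.

If this has to be solved later, one option is to learn iptables syntax, translate the Service's forwarding rules by hand, and add matching rules on the corresponding worker nodes.

If you cannot write iptables rules, "kubectl port-forward" also works: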

kubectl -n monitoring port-forward deploy/grafana 3000:3000 --address=0.0.0.0

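My guesses:

- A. The worker nodes may be short of resources; consider adding memory and CPU;

- B. The rules may not take effect in a kubeadm-deployed cluster; it is worth testing whether a binary-deployed cluster of the same version shows the same problem. This still needs to be verified; I hope fellow readers can help get to the bottom of it.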
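One more possibility worth checking, not verified on this cluster: kube-prometheus ships NetworkPolicy objects in manifests/ (for example manifests/grafana-networkPolicy.yaml) that, as far as I know, only admit traffic to the Grafana Pods from the Prometheus Pods. If `kubectl -n monitoring get networkpolicy` lists them and the CNI plugin enforces NetworkPolicy (Calico and Cilium do, for example), traffic arriving through the LoadBalancer or NodePort from outside is dropped, while `kubectl port-forward` typically still works because it reaches the Pod through the kubelet. NetworkPolicies are additive, so a sketch of an extra policy that also admits external clients on port 3000 could look like this; the name grafana-allow-external is hypothetical, and the Pod label is an assumption to confirm with `kubectl -n monitoring get pods --show-labels`.

```yaml
# Hypothetical extra policy; it is OR-ed with the existing grafana NetworkPolicy.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: grafana-allow-external
  namespace: monitoring
spec:
  # Select the Grafana Pods (assumed label; verify with --show-labels).
  podSelector:
    matchLabels:
      app.kubernetes.io/name: grafana
  policyTypes:
  - Ingress
  ingress:
  # No "from" clause: any source is allowed, but only on TCP port 3000.
  - ports:
    - protocol: TCP
      port: 3000
```

Deleting this extra policy with `kubectl delete -f` restores the shipped behavior.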

II. Exposing the Prometheus WebUI (class exercise walkthrough)

1. Expose via NodePort

[root@master231 kube-prometheus-0.16.0]# cat manifests/prometheus-service.yaml
apiVersion: v1
kind: Service
metadata:
  ...
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    # 9090 is outside the default NodePort range (30000-32767); it is accepted only because
    # this cluster's kube-apiserver runs with a widened --service-node-port-range.
    nodePort: 9090
    targetPort: web
  ...
[root@master231 kube-prometheus-0.16.0]#
[root@master231 kube-prometheus-0.16.0]# kubectl apply -f manifests/prometheus-service.yaml
service/prometheus-k8s configured
[root@master231 kube-prometheus-0.16.0]#
[root@master241 kube-prometheus-0.16.0]# kubectl get -f manifests/prometheus-service.yaml
NAME             TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)                        AGE
prometheus-k8s   NodePort   10.207.3.216   <none>        9090:9090/TCP,8080:35485/TCP   56m
[root@master241 kube-prometheus-0.16.0]#

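2. Expose via LoadBalancer

Change "type: NodePort" in the example above to "type: LoadBalancer" and re-apply the manifest. This requires a LoadBalancer implementation in the cluster (bare-metal clusters commonly use MetalLB); without one, EXTERNAL-IP stays <pending>.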
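Instead of changing the type of the upstream prometheus-k8s Service, you could also create a separate LoadBalancer Service next to it. A minimal sketch, assuming the Prometheus Pods carry the labels app.kubernetes.io/name: prometheus and app.kubernetes.io/instance: k8s (check with `kubectl -n monitoring get pods -l app.kubernetes.io/name=prometheus --show-labels`); the Service name prometheus-k8s-lb is hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: prometheus-k8s-lb        # hypothetical name
  namespace: monitoring
spec:
  type: LoadBalancer
  # Assumed Pod labels for the Prometheus resource "k8s"; verify before applying.
  selector:
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/instance: k8s
  ports:
  - name: web
    port: 9090
    targetPort: web              # the named container port also used by prometheus-k8s
```

Keeping it separate means upgrading kube-prometheus never overwrites the exposure settings.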

3. Expose via port forwarding

[root@master241 ~]# kubectl port-forward sts/prometheus-k8s 19090:9090 -n monitoring --address=0.0.0.0
Forwarding from 0.0.0.0:19090 -> 9090
Handling connection for 19090
Handling connection for 19090

4. Expose via Ingress

Omitted here; see my Ingress notes for the details.
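For reference, a minimal Ingress sketch for the Prometheus WebUI, assuming an Ingress controller is installed whose IngressClass is named traefik (the controller mentioned earlier in this post) and using the hypothetical host name prometheus.example.com, which must resolve to the controller's address. The resource name prometheus-k8s-ingress is also hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus-k8s-ingress   # hypothetical name
  namespace: monitoring
spec:
  ingressClassName: traefik      # assumption: adjust to your IngressClass
  rules:
  - host: prometheus.example.com # hypothetical host name
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-k8s
            port:
              name: web          # the Service port named "web" (9090)
```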

III. Case study: monitoring the cloud-native application etcd with Prometheus

1. Test the etcd metrics endpoint

1.1 Find where the etcd certificates are stored

[root@master231 yinzhengjie]# egrep "\--key-file|--cert-file" /etc/kubernetes/manifests/etcd.yaml
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --key-file=/etc/kubernetes/pki/etcd/server.key
[root@master231 yinzhengjie]#

1.2 Query the metrics endpoint with the etcd certificates

[root@master231 yinzhengjie]# curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.0.0.231:2379/metrics -k | tail
# TYPE process_virtual_memory_max_bytes gauge
process_virtual_memory_max_bytes 1.8446744073709552e+19
# HELP promhttp_metric_handler_requests_in_flight Current number of scrapes being served.
# TYPE promhttp_metric_handler_requests_in_flight gauge
promhttp_metric_handler_requests_in_flight 1
# HELP promhttp_metric_handler_requests_total Total number of scrapes by HTTP status code.
# TYPE promhttp_metric_handler_requests_total counter
promhttp_metric_handler_requests_total{code="200"} 4
promhttp_metric_handler_requests_total{code="500"} 0
promhttp_metric_handler_requests_total{code="503"} 0
[root@master231 yinzhengjie]#

2. Create a Secret from the etcd certificates and mount it into the Prometheus server

2.1 Find the paths of the etcd certificate files to mount

[root@master231 yinzhengjie]# egrep "\--key-file|--cert-file|--trusted-ca-file" /etc/kubernetes/manifests/etcd.yaml
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
[root@master231 yinzhengjie]#

2.2 Create the Secret from the actual etcd certificate paths

[root@master231 yinzhengjie]# kubectl create secret generic etcd-tls --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/ca.crt -n monitoring
secret/etcd-tls created
[root@master231 yinzhengjie]#
[root@master231 yinzhengjie]# kubectl -n monitoring get secrets etcd-tls
NAME       TYPE     DATA   AGE
etcd-tls   Opaque   3      12s
[root@master231 yinzhengjie]#

2.3 Edit the Prometheus resource; the Prometheus Pods are recreated automatically after the change

[root@master231 yinzhengjie]# kubectl -n monitoring edit prometheus k8s
...
spec:
  secrets:
  - etcd-tls
...
[root@master231 yinzhengjie]# kubectl -n monitoring get pods -l app.kubernetes.io/component=prometheus -o wide
NAME               READY   STATUS    RESTARTS   AGE   IP            NODE        NOMINATED NODE   READINESS GATES
prometheus-k8s-0   2/2     Running   0          74s   10.100.1.57   worker232   <none>           <none>
prometheus-k8s-1   2/2     Running   0          92s   10.100.2.28   worker233   <none>           <none>
[root@master231 yinzhengjie]#
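`kubectl edit` is interactive. A non-interactive sketch of the same change, assuming the `secrets` list is currently empty (a merge patch replaces the whole list): save the fragment below (the file name prometheus-etcd-secrets-patch.yaml is hypothetical) and run `kubectl -n monitoring patch prometheus k8s --type merge --patch-file prometheus-etcd-secrets-patch.yaml`. Custom resources such as Prometheus do not support strategic merge patches, hence `--type merge`.

```yaml
# prometheus-etcd-secrets-patch.yaml (hypothetical file name)
# A JSON merge patch replaces lists wholesale, so list every Secret the Prometheus resource should mount.
spec:
  secrets:
  - etcd-tls   # mounted at /etc/prometheus/secrets/etcd-tls in the Prometheus Pods
```

To keep the change across re-applying the manifests, the same field can instead be added to the Prometheus resource in manifests/prometheus-prometheus.yaml.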

2.4 Verify that the certificates are mounted

[root@master231 yinzhengjie]# kubectl -n monitoring exec prometheus-k8s-0 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls
total 0
lrwxrwxrwx 1 root 2000 13 Jan 24 14:07 ca.crt -> ..data/ca.crt
lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.crt -> ..data/server.crt
lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.key -> ..data/server.key
[root@master231 yinzhengjie]#
[root@master231 yinzhengjie]# kubectl -n monitoring exec prometheus-k8s-1 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls
total 0
lrwxrwxrwx 1 root 2000 13 Jan 24 14:07 ca.crt -> ..data/ca.crt
lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.crt -> ..data/server.crt
lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.key -> ..data/server.key
[root@master231 yinzhengjie]#

3. Write the manifests

[root@master231 servicemonitors]# cat 01-smon-etcd.yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: kube-system
subsets:
- addresses:
  - ip: 10.0.0.231
  ports:
  - name: https-metrics
    port: 2379
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    apps: etcd
spec:
  ports:
  - name: https-metrics
    port: 2379
    targetPort: 2379
  type: ClusterIP
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: oldboyedu-etcd-smon
  namespace: monitoring
spec:
  # Optional: name of a label on the target Service whose value becomes the job name.
  # The Service above has no such label, so the job name falls back to the Service name.
  jobLabel: kubeadm-etcd-k8s-yinzhengjie
  # How the backend targets are scraped
  endpoints:
    # Scrape interval
  - interval: 30s
    # Metrics port; must match a name in the Service's spec.ports[].name
    port: https-metrics
    # Path of the metrics endpoint
    path: /metrics
    # Protocol of the metrics endpoint
    scheme: https
    # Certificates used to connect to etcd
    tlsConfig:
      # etcd CA certificate
      caFile: /etc/prometheus/secrets/etcd-tls/ca.crt
      # etcd certificate
      certFile: /etc/prometheus/secrets/etcd-tls/server.crt
      # etcd private key
      keyFile: /etc/prometheus/secrets/etcd-tls/server.key
      # Skip certificate verification, since these certificates are self-issued rather than from a public CA.
      insecureSkipVerify: true
  # Namespace of the target Service
  namespaceSelector:
    matchNames:
    - kube-system
  # Labels of the target Service
  selector:
    # Note: these labels must match the labels of the etcd Service.
    matchLabels:
      apps: etcd
[root@master231 servicemonitors]#
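The configuration above disables certificate verification. Since caFile already points to the etcd CA, verification can probably stay enabled, assuming the etcd serving certificate lists the scraped address 10.0.0.231 among its SANs (kubeadm normally adds the node's advertise address; confirm with `openssl x509 -in /etc/kubernetes/pki/etcd/server.crt -noout -text`). A sketch of the changed tlsConfig fragment:

```yaml
# Fragment of spec.endpoints[0] in the ServiceMonitor oldboyedu-etcd-smon
tlsConfig:
  caFile: /etc/prometheus/secrets/etcd-tls/ca.crt
  certFile: /etc/prometheus/secrets/etcd-tls/server.crt
  keyFile: /etc/prometheus/secrets/etcd-tls/server.key
  # Verify the server certificate against the CA above instead of skipping verification.
  insecureSkipVerify: false
```

If the targets then show a TLS error on the Prometheus Targets page, the SAN assumption does not hold and the original setting is the pragmatic choice.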

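4. View the data in Prometheus

In the Prometheus WebUI, search for 'etcd_cluster_version'.

5. Import a Grafana dashboard

In Grafana, import the dashboard with ID '3070'.

IV. Case study: monitoring the non-cloud-native application MySQL with Prometheus

1. Write the manifests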

[root@master231 servicemonitors]# cat 02-smon-mysqld.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql80-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: mysql80
  template:
    metadata:
      labels:
        apps: mysql80
    spec:
      containers:
      - name: mysql
        image: harbor250.oldboyedu.com/oldboyedu-db/mysql:8.0.36-oracle
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: yinzhengjie
        - name: MYSQL_USER
          value: linux97
        - name: MYSQL_PASSWORD
          value: "oldboyedu"
---
apiVersion: v1
kind: Service
metadata:
  name: mysql80-service
spec:
  selector:
    apps: mysql80
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: my.cnf
data:
  .my.cnf: |-
    [client]
    user = linux97
    password = oldboyedu
    [client.servers]
    user = linux96
    password = oldboyedu
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-exporter-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: mysql-exporter
  template:
    metadata:
      labels:
        apps: mysql-exporter
    spec:
      volumes:
      - name: data
        configMap:
          name: my.cnf
          items:
          - key: .my.cnf
            path: .my.cnf
      containers:
      - name: mysql-exporter
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/mysqld-exporter:v0.15.1
        command:
        - mysqld_exporter
        - --config.my-cnf=/root/my.cnf
        - --mysqld.address=mysql80-service.default.svc.oldboyedu.com:3306
        securityContext:
          runAsUser: 0
        ports:
        - containerPort: 9104
        # Kept for reference only: mysqld_exporter v0.15.x no longer reads DATA_SOURCE_NAME.
        #env:
        #- name: DATA_SOURCE_NAME
        #  value: mysql_exporter:yinzhengjie@(mysql80-service.default.svc.yinzhengjie.com:3306)
        volumeMounts:
        - name: data
          mountPath: /root/my.cnf
          subPath: .my.cnf
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-exporter-service
  labels:
    apps: mysqld
spec:
  selector:
    apps: mysql-exporter
  ports:
  - protocol: TCP
    port: 9104
    targetPort: 9104
    name: mysql80
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: oldboyedu-mysql-smon
spec:
  jobLabel: kubeadm-mysql-k8s-yinzhengjie
  endpoints:
  - interval: 3s
    # "port" refers to the Service port by name (the field is a string), so a number
    # such as 9104 is not accepted here. Using the name also means that if the Service's
    # port number changes but its name stays the same, nothing here needs to change.
    # port: 9104
    port: mysql80
    path: /metrics
    scheme: http
  namespaceSelector:
    matchNames:
    - default
  selector:
    matchLabels:
      apps: mysqld
[root@master231 servicemonitors]#
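The ConfigMap above keeps the MySQL password in plain text. A sketch of storing the same .my.cnf in a Secret instead; the Secret name mysqld-exporter-my-cnf is hypothetical, and the only other change is the volume source in mysql-exporter-deployment (mountPath and subPath stay as they are). Secrets are only base64-encoded by default, but access to them can be restricted separately from ConfigMaps via RBAC.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysqld-exporter-my-cnf   # hypothetical name
  namespace: default
type: Opaque
# stringData takes plain text; the API server stores it base64-encoded under "data".
stringData:
  .my.cnf: |-
    [client]
    user = linux97
    password = oldboyedu
# In mysql-exporter-deployment, replace the configMap volume source with:
#   volumes:
#   - name: data
#     secret:
#       secretName: mysqld-exporter-my-cnf
#       items:
#       - key: .my.cnf
#         path: .my.cnf
```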

2. Verify in Prometheus

In the Prometheus WebUI, search for 'mysql_up'.

3. Import a Grafana dashboard

In Grafana, import the dashboard with ID '7362'.

V. Monitoring custom application metrics with Prometheus

Recommended reading:

https://www.cnblogs.com/yinzhengjie/p/19119701

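This article is from cnblogs (博客园). Author: 尹正杰. When reposting, please credit the original link: https://www.cnblogs.com/yinzhengjie/p/19114433. Personal WeChat: "JasonYin2020" (please state where you found this and why when adding; paid consultation).

When your talent cannot yet support your ambition, calm down and study. When your ability cannot yet carry you to your goal, settle down and train. Ask yourself what kind of life you want.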

Zhejiang Public Security Network Filing No. 33010602011771
