GlusterFS High-Availability Cluster Deployment and K8S Integration in Practice

Author: 尹正杰

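Copyright notice: This is an original work. Reproduction without permission is prohibited, and violations will be pursued under the law.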

I. Overview of the GlusterFS Storage Solution

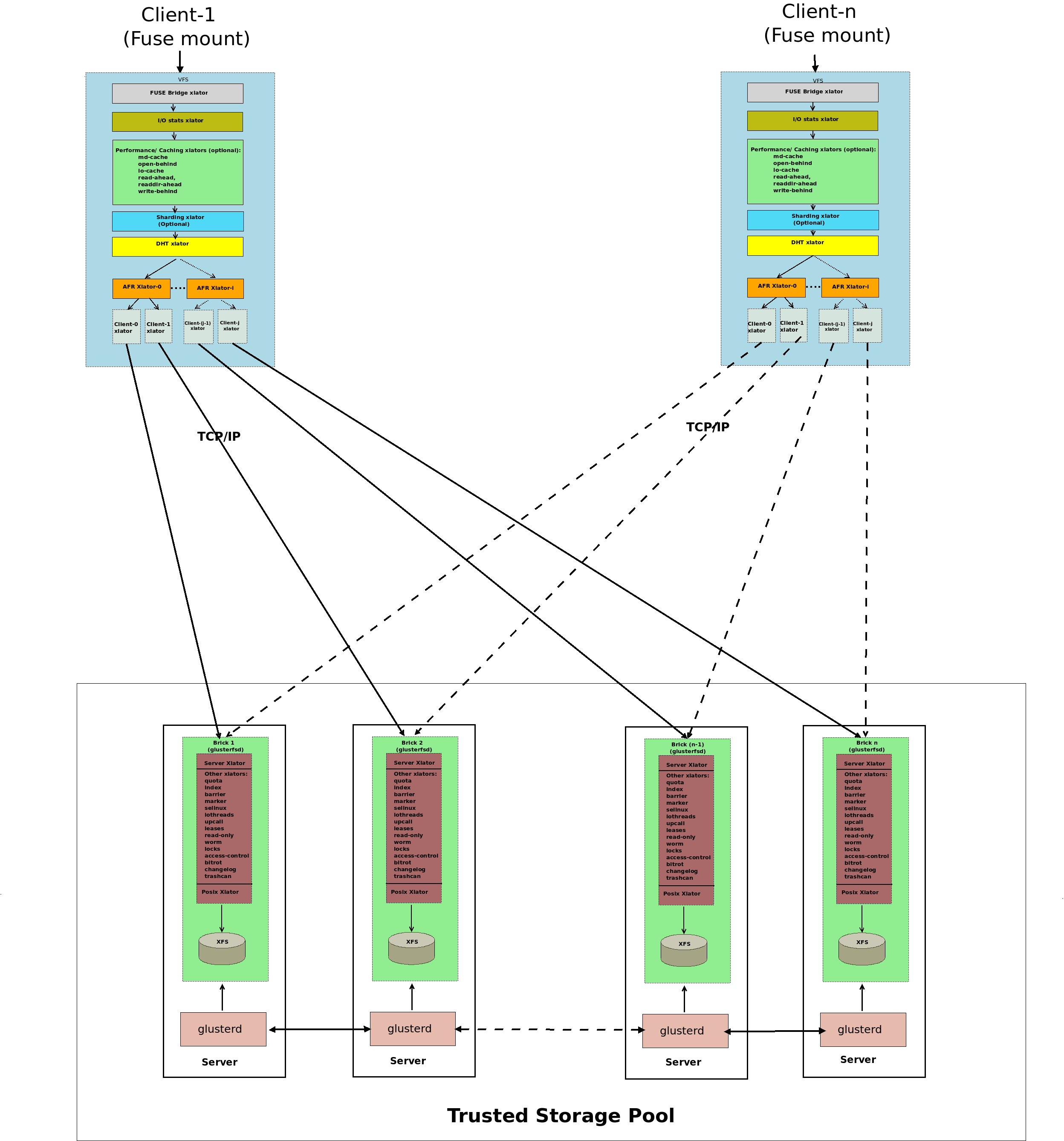

1. GlusterFS overview

Gluster is software-defined distributed storage that can scale to several petabytes. It provides interfaces for object, block, and file storage.

Official website:

https://www.gluster.org/

GitHub repository:

https://github.com/gluster/glusterfs

GlusterFS architecture diagrams:

https://docs.gluster.org/en/latest/Quick-Start-Guide/Architecture/

2. Preparing the GlusterFS environment

| Hostname | Operating system | IP address | Disk |
|---|---|---|---|
| master241 | Ubuntu 22.04 LTS | 10.0.0.241 | sdb, 300G (a larger disk also works) |
| worker242 | Ubuntu 22.04 LTS | 10.0.0.242 | sdb, 300G |
| worker243 | Ubuntu 22.04 LTS | 10.0.0.243 | sdb, 300G |

As the table shows, I deploy the GlusterFS cluster on 3 nodes running Ubuntu 22.04 LTS.

3. Installing GlusterFS on Ubuntu

Tip:

Officially, only the following Ubuntu releases are supported:

Ubuntu 16.04 LTS, 18.04 LTS, 20.04 LTS, 20.10, 21.04

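Even so, although adding the PPA may fail on Ubuntu 22.04, this does not prevent GlusterFS from being installed, because the glusterfs-server package is also available from Ubuntu's own repositories.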

References:

https://docs.gluster.org/en/latest/Install-Guide/Install/#for-ubuntu

https://docs.gluster.org/en/latest/Install-Guide/Community-Packages/#community-packages

Hands-on steps:

1. Install the common prerequisite package

apt install software-properties-common

2. Add the community GlusterFS PPA (if this fails with a 404 error on Ubuntu 22.04 LTS, ignore it and continue with the next step)

add-apt-repository ppa:gluster/glusterfs-7

apt update

3. Install GlusterFS

apt install glusterfs-server

4. Start GlusterFS now and enable it at boot

systemctl enable --now glusterd.service

5. Check that GlusterFS started correctly

systemctl is-enabled glusterd.service

systemctl status glusterd.service
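Since the same steps run on all three nodes, they can be driven from one machine. The following is a minimal sketch, assuming passwordless root SSH to every node (not something the original setup configures); the helper names and the DRY_RUN switch are hypothetical. It skips the PPA step, which may 404 on Ubuntu 22.04 anyway, and by default only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: install and start glusterd on every node over SSH.
# Assumptions: passwordless root SSH to each host; DRY_RUN=1 (the default)
# only prints the ssh commands instead of executing them.

# Commands run on each node: steps 3-5 above (PPA step intentionally omitted).
INSTALL_CMDS='apt-get update && apt-get install -y glusterfs-server && systemctl enable --now glusterd.service && systemctl is-enabled glusterd.service'

# remote_run HOST CMD: print the ssh command in dry-run mode, otherwise run it.
remote_run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf 'ssh root@%s %s\n' "$1" "$2"
    else
        ssh "root@$1" "$2"
    fi
}

# install_glusterfs_all HOST...: process hosts in order, stop at the first failure.
install_glusterfs_all() {
    for host in "$@"; do
        remote_run "$host" "$INSTALL_CMDS" || return 1
    done
}
```

Running `install_glusterfs_all 10.0.0.241 10.0.0.242 10.0.0.243` first shows the three ssh commands for review; setting DRY_RUN=0 then executes them.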

4. GlusterFS cluster configuration

1. Check the cluster status

[root@master241 ~]# gluster peer status

Number of Peers: 0

[root@master241 ~]#

2. Add the other nodes to the existing cluster

[root@master241 ~]# gluster peer probe 10.0.0.242

peer probe: success

[root@master241 ~]#

[root@master241 ~]# gluster peer probe 10.0.0.243

peer probe: success

[root@master241 ~]#

3. Check the cluster status from every node

[root@master241 ~]# gluster peer status

Number of Peers: 2

Hostname: 10.0.0.242

Uuid: a2922996-e4cc-4331-a78b-fb3dbf7234e4

State: Peer in Cluster (Connected)

Hostname: 10.0.0.243

Uuid: 301a57f8-21a0-49d0-808f-35b99a061541

State: Peer in Cluster (Connected)

[root@master241 ~]#

[root@worker242 ~]# gluster peer status

Number of Peers: 2

Hostname: 10.0.0.241

Uuid: dc3b2a67-388d-485b-a1e3-302dc0cc8687

State: Peer in Cluster (Connected)

Hostname: 10.0.0.243

Uuid: 301a57f8-21a0-49d0-808f-35b99a061541

State: Peer in Cluster (Connected)

[root@worker242 ~]#

[root@worker243 ~]# gluster peer status

Number of Peers: 2

Hostname: 10.0.0.241

Uuid: dc3b2a67-388d-485b-a1e3-302dc0cc8687

State: Peer in Cluster (Connected)

Hostname: 10.0.0.242

Uuid: a2922996-e4cc-4331-a78b-fb3dbf7234e4

State: Peer in Cluster (Connected)

[root@worker243 ~]#
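Checking the pool by eye works for three nodes; in a script, the same output can be parsed. A minimal sketch, assuming only the text format shown above; count_connected_peers and check_peers are hypothetical helpers, not gluster subcommands. In a 3-node pool, every node should report 2 connected peers.

```shell
# count_connected_peers: read `gluster peer status` output on stdin and print
# how many peers report "State: Peer in Cluster (Connected)".
count_connected_peers() {
    grep -c 'State: Peer in Cluster (Connected)'
}

# check_peers EXPECTED: succeed only if this node sees exactly EXPECTED
# connected peers (N-1 for an N-node trusted pool).
check_peers() {
    actual=$(gluster peer status | count_connected_peers)
    [ "$actual" -eq "$1" ]
}
```

On each node, `check_peers 2 || echo "pool is not fully connected"` would flag a node that has lost contact with a peer.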

References:

https://docs.gluster.org/en/latest/Install-Guide/Configure/

II. Basic GlusterFS management

1. Prepare the disk on each node

1. Create the mount point on each node

[root@master241 ~]# mkdir -pv /data/glusterfs/

[root@worker242 ~]# mkdir -pv /data/glusterfs/

[root@worker243 ~]# mkdir -pv /data/glusterfs/

2. Format the disk

[root@master241 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

...

sdb 8:16 0 300G 0 disk

sdc 8:32 0 500G 0 disk

sdd 8:48 0 1T 0 disk

sr0 11:0 1 1024M 0 rom

[root@master241 ~]#

[root@master241 ~]# mkfs.xfs -i size=512 /dev/sdb

[root@worker242 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

...

sdb 8:16 0 300G 0 disk

sdc 8:32 0 500G 0 disk

sdd 8:48 0 1T 0 disk

sr0 11:0 1 1024M 0 rom

[root@worker242 ~]#

[root@worker242 ~]# mkfs.xfs -i size=512 /dev/sdb

[root@worker243 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

...

sdb 8:16 0 300G 0 disk

sdc 8:32 0 500G 0 disk

sdd 8:48 0 1T 0 disk

sr0 11:0 1 1024M 0 rom

[root@worker243 ~]#

[root@worker243 ~]# mkfs.xfs -i size=512 /dev/sdb

3. Mount the data disk at boot

[root@master241 ~]# echo "/dev/sdb /data/glusterfs xfs defaults 0 0" >> /etc/fstab

[root@worker242 ~]# echo "/dev/sdb /data/glusterfs xfs defaults 0 0" >> /etc/fstab

[root@worker243 ~]# echo "/dev/sdb /data/glusterfs xfs defaults 0 0" >> /etc/fstab
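The `echo ... >> /etc/fstab` lines above append a duplicate entry every time they are re-run. A minimal idempotent sketch follows; add_fstab_entry is a hypothetical helper, and referencing the disk by UUID (obtained with blkid) instead of /dev/sdb is a swapped-in choice, not the original's, made because device names such as sdb are not guaranteed to stay stable across reboots.

```shell
# add_fstab_entry FSTAB SPEC MOUNTPOINT FSTYPE OPTIONS
# Append "SPEC MOUNTPOINT FSTYPE OPTIONS 0 0" to FSTAB unless a non-comment
# entry already uses MOUNTPOINT, so re-running the step adds no duplicates.
add_fstab_entry() {
    fstab=$1 spec=$2 mnt=$3 fstype=$4 opts=$5
    # Field 2 of an fstab line is the mount point; comment lines are skipped.
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
        return 0
    fi
    printf '%s %s %s %s 0 0\n' "$spec" "$mnt" "$fstype" "$opts" >> "$fstab"
}
```

On each node this could be called as `add_fstab_entry /etc/fstab "UUID=$(blkid -s UUID -o value /dev/sdb)" /data/glusterfs xfs defaults`, followed by the `mount -a` check in the next step.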

4. Mount all fstab entries and verify

[root@master241 ~]# mount -a && df -h | grep data

/dev/sdb 300G 2.2G 298G 1% /data/glusterfs

[root@master241 ~]#

[root@worker242 ~]# mount -a && df -h | grep data

/dev/sdb 300G 2.2G 298G 1% /data/glusterfs

[root@worker242 ~]#

[root@worker243 ~]# mount -a && df -h | grep data

/dev/sdb 300G 2.2G 298G 1% /data/glusterfs

[root@worker243 ~]#

Tip:

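Prepare and mount a dedicated disk on each node before creating the replicated volume. If you skip this and place the bricks on the root partition, the volume commands that follow must end with the "force" keyword.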

The official recommendation is to store GlusterFS data on a separate disk rather than directly on the root partition.

References:

https://docs.gluster.org/en/latest/Install-Guide/Configure/#partition-the-disk

2. Create a replicated volume

1. Create the replicated volume

[root@master241 ~]# gluster volume create yinzhengjie-volume replica 3 10.0.0.241:/data/glusterfs/r1 10.0.0.242:/data/glusterfs/r2 10.0.0.243:/data/glusterfs/r3

volume create: yinzhengjie-volume: success: please start the volume to access data

[root@master241 ~]#
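The brick arguments follow a fixed pattern: one brick per node, each a subdirectory (r1, r2, r3) under the /data/glusterfs mount point rather than the mount point itself, which avoids GlusterFS's objection to using a mount point directly as a brick. A minimal sketch that generates that list, assuming the same numbering scheme; build_bricks is a hypothetical helper.

```shell
# build_bricks BASE HOST...: print one brick per host as HOST:BASE/rN,
# numbering the brick directories r1, r2, ... in the order the hosts are given.
build_bricks() {
    base=$1
    shift
    i=1
    out=
    for host in "$@"; do
        # Join entries with single spaces, without a leading space.
        out="${out:+$out }$host:$base/r$i"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}
```

Usage, relying on word splitting of the unquoted substitution: `gluster volume create yinzhengjie-volume replica 3 $(build_bricks /data/glusterfs 10.0.0.241 10.0.0.242 10.0.0.243)`.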

2. Check the status of the replicated volume

[root@master241 ~]# gluster volume status yinzhengjie-volume

Volume yinzhengjie-volume is not started

[root@master241 ~]#

3. Start the replicated volume

[root@master241 ~]# gluster volume start yinzhengjie-volume

volume start: yinzhengjie-volume: success

[root@master241 ~]#

4. Check the volume status again

[root@master241 ~]# gluster volume status yinzhengjie-volume

Status of volume: yinzhengjie-volume

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick 10.0.0.241:/data/glusterfs/r1 56017 0 Y 16318

Brick 10.0.0.242:/data/glusterfs/r2 54370 0 Y 10750

Brick 10.0.0.243:/data/glusterfs/r3 51212 0 Y 10368

Self-heal Daemon on localhost N/A N/A Y 16335

Self-heal Daemon on 10.0.0.242 N/A N/A Y 10767

Self-heal Daemon on 10.0.0.243 N/A N/A Y 10385

Task Status of Volume yinzhengjie-volume

------------------------------------------------------------------------------

There are no active volume tasks

[root@master241 ~]#
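The "Online" column is what matters after starting the volume. A minimal sketch that checks it, assuming each brick line fits on one line as in the output above (long brick paths may wrap in real output; the CLI's --xml output is likely more robust for scripting). bricks_all_online is a hypothetical helper.

```shell
# bricks_all_online: read `gluster volume status VOLUME` output on stdin and
# succeed only if at least one "Brick" line exists and every one shows Online=Y.
# Expected brick line layout (single line, as above):
#   Brick HOST:PATH TCP-PORT RDMA-PORT ONLINE PID
bricks_all_online() {
    awk '$1 == "Brick" { n++; if ($5 != "Y") bad++ }
         END { exit !(n > 0 && bad == 0) }'
}
```

For example: `gluster volume status yinzhengjie-volume | bricks_all_online || echo "some brick is offline"`.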

5. View the volume details

[root@master241 ~]# gluster volume info

Volume Name: yinzhengjie-volume

Type: Replicate

Volume ID: f962bd43-e4d4-424b-b693-52714afb6aed

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: 10.0.0.241:/data/glusterfs/r1

Brick2: 10.0.0.242:/data/glusterfs/r2

Brick3: 10.0.0.243:/data/glusterfs/r3

Options Reconfigured:

cluster.granular-entry-heal: on

storage.fips-mode-rchecksum: on

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

[root@master241 ~]#

6. Let clients keep mounting and using the volume while a brick is offline (optional)

[root@master241 ~]# gluster volume get yinzhengjie-volume cluster.server-quorum-type

Option Value

------ -----

cluster.server-quorum-type off # default value is off

[root@master241 ~]#

[root@master241 ~]# gluster volume set yinzhengjie-volume cluster.server-quorum-type none

volume set: success

[root@master241 ~]#

[root@master241 ~]# gluster volume get yinzhengjie-volume cluster.server-quorum-type

Option Value

------ -----

cluster.server-quorum-type none # value changed to none

[root@master241 ~]#

[root@master241 ~]# gluster volume get yinzhengjie-volume cluster.quorum-type

Option Value

------ -----

cluster.quorum-type auto # default value is auto

[root@master241 ~]#

[root@master241 ~]# gluster volume set yinzhengjie-volume cluster.quorum-type none

volume set: success

[root@master241 ~]#

[root@master241 ~]# gluster volume get yinzhengjie-volume cluster.quorum-type

Option Value

------ -----

cluster.quorum-type none # value changed to none

[root@master241 ~]#
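With both quorum types set to none, a replica 3 volume keeps accepting writes even when only one brick is reachable, which trades availability for a higher risk of split-brain. Because of that, it can help to assert the live values rather than assume them. A minimal sketch, assuming the two-column `gluster volume get` output shown above; option_value and assert_option are hypothetical helpers.

```shell
# option_value OPTION: read `gluster volume get VOLUME OPTION` output on stdin
# and print the value column for OPTION (prints nothing if it is not listed).
option_value() {
    awk -v opt="$1" '$1 == opt { print $2; exit }'
}

# assert_option VOLUME OPTION EXPECTED: succeed only if the live value matches.
assert_option() {
    [ "$(gluster volume get "$1" "$2" | option_value "$2")" = "$3" ]
}
```

For example: `assert_option yinzhengjie-volume cluster.quorum-type none && assert_option yinzhengjie-volume cluster.server-quorum-type none`.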

7. Enable capacity quotas on the volume (optional)

[root@master241 ~]# gluster volume quota yinzhengjie-volume enable # enable the quota feature

volume quota : success

[root@master241 ~]#

[root@master241 ~]# gluster volume quota yinzhengjie-volume limit-usage / 20GB # clients can use at most 20GB

volume quota : success

[root@master241 ~]#

8. View the volume information again

[root@master241 ~]# gluster volume info yinzhengjie-volume

Volume Name: yinzhengjie-volume

Type: Replicate

Volume ID: f962bd43-e4d4-424b-b693-52714afb6aed

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: 10.0.0.241:/data/glusterfs/r1

Brick2: 10.0.0.242:/data/glusterfs/r2

Brick3: 10.0.0.243:/data/glusterfs/r3

Options Reconfigured:

features.quota-deem-statfs: on

features.inode-quota: on

features.quota: on # quota feature is enabled

cluster.quorum-type: none # setting has taken effect

cluster.server-quorum-type: none # setting has taken effect

cluster.granular-entry-heal: on

storage.fips-mode-rchecksum: on

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

[root@master241 ~]#

References:

https://docs.gluster.org/en/latest/Administrator-Guide/Setting-Up-Volumes/

https://docs.gluster.org/en/latest/Install-Guide/Configure/#set-up-a-gluster-volume

3. Mounting GlusterFS on clients

1. Load the fuse kernel module

modinfo fuse

modprobe fuse

dmesg | grep -i fuse

2. Create the mount point on each client

[root@master241 ~]# mkdir /k8s-glusterfs

[root@worker242 ~]# mkdir /k8s-glusterfs

[root@worker243 ~]# mkdir /k8s-glusterfs

3. Mount the volume on a client and write test data

[root@master241 ~]# mount -t glusterfs 10.0.0.243:/yinzhengjie-volume /k8s-glusterfs/

[root@master241 ~]#

[root@master241 ~]# df -h | grep k8s-glusterfs

10.0.0.243:/yinzhengjie-volume 20G 0 20G 0% /k8s-glusterfs # the mounted size is 20GB, matching the quota

[root@master241 ~]#

[root@master241 ~]# cp /etc/os-release /etc/fstab /k8s-glusterfs

[root@master241 ~]#

[root@master241 ~]# ll /k8s-glusterfs

total 6

drwxr-xr-x 4 root root 126 May 27 09:29 ./

drwxr-xr-x 23 root root 4096 May 27 09:25 ../

-rw-r--r-- 1 root root 700 May 27 09:29 fstab

-rw-r--r-- 1 root root 427 May 27 09:29 os-release

[root@master241 ~]#
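The server named in the mount command is only used to fetch the volume file; after mounting, the FUSE client talks to all bricks directly. It still has to be reachable at mount time, which is what the backup-volfile-servers mount option addresses. The sketch below prints a persistent fstab entry; glusterfs_fstab_line is a hypothetical helper, and using _netdev plus backup-volfile-servers is an addition to the original steps, not something the author configured.

```shell
# glusterfs_fstab_line SERVER VOLUME MOUNTPOINT [BACKUP_SERVER...]
# Print an fstab entry for a GlusterFS FUSE mount. _netdev marks it as a
# network filesystem; backup-volfile-servers lists other nodes to fetch the
# volume file from if SERVER is unreachable at mount time.
glusterfs_fstab_line() {
    server=$1 volume=$2 mnt=$3
    shift 3
    opts=defaults,_netdev
    if [ "$#" -gt 0 ]; then
        # mount.glusterfs takes the backup servers as a colon-separated list.
        backups=$(printf '%s:' "$@")
        opts="$opts,backup-volfile-servers=${backups%:}"
    fi
    printf '%s:/%s %s glusterfs %s 0 0\n' "$server" "$volume" "$mnt" "$opts"
}
```

On master241, `glusterfs_fstab_line 10.0.0.243 yinzhengjie-volume /k8s-glusterfs 10.0.0.241 10.0.0.242 >> /etc/fstab` would make the mount above survive a reboot and the loss of 10.0.0.243.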

4. Mount on another client and check whether the data is shared

[root@worker242 ~]# mount -t glusterfs 10.0.0.241:/yinzhengjie-volume /k8s-glusterfs/

[root@worker242 ~]#

[root@worker242 ~]# df -h | grep k8s-glusterfs

10.0.0.241:/yinzhengjie-volume 20G 0 20G 0% /k8s-glusterfs # mounted through a different GlusterFS node than on master241

[root@worker242 ~]#

[root@worker242 ~]# ll /k8s-glusterfs/

total 6

drwxr-xr-x 4 root root 126 May 27 09:29 ./

drwxr-xr-x 23 root root 4096 May 27 09:25 ../

-rw-r--r-- 1 root root 700 May 27 09:29 fstab

-rw-r--r-- 1 root root 427 May 27 09:29 os-release

[root@worker242 ~]#

[root@worker242 ~]# cp /etc/hosts /k8s-glusterfs/

[root@worker242 ~]#

[root@worker242 ~]# ll /k8s-glusterfs/

total 7

drwxr-xr-x 4 root root 139 May 27 09:30 ./

drwxr-xr-x 23 root root 4096 May 27 09:25 ../

-rw-r--r-- 1 root root 700 May 27 09:29 fstab

-rw-r--r-- 1 root root 226 May 27 09:30 hosts

-rw-r--r-- 1 root root 427 May 27 09:29 os-release

[root@worker242 ~]#

5. Verify that the data is shared

[root@master241 ~]# ll /k8s-glusterfs

total 7

drwxr-xr-x 4 root root 139 May 27 09:30 ./

drwxr-xr-x 23 root root 4096 May 27 09:25 ../

-rw-r--r-- 1 root root 700 May 27 09:29 fstab

-rw-r--r-- 1 root root 226 May 27 09:30 hosts

-rw-r--r-- 1 root root 427 May 27 09:29 os-release

[root@master241 ~]#
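Comparing `ll` listings confirms that the file names match, but not the contents. A minimal sketch that produces a content manifest per client so two clients can be diffed; dir_manifest is a hypothetical helper and uses the POSIX cksum tool rather than anything GlusterFS-specific.

```shell
# dir_manifest DIR: print a "checksum size path" line for every regular file
# under DIR, with paths relative to DIR, sorted by path so that manifests
# taken on different clients can be compared with diff.
dir_manifest() {
    (cd "$1" && find . -type f -exec cksum {} + | sort -k 3)
}
```

For example, run `dir_manifest /k8s-glusterfs > /tmp/manifest-$(hostname).txt` on master241 and worker242, copy one file to the other node, and `diff` them: no output means both clients see identical data.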

6. Unmount GlusterFS

[root@master241 ~]# df -h | grep k8s-glusterfs

10.0.0.243:/yinzhengjie-volume 20G 0 20G 0% /k8s-glusterfs

[root@master241 ~]#

[root@master241 ~]# umount /k8s-glusterfs

[root@master241 ~]#

[root@master241 ~]# df -h | grep k8s-glusterfs

[root@master241 ~]#

III. K8s 1.25+ deprecates the GlusterFS volume type

1. Write the resource manifest

[root@master241 volumes]# cat 01-glusterfs-volume.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs-cluster
subsets:
- addresses:
  - ip: 10.0.0.241
  ports:
  - port: 1
- addresses:
  - ip: 10.0.0.242
  ports:
  - port: 1
- addresses:
  - ip: 10.0.0.243
  ports:
  - port: 1
---
apiVersion: v1
kind: Service
metadata:
  name: glusterfs-cluster
spec:
  ports:
  - port: 1
---
apiVersion: v1
kind: Pod
metadata:
  name: glusterfs
spec:
  volumes:
  - name: data
    glusterfs:
      endpoints: glusterfs-cluster
      path: yinzhengjie-volume
      readOnly: false
  containers:
  - name: c1
    image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
    volumeMounts:
    - mountPath: "/yinzhengjie-glusterfs"
      name: data

[root@master241 volumes]#

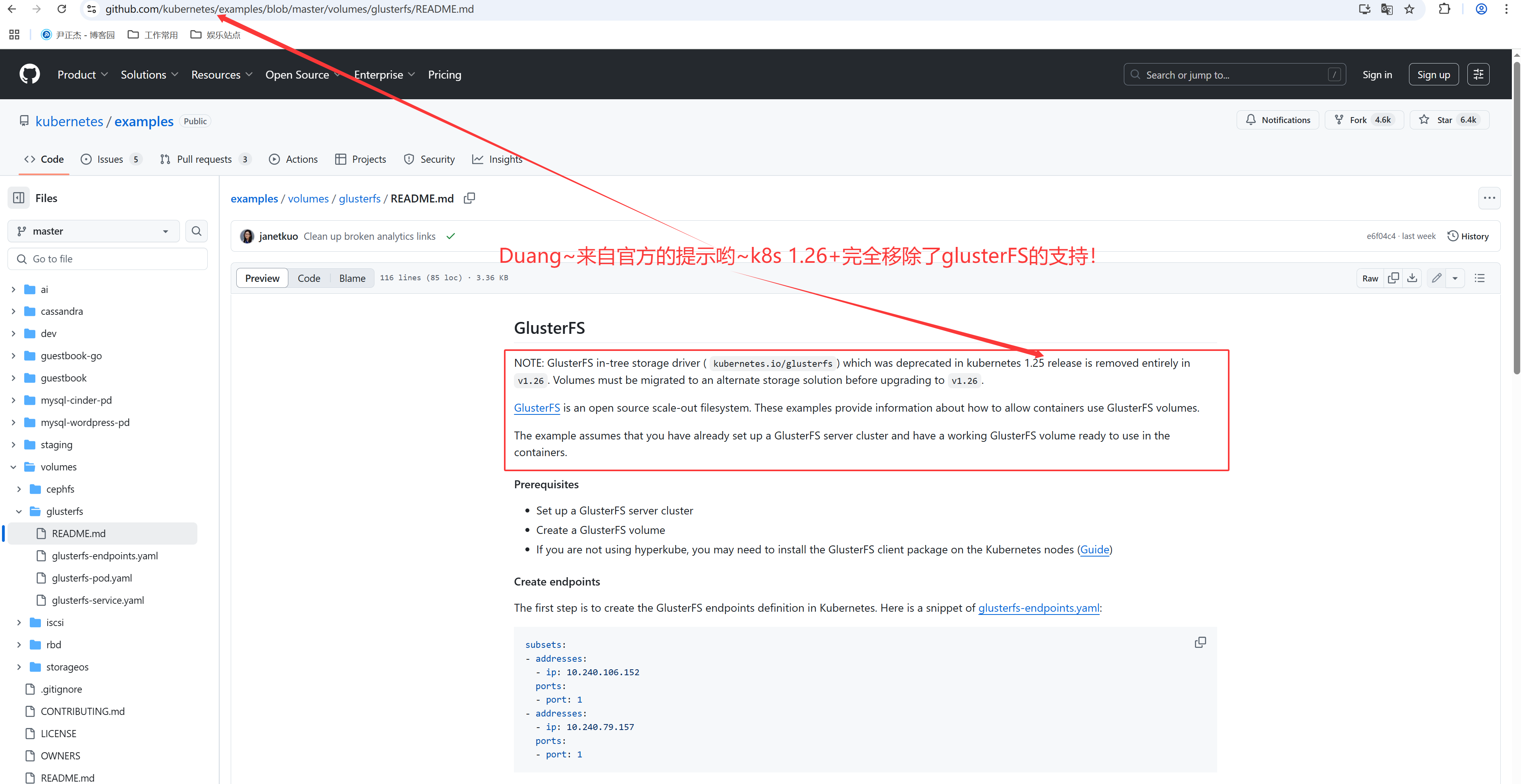

2. K8s 1.26+ removes GlusterFS support

Tip:

It is easy to see that newer K8s releases, namely K8s 1.26 and later, no longer support GlusterFS volumes.

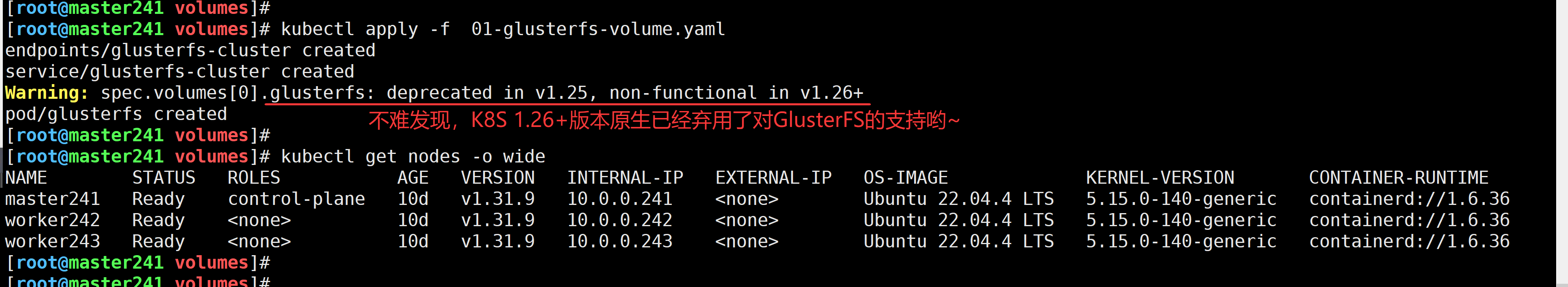

1. Create the resources

[root@master241 volumes]# kubectl apply -f 01-glusterfs-volume.yaml

endpoints/glusterfs-cluster created

service/glusterfs-cluster created

Warning: spec.volumes[0].glusterfs: deprecated in v1.25, non-functional in v1.26+

pod/glusterfs created

[root@master241 volumes]#

[root@master241 volumes]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master241 Ready control-plane 10d v1.31.9 10.0.0.241 <none> Ubuntu 22.04.4 LTS 5.15.0-140-generic containerd://1.6.36

worker242 Ready <none> 10d v1.31.9 10.0.0.242 <none> Ubuntu 22.04.4 LTS 5.15.0-140-generic containerd://1.6.36

worker243 Ready <none> 10d v1.31.9 10.0.0.243 <none> Ubuntu 22.04.4 LTS 5.15.0-140-generic containerd://1.6.36

[root@master241 volumes]#
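The warning ties the plugin's fate to the Kubernetes version: deprecated in v1.25, non-functional from v1.26. Since the volume is mounted by the kubelet, the kubelet version of each node is what matters. A minimal sketch of that version check; intree_glusterfs_supported is a hypothetical helper.

```shell
# intree_glusterfs_supported VERSION: succeed if a Kubernetes version string
# such as "v1.25.3" predates v1.26, i.e. still has the in-tree glusterfs plugin.
intree_glusterfs_supported() {
    ver=${1#v}          # drop the leading "v": "1.25.3"
    major=${ver%%.*}    # "1"
    rest=${ver#*.}      # "25.3"
    minor=${rest%%.*}   # "25" (any suffix after the next dot is ignored)
    [ "$major" -eq 1 ] && [ "$minor" -lt 26 ]
}
```

For example, `intree_glusterfs_supported "$(kubectl get node worker243 -o jsonpath='{.status.nodeInfo.kubeletVersion}')"` fails for the v1.31.9 nodes listed above, which matches the mount failure in the next step.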

2. The volume type is no longer supported

[root@master241 volumes]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

glusterfs 0/1 ContainerCreating 0 4m10s <none> worker243 <none> <none>

[root@master241 volumes]#

[root@master241 volumes]# kubectl describe pod glusterfs

Name: glusterfs

Namespace: default

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 4m14s default-scheduler Successfully assigned default/glusterfs to worker243

Warning FailedMount 8s (x20 over 4m14s) kubelet Unable to attach or mount volumes: unmounted volumes=[data], unattached volumes=[], failed to process volumes=[data]: failed to get Plugin from volumeSpec for volume "data" err=no volume plugin matched

[root@master241 volumes]#

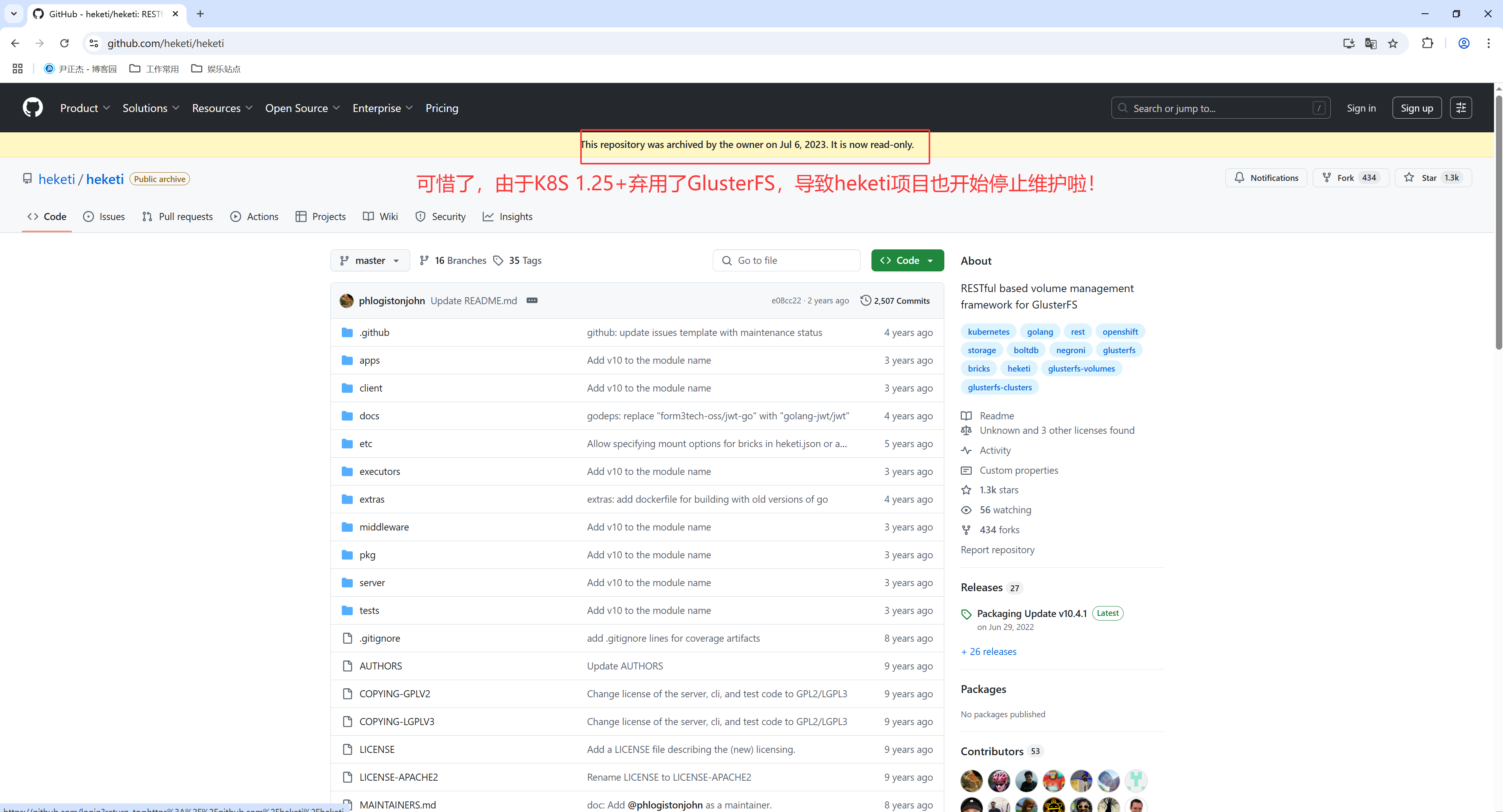

3. Is Heketi the bridge between GlusterFS and K8s?

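Heketi provides a RESTful management interface that can be used to manage the lifecycle of GlusterFS volumes. With Heketi, cloud services such as OpenStack Manila, Kubernetes, and OpenShift can dynamically provision GlusterFS volumes with any of the supported durability types.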

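Heketi also supports any number of GlusterFS clusters, allowing cloud services to provide network file storage without being limited to a single GlusterFS cluster.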

Put simply, Heketi exposes a RESTful API for GlusterFS and thereby builds a bridge between GlusterFS and K8s: a K8s cluster can request and manage GlusterFS-backed PVs through Heketi's RESTful API.

GitHub repository:

https://github.com/heketi/heketi

Unfortunately, the repository was archived by its owner on July 6, 2023, and is now read-only.

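This article is from cnblogs, author: 尹正杰. When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/18897690. Personal WeChat: "JasonYin2020" (when adding, please state where you found it and your purpose; consultations are paid).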

浙公网安备 33010602011771号
