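StatefulSet and Operator in Practice

Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is declined; violations will be pursued legally.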

I. StatefulSet in Practice

1. StatefulSet Overview

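The StatefulSet controller became generally available (apps/v1) in Kubernetes v1.9. To orchestrate stateful applications, it relies on several special design points:

- 1. Each Pod replica has a unique name identity, which depends on a dedicated Headless Service.

- 2. A Pod management policy, set through the "sts.spec.podManagementPolicy" field, defines how operations are applied to Pod replicas during creation, deletion, and scaling. The two policies are OrderedReady (the default) and Parallel.

  - OrderedReady: when creating or scaling up, Pod replicas are created one at a time in ordinal order, and the next Pod is created only after the previous one has become Ready; when deleting or scaling down, the affected Pod replicas are terminated one at a time in reverse order.

  - Parallel: creation and deletion of Pod replicas have no ordering requirement and can run concurrently.

- 3. Each Pod replica stores different state data, so each one needs a dedicated, stable Volume:

  - the Pod template is defined in "sts.spec.template";

  - alongside the Pod template, "sts.spec.volumeClaimTemplates" creates a dedicated PersistentVolumeClaim for each Pod replica, so that a PersistentVolume can be provisioned for it dynamically.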
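The ordering rules of OrderedReady can be illustrated with a small shell sketch. The helper names `create_order` and `delete_order` are made up for this illustration; they only print the ordinal sequence the policy implies and do not talk to a cluster.

```shell
# Illustrative only: prints the order in which Pod replicas are handled
# under OrderedReady. Both function names are hypothetical helpers.
# Function bodies use ( ... ) so that variables stay local to each call.

# Creation / scale-up: ordinals ascend from 0 to replicas-1.
create_order() (
  name=$1; replicas=$2; i=0; out=""
  while [ "$i" -lt "$replicas" ]; do
    out="${out:+$out }${name}-${i}"
    i=$((i + 1))
  done
  echo "$out"
)

# Deletion / scale-down: ordinals descend from replicas-1 to 0.
delete_order() (
  name=$1; replicas=$2; i=$((replicas - 1)); out=""
  while [ "$i" -ge 0 ]; do
    out="${out:+$out }${name}-${i}"
    i=$((i - 1))
  done
  echo "$out"
)

create_order sts-mysql 3   # sts-mysql-0 sts-mysql-1 sts-mysql-2
delete_order sts-mysql 3   # sts-mysql-2 sts-mysql-1 sts-mysql-0
```

With Parallel, the same set of Pods is involved, but the controller does not wait for one Pod before acting on the next.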

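What StatefulSet does: it orchestrates stateful applications.

- 1. A stateful application keeps client data in its session and may use that data when handling the client's next request.

- 2. Common kinds of application state: session state, connection state, configuration state, cluster state, persistent state, and so on.

- 3. A large application usually has many functional modules, which are typically designed as either stateful or stateless modules.

- 4. Business-logic modules are usually designed to be stateless; they keep their state data in stateful middleware services such as message queues, databases, or caches.

- 5. Stateless business-logic modules are easy to scale horizontally, whereas each stateful backend poses its own difficulties.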

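Dedicated name identity of Pod replicas:

- 1. Every StatefulSet object strictly depends on a dedicated Headless Service object.

- 2. Each Pod replica in a StatefulSet has a unique name identity:

  - the host part (the Pod name) has the form "$(statefulset_name)-$(ordinal)";

  - the domain part has the form "$(service_name).$(namespace).svc.cluster.local", assuming the default cluster domain "cluster.local".

- 3. The cluster DNS (for example, CoreDNS) resolves each Pod's name identity directly to its Pod IP.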
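As a quick illustration of the naming scheme, the sketch below assembles the DNS name of one Pod. `pod_fqdn` is a hypothetical helper, and "cluster.local" is assumed as the cluster domain unless a fifth argument overrides it.

```shell
# Builds the stable DNS name of a StatefulSet Pod.
# pod_fqdn is a hypothetical helper, not part of kubectl.
# Arguments: StatefulSet name, ordinal, headless Service name, namespace,
# and optionally the cluster domain (assumed to be cluster.local).
pod_fqdn() {
  echo "${1}-${2}.${3}.${4}.svc.${5:-cluster.local}"
}

pod_fqdn sts-mysql 0 mysql default
# sts-mysql-0.mysql.default.svc.cluster.local
```

From another Pod in the same namespace, the shorter form "sts-mysql-0.mysql" normally resolves as well, because the namespace's search domains are appended automatically.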

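volumeClaimTemplates:

- 1. When a Pod replica is created, it is bound to its own dedicated PVC.

- 2. PVC names follow a fixed format, "$(volumeClaimTemplate_name)-$(pod_name)", which ties each PVC to a specific Pod replica of the StatefulSet.

- 3. The PVC can bind to either a statically or a dynamically provisioned PV.

- 4. By default, deleting a Pod (for example, when scaling down) does not delete its PVC.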
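To make the PVC naming and the scale-down behaviour concrete, here is a minimal sketch. `leftover_pvcs` is a hypothetical helper that lists the PVC names that would remain after scaling down, assuming the default retention behaviour described above.

```shell
# Lists the PVCs that stay behind after scaling a StatefulSet down.
# leftover_pvcs is a hypothetical helper; it only derives names and
# does not query a cluster.
# Arguments: claim template name, StatefulSet name, old replicas, new replicas.
leftover_pvcs() (
  tmpl=$1; sts=$2; i=$4; old=$3; out=""
  # Pods with ordinals new..old-1 are removed; their PVCs keep existing.
  while [ "$i" -lt "$old" ]; do
    out="${out:+$out }${tmpl}-${sts}-${i}"
    i=$((i + 1))
  done
  echo "$out"
)

leftover_pvcs data sts-mysql 3 1
# data-sts-mysql-1 data-sts-mysql-2
```

If the data is no longer needed, those claims have to be removed explicitly, for example with "kubectl delete pvc data-sts-mysql-2"; scaling back up reuses the existing claims instead.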

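2. StatefulSet Update Strategies

Update strategies (the "sts.spec.updateStrategy.type" field):

- RollingUpdate: rolling update, triggered automatically when the Pod template changes (the default).

- OnDelete: a Pod is updated only when it is deleted, so the update is triggered manually.

Rolling update

Configuration options:

maxUnavailable:

The maximum number of Pods that may be unavailable at a time during the update, which caps how many replicas are updated per batch. It defaults to 1, and the field only takes effect on clusters where the MaxUnavailableStatefulSet feature gate is enabled.

partition <integer>:

The ordinal at which the update is partitioned: Pods whose ordinal is greater than or equal to this number are updated, and Pods with a smaller ordinal are left untouched. The default is 0, which updates all replicas.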
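The effect of partition can be sketched as follows. `pods_to_update` is a hypothetical helper that prints, in rolling-update order (highest ordinal first), the Pods that a given partition value would allow to be updated.

```shell
# Lists the Pods a rolling update would touch for a given partition.
# pods_to_update is a hypothetical helper; nothing is sent to a cluster.
# Arguments: StatefulSet name, replicas, partition (defaults to 0).
pods_to_update() (
  name=$1; replicas=$2; partition=${3:-0}
  i=$((replicas - 1)); out=""
  # Only ordinals >= partition are updated, from the highest downwards.
  while [ "$i" -ge "$partition" ]; do
    out="${out:+$out }${name}-${i}"
    i=$((i - 1))
  done
  echo "$out"
)

pods_to_update sts-mysql 3 2   # sts-mysql-2
pods_to_update sts-mysql 3     # sts-mysql-2 sts-mysql-1 sts-mysql-0
```

Setting partition to the highest ordinal first and lowering it step by step is a common way to run a canary-style rollout.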

Ways to trigger an update

# kubectl set image (-f FILENAME | TYPE NAME) CONTAINER_NAME_1=CONTAINER_IMAGE_1 ...

# kubectl patch (-f FILENAME | TYPE NAME) [-p PATCH|--patch-file FILE] [options]

# Edit the original manifest file directly, then run "kubectl apply" to trigger the update
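As a hedged illustration of how these commands fit together, the sketch below builds the patch body for a partitioned rolling update. `partition_patch` is a hypothetical helper; the kubectl invocations are shown only as comments, and the image tag in them is a placeholder rather than a recommendation.

```shell
# Prints a patch body that selects RollingUpdate and sets the partition.
# partition_patch is a hypothetical helper; its argument is the partition.
partition_patch() {
  printf '{"spec":{"updateStrategy":{"type":"RollingUpdate","rollingUpdate":{"partition":%d}}}}\n' "$1"
}

partition_patch 2

# Against a real cluster the pieces could be combined roughly like this
# (not executed here; 8.0.37-oracle is a placeholder tag):
#   kubectl patch sts sts-mysql -p "$(partition_patch 2)"
#   kubectl set image sts/sts-mysql mysql=mysql:8.0.37-oracle
#   kubectl rollout status sts/sts-mysql
```

Patching the partition before changing the image keeps the lower-ordinal Pods on the old version until the partition is lowered again.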

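3. Limitations of StatefulSet

Stateful, distributed applications differ from one another, sometimes completely, in the steps required to start, scale up, scale down, and otherwise operate them, so StatefulSet can only provide a basic orchestration framework.

The management operations that a particular stateful application needs must be implemented by users in their own code.

4. StatefulSet Example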

[root@master231 yinzhengjie-k8s]# cat 07-sts-cm-svc.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-conf
data:
  # Configuration file for the primary; the primary must enable binary logging
  primary.cnf: |
    [mysql]
    default-character-set=utf8mb4
    [mysqld]
    log-bin
    character-set-server=utf8mb4
    [client]
    default-character-set=utf8mb4
  # Configuration file for the replicas; replicas do not need binary logging
  replica.cnf: |
    [mysql]
    default-character-set=utf8mb4
    [mysqld]
    super-read-only
    character-set-server=utf8mb4
    [client]
    default-character-set=utf8mb4
---
# Headless Service that gives each Pod of the StatefulSet a stable DNS name
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  clusterIP: None
  selector:
    app: mysql
---
# Regular ClusterIP Service that load-balances read traffic across all Pods
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  selector:
    app: mysql
---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sts-mysql
spec:
  selector:
    matchLabels:
      app: mysql
  serviceName: mysql
  replicas: 3
  template:
    metadata:
      labels:
        app: mysql
    spec:
      initContainers:
      - name: init-mysql
        image: mysql:8.0.36-oracle
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Generate mysql server-id from pod ordinal index.
          [[ $(cat /proc/sys/kernel/hostname) =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          echo [mysqld] > /mnt/conf.d/server-id.cnf
          # Add an offset to avoid reserved server-id=0 value.
          echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
          # Copy appropriate conf.d files from config-map to emptyDir.
          if [[ $ordinal -eq 0 ]]; then
            cp /mnt/config-map/primary.cnf /mnt/conf.d/
          else
            cp /mnt/config-map/replica.cnf /mnt/conf.d/
          fi
        volumeMounts:
        - name: conf
          mountPath: /mnt/conf.d
        - name: config-map
          mountPath: /mnt/config-map
      - name: clone-mysql
        # This container needs ncat, xbstream and xtrabackup to be available in its image.
        image: mysql:8.0.36-oracle
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Skip the clone if data already exists.
          [[ -d /var/lib/mysql/mysql ]] && exit 0
          # Skip the clone on primary (ordinal index 0).
          [[ $(cat /proc/sys/kernel/hostname) =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          [[ $ordinal -eq 0 ]] && exit 0
          # Clone data from previous peer. Pod names are derived from the
          # StatefulSet name (sts-mysql), not from the Service name.
          ncat --recv-only sts-mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
          # Prepare the backup.
          xtrabackup --prepare --target-dir=/var/lib/mysql
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d

      containers:
      - name: mysql
        image: mysql:8.0.36-oracle
        env:
        - name: LANG
          value: "C.UTF-8"
        - name: MYSQL_ALLOW_EMPTY_PASSWORD
          value: "1"
        ports:
        - name: mysql
          containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
        livenessProbe:
          exec:
            command: ["mysqladmin", "ping"]
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
        readinessProbe:
          exec:
            # Check we can execute queries over TCP (skip-networking is off).
            command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
          initialDelaySeconds: 5
          periodSeconds: 2
          timeoutSeconds: 1

      - name: xtrabackup
        # This container needs ncat and xtrabackup to be available in its image.
        image: mysql:8.0.36-oracle
        ports:
        - name: xtrabackup
          containerPort: 3307
        command:
        - bash
        - "-c"
        - |
          set -ex
          cd /var/lib/mysql

          # Determine binlog position of cloned data, if any.
          if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then
            # XtraBackup already generated a partial "CHANGE MASTER TO" query
            # because we're cloning from an existing replica. (Need to remove the trailing semicolon!)
            cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in
            # Ignore xtrabackup_binlog_info in this case (it's useless).
            rm -f xtrabackup_slave_info xtrabackup_binlog_info
          elif [[ -f xtrabackup_binlog_info ]]; then
            # We're cloning directly from primary. Parse binlog position.
            [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
            rm -f xtrabackup_binlog_info xtrabackup_slave_info
            echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                  MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
          fi

          # Check if we need to complete a clone by starting replication.
          if [[ -f change_master_to.sql.in ]]; then
            echo "Waiting for mysqld to be ready (accepting connections)"
            until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done

            echo "Initializing replication from clone position"
            # The primary is Pod sts-mysql-0, reached through the headless Service "mysql".
            mysql -h 127.0.0.1 \
                  -e "$(<change_master_to.sql.in), \
                          MASTER_HOST='sts-mysql-0.mysql', \
                          MASTER_USER='root', \
                          MASTER_PASSWORD='', \
                          MASTER_CONNECT_RETRY=10; \
                        START SLAVE;" || exit 1
            # In case of container restart, attempt this at-most-once.
            mv change_master_to.sql.in change_master_to.sql.orig
          fi

          # Start a server to send backups when requested by peers.
          exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
            "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d

      volumes:
      - name: conf
        emptyDir: {}
      - name: config-map
        configMap:
          name: mysql-conf
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: "yinzhengjie-local-sc"
      resources:
        requests:
          storage: 10Gi
[root@master231 yinzhengjie-k8s]#
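The ordinal handling used by the init containers can be tried outside a cluster with the sketch below. It mirrors the logic of the init-mysql script but uses plain POSIX parameter expansion instead of a Bash regex; the helper names `ordinal_of`, `server_id_of`, and `config_file_of` are hypothetical.

```shell
# Extracts the ordinal from a Pod hostname such as sts-mysql-2.
# All three helpers are hypothetical and exist only for illustration.
ordinal_of() {
  # Strip everything up to the last "-" and require a purely numeric rest.
  ord=${1##*-}
  case $ord in
    ''|*[!0-9]*) return 1 ;;
  esac
  echo "$ord"
}

# Derives the MySQL server-id; the offset avoids the reserved value 0.
server_id_of() {
  ord=$(ordinal_of "$1") || return 1
  echo $((100 + ord))
}

# Ordinal 0 is treated as the primary, every other Pod as a replica.
config_file_of() {
  ord=$(ordinal_of "$1") || return 1
  if [ "$ord" -eq 0 ]; then echo primary.cnf; else echo replica.cnf; fi
}

server_id_of sts-mysql-2     # 102
config_file_of sts-mysql-0   # primary.cnf
config_file_of sts-mysql-1   # replica.cnf
```

Because the role is derived purely from the ordinal, the topology is fixed: sts-mysql-0 is always the primary, which is one reason the text above describes StatefulSet as only a basic framework.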

5. StatefulSet Reference Links

https://kubernetes.io/zh-cn/docs/tutorials/stateful-application/cassandra/#%E4%BD%BF%E7%94%A8-statefulset-%E5%88%9B%E5%BB%BA-cassandra-ring

https://kubernetes.io/zh-cn/docs/tutorials/stateful-application/zookeeper/

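II. Introduction to Operators

1. Operator Overview

The Operator pattern was developed by CoreOS. An Operator is an enhanced controller; put simply, Operator = Controller + CRD.

An Operator extends the Kubernetes API and uses that extension to manage complex applications.

- 1. An Operator is a software extension to Kubernetes that uses custom resource types to automate the management of an application and its components, thereby extending the cluster's behavior.

- 2. It manages the application and its components through custom resources (for example, resources defined by a CRD).

- 3. It treats the application as a single object and provides automated, declarative management operations for it, such as deployment, configuration, upgrade, backup, failover, and disaster recovery.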

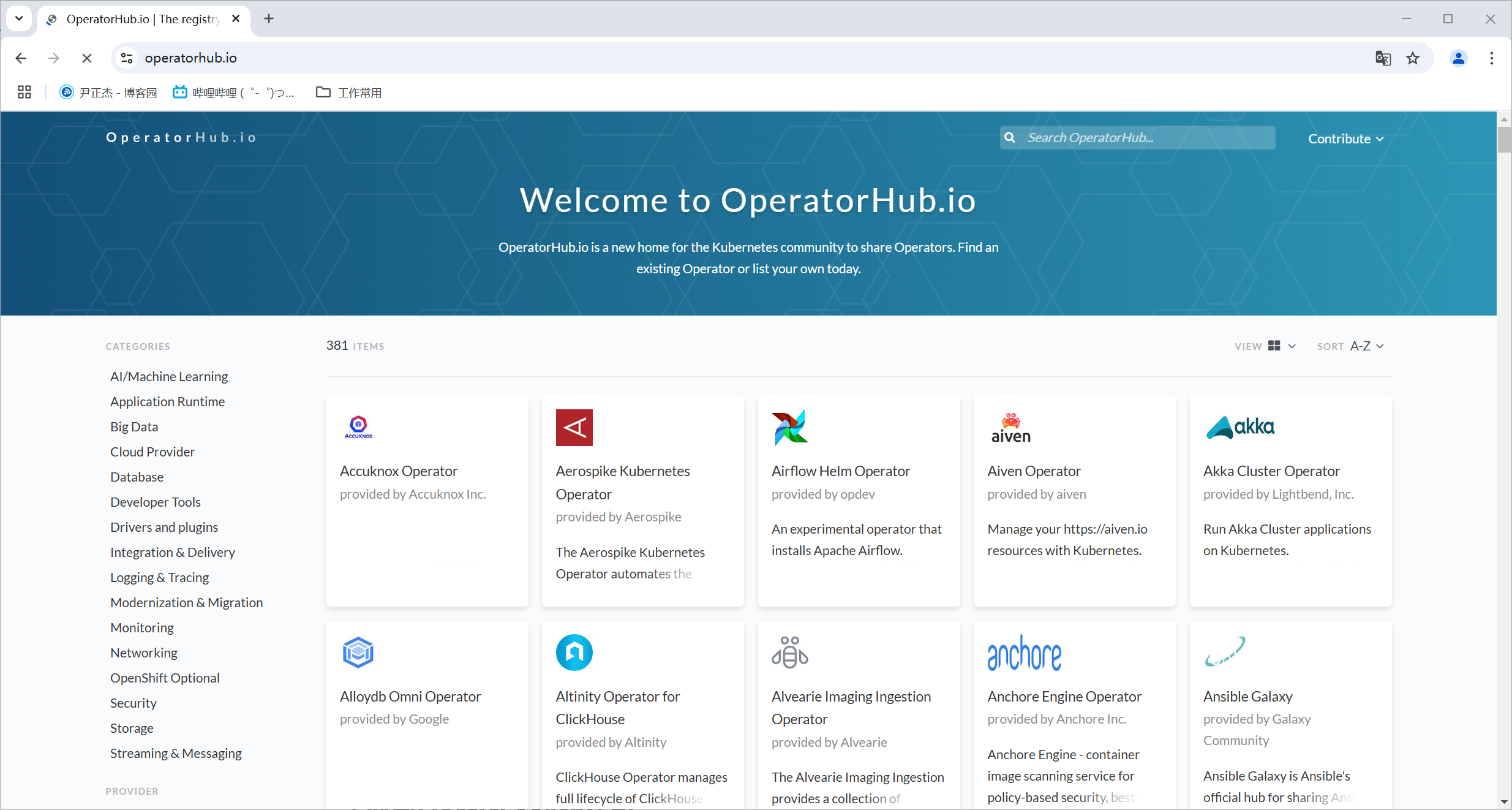

Operators are also published through registries of their own; a typical example is shown in the figure above.

https://operatorhub.io/

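Workflow for running a stateful application with a dedicated Operator:

- 1. Deploy the Operator and its dedicated resource types.

- 2. Use those resource types to declare an orchestration request for the stateful application.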

2. Deploying an Elasticsearch Cluster

Reference links:

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-deploy-eck.html

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-deploy-elasticsearch.html

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-managing-compute-resources.html

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-node-configuration.html

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-volume-claim-templates.html

0. Prerequisites

Deploy a StorageClass that supports dynamic provisioning.

1. Create the custom resource definitions

# kubectl create -f https://download.elastic.co/downloads/eck/2.16.1/crds.yaml

2. Install the Operator and its RBAC rules

# kubectl apply -f https://download.elastic.co/downloads/eck/2.16.1/operator.yaml

3. Check that the Operator was deployed successfully

[root@master231 yinzhengjie-k8s]# kubectl get pods -n elastic-system -o wide
NAME                 READY   STATUS    RESTARTS   AGE   IP               NODE        NOMINATED NODE   READINESS GATES
elastic-operator-0   1/1     Running   0          77s   10.100.203.135   worker232   <none>           <none>

[root@master231 yinzhengjie-k8s]#

4. Deploy the Elasticsearch cluster

cat <<EOF | kubectl apply -f -
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: quickstart
spec:
  version: 8.17.2
  nodeSets:
  - name: masters
    count: 3
    config:
      node.store.allow_mmap: false
      node.roles: ["master"]
      xpack.ml.enabled: true
  - name: data
    count: 10
    config:
      node.roles: ["data", "ingest", "ml", "transform"]
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 5Gi
        storageClassName: standard
    podTemplate:
      spec:
        volumes:
        - name: elasticsearch-data
          emptyDir: {}
        containers:
        - name: elasticsearch
          env:
          - name: ES_JAVA_OPTS
            value: -Xms256m -Xmx256m
          resources:
            requests:
              memory: 200Mi
              cpu: 0.5
            limits:
              memory: 500Mi
EOF
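Once the resource is applied, the Operator is expected to create one StatefulSet per nodeSet, with Pods named after the "<cluster>-es-<nodeSet>-<ordinal>" convention used by ECK. The sketch below derives those names so they can be compared with what the cluster reports; `eck_pods` is a hypothetical helper, and the kubectl commands are listed only as comments.

```shell
# Lists the Pod names expected for one nodeSet of an ECK-managed cluster.
# eck_pods is a hypothetical helper; it does not query the cluster.
# Arguments: Elasticsearch resource name, nodeSet name, node count.
eck_pods() (
  cluster=$1; nodeset=$2; count=$3; i=0
  while [ "$i" -lt "$count" ]; do
    echo "${cluster}-es-${nodeset}-${i}"
    i=$((i + 1))
  done
)

eck_pods quickstart masters 3
# quickstart-es-masters-0
# quickstart-es-masters-1
# quickstart-es-masters-2

# Commands typically run next against the cluster (not executed here):
#   kubectl get elasticsearch quickstart
#   kubectl get pods -l elasticsearch.k8s.elastic.co/cluster-name=quickstart
#   kubectl get secret quickstart-es-elastic-user -o go-template='{{.data.elastic | base64decode}}'
```

The first command reports the cluster's health and phase, and the last one prints the password that ECK generates for the built-in "elastic" user.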

3. Deploying Kibana (example)

Reference link:

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-kibana-es.html

4. Deploying Filebeat (example)

Reference link:

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-beat-quickstart.html

5. Deploying Logstash (example)

Reference link:

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-logstash-quickstart.html

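This article comes from cnblogs (博客园), author: Yin Zhengjie (尹正杰). When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/18720884. Personal WeChat: "JasonYin2020" (when adding, please state where you found it and your purpose; paid service).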
