A Hands-On Guide to a Highly Available radosgw Object Storage Gateway

I. Object storage system overview

1. Object storage gateway overview

The Ceph Object Gateway can store its data in the same Ceph storage cluster that holds data from CephFS clients or Ceph RBD clients.

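An object is the basic unit of data storage in an object storage system. Each object combines data with a set of data attributes, and those attributes can be set according to application requirements, including data distribution and quality of service.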

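Each object maintains its own attributes, which simplifies management of the storage system. Objects can vary in size and can even contain an entire data structure, such as a file or a database table entry. When a large file is uploaded with s3cmd, it is split into chunks of at most 15 MB by default.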

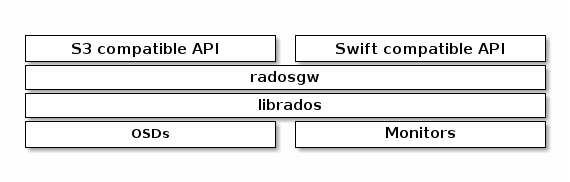

Ceph object storage uses the Ceph Object Gateway daemon (RADOS Gateway, rgw for short), an HTTP server that interacts with the Ceph storage cluster.

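Ceph RGW is built on librados and provides applications with a RESTful object storage interface. Civetweb was its default embedded web server in older releases; since Nautilus the default frontend is Beast.

In the N release (Nautilus), the gateway serves requests on port 7480 by default, whereas the R release (18.2.4) used port 80. To use a custom port, modify the Ceph configuration file.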

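- Starting with version 0.80 (Firefly, 2014-05-01~2016-04-01), Ceph stopped using Apache and FastCGI to serve radosgw;

- Instead, Civetweb, embedded in the ceph-radosgw process, became the default. This implementation is lighter and simpler, but Civetweb did not support SSL until Ceph 11.0.1 Kraken (2017-01-01~2017-08-01).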

Recommended reading:

https://docs.ceph.com/en/nautilus/radosgw/

https://docs.ceph.com/en/nautilus/radosgw/bucketpolicy/

https://docs.aws.amazon.com/zh_cn/AmazonS3/latest/userguide/bucketnamingrules.html

https://www.s3express.com/help/help.html

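2. Core resources of an object storage system

Although storage solutions differ in design and implementation, most object storage systems expose much the same core resource types.

In general, the core resources of an object storage system are users (User), buckets (Bucket), and objects (Object). They relate to each other as follows:

- 1. A User stores Objects in a Bucket on the storage system;

- 2. A bucket belongs to a user and holds objects; one bucket can store many objects;

- 3. One user can own multiple buckets, and different users may use buckets with the same name;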

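3. Interfaces supported by Ceph RGW

RGW needs its own dedicated daemon in order to work. It is not a mandatory interface: RGW instances only need to be deployed when the S3- and Swift-compatible RESTful interfaces are required. When RGW is created, it automatically initializes its own storage pools.

As shown in the figure above, because RGW provides interfaces compatible with OpenStack Swift and Amazon S3, the Ceph Object Gateway has its own user management.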

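- Amazon S3:

Compatible with the Amazon S3 RESTful API; geared toward command-line operation.

It provides user, bucket, and object, representing the user, the bucket, and the object respectively; a bucket belongs to a user.

The user name therefore serves as the namespace for buckets, so different users may use the same bucket name.

- OpenStack Swift:

Compatible with the OpenStack Swift API; geared toward use from application code.

It provides user, container, and object, corresponding to the user, the bucket, and the object. It additionally gives user a parent component, account, which represents a project or tenant.

An account can therefore contain one or more users, which share the same set of containers; the account provides the namespace for those containers.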

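- RadosGW:

It provides user, subuser, bucket, and object. Its user corresponds to the S3 user and its subuser to the Swift user, but neither user nor subuser provides a namespace for buckets, so buckets belonging to different users cannot share a name.

However, starting with the Jewel release (10.2.11, 2016-04-01~2018-07-01), RadosGW introduced the tenant, which provides a namespace for users and buckets; it is an optional component.

Before Jewel, all radosgw users lived in a single namespace: every user ID had to be unique, and even buckets owned by different users could not use the same bucket ID.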
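The namespace rule described above can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the `Gateway` class, its `create_bucket` method, and the user and tenant names are hypothetical and are not part of RadosGW. It shows the idea that a bucket is identified by the pair (tenant, bucket name), with an empty tenant standing in for the single shared namespace.

```python
from dataclasses import dataclass, field


@dataclass
class Gateway:
    """Toy bucket registry; hypothetical, not RadosGW's real data model."""
    # Maps (tenant, bucket_name) -> ID of the owning user.
    buckets: dict = field(default_factory=dict)

    def create_bucket(self, user: str, name: str, tenant: str = "") -> None:
        # An empty tenant models the single shared namespace.
        key = (tenant, name)
        if key in self.buckets:
            raise ValueError(f"bucket {name!r} already exists in tenant {tenant!r}")
        self.buckets[key] = user


gw = Gateway()
gw.create_bucket("alice", "photos")                 # shared namespace
gw.create_bucket("bob", "photos", tenant="team-b")  # same name, other tenant: allowed

try:
    gw.create_bucket("carol", "photos")             # same name, same namespace: rejected
except ValueError as err:
    print(err)
```

Without a tenant, the second user to ask for an existing bucket name is refused even though the owners differ; with a tenant, the same name can be reused.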

II. Hands-on: deploying radosgw for high availability

1 Check the cluster status before deploying

[root@harbor250 ceph-cluster]# ceph -s

cluster:

id: 5821e29c-326d-434d-a5b6-c492527eeaad

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 4h)

mgr: ceph142(active, since 4h), standbys: ceph141, ceph143

mds: yinzhengjie-linux-cephfs:2 {0=ceph143=up:active,1=ceph141=up:active} 1 up:standby

osd: 7 osds: 7 up (since 4h), 7 in (since 23h)

data:

pools: 5 pools, 256 pgs

objects: 104 objects, 100 MiB

usage: 7.8 GiB used, 1.9 TiB / 2.0 TiB avail

pgs: 256 active+clean

[root@harbor250 ceph-cluster]#

2 Deploy the rgw component

[root@harbor250 ceph-cluster]# pwd

/yinzhengjie/softwares/ceph-cluster

[root@harbor250 ceph-cluster]#

[root@harbor250 ceph-cluster]# ceph-deploy rgw create ceph141 ceph142 ceph143

...

[ceph141][INFO ] Running command: systemctl start ceph-radosgw@rgw.ceph141

[ceph141][INFO ] Running command: systemctl enable ceph.target

[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph141 and default port 7480

...

[ceph142][INFO ] Running command: systemctl start ceph-radosgw@rgw.ceph142

[ceph142][INFO ] Running command: systemctl enable ceph.target

[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph142 and default port 7480

...

[ceph143][INFO ] Running command: systemctl start ceph-radosgw@rgw.ceph143

[ceph143][INFO ] Running command: systemctl enable ceph.target

[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph143 and default port 7480

[root@harbor250 ceph-cluster]#

3 Check the cluster status

[root@ceph141 ~]# ceph -s

cluster:

id: 5821e29c-326d-434d-a5b6-c492527eeaad

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 4h)

mgr: ceph142(active, since 4h), standbys: ceph141, ceph143

mds: yinzhengjie-linux-cephfs:2 {0=ceph143=up:active,1=ceph141=up:active} 1 up:standby

osd: 7 osds: 7 up (since 4h), 7 in (since 23h)

rgw: 3 daemons active (ceph141, ceph142, ceph143)

task status:

data:

pools: 9 pools, 384 pgs

objects: 293 objects, 100 MiB

usage: 7.8 GiB used, 1.9 TiB / 2.0 TiB avail

pgs: 384 active+clean

[root@ceph141 ~]#

4 Access the object storage gateway in a browser

http://10.0.0.141:7480/

http://10.0.0.142:7480/

http://10.0.0.143:7480/

5 View the storage pools that rgw creates by default

[root@ceph141 ~]# ceph osd pool ls

...

.rgw.root

default.rgw.control

default.rgw.meta

default.rgw.log

[root@ceph141 ~]#

[root@ceph141 ~]# radosgw-admin zone get --rgw-zone=default --rgw-zonegroup=default

{

"id": "3841e9b3-21d3-42fb-b7b0-ccbf325c7447",

"name": "default",

"domain_root": "default.rgw.meta:root",

"control_pool": "default.rgw.control",

"gc_pool": "default.rgw.log:gc",

"lc_pool": "default.rgw.log:lc",

"log_pool": "default.rgw.log",

"intent_log_pool": "default.rgw.log:intent",

"usage_log_pool": "default.rgw.log:usage",

"reshard_pool": "default.rgw.log:reshard",

"user_keys_pool": "default.rgw.meta:users.keys",

"user_email_pool": "default.rgw.meta:users.email",

"user_swift_pool": "default.rgw.meta:users.swift",

"user_uid_pool": "default.rgw.meta:users.uid",

"otp_pool": "default.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "default.rgw.buckets.index",

"storage_classes": {

"STANDARD": {

"data_pool": "default.rgw.buckets.data"

}

},

"data_extra_pool": "default.rgw.buckets.non-ec",

"index_type": 0

}

}

],

"metadata_heap": "",

"realm_id": ""

}

[root@ceph141 ~]#
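Several values in the zone output have the form `pool:namespace`, so a handful of RADOS pools back many logical uses. The following sketch, assuming only an excerpt of the JSON above and a hypothetical helper named `pool_names`, extracts the distinct pool names.

```python
import json

# Excerpt of the `radosgw-admin zone get` output shown above.
zone_json = """
{
  "name": "default",
  "domain_root": "default.rgw.meta:root",
  "control_pool": "default.rgw.control",
  "gc_pool": "default.rgw.log:gc",
  "log_pool": "default.rgw.log",
  "user_uid_pool": "default.rgw.meta:users.uid",
  "otp_pool": "default.rgw.otp"
}
"""


def pool_names(zone: dict) -> list:
    """Return distinct pool names, dropping any ':namespace' suffix."""
    names = set()
    for key, value in zone.items():
        # Only look at top-level keys that hold a pool reference.
        if (key.endswith("_pool") or key == "domain_root") and value:
            names.add(value.split(":", 1)[0])
    return sorted(names)


pools = pool_names(json.loads(zone_json))
print(pools)
```

The nested `placement_pools` entries are deliberately left out of this sketch; they would need a separate walk over the list.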

III. Verifying access by uploading a video with s3cmd

1 Install the s3cmd tool

[root@ceph141 ~]# echo 10.0.0.141 www.yinzhengjie.com >> /etc/hosts

[root@ceph141 ~]#

[root@ceph141 ~]# yum -y install s3cmd

2 Create an rgw account

[root@ceph141 ~]# radosgw-admin user create --uid "jasonyin" --display-name "尹正杰"

{

"user_id": "jasonyin",

"display_name": "尹正杰",

"email": "",

"suspended": 0,

"max_buckets": 1000,

"subusers": [],

"keys": [

{

"user": "jasonyin",

"access_key": "TUU92NW7FLT1F9Q9U1XP",

"secret_key": "OMoGtIafUfV8GvwzsSJYHhaLZGmRJaq7WElww9a5"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}

[root@ceph141 ~]#

3 Set up the s3cmd environment and generate the "/root/.s3cfg" configuration file

[root@ceph141 ~]# s3cmd --configure

Enter new values or accept defaults in brackets with Enter.

Refer to user manual for detailed description of all options.

Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.

Access Key: EF8F7EZ7T0LW37IHEUL2 # access_key of the rgw account

Secret Key: Hn5zqVPD2PYh1T1gbf5n3HYrlzx33ECS7Gz4Wz4M # secret_key of the rgw account

Default Region [US]: # just press Enter

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.

S3 Endpoint [s3.amazonaws.com]: www.yinzhengjie.com:7480 # address used to reach rgw

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used

if the target S3 system supports dns based buckets.

DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: www.yinzhengjie.com:7480/%(bucket) # set a custom template

Encryption password is used to protect your files from reading

by unauthorized persons while in transfer to S3

Encryption password: # files are not encrypted; just press Enter

Path to GPG program [/usr/bin/gpg]: # path to a custom gpg program; just press Enter

When using secure HTTPS protocol all communication with Amazon S3

servers is protected from 3rd party eavesdropping. This method is

slower than plain HTTP, and can only be proxied with Python 2.7 or newer

Use HTTPS protocol [Yes]: No # answer No unless your rgw serves HTTPS

On some networks all internet access must go through a HTTP proxy.

Try setting it here if you can't connect to S3 directly

HTTP Proxy server name: # just press Enter

New settings:

Access Key: EF8F7EZ7T0LW37IHEUL2

Secret Key: Hn5zqVPD2PYh1T1gbf5n3HYrlzx33ECS7Gz4Wz4M

Default Region: US

S3 Endpoint: www.yinzhengjie.com:7480

DNS-style bucket+hostname:port template for accessing a bucket: www.yinzhengjie.com:7480/%(bucket)

Encryption password:

Path to GPG program: /usr/bin/gpg

Use HTTPS protocol: False

HTTP Proxy server name:

HTTP Proxy server port: 0

Test access with supplied credentials? [Y/n] y # enter y if the settings above look correct

Please wait, attempting to list all buckets...

Success. Your access key and secret key worked fine :-)

Now verifying that encryption works...

Not configured. Never mind.

Save settings? [y/N] y # save the configuration

Configuration saved to '/root/.s3cfg' # the configuration is written to "/root/.s3cfg"

[root@ceph141 ~]#
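The interactive session above ends by writing an INI-style file. As a rough illustration of the handful of settings that matter for an rgw endpoint, the sketch below renders an equivalent `[default]` section with Python's `configparser`. The key values are placeholders, and a real `.s3cfg` written by s3cmd contains many more options than the five shown here.

```python
import configparser
import io

# interpolation=None keeps the literal "%(bucket)" template from being expanded.
cfg = configparser.ConfigParser(interpolation=None)
cfg["default"] = {
    "access_key": "YOUR_ACCESS_KEY",  # placeholder, not a real key
    "secret_key": "YOUR_SECRET_KEY",  # placeholder, not a real key
    "host_base": "www.yinzhengjie.com:7480",
    "host_bucket": "www.yinzhengjie.com:7480/%(bucket)",
    "use_https": "False",
}

# Render to a string instead of touching the real ~/.s3cfg.
buf = io.StringIO()
cfg.write(buf)
s3cfg_text = buf.getvalue()
print(s3cfg_text)
```

Rendering to a string first makes it easy to review the result before deciding whether to write it to disk.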

4 Create a bucket

[root@ceph141 ~]# s3cmd mb s3://yinzhengjie-bucket

Bucket 's3://yinzhengjie-bucket/' created

[root@ceph141 ~]#

5 List buckets

[root@ceph141 ~]# s3cmd ls

2024-02-02 08:20 s3://yinzhengjie-bucket

[root@ceph141 ~]#

6 Upload data to the bucket with s3cmd

[root@ceph141 ~]# ll 02-ceph用户管理-用户格式及权限说明.mp4

-rw-r--r-- 1 root root 65575408 Feb 2 16:22 02-ceph用户管理-用户格式及权限说明.mp4

[root@ceph141 ~]#

[root@ceph141 ~]# ll -h 02-ceph用户管理-用户格式及权限说明.mp4

-rw-r--r-- 1 root root 63M Feb 2 16:22 02-ceph用户管理-用户格式及权限说明.mp4

[root@ceph141 ~]#

[root@ceph141 ~]# s3cmd put 02-ceph用户管理-用户格式及权限说明.mp4 s3://yinzhengjie-bucket

upload: '02-ceph用户管理-用户格式及权限说明.mp4' -> 's3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4' [part 1 of 5, 15MB] [1 of 1]

15728640 of 15728640 100% in 2s 5.95 MB/s done

upload: '02-ceph用户管理-用户格式及权限说明.mp4' -> 's3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4' [part 2 of 5, 15MB] [1 of 1]

15728640 of 15728640 100% in 0s 35.09 MB/s done

upload: '02-ceph用户管理-用户格式及权限说明.mp4' -> 's3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4' [part 3 of 5, 15MB] [1 of 1]

15728640 of 15728640 100% in 0s 32.56 MB/s done

upload: '02-ceph用户管理-用户格式及权限说明.mp4' -> 's3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4' [part 4 of 5, 15MB] [1 of 1]

15728640 of 15728640 100% in 0s 37.63 MB/s done

upload: '02-ceph用户管理-用户格式及权限说明.mp4' -> 's3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4' [part 5 of 5, 2MB] [1 of 1]

2660848 of 2660848 100% in 0s 25.07 MB/s done

[root@ceph141 ~]#

[root@ceph141 ~]#

[root@ceph141 ~]# echo 15728640*4+2660848 | bc # the upload above split the video into 5 parts, and the part sizes add up to the original file size.

65575408

[root@ceph141 ~]#

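Tip:

As shown above, a large RGW object is split into several parts that are uploaded independently, which is called "multipart" upload. Its advantage is that an interrupted transfer can be resumed. The 15 MB part size seen here is the s3cmd default chunk size.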
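The part sizes in the upload log follow directly from the file size and the chunk size. A minimal sketch of that arithmetic, assuming a 15 MB chunk and a hypothetical helper named `multipart_sizes`:

```python
def multipart_sizes(total_bytes: int, chunk_mb: int = 15) -> list:
    """Split total_bytes into full chunks plus one smaller trailing part."""
    chunk = chunk_mb * 1024 * 1024
    full, rest = divmod(total_bytes, chunk)
    return [chunk] * full + ([rest] if rest else [])


parts = multipart_sizes(65575408)  # size of the video uploaded above
print(len(parts), parts[0], parts[-1])  # prints: 5 15728640 2660848
```

Four full 15728640-byte parts plus a final 2660848-byte part match the five `upload:` lines in the log.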

7 Download data with s3cmd

[root@ceph141 ~]# s3cmd get s3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4 /tmp/

download: 's3://yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4' -> '/tmp/02-ceph用户管理-用户格式及权限说明.mp4' [1 of 1]

65575408 of 65575408 100% in 0s 114.53 MB/s done

[root@ceph141 ~]#

[root@ceph141 ~]# ll /tmp/02-ceph用户管理-用户格式及权限说明.mp4

-rw-r--r-- 1 root root 65575408 Feb 2 08:23 /tmp/02-ceph用户管理-用户格式及权限说明.mp4

[root@ceph141 ~]#

[root@ceph141 ~]# diff 02-ceph用户管理-用户格式及权限说明.mp4 /tmp/02-ceph用户管理-用户格式及权限说明.mp4

[root@ceph141 ~]#

[root@ceph141 ~]# md5sum 02-ceph用户管理-用户格式及权限说明.mp4 /tmp/02-ceph用户管理-用户格式及权限说明.mp4

bbcb4f121e74fb546298b501d93f275a 02-ceph用户管理-用户格式及权限说明.mp4

bbcb4f121e74fb546298b501d93f275a /tmp/02-ceph用户管理-用户格式及权限说明.mp4

[root@ceph141 ~]#
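The same integrity check can be scripted. The sketch below is one way to do it in Python, reading each file in blocks so a large video does not have to fit in memory. The helper names `md5_of` and `same_content` are illustrative, and the demo uses throwaway temporary files rather than the video from this walkthrough.

```python
import hashlib
import os
import tempfile


def md5_of(path: str, block_size: int = 1024 * 1024) -> str:
    """Return the hex MD5 digest of a file, read block by block."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def same_content(a: str, b: str) -> bool:
    """True when both files have the same MD5 digest."""
    return md5_of(a) == md5_of(b)


# Demo with temporary files standing in for the original and the download.
with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "original.bin")
    downloaded = os.path.join(tmp, "downloaded.bin")
    for path in (original, downloaded):
        with open(path, "wb") as fh:
            fh.write(b"example payload" * 1000)
    demo_match = same_content(original, downloaded)
    print(demo_match)
```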

8 Set an access policy

[root@ceph141 ~]# cat yinzhengjie-anonymous-access-policy.json

{

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": {"AWS": ["*"]},

"Action": "s3:GetObject",

"Resource": [

"arn:aws:s3:::yinzhengjie-bucket/*"

]

}]

}

[root@ceph141 ~]#

[root@ceph141 ~]# s3cmd setpolicy yinzhengjie-anonymous-access-policy.json s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/: Policy updated

[root@ceph141 ~]#
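When the same anonymous-read policy is needed for several buckets, the JSON file can be generated rather than edited by hand. A minimal sketch, assuming a hypothetical helper named `anonymous_read_policy`; the resulting structure mirrors the policy file shown above.

```python
import json


def anonymous_read_policy(bucket: str) -> dict:
    """Build a policy that lets anyone GET objects in the given bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": ["*"]},
            "Action": "s3:GetObject",
            "Resource": [f"arn:aws:s3:::{bucket}/*"],
        }],
    }


policy = anonymous_read_policy("yinzhengjie-bucket")
print(json.dumps(policy, indent=2))
```

The printed JSON can be saved to a file and passed to `s3cmd setpolicy` exactly as in the step above.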

[root@ceph141 ~]# s3cmd info s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/ (bucket):

Location: default

Payer: BucketOwner

Expiration Rule: none

Policy: {

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": {"AWS": ["*"]},

"Action": "s3:GetObject",

"Resource": [

"arn:aws:s3:::yinzhengjie-bucket/*"

]

}]

}

CORS: none

ACL: 尹正杰: FULL_CONTROL

[root@ceph141 ~]#

Reference for radosgw policy configuration:

https://docs.ceph.com/en/nautilus/radosgw/bucketpolicy/

9 Access the object storage over HTTP

http://www.yinzhengjie.com:7480/yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4

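Tips:

- 1. For the object storage gateway, "www.yinzhengjie.com" must resolve to any one of the nodes ceph141, ceph142, or ceph143;

- 2. In production, put a load balancer in front of the rgw nodes so that a failed backend rgw does not become a single point of failure;

- 3. When accessing object storage over HTTP, keep the following in mind:

- 3.1 How objects are accessed

Whether HTTP or HTTPS is used depends on the basic rgw configuration.

- 3.2 Access control for objects

Implemented with custom policies.

- 3.3 Cross-origin access to objects

Handled by defining CORS rules.

- 3.4 Browser-side caching of objects

Customized through the basic rgw configuration.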
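The object URL in step 9 contains non-ASCII characters, which browsers percent-encode automatically but scripts usually must encode themselves. The sketch below shows one way to build such a path-style URL in Python; `object_url` is a hypothetical helper, and the endpoint, bucket, and key are the ones used in this walkthrough.

```python
from urllib.parse import quote


def object_url(endpoint: str, bucket: str, key: str, https: bool = False) -> str:
    """Build a path-style object URL, percent-encoding bucket and key."""
    scheme = "https" if https else "http"
    return f"{scheme}://{endpoint}/{quote(bucket)}/{quote(key)}"


url = object_url(
    "www.yinzhengjie.com:7480",
    "yinzhengjie-bucket",
    "02-ceph用户管理-用户格式及权限说明.mp4",
)
print(url)
```

`quote` leaves `/` unescaped by default, so keys that contain slashes keep their pseudo-directory structure.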

10 Delete the policy

[root@ceph141 ~]# s3cmd info s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/ (bucket):

Location: default

Payer: BucketOwner

Expiration Rule: none

Policy: {

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": {"AWS": ["*"]},

"Action": "s3:GetObject",

"Resource": [

"arn:aws:s3:::yinzhengjie-bucket/*"

]

}]

}

CORS: none

ACL: 尹正杰: FULL_CONTROL

[root@ceph141 ~]#

[root@ceph141 ~]# s3cmd delpolicy s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/: Policy deleted

[root@ceph141 ~]#

[root@ceph141 ~]# s3cmd info s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/ (bucket):

Location: default

Payer: BucketOwner

Expiration Rule: none

Policy: none

CORS: none

ACL: 尹正杰: FULL_CONTROL

[root@ceph141 ~]#

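Something to think about:

可道云 (KodCloud) previously used Alibaba Cloud object storage. Could Ceph object storage replace it now? Try packaging that up as a project!

11 Other tips

s3cmd supports other ways of managing buckets and files as well; consult its help output as needed.

The hands-on details can be demonstrated in class.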

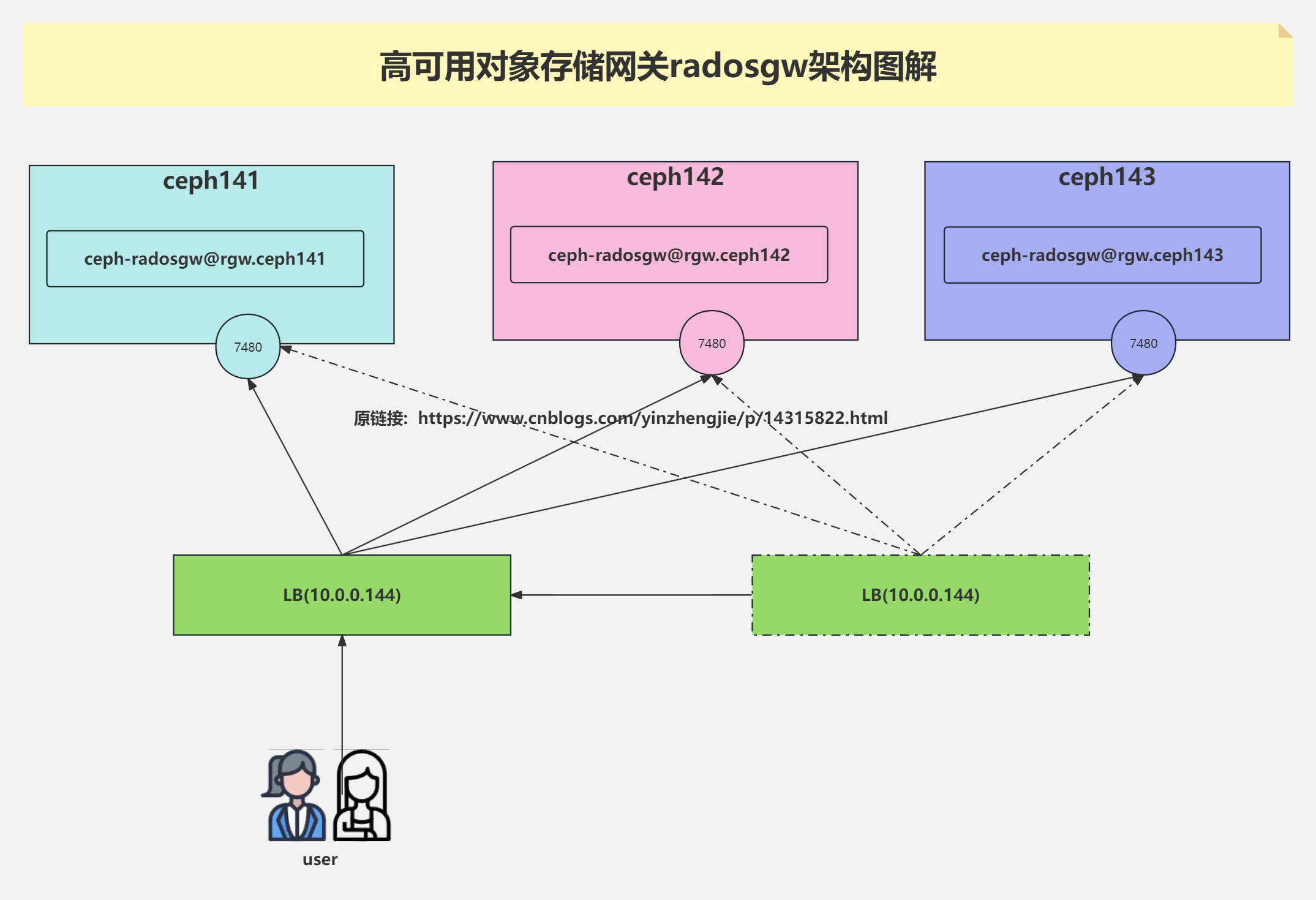

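IV. Hands-on: highly available radosgw object storage gateway architecture

1. Architecture diagram

The figure above shows the highly available object storage gateway.

2. Add the access policy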

[root@ceph141 ~]# cat yinzhengjie-anonymous-access-policy.json

{

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": {"AWS": ["*"]},

"Action": "s3:GetObject",

"Resource": [

"arn:aws:s3:::yinzhengjie-bucket/*"

]

}]

}

[root@ceph141 ~]#

[root@ceph141 ~]# s3cmd setpolicy yinzhengjie-anonymous-access-policy.json s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/: Policy updated

[root@ceph141 ~]#

[root@ceph141 ~]# s3cmd info s3://yinzhengjie-bucket

s3://yinzhengjie-bucket/ (bucket):

Location: default

Payer: BucketOwner

Expiration Rule: none

Policy: {

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": {"AWS": ["*"]},

"Action": "s3:GetObject",

"Resource": [

"arn:aws:s3:::yinzhengjie-bucket/*"

]

}]

}

CORS: none

ACL: 尹正杰: FULL_CONTROL

[root@ceph141 ~]#

3. Install the load balancer

[root@ceph144 ~]# yum -y install haproxy

4. Edit the configuration file

[root@ceph144 ~]# cp /etc/haproxy/haproxy.cfg{,.bak}

[root@ceph144 ~]#

[root@ceph144 ~]# ll /etc/haproxy/haproxy.cfg*

-rw-r--r-- 1 root root 3142 Nov 4 2020 /etc/haproxy/haproxy.cfg

-rw-r--r-- 1 root root 3142 Feb 2 16:51 /etc/haproxy/haproxy.cfg.bak

[root@ceph144 ~]#

[root@ceph144 ~]# cat /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-haproxy

bind *:33305

mode http

option httplog

monitor-uri /ayouok

frontend yinzhengjie-rgw

bind 0.0.0.0:7480

bind 127.0.0.1:7480

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend yinzhengjie-ceph-rgw

backend yinzhengjie-ceph-rgw

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server ceph141 10.0.0.141:7480 check

server ceph142 10.0.0.142:7480 check

server ceph143 10.0.0.143:7480 check

[root@ceph144 ~]#
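The backend above combines `balance roundrobin` with `check` on each server, so requests rotate across the rgw nodes and a node that fails its health check is skipped. The sketch below is a deliberately simplified Python simulation of that behaviour; it ignores weights, `slowstart`, and connection limits, and the `round_robin` function is illustrative rather than a description of HAProxy internals.

```python
from itertools import cycle


def round_robin(servers: list, healthy: set, count: int) -> list:
    """Return `count` picks, rotating over servers and skipping unhealthy ones."""
    if not any(server in healthy for server in servers):
        raise RuntimeError("no healthy backend")
    picks = []
    ring = cycle(servers)
    while len(picks) < count:
        server = next(ring)
        if server in healthy:
            picks.append(server)
    return picks


servers = ["ceph141", "ceph142", "ceph143"]
all_up = round_robin(servers, {"ceph141", "ceph142", "ceph143"}, 4)
one_down = round_robin(servers, {"ceph141", "ceph143"}, 4)
print(all_up)    # prints: ['ceph141', 'ceph142', 'ceph143', 'ceph141']
print(one_down)  # prints: ['ceph141', 'ceph143', 'ceph141', 'ceph143']
```

With ceph142 marked down, traffic keeps flowing to the two remaining nodes, which is the property the load balancer adds over pointing the hostname at a single rgw.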

5. Start haproxy

[root@ceph144 ~]# systemctl enable --now haproxy

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

[root@ceph144 ~]#

[root@ceph144 ~]# ss -ntl | grep 7480

LISTEN 0 128 127.0.0.1:7480 *:*

LISTEN 0 128 *:7480 *:*

[root@ceph144 ~]#

6. Update the name resolution entry on Windows

10.0.0.144 www.yinzhengjie.com

7. Test access from Windows

http://www.yinzhengjie.com:7480/yinzhengjie-bucket/02-ceph用户管理-用户格式及权限说明.mp4

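This article comes from cnblogs (博客园), author: 尹正杰. When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/14315822.html (personal WeChat: "JasonYin2020"; when adding, please state where you found it and your purpose; paid consulting).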

