Filebeat + Kafka + Logstash: Collecting Logs from Multiple Paths

I. Prepare the log environment

1. Configure nginx to write its access log in JSON format

vim /usr/local/nginx/conf/nginx.conf

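```nginx
# Place these directives in the http {} block.
# escape=json (nginx 1.11.8+) escapes quotes and other special characters,
# so every log line stays valid JSON.
log_format json escape=json
    '{"@timestamp":"$time_iso8601",'
    '"@version":"1",'
    '"client":"$remote_addr",'
    '"url":"$uri",'
    '"status":"$status",'
    '"domain":"$host",'
    '"host":"$server_addr",'
    '"size":"$body_bytes_sent",'
    '"responsetime":"$request_time",'
    '"referer":"$http_referer",'
    '"ua":"$http_user_agent"'
    '}';    # custom log output format

access_log logs/access.log json;    # write the access log using the format above
```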
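Each line written with this format is meant to be one standalone JSON object, so a quick sanity check is to parse a line. The Python sketch below does that under stated assumptions: the sample line is made up and uses only a subset of the fields, and `parse_access_line` is a hypothetical helper. It also shows why a double quote inside a value such as `$http_user_agent` breaks the line, which is what the `escape=json` parameter of nginx's `log_format` (nginx 1.11.8 and later) is meant to prevent.

```python
import json


def parse_access_line(line: str) -> dict:
    """Parse one JSON access-log line and convert the numeric fields.

    The log_format writes every value as a quoted string, so status, size
    and responsetime are converted to numbers here.
    """
    record = json.loads(line)
    record["status"] = int(record["status"])
    record["size"] = int(record["size"])
    record["responsetime"] = float(record["responsetime"])
    return record


# Made-up sample line using a subset of the fields defined by the log_format
sample = (
    '{"@timestamp":"2024-01-01T12:00:00+08:00","@version":"1",'
    '"client":"192.168.137.1","url":"/index.html","status":"200",'
    '"host":"192.168.137.125","size":"612","responsetime":"0.002",'
    '"ua":"curl/7.61.1"}'
)
rec = parse_access_line(sample)
print(rec["status"], rec["size"], rec["responsetime"])  # 200 612 0.002

# Without JSON escaping, a double quote inside the user agent breaks the line
broken = sample.replace("curl/7.61.1", 'bad"agent')
try:
    json.loads(broken)
except json.JSONDecodeError as exc:
    print("invalid JSON line:", exc.msg)
```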

2. Other example log paths

/usr/local/nginx/logs/{access.log,error.log}

/home/tomcat/logs/cata...out

/var/log/messages
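The brace notation above expands to two nginx files. The Filebeat input in the next part collects them with the glob `/usr/local/nginx/logs/*.log`, which does not reach the Tomcat or system log, so each of those needs its own input. Below is a rough check using Python's `pathlib` matching, which only approximates how Filebeat expands globs; the rotated file name `access.log.1` is a hypothetical example.

```python
from pathlib import PurePosixPath

# Glob used by the nginx input in the Filebeat configuration
NGINX_GLOB = "/usr/local/nginx/logs/*.log"

candidates = [
    "/usr/local/nginx/logs/access.log",
    "/usr/local/nginx/logs/error.log",
    "/usr/local/nginx/logs/access.log.1",  # hypothetical rotated file name
    "/home/tomcat/logs/catalina.out",
    "/var/log/messages",
]


def matches(path: str, pattern: str) -> bool:
    """Component-wise glob match: with an absolute pattern the whole path must
    match, and '*' never crosses a '/' separator."""
    return PurePosixPath(path).match(pattern)


for p in candidates:
    print(f"{p}: {matches(p, NGINX_GLOB)}")
```

Only `access.log` and `error.log` match; the rotated name no longer ends in `.log`, and the Tomcat and system logs live elsewhere.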

II. Modify the Filebeat configuration file

vim /etc/filebeat/filebeat.yml

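```yaml
filebeat.inputs:
# Nginx access and error logs
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/*.log
  fields:
    log_topics: nginx
    type: nginx
    ip: 192.168.137.125
  fields_under_root: true

# Tomcat log
- type: log
  enabled: true
  paths:
    - /home/tomcat/logs/catalina.out
  fields:
    log_topics: tomcat
    type: tomcat
    ip: 192.168.137.125
  fields_under_root: true

# System log
- type: log
  enabled: true
  paths:
    - /var/log/messages
  fields:
    log_topics: message
    type: message
    ip: 192.168.137.125
  fields_under_root: true

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

setup.kibana:

output.kafka:
  hosts: ["192.168.137.25:9092","192.168.137.125:9092","192.168.137.126:9092"]
  enabled: true
  # With fields_under_root: true, log_topics sits at the top level of the event,
  # so it is referenced as [log_topics] rather than [fields][log_topics]
  topic: '%{[log_topics]}'

processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
```

Note: for other advanced Filebeat settings, see https://blog.csdn.net/a464057216/article/details/51233375

The key options used above:

```yaml
# fields: adds extra information to every event, e.g. "level: debug", to make
# later grouping and statistics easier. By default the new fields are placed
# under a "fields" sub-object of the output, e.g. fields.level.
fields:
  log_topics: nginx
  type: nginx
  ip: 192.168.137.125   # distinguishes events coming from different hosts

# fields_under_root: if true, the custom fields become top-level fields instead
# of being nested under "fields". Custom fields overwrite any field of the same
# name that Filebeat adds itself.
fields_under_root: true

...

...

# topic: sends each event to the Kafka topic named by the custom log_topics value
# above (the broker creates the topic on first use if auto.create.topics.enable is on)
topic: '%{[log_topics]}'
```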
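To make the `fields_under_root` behaviour concrete, here is a minimal Python model of where the custom fields end up on an event and how a `%{[...]}` topic reference is looked up. It is a simplified imitation written for illustration, not Filebeat's code: `build_event` and `resolve_topic` are hypothetical helpers, and real Filebeat events carry many more fields. It shows that with `fields_under_root: true` the value lives at `[log_topics]`, and a `[fields][log_topics]` lookup finds nothing.

```python
import re


def build_event(message: str, fields: dict, fields_under_root: bool) -> dict:
    """Simplified model of how an input's custom fields are attached to an event."""
    event = {"message": message}
    if fields_under_root:
        event.update(fields)             # custom fields become top-level keys
    else:
        event["fields"] = dict(fields)   # custom fields nested under "fields"
    return event


def resolve_topic(fmt: str, event: dict) -> str:
    """Replace each %{[a][b]...} reference with the event value at that path.

    Raises KeyError when the path does not exist in the event.
    """
    def lookup(match: re.Match) -> str:
        value = event
        for key in re.findall(r"\[([^\]]+)\]", match.group(1)):
            value = value[key]
        return str(value)

    return re.sub(r"%\{((?:\[[^\]]+\])+)\}", lookup, fmt)


fields = {"log_topics": "nginx", "type": "nginx", "ip": "192.168.137.125"}
flat = build_event("GET /", fields, fields_under_root=True)
nested = build_event("GET /", fields, fields_under_root=False)

print(resolve_topic("%{[log_topics]}", flat))            # nginx
print(resolve_topic("%{[fields][log_topics]}", nested))  # nginx
try:
    resolve_topic("%{[fields][log_topics]}", flat)
except KeyError:
    print("no [fields][log_topics] when fields_under_root is true")
```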

III. Configure Logstash and Kibana visualization

1. Configure Logstash

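vim /etc/logstash/conf.d/test.conf

(The file uses Logstash pipeline syntax, not YAML, and the packaged pipelines.yml loads /etc/logstash/conf.d/*.conf, so the file needs the .conf extension.)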

input {

kafka {

bootstrap_servers => ["192.168.137.125:9092,192.168.137.25:9092,192.168.137.126:9092"]

topics => ["tomcat","nginx","message"] #filebeat里创建的topic

consumer_threads => 1

codec => json

}

}

output {

elasticsearch {

hosts => ["192.168.137.25:9200"]

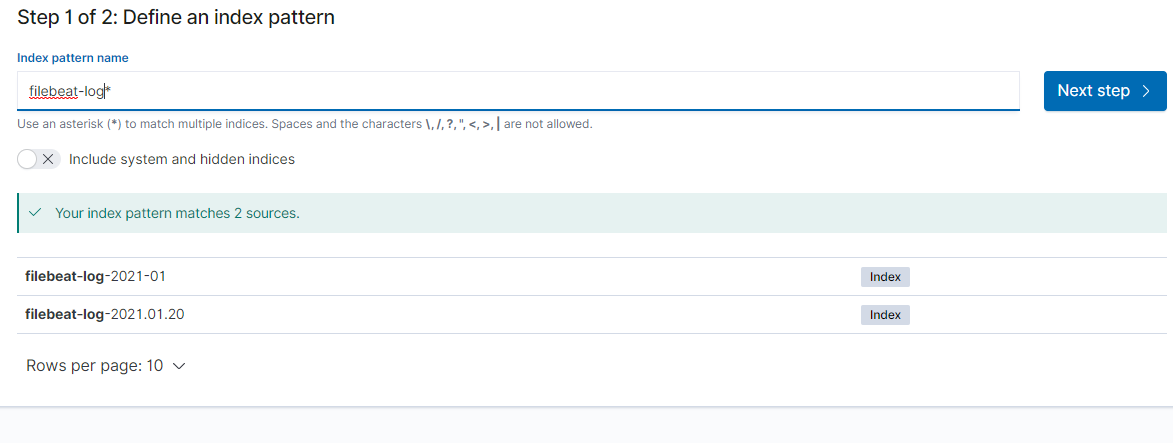

index => "filebeat-log-%{+YYYY.MM.dd}"

}

}

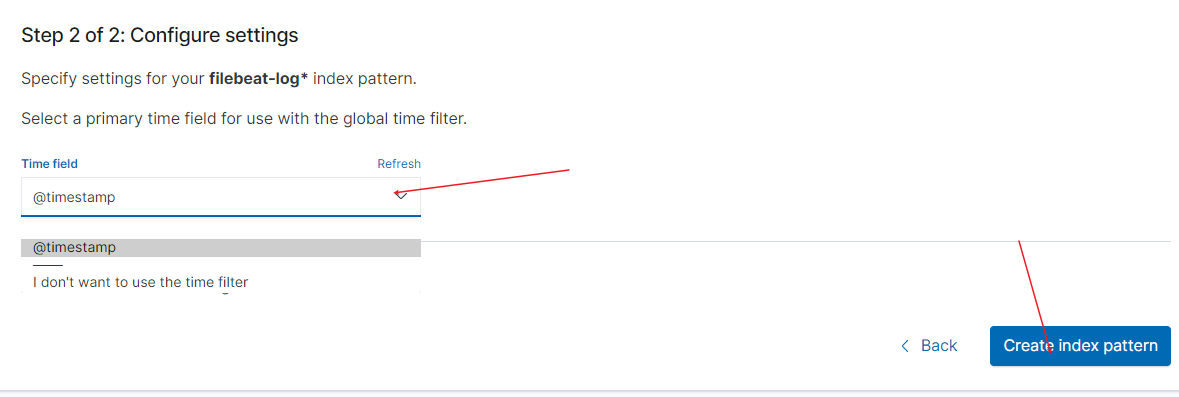

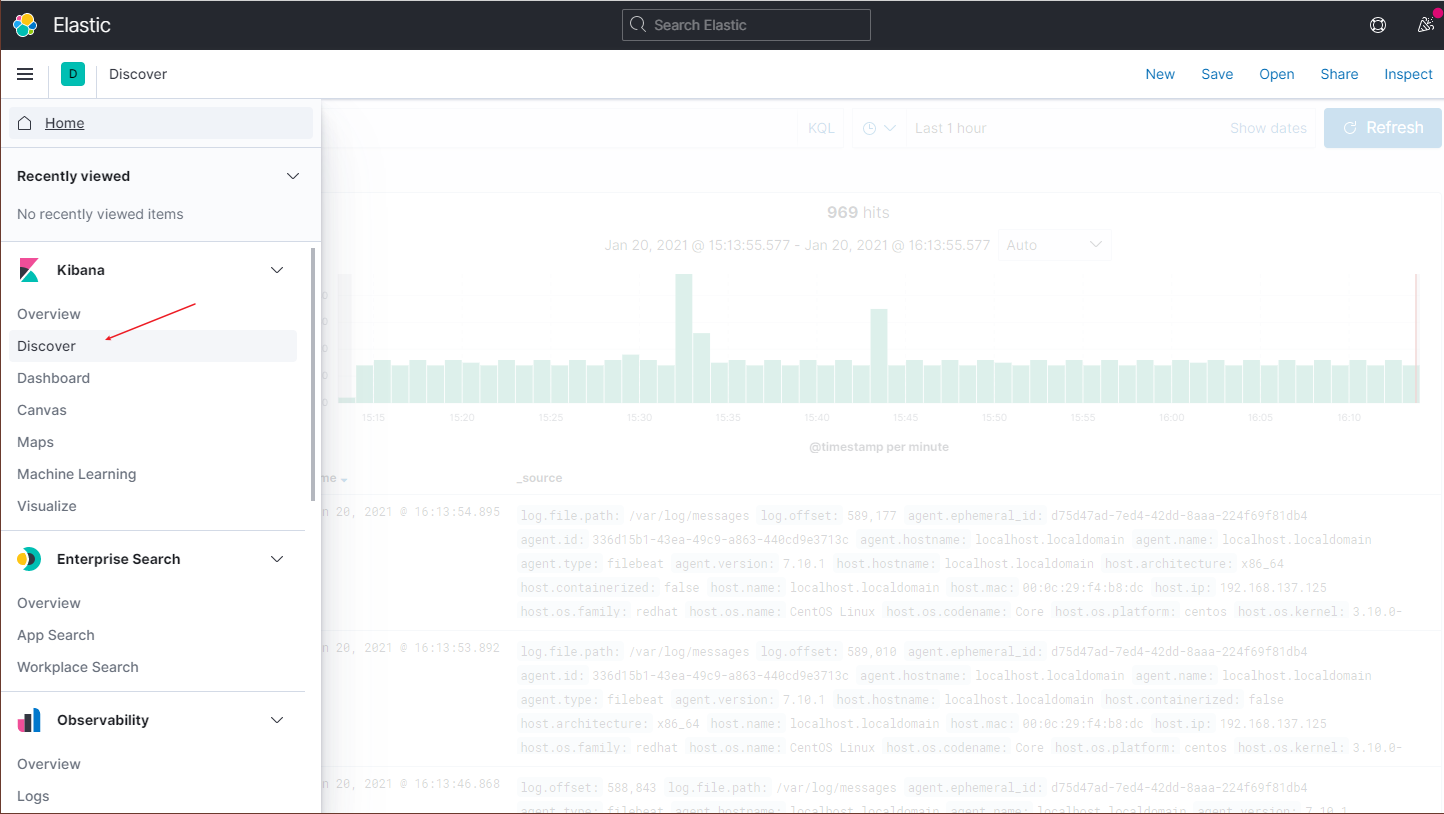

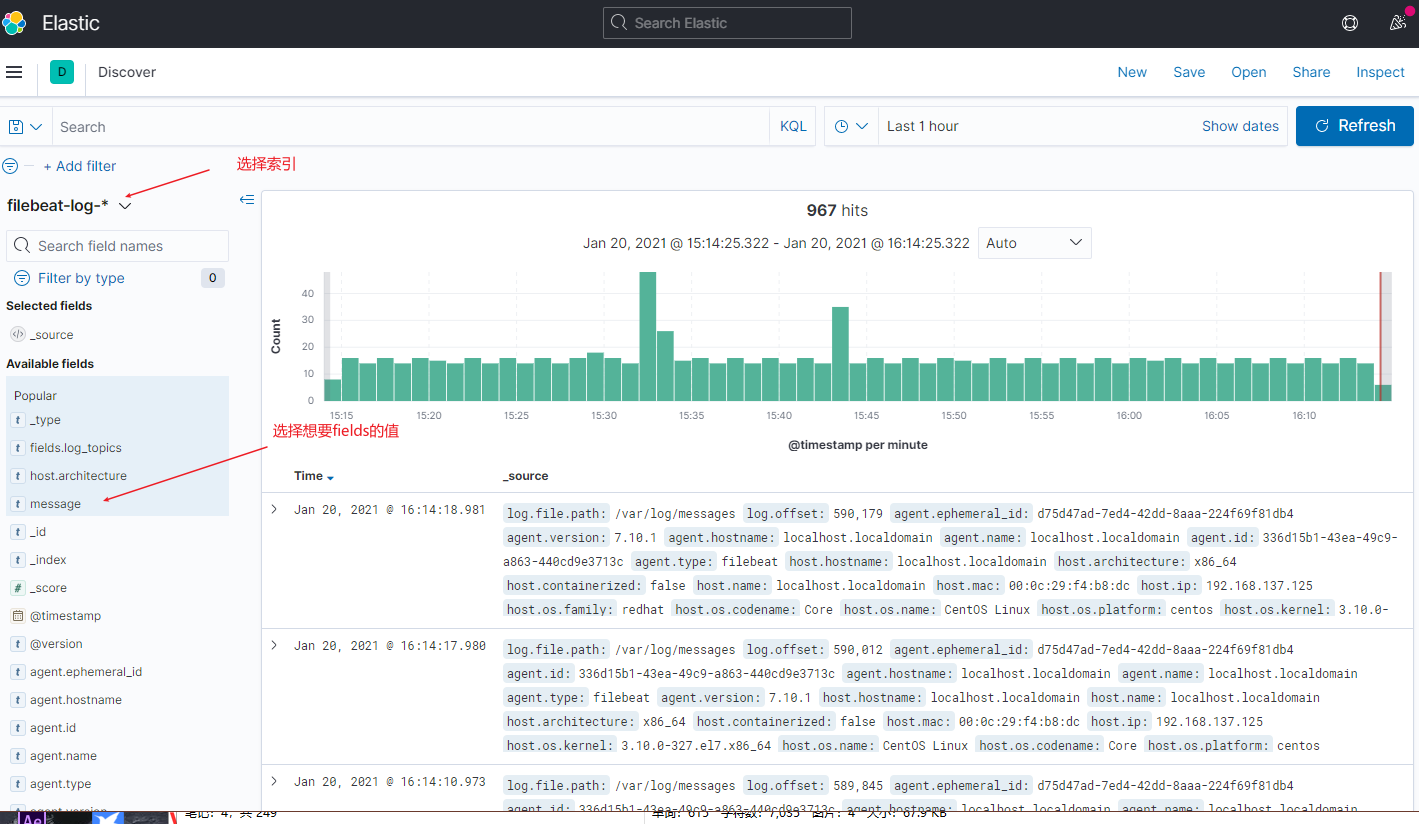
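To illustrate what this pipeline does with each Kafka message, here is a minimal Python sketch, assuming Filebeat's Kafka output uses its default JSON codec (which is what `codec => json` decodes) and a made-up message value; `index_for` is a hypothetical helper, not part of Logstash. The date in `%{+YYYY.MM.dd}` is taken from the event's `@timestamp` in UTC, so on a UTC+8 host an event read at 07:30 local time on 2024-01-02 has an `@timestamp` of 2024-01-01T23:30Z and lands in the previous day's index.

```python
import json
from datetime import datetime, timezone


def index_for(raw_value: bytes) -> str:
    """Decode a Kafka message value as JSON and build the daily index name.

    Mirrors index => "filebeat-log-%{+YYYY.MM.dd}", where the date comes from
    the event's @timestamp, which is in UTC.
    """
    event = json.loads(raw_value.decode("utf-8"))
    ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    return "filebeat-log-" + ts.strftime("%Y.%m.%d")


# Made-up Kafka message value with the custom fields at the top level
value = json.dumps({
    "@timestamp": "2024-01-01T23:30:00.000Z",
    "message": "sample line",
    "log_topics": "nginx",
    "type": "nginx",
    "ip": "192.168.137.125",
}).encode("utf-8")

print(index_for(value))  # filebeat-log-2024.01.01
```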

2. Configure Kibana visualization

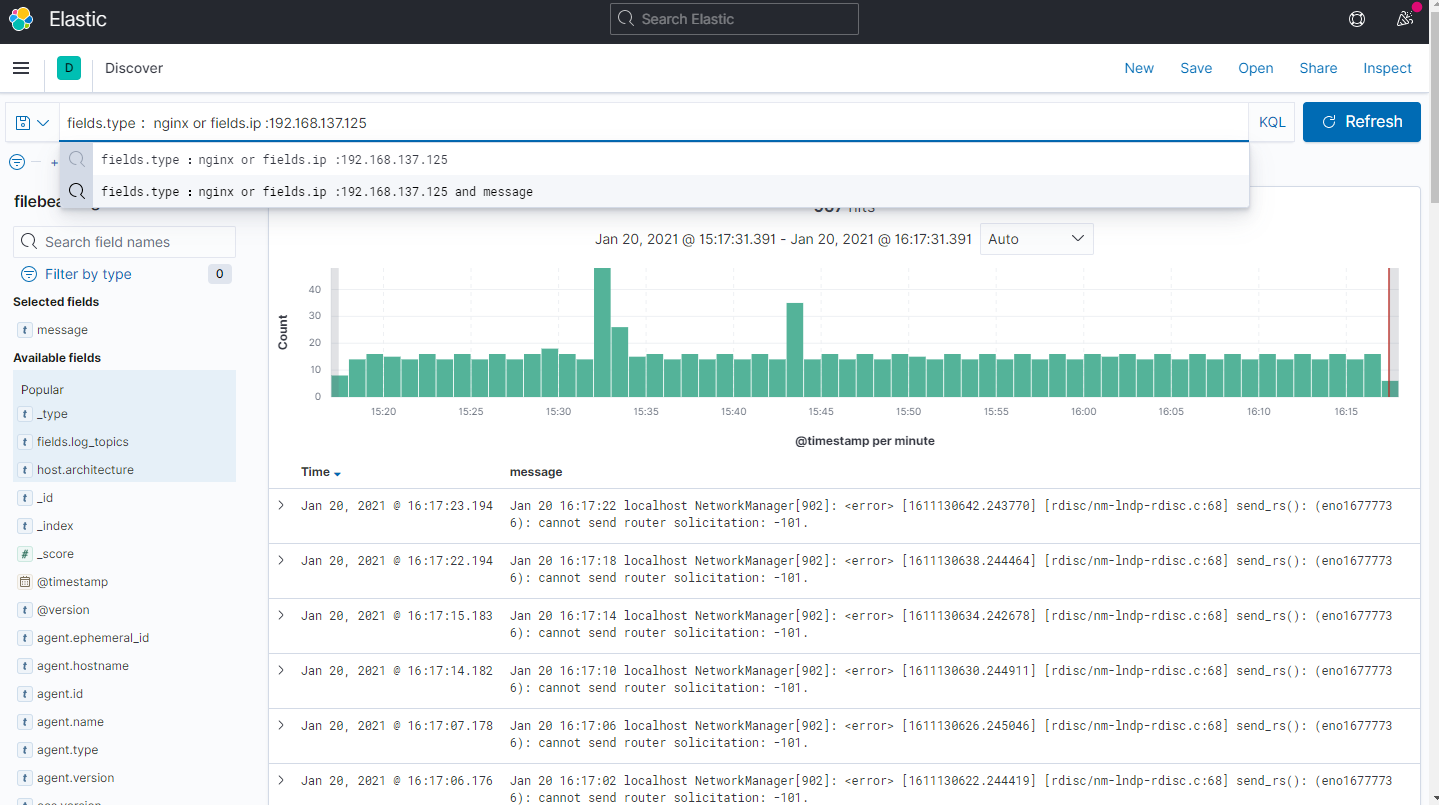

3. Search logs by field
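Searching by the custom fields (for example `type` and `ip`) comes down to exact-match filters on those fields. As a sketch, assuming the `filebeat-log-*` indices use Elasticsearch's default dynamic mapping (string fields get a `.keyword` sub-field), this Python snippet builds an equivalent Elasticsearch query body that could be sent to `GET filebeat-log-*/_search`; `field_query` is a hypothetical helper.

```python
import json


def field_query(**terms: str) -> dict:
    """Build a bool/filter query with one exact-match term clause per field.

    Assumes default dynamic mapping, where each string field has a .keyword
    sub-field suitable for exact matching.
    """
    return {
        "query": {
            "bool": {
                "filter": [
                    {"term": {f"{name}.keyword": value}}
                    for name, value in terms.items()
                ]
            }
        }
    }


body = field_query(type="nginx", ip="192.168.137.125")
print(json.dumps(body, indent=2))
```

Filter clauses are used instead of scored queries because an exact field match needs no relevance ranking.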

浙公网安备 33010602011771号
