Bug-fix notes for Proxy-Anchor-CVPR2020

Repository:

https://github.com/tjddus9597/Proxy-Anchor-CVPR2020

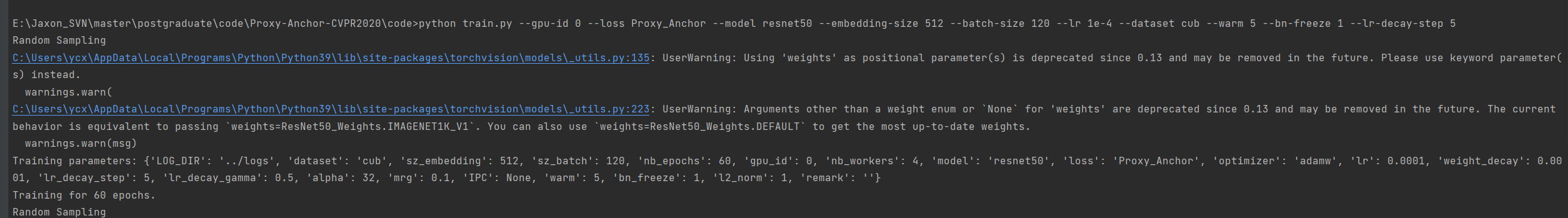

1. Bug 1

Command:

python train.py --gpu-id 0 --loss Proxy_Anchor --model resnet50 --embedding-size 512 --batch-size 120 --lr 1e-4 --dataset cub --warm 5 --bn-freeze 1 --lr-decay-step 5

UserWarning: Arguments other than a weight enum or None for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing weights=ResNet50_Weights.IMAGENET1K_V1. You can also use weights=ResNet50_Weights.DEFAULT to get the most up-to-date weights.

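Solution:

This is only a deprecation warning from torchvision 0.13 and later, so training still runs. It goes away once the model is built with the weights argument named in the message (weights=ResNet50_Weights.IMAGENET1K_V1) instead of a boolean. With that, this issue is resolved.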

2. Bug 2

Command:

python train.py --gpu-id 0 --loss Proxy_Anchor --model resnet50 --embedding-size 512 --batch-size 120 --lr 1e-4 --dataset cub --warm 5 --bn-freeze 1 --lr-decay-step 5

    RuntimeError:
            An attempt has been made to start a new process before the
            current process has finished its bootstrapping phase.

            This probably means that you are not using fork to start your
            child processes and you have forgotten to use the proper idiom
            in the main module:

                if __name__ == '__main__':
                    freeze_support()
                    ...

            The "freeze_support()" line can be omitted if the program
            is not going to be frozen to produce an executable.

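Solution: this error is specific to Windows, where child processes are started with spawn rather than fork. Wrap the body of train.py in a function and call it only from under an if __name__ == '__main__': guard.

Once the script is wrapped, changing the parameters becomes less convenient, which is a problem of its own.

The entry point can be rewritten like this: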

    if __name__ == '__main__':
        # Relies on the imports already at the top of train.py
        # (random, numpy as np, torch, argparse).
        seed = 1
        random.seed(seed)
        np.random.seed(seed)
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # set random seed for all gpus

        parser = argparse.ArgumentParser(description=
            'Official implementation of `Proxy Anchor Loss for Deep Metric Learning`. '
            + 'Our code is modified from `https://github.com/dichotomies/proxy-nca`'
        )
        # export directory, training and val datasets, test datasets
        parser.add_argument('--LOG_DIR',
            default = '../logs',
            help = 'Path to log folder'
        )
        parser.add_argument('--dataset',
            default = 'cub',
            help = 'Training dataset, e.g. cub, cars, SOP, Inshop'
        )
        parser.add_argument('--embedding-size', default = 512, type = int,
            dest = 'sz_embedding',
            help = 'Size of embedding that is appended to backbone model.'
        )
        parser.add_argument('--batch-size', default = 150, type = int,
            dest = 'sz_batch',
            help = 'Number of samples per batch.'
        )
        parser.add_argument('--epochs', default = 60, type = int,
            dest = 'nb_epochs',
            help = 'Number of training epochs.'
        )
        parser.add_argument('--gpu-id', default = 0, type = int,
            help = 'ID of GPU that is used for training.'
        )
        parser.add_argument('--workers', default = 4, type = int,
            dest = 'nb_workers',
            help = 'Number of workers for dataloader.'
        )
        parser.add_argument('--model', default = 'resnet50',
            help = 'Model for training'
        )
        parser.add_argument('--loss', default = 'Proxy_Anchor',
            help = 'Criterion for training'
        )
        parser.add_argument('--optimizer', default = 'adamw',
            help = 'Optimizer setting'
        )
        parser.add_argument('--lr', default = 1e-4, type = float,
            help = 'Learning rate setting'
        )
        parser.add_argument('--weight-decay', default = 1e-4, type = float,
            help = 'Weight decay setting'
        )
        parser.add_argument('--lr-decay-step', default = 10, type = int,
            help = 'Learning decay step setting'
        )
        parser.add_argument('--lr-decay-gamma', default = 0.5, type = float,
            help = 'Learning decay gamma setting'
        )
        parser.add_argument('--alpha', default = 32, type = float,
            help = 'Scaling Parameter setting'
        )
        parser.add_argument('--mrg', default = 0.1, type = float,
            help = 'Margin parameter setting'
        )
        parser.add_argument('--IPC', type = int,
            help = 'Balanced sampling, images per class'
        )
        parser.add_argument('--warm', default = 1, type = int,
            help = 'Warmup training epochs'
        )
        parser.add_argument('--bn-freeze', default = 1, type = int,
            help = 'Batch normalization parameter freeze'
        )
        parser.add_argument('--l2-norm', default = 1, type = int,
            help = 'L2 normalization'
        )
        parser.add_argument('--remark', default = '',
            help = 'Any remark'
        )

        args = parser.parse_args()
        # train() is the function that now wraps the original body of train.py
        train(setting_args=args)

With this change, Bug 2 is resolved as well.
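One way to keep the parameters easy to change after wrapping is to let the entry point accept an explicit argument list, so the same function works from the command line and from other code. A minimal sketch with only a few of the options; `build_parser`, `main`, and the stub body of `train` are hypothetical stand-ins, not the repository's code:

```python
import argparse


def build_parser():
    """Subset of the train.py options, enough to show the pattern."""
    parser = argparse.ArgumentParser(description='Proxy Anchor training (sketch)')
    parser.add_argument('--dataset', default='cub')
    parser.add_argument('--batch-size', default=150, type=int, dest='sz_batch')
    parser.add_argument('--lr', default=1e-4, type=float)
    parser.add_argument('--workers', default=4, type=int, dest='nb_workers')
    return parser


def train(setting_args):
    """Stand-in for the wrapped training loop; returns the settings it would use."""
    return {
        'dataset': setting_args.dataset,
        'sz_batch': setting_args.sz_batch,
        'lr': setting_args.lr,
        'nb_workers': setting_args.nb_workers,
    }


def main(argv=None):
    # argv=None makes argparse read sys.argv; passing a list overrides it,
    # so parameters can be changed without touching the command line.
    args = build_parser().parse_args(argv)
    return train(setting_args=args)


if __name__ == '__main__':
    # The guard keeps child processes started with spawn (the Windows default)
    # from re-running the training code when they re-import this module.
    print(main(['--batch-size', '120', '--lr', '1e-4']))
```

With this layout, a call such as `main(['--dataset', 'cars'])` from a notebook or another script behaves the same as passing `--dataset cars` on the command line.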

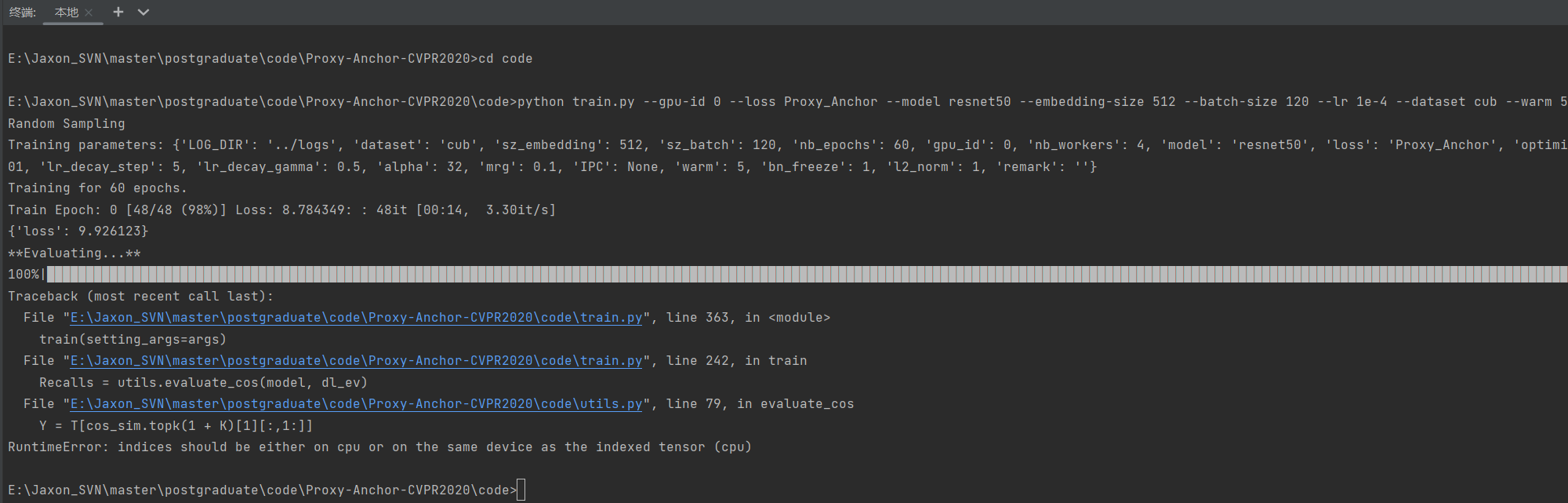

3. Bug 3

Command:

python train.py --gpu-id 0 --loss Proxy_Anchor --model resnet50 --embedding-size 512 --batch-size 120 --lr 1e-4 --dataset cub --warm 5 --bn-freeze 1 --lr-decay-step 5

Error:

    Traceback (most recent call last):
      File "E:\Jaxon_SVN\master\postgraduate\code\Proxy-Anchor-CVPR2020\code\train.py", line 363, in <module>
        train(setting_args=args)
      File "E:\Jaxon_SVN\master\postgraduate\code\Proxy-Anchor-CVPR2020\code\train.py", line 242, in train
        Recalls = utils.evaluate_cos(model, dl_ev)
      File "E:\Jaxon_SVN\master\postgraduate\code\Proxy-Anchor-CVPR2020\code\utils.py", line 79, in evaluate_cos
        Y = T[cos_sim.topk(1 + K)[1][:,1:]]
    RuntimeError: indices should be either on cpu or on the same device as the indexed tensor (cpu)

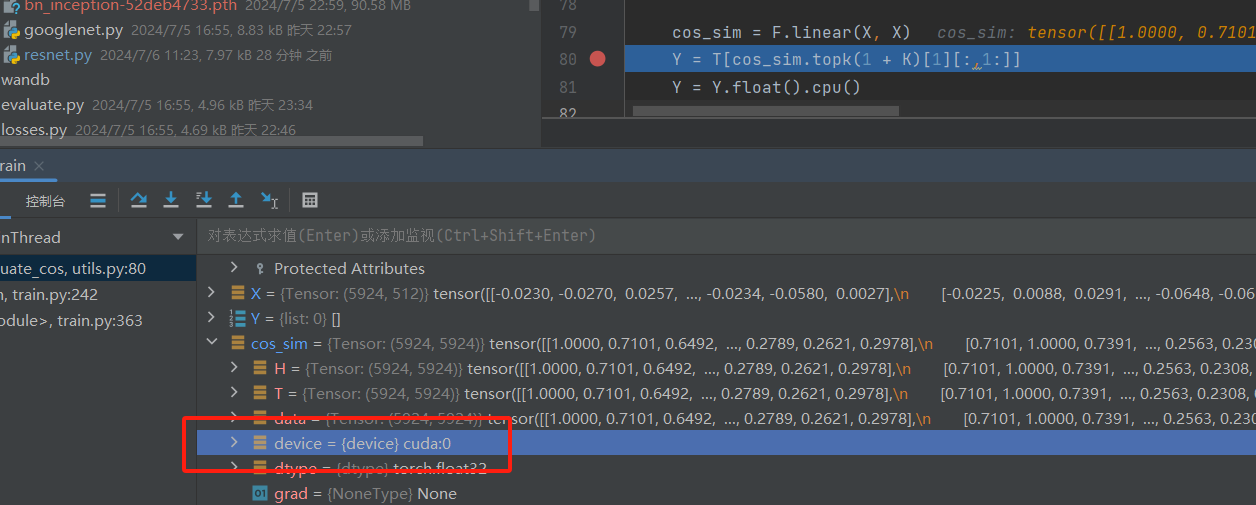

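Cause:

As the screenshot shows, T is on the CPU while cos_sim is on cuda:0, so the indices produced by cos_sim.topk() cannot be used to index T.

Solution:

Move cos_sim to the CPU in utils.py:

    # cos_sim = F.linear(X, X)
    cos_sim = F.linear(X, X).cpu()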

Training now runs normally.
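An alternative to moving the whole similarity matrix is to move only the top-k indices to the device that holds T. A minimal sketch of that variant; `nearest_labels` is a hypothetical helper written for illustration, not the repository's `evaluate_cos`, and the toy tensors are made up:

```python
import torch
import torch.nn.functional as F


def nearest_labels(X, T, K):
    """Return the labels of the K nearest neighbours of each row of X.

    X: (N, D) embeddings, possibly on a GPU.
    T: (N,) labels, possibly on the CPU.
    """
    X = F.normalize(X, p=2, dim=1)
    cos_sim = F.linear(X, X)  # (N, N) cosine similarities, same device as X
    # The top-(K+1) result normally starts with the sample itself, so drop column 0.
    idx = cos_sim.topk(1 + K)[1][:, 1:]
    # Indexing needs the indices on the CPU or on T's device.
    return T[idx.to(T.device)]


if __name__ == '__main__':
    X = torch.tensor([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
    T = torch.tensor([0, 0, 1, 1])
    print(nearest_labels(X, T, K=1))
```

Moving only the index tensor transfers N x K integers instead of the full N x N matrix, which may matter for large evaluation sets; either variant removes the device mismatch.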

4. Bug 4

Command:

python train.py --gpu-id 0 --loss Proxy_Anchor --model bn_inception --embedding-size 512 --batch-size 180 --lr 1e-4 --dataset cub --warm 1 --bn-freeze 1 --lr-decay-step 10

Error:

    self._sslobj.do_handshake()
    ssl.SSLCertVerificationError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: certificate has expired (_ssl.c:1129)

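Solution:

    # Work around the SSL verification failure
    # (this disables certificate verification for HTTPS requests in this process)
    import ssl
    ssl._create_default_https_context = ssl._create_unverified_context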
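The assignment above stays in effect for every HTTPS request made afterwards in the process. If that is broader than wanted, the override could be limited to the download step. A minimal sketch using only the standard library; `unverified_https` is a hypothetical helper name, and it relies on the same private `ssl` attributes as the workaround above:

```python
import ssl
from contextlib import contextmanager


@contextmanager
def unverified_https():
    """Temporarily disable HTTPS certificate verification, then restore it."""
    original = ssl._create_default_https_context
    ssl._create_default_https_context = ssl._create_unverified_context
    try:
        yield
    finally:
        # Restore the previous factory even if the download raises.
        ssl._create_default_https_context = original


if __name__ == '__main__':
    with unverified_https():
        # The code that downloads the pretrained weights would go here.
        print(ssl._create_default_https_context is ssl._create_unverified_context)
    print(ssl._create_default_https_context is ssl._create_unverified_context)
```

Only the call that fetches the pretrained weights needs to sit inside the `with` block; everything after it uses normal certificate checks again.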

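This article is from cnblogs (博客园), author: JaxonYe. Please cite the original link when reposting: https://www.cnblogs.com/yechangxin/articles/18287032

All rights reserved; infringement will be pursued.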