A first look at analyzing comments with sentence transformers on macOS

Updated 2021-01-07

When pip3 downloads are slow, the Alibaba Cloud mirror speeds them up (fast even without a VPN!).

For example, to install opencv:

pip3 install -i https://mirrors.aliyun.com/pypi/simple/ opencv-python

References:

Chinese translation: https://mp.weixin.qq.com/s/gs0MI9xSM-NCPnMGjDGlWA

Part of the original author's code: https://gist.github.com/int8/6684f968b252314cc8b5b87296ea2367

sentence transformers source repository: https://github.com/UKPLab/sentence-transformers

sentence transformers clustering documentation: https://www.sbert.net/examples/applications/clustering/README.html

sentence transformers clustering example code: https://github.com/UKPLab/sentence-transformers/blob/master/examples/applications/clustering/kmeans.py

sentence transformers embedding example code: https://github.com/UKPLab/sentence-transformers/blob/master/examples/applications/computing-embeddings/computing_embeddings.py

Topic Modeling with BERT

Leveraging BERT and TF-IDF to create easily interpretable topics. https://towardsdatascience.com/topic-modeling-with-bert-779f7db187e6

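Goal: use sentence transformers to turn comments into vectors, reduce the dimensionality with UMAP, cluster with k-means, segment the text into words, count word frequencies, and generate a word cloud.

Cleaning the comment data: remove emoji, then convert Traditional Chinese to Simplified Chinese with snownlp (37 MB) https://github.com/isnowfy/snownlp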

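    # Convert Traditional Chinese to Simplified Chinese.
    # snownlp is a fairly old library that expects Unicode strings; a Python 3 str is already Unicode.
    from snownlp import SnowNLP

    s = SnowNLP(sentence)
    all_sentences.append(s.han)  # s.han is the Simplified Chinese text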
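The emoji-removal step is not shown in these notes. A minimal sketch, assuming a regular expression over the common emoji code point ranges is good enough for comment text. The `remove_emoji` name and the exact ranges are my own choices for illustration, not part of snownlp or any other library:

```python
import re

# Assumed ranges: a pragmatic selection, not an exhaustive definition of "emoji".
EMOJI_PATTERN = re.compile(
    "["
    "\U0001F300-\U0001FAFF"  # pictographs, emoticons, transport, extended symbols
    "\U0001F1E6-\U0001F1FF"  # regional indicator letters used for flags
    "\u2600-\u27BF"          # miscellaneous symbols and dingbats
    "\uFE0F"                 # variation selector that follows some emoji
    "\u200D"                 # zero-width joiner used inside emoji sequences
    "]+"
)


def remove_emoji(text):
    """Return text with characters in the assumed emoji ranges stripped out."""
    return EMOJI_PATTERN.sub("", text)


if __name__ == "__main__":
    print(remove_emoji("味道不错\U0001F600\U0001F44D"))
```

Chinese characters and full-width punctuation fall outside these ranges, so they pass through unchanged. The cleaned string can then be handed to `SnowNLP` for the Traditional-to-Simplified conversion.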

Obstacles while setting up the environment:

pytorch is over 100 MB. Installing it with pip is slow, and an interrupted download starts over from the beginning. Reference: https://www.pythonf.cn/read/132147

Instead, download the matching version from the official site with a browser: https://download.pytorch.org/whl/torch_stable.html

After downloading, because I was using a virtual env, I installed the whl file from the Python Console in PyCharm. The numpy dependency (15 MB) downloaded at a barely tolerable speed.

    >>> import pip
    >>> from pip._internal.cli.main import main as pipmain
    >>> pipmain(['install', '/Users/xxx/Downloads/torch-1.7.1-cp38-none-macosx_10_9_x86_64.whl'])

    Processing /Users/xxx/Downloads/torch-1.7.1-cp38-none-macosx_10_9_x86_64.whl
    Collecting numpy
      Downloading numpy-1.19.4-cp38-cp38-macosx_10_9_x86_64.whl (15.3 MB)
    Collecting typing-extensions
      Downloading typing_extensions-3.7.4.3-py3-none-any.whl (22 kB)
    Installing collected packages: typing-extensions, numpy, torch
    Successfully installed numpy-1.19.4 torch-1.7.1 typing-extensions-3.7.4.3
    0
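A note on the console session: `pip._internal` is not a supported API and can change between pip releases, and pip's documentation recommends running pip as a subprocess instead. A sketch of the same install done that way, with hypothetical function names and the same placeholder wheel path:

```python
import subprocess
import sys


def build_pip_install_command(wheel_path):
    """Build the command that installs a local wheel with the current interpreter's pip."""
    # sys.executable points at the interpreter of the active virtual env,
    # so the package lands in that env rather than in the system Python.
    return [sys.executable, "-m", "pip", "install", wheel_path]


def install_wheel(wheel_path):
    """Run pip in a child process; raises CalledProcessError if pip fails."""
    subprocess.check_call(build_pip_install_command(wheel_path))


if __name__ == "__main__":
    # Placeholder path; replace it with the location of the downloaded file.
    print(build_pip_install_command(
        "/Users/xxx/Downloads/torch-1.7.1-cp38-none-macosx_10_9_x86_64.whl"
    ))
```

Calling `install_wheel(...)` from the PyCharm console should have the same effect as the `pipmain` call, without relying on pip internals.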

These also take a while to download:

    scipy-1.5.4-cp38-cp38-macosx_10_9_x86_64.whl (29.0 MB)
    scikit_learn-0.24.0-cp38-cp38-macosx_10_9_x86_64.whl (7.2 MB)

Finally, run

pip3 install sentence_transformers

...

Successfully installed certifi-2020.12.5 chardet-4.0.0 click-7.1.2 filelock-3.0.12 idna-2.10 joblib-1.0.0 nltk-3.5 packaging-20.8 pyparsing-2.4.7 regex-2020.11.13 requests-2.25.1 sacremoses-0.0.43 scikit-learn-0.24.0 scipy-1.5.4 sentence-transformers-0.4.0 sentencepiece-0.1.94 six-1.15.0 threadpoolctl-2.1.0 tokenizers-0.9.4 tqdm-4.54.1 transformers-4.1.1 urllib3-1.26.2

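Model download

The model is downloaded automatically when execution reaches this code:

    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer('roberta-large-nli-stsb-mean-tokens')
    embeddings = model.encode(all_sentences)  # all_sentences: ['comment 1', 'comment 2', ...]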

1%| | 10.2M/1.31G [01:36<3:25:46, 106kB/s]

The download was much faster over a VPN.

    # Use UMAP to reduce the 1024-dimensional sentence_transformers embeddings to 2 dimensions.
    # Install with: pip3 install umap-learn (not: pip3 install umap)
    import umap

    umap_embeddings = umap.UMAP(n_neighbors=15, min_dist=0.0, n_components=2, metric='cosine').fit_transform(embeddings)

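Official UMAP documentation: https://umap-learn.readthedocs.io/en/latest/

Parameter overview: https://umap-learn.readthedocs.io/en/latest/parameters.html

    # n_neighbors
    #   Default 15. With 2 the plot shows disconnected, scattered points (single points are sometimes left out); with 200 it shows the overall structure.
    #   This effect well exemplifies the local/global tradeoff provided by n_neighbors.
    # min_dist
    #   How tightly points may be packed together. Default 0.1, range 0.0 to 0.99.
    #   Use small values for clustering or fine topological structure; larger values keep points apart and give the broad topological structure.
    # n_components
    #   The number of dimensions after reduction.
    # metric
    #   This controls how distance is computed in the ambient space of the input data.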
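To make the `metric='cosine'` choice concrete: cosine distance is one minus the cosine of the angle between two vectors, so it ignores vector length and compares direction only. A plain-Python sketch of that definition follows. It illustrates the formula and is not UMAP's internal implementation; `cosine_distance` is a hypothetical helper.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cos(angle between a and b) for two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Same direction, different length: distance close to 0.
    print(cosine_distance([1.0, 2.0], [2.0, 4.0]))
    # Perpendicular vectors: distance close to 1.
    print(cosine_distance([1.0, 0.0], [0.0, 1.0]))
```

This is one reason cosine is a common choice for sentence embeddings: two comments with similar meaning tend to point in a similar direction even if their embedding norms differ.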

    # Cluster analysis
    from sklearn.cluster import KMeans

    num_clusters = 10
    clustering_model = KMeans(n_clusters=num_clusters)
    clustering_model.fit(umap_embeddings)
    cluster_assignment = clustering_model.labels_
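Once `cluster_assignment` is available, the comments can be grouped by label so each cluster can be inspected or segmented separately. A minimal sketch, assuming `all_sentences` and `cluster_assignment` are parallel sequences as in the code above (`group_by_cluster` is a hypothetical helper):

```python
from collections import defaultdict


def group_by_cluster(sentences, labels):
    """Map each cluster label to the list of sentences assigned to it."""
    if len(sentences) != len(labels):
        raise ValueError("sentences and labels must have the same length")
    clusters = defaultdict(list)
    for sentence, label in zip(sentences, labels):
        # int() turns NumPy integer labels into plain Python ints for use as dict keys.
        clusters[int(label)].append(sentence)
    return dict(clusters)


if __name__ == "__main__":
    demo = group_by_cluster(["comment a", "comment b", "comment c"], [0, 1, 0])
    for label, members in sorted(demo.items()):
        print(label, members)
```

With the real data this would be called as `group_by_cluster(all_sentences, cluster_assignment)`.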

Chinese word segmentation tools

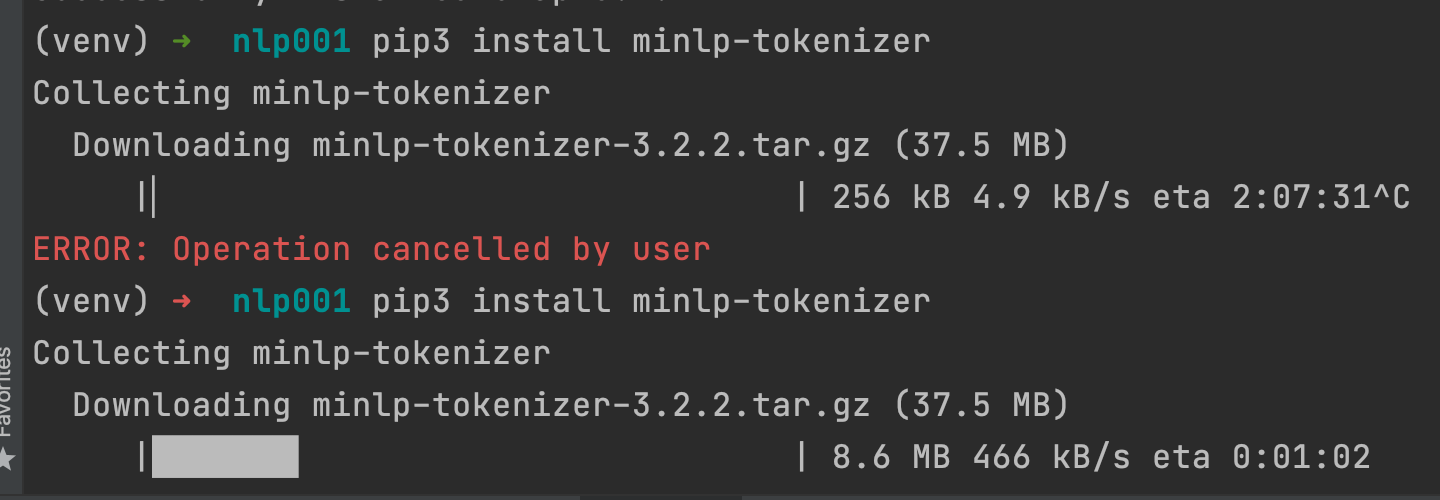

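    # from minlptokenizer.tokenizer import MiNLPTokenizer  # needs tensorflow; the install failed, so I did not use it

The network was hopeless, typically only 4.9 kB/s.

I switched to pkuseg, the open-source Chinese word segmentation toolkit from Peking University:

    import pkuseg  # https://github.com/lancopku/PKUSeg-python

    seg = pkuseg.pkuseg()  # load the model with the default configuration
    text = seg.cut('我爱北京天安门')  # segment the sentence
    print(text)  # ['我', '爱', '北京', '天安门']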
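The word-frequency step from the goal is not shown in these notes. A sketch using `collections.Counter`, assuming each comment has already been segmented into a list of tokens (for example by `seg.cut`). The `count_words` helper, the stop-word set, and the minimum-length filter are illustrative assumptions of mine:

```python
from collections import Counter


def count_words(tokenized_sentences, stop_words=frozenset(), min_length=2):
    """Count tokens across sentences, skipping stop words and very short tokens."""
    counter = Counter()
    for tokens in tokenized_sentences:
        counter.update(
            token
            for token in tokens
            if len(token) >= min_length and token not in stop_words
        )
    return counter


if __name__ == "__main__":
    # Hand-written token lists standing in for segmenter output.
    tokenized = [["我", "爱", "北京", "天安门"], ["北京", "很", "好"]]
    print(count_words(tokenized).most_common(5))
```

Dropping single-character tokens is a crude way to remove particles and pronouns; a proper stop-word list would be more precise. The resulting counter can be passed to a word-cloud library as a word-to-frequency mapping.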

Word cloud configuration options: https://blog.csdn.net/jinsefm/article/details/80645588
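A minimal sketch of the final word-cloud step, assuming the `wordcloud` package is installed and that a font file containing Chinese glyphs is available (the bundled default font is unlikely to cover Chinese characters). `render_word_cloud`, the size values, and both path arguments are placeholders of mine, not settings from the original notes:

```python
def render_word_cloud(frequencies, font_path, output_path):
    """Render a word-to-frequency mapping to an image file and return its path."""
    if not frequencies:
        raise ValueError("frequencies must contain at least one word")

    # Imported here so the check above works even where wordcloud is not installed.
    from wordcloud import WordCloud

    cloud = WordCloud(
        font_path=font_path,       # assumed: path to a font with Chinese glyphs
        width=800,
        height=600,
        background_color="white",
    )
    cloud.generate_from_frequencies(dict(frequencies))
    cloud.to_file(output_path)
    return output_path
```

A call might look like `render_word_cloud(word_counts, "/path/to/chinese-font.ttf", "cluster_0.png")`, with one image per cluster. The font path here is hypothetical and needs to point at a real font file on the machine.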
