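I have always used Storm for real-time stream processing. I studied Spark systematically a while ago but never turned it into a reusable template, so I am using the National Day holiday to build a Spark Streaming template in case the company needs one later. (The functionality mimics routine Huawei requirements: loading data into a database in a Hadoop environment.)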

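1. Prepare three machines with the cluster environment already installed. I use Red Hat Linux with CDH 5.5 (the company recommends Huawei FI or Cloudera Manager, which seem to be more stable).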

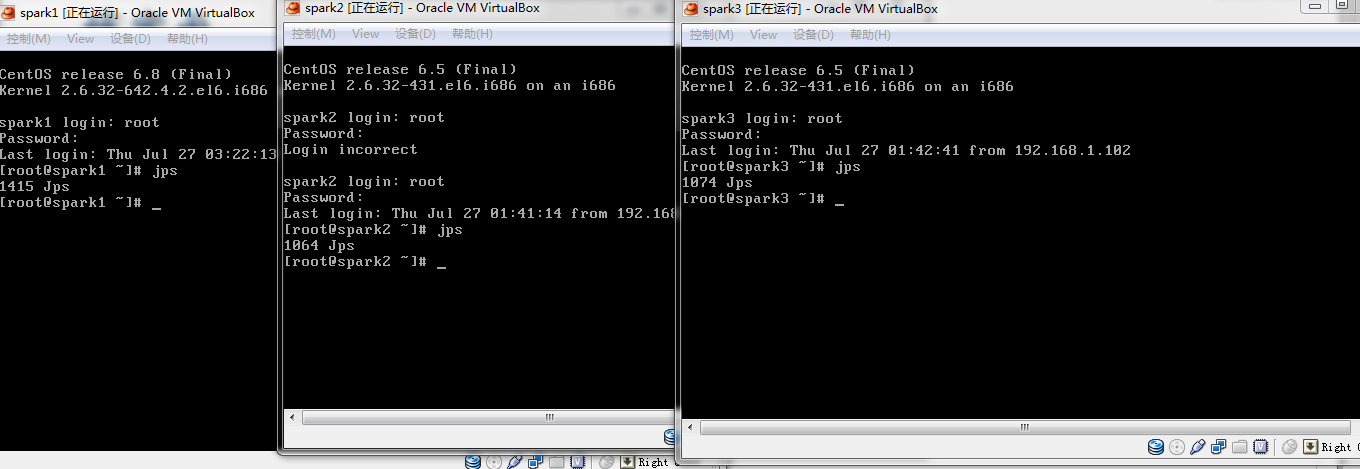

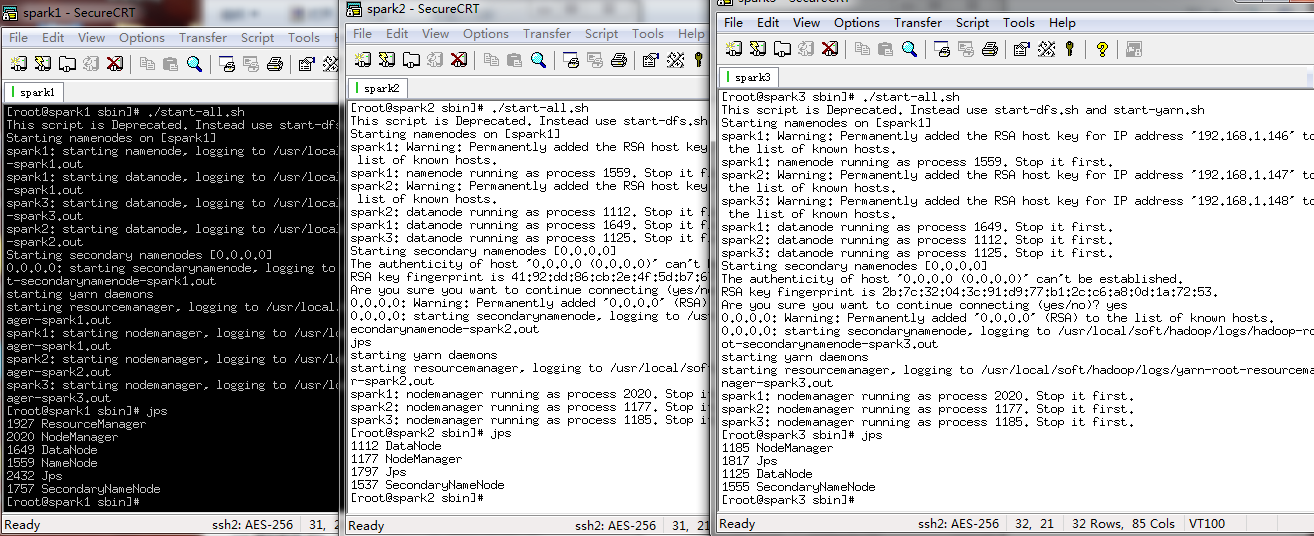

2. Connect to the cluster with the CRT tool and start the Hadoop cluster. Mine has one master and two slaves (one NameNode, two DataNodes).

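3. Start ZooKeeper (ZK) next: Kafka needs it, and Spark uses it when standalone master HA is configured. The three nodes elect one leader and two followers.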

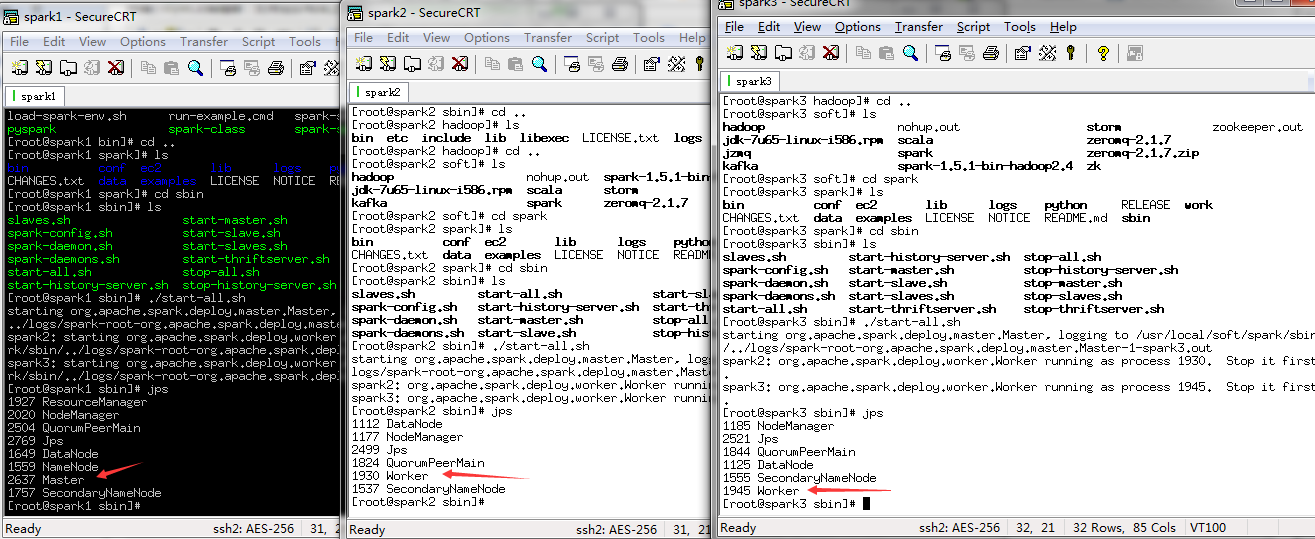
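The one-leader, two-follower result follows from ZooKeeper's majority rule. As a minimal sketch (the class and method names here are hypothetical, not part of any ZooKeeper API), the arithmetic for an ensemble of a given size looks like this:

```java
// Sketch only: majority-quorum arithmetic for a ZooKeeper ensemble.
// ZkQuorum and its methods are illustrative names, not a ZooKeeper API.
final class ZkQuorum {
    /** Smallest number of servers that forms a strict majority. */
    static int quorumSize(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    /** Number of servers that can fail while a majority is still reachable. */
    static int tolerableFailures(int ensembleSize) {
        return ensembleSize - quorumSize(ensembleSize);
    }

    public static void main(String[] args) {
        int n = 3; // spark1, spark2, spark3
        System.out.println("quorum=" + quorumSize(n)
                + ", tolerable failures=" + tolerableFailures(n));
        // prints "quorum=2, tolerable failures=1"
    }
}
```

With three nodes the ensemble keeps working if one node is down. A fourth node would not raise that tolerance, which is why odd ensemble sizes are the usual choice.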

4. Start Spark. There will be one master and two workers.

5. Start Kafka on all three machines.

6. List all existing topics; one of them will be consumed later (./kafka-topics.sh --zookeeper spark1:2181,spark2:2181,spark3:2181 --list)
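The same ZooKeeper connect string is needed again in the Java configuration, so it can help to build it in one place. A small sketch, assuming the three host names and port 2181 from the command above (the ZkConnectString helper is a hypothetical name introduced for illustration):

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Sketch only: builds a "host:port,host:port" ZooKeeper connect string.
// ZkConnectString is an illustrative helper, not part of Kafka or ZooKeeper.
final class ZkConnectString {
    static String build(List<String> hosts, int port) {
        return hosts.stream()
                .map(host -> host + ":" + port)
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        String zk = build(Arrays.asList("spark1", "spark2", "spark3"), 2181);
        // Print the topic-listing command from step 6 using the generated string.
        System.out.println("./kafka-topics.sh --zookeeper " + zk + " --list");
    }
}
```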

7. Open Eclipse and test the producer and the consumer.

producer

    /**
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */
    package kafka.examples;

    import java.util.Properties;

    import kafka.producer.KeyedMessage;
    import kafka.producer.ProducerConfig;

    public class Producer extends Thread {
        private final kafka.javaapi.producer.Producer<Integer, String> producer;
        private final String topic;
        private final Properties props = new Properties();

        public Producer(String topic) {
            // Encode message payloads as strings.
            props.put("serializer.class", "kafka.serializer.StringEncoder");
            props.put("metadata.broker.list", "spark1:9092,spark2:9092,spark3:9092");
            // Use random partitioner. Don't need the key type. Just set it to
            // Integer.
            // The message is of type String.
            producer = new kafka.javaapi.producer.Producer<Integer, String>(
                    new ProducerConfig(props));
            this.topic = topic;
        }

        @Override
        public void run() {
            for (int i = 0; i < 2000; i++) {
                String messageStr = "Message_" + i;
                System.out.println("product:" + messageStr);
                producer.send(new KeyedMessage<Integer, String>(topic, messageStr));
            }
            // Release the broker connections once all messages are sent.
            producer.close();
        }

        public static void main(String[] args) {
            Producer producerThread = new Producer(KafkaProperties.topic);
            producerThread.start();
        }
    }

consumer

    /**
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */
    package kafka.examples;

    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;
    import java.util.Properties;

    import kafka.consumer.ConsumerConfig;
    import kafka.consumer.ConsumerIterator;
    import kafka.consumer.KafkaStream;
    import kafka.javaapi.consumer.ConsumerConnector;

    public class Consumer extends Thread {
        private final ConsumerConnector consumer;
        private final String topic;

        public Consumer(String topic) {
            // Pass the configuration in when creating the connector.
            consumer = kafka.consumer.Consumer
                    .createJavaConsumerConnector(createConsumerConfig());
            this.topic = topic;
        }

        // Build the consumer configuration.
        private static ConsumerConfig createConsumerConfig() {
            Properties props = new Properties();
            props.put("zookeeper.connect", KafkaProperties.zkConnect);
            // Consumers sharing a group.id form one group; each message is
            // delivered to only one consumer within that group.
            props.put("group.id", KafkaProperties.groupId);
            props.put("zookeeper.session.timeout.ms", "400");
            props.put("zookeeper.sync.time.ms", "200");
            // Commit consumed offsets every 60 seconds.
            props.put("auto.commit.interval.ms", "60000");
            return new ConsumerConfig(props);
        }

        // The stream iterator blocks until messages arrive, so this reads like
        // push-style consumption; underneath, the consumer pulls from the brokers.
        @Override
        public void run() {
            // Map of topic -> number of streams to create for it.
            Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
            topicCountMap.put(topic, 1);
            // Create the message streams and keep them in consumerMap.
            Map<String, List<KafkaStream<byte[], byte[]>>> consumerMap = consumer
                    .createMessageStreams(topicCountMap);
            // Take the single stream for this topic and iterate over it.
            KafkaStream<byte[], byte[]> stream = consumerMap.get(topic).get(0);
            ConsumerIterator<byte[], byte[]> it = stream.iterator();
            while (it.hasNext()) {
                // Processing logic goes here.
                System.out.println(new String(it.next().message()));
            }
        }

        public static void main(String[] args) {
            Consumer consumerThread = new Consumer(KafkaProperties.topic);
            consumerThread.start();
        }
    }
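The group.id setting in the consumer decides how a topic's partitions are shared: within one group, each partition is read by only one consumer. The sketch below illustrates a simplified range-style split of partitions across the members of a group. It is not Kafka's actual rebalance code, and the partition count and consumer names are assumptions made up for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Sketch only: a simplified range-style assignment of partitions to the
// consumers of one group. Names and numbers are hypothetical.
final class GroupAssignmentSketch {
    static Map<String, List<Integer>> assign(int partitionCount, List<String> consumers) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int perConsumer = partitionCount / consumers.size();
        int extra = partitionCount % consumers.size(); // first 'extra' consumers get one more
        int next = 0;
        for (int i = 0; i < consumers.size(); i++) {
            int share = perConsumer + (i < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int p = 0; p < share; p++) {
                owned.add(next++);
            }
            result.put(consumers.get(i), owned);
        }
        return result;
    }

    public static void main(String[] args) {
        // Assumed: a topic with 3 partitions and two consumers in "group1".
        System.out.println(assign(3, Arrays.asList("consumer-a", "consumer-b")));
        // prints "{consumer-a=[0, 1], consumer-b=[2]}"
    }
}
```

If there are more consumers than partitions, the surplus consumers receive an empty list and stay idle, which is why adding consumers beyond the partition count does not increase throughput.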

config

    /**
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */
    package kafka.examples;

    public interface KafkaProperties {
        final static String zkConnect = "spark1:2181,spark2:2181,spark3:2181";
        final static String groupId = "group1";
        final static String topic = "track_log";
        // final static String kafkaServerURL = "localhost";
        // final static int kafkaServerPort = 9092;
        // final static int kafkaProducerBufferSize = 64*1024;
        // final static int connectionTimeOut = 100000;
        // final static int reconnectInterval = 10000;
        // final static String topic2 = "topic2";
        // final static String topic3 = "topic3";
        // final static String clientId = "SimpleConsumerDemoClient";
    }

8. Write the Scala code.
