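One option is to synthesize the data with the FaNT tool: https://blog.csdn.net/qzhou961/article/details/105426440. I have not tried that tool and do not plan to use it; instead I will try to build the noisy-speech dataset I need myself.

With reference to that blog post:

Designing my own noisy-speech data generator:

Module 1: noise data slicing (downsampling the noise data, then splitting it into training data and test data)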

```python
import os
from os import path

import librosa
import numpy as np
import soundfile as sf
from scipy.io import wavfile


def wav_file_resample(src, dst, dst_sample):
    """
    Resample a WAV file to the sample rate dst_sample.

    :param src: path of the source file
    :param dst: path where the resampled file is saved
    :param dst_sample: target sample rate in Hz
    :return: None
    """
    src_sig, sr = sf.read(src)
    # Recent librosa versions only accept the sample rates as keyword arguments.
    # librosa resamples along the last axis, so this expects a mono file.
    dst_sig = librosa.resample(src_sig, orig_sr=sr, target_sr=dst_sample)
    sf.write(dst, dst_sig, dst_sample)


def data_generate(dir_url):
    """Split one noise file into a training half and a test half."""
    # sample_rate: number of samples taken per second of audio
    # sig: the samples, read as a numpy array
    sample_rate, sig = wavfile.read(dir_url)
    print("Sample rate: %d" % sample_rate)
    print(sig.shape)
    print(sig)
    if sig.dtype == np.int16:
        print("16-bit integer PCM")

    # Slice the noise: the first 50% is for training, the last 50% for testing
    half = sig.shape[0] // 2
    data_train = sig[:half]
    data_test = sig[half:]

    # Build the output names: <name>_train<ext> and <name>_test<ext>
    file_name, file_sname = os.path.splitext(dir_url)
    ntrain_abspath = file_name + '_train' + file_sname
    ntest_abspath = file_name + '_test' + file_sname
    sf.write(ntrain_abspath, data_train, sample_rate)
    sf.write(ntest_abspath, data_test, sample_rate)


def scaner_file(url):
    """Recursively resample and split every file under url."""
    for f in os.listdir(url):
        real_url = path.join(url, f)
        if path.isfile(real_url):
            abspath = path.abspath(real_url)
            # Downsample in place to 16 kHz, then split into train/test halves.
            # Both calls live inside this branch so they never run on a directory.
            wav_file_resample(abspath, abspath, 16000)
            data_generate(abspath)
        elif path.isdir(real_url):
            scaner_file(real_url)
```
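The 50/50 split performed in `data_generate` can be checked in memory without touching any files. The following is a minimal sketch, assuming the signal is already held in a NumPy array; `split_halves` is a hypothetical helper name used only for illustration and is not part of the original script.

```python
import numpy as np


def split_halves(sig):
    """Return (first_half, second_half) of an array, split along axis 0."""
    half = sig.shape[0] // 2  # integer division, so no float index is needed
    return sig[:half], sig[half:]


# Synthetic stand-in for a noise recording: 7 int16 samples
demo = np.arange(7, dtype=np.int16)
train_part, test_part = split_halves(demo)
print(train_part.tolist())  # [0, 1, 2]
print(test_part.tolist())   # [3, 4, 5, 6]
```

With an odd number of samples the extra sample goes to the test half, which matches the slicing `sig[:int(half)]` and `sig[int(half):]`.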

Module 2: data synthesis (mixing the sound data with the noise data)

```python
import os
import random

import librosa
import numpy as np
import soundfile as sf

sound_name = 'SI'


def switch(item):
    """Map a noise id (0 to 12) to the name of the noise recording."""
    switcher = {
        0: 'buccaneer1',
        1: 'buccaneer2',
        2: 'destroyerengine',
        3: 'destroyerops',
        4: 'f16',
        5: 'factory2',
        6: 'hfchannel',
        7: 'leopard',
        8: 'm109',
        9: 'machinegun',
        10: 'pink',
        11: 'volvo',
        12: 'white'
    }
    return switcher.get(item)


# Collect the second-level directories under file_path_1
def get_dir(files_1):
    for f1 in files_1:
        file_path_22 = os.path.join(file_path_1, f1)
        for f2 in os.listdir(file_path_22):
            file_list.append(os.path.join(file_path_22, f2))
            f1Af2_list.append(f1 + "/" + f2)


# Reset the output folders: delete every file inside them
def format():
    mixfile_path = r'D:\Users\DE\声纹识别研究\数据集\train_data\-5dB\mix_sound'
    sfile_path = r'D:\Users\DE\声纹识别研究\数据集\train_data\-5dB\sound'
    nfile_path = r'D:\Users\DE\声纹识别研究\数据集\train_data\-5dB\noise'
    for folder in (mixfile_path, sfile_path, nfile_path):
        for root, dirs, files in os.walk(folder):
            for f in files:
                os.remove(os.path.join(root, f))


# Synthesize noisy speech at a specific SNR: sound + noise
def mix_sANDn(file, tfile):
    out_root = 'D:/Users/DE/声纹识别研究/数据集/train_data/-5dB/'
    # Make sure the output sub-folders exist before writing into them
    for sub in ('mix_sound', 'sound', 'noise'):
        os.makedirs(out_root + sub + '/' + tfile, exist_ok=True)
    for root, dirs, files in os.walk(file):
        for f in files:
            path = os.path.join(root, f)
            print(path)
            # Random noise type; random.randint includes both endpoints
            n_id = random.randint(0, 12)
            n_name = switch(n_id)
            # Clean speech
            a, a_sr = librosa.load(path, sr=16000)
            # Noise
            b, b_sr = librosa.load(
                'D:/Users/DE/声纹识别研究/数据集/noisex_train/' + n_name + '_train' + '.wav',
                sr=16000)
            # Take a random noise segment with the same length as the clean
            # speech, chosen so that the slice never runs past the end
            start = random.randint(0, b.shape[0] - a.shape[0])
            n_b = b[start:start + a.shape[0]]
            # Sums of squares (signal and noise energy)
            sum_s = np.sum(a ** 2)
            sum_n = np.sum(n_b ** 2)
            # Noise weight for an SNR of -5 dB: 10 ** (-5 / 10) = 10 ** -0.5
            x = np.sqrt(sum_s / (sum_n * pow(10, -0.5)))
            noise = x * n_b
            target = a + noise
            stem = os.path.splitext(f)[0]
            sf.write(out_root + 'mix_sound/' + tfile + '/' + stem + '_' + n_name + '.wav',
                     target, 16000)
            sf.write(out_root + 'sound/' + tfile + '/' + stem + '.wav', a, 16000)
            sf.write(out_root + 'noise/' + tfile + '/' + stem + '_' + n_name + '_train' + '.wav',
                     noise, 16000)


if __name__ == '__main__':
    file_list = []
    f1Af2_list = []
    file_path_1 = "D:\\Users\\DE\\声纹识别研究\\数据集\\MY_TIMIT\\TRAIN"
    files_1 = os.listdir(file_path_1)
    get_dir(files_1)
    format()
    for i in range(0, len(file_list)):
        file = file_list[i]
        target_file = f1Af2_list[i]
        print(file)
        print(target_file)
        mix_sANDn(file, target_file)
```
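The step that picks a random noise segment of the same length as the clean speech is easy to get wrong at the boundaries. Below is a small sketch of that step in isolation, assuming NumPy's `default_rng` generator in place of the `random` module; `random_noise_segment` is a hypothetical name chosen for this example.

```python
import numpy as np


def random_noise_segment(noise, length, rng):
    """Return a random contiguous slice of `noise` with exactly `length` samples."""
    if length > noise.shape[0]:
        raise ValueError("noise is shorter than the requested segment")
    # rng.integers excludes the upper bound, so +1 keeps the last start valid
    start = int(rng.integers(0, noise.shape[0] - length + 1))
    return noise[start:start + length]


rng = np.random.default_rng(0)
noise = np.arange(100, dtype=np.float32)  # synthetic stand-in for a noise file
seg = random_noise_segment(noise, 10, rng)
print(seg.shape)  # (10,)
```

The explicit length check turns a noise file that is too short into a clear error instead of a negative range passed to the random generator.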

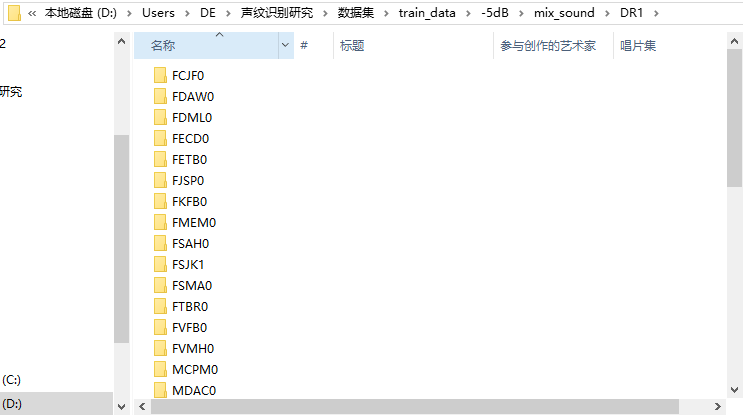

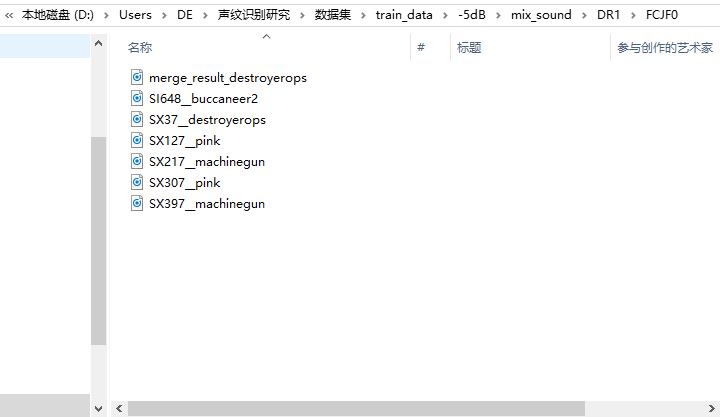

Directory structure of the generated data

Figure 1

Figure 2

Figure 3

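Formulas involved

First, an explanation of the formula:

\[
SNR(s(t), n(t)) = 10 \cdot \log_{10} \frac{\sum_{t} s^2(t)}{\sum_{t} n^2(t)}
\]

- SNR is the signal-to-noise ratio, in dB, between the clean speech (sound) and the noise

- s(t) and n(t) are the sound and the noise, respectively

- The sums of squares \(\sum_{t} s^2(t)\) and \(\sum_{t} n^2(t)\) are the energy of the clean speech and the energy of the noise, respectively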
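The SNR definition above translates directly into a few lines of NumPy. This is a minimal sketch, assuming two equal-length one-dimensional arrays; `snr_db` is a hypothetical helper name and does not appear in the scripts above.

```python
import numpy as np


def snr_db(s, n):
    """SNR in dB: 10 * log10(sum(s^2) / sum(n^2))."""
    s = np.asarray(s, dtype=np.float64)
    n = np.asarray(n, dtype=np.float64)
    return 10.0 * np.log10(np.sum(s ** 2) / np.sum(n ** 2))


# A signal with twice the amplitude of the noise has four times its energy
s = np.full(1000, 2.0)
n = np.full(1000, 1.0)
print(round(float(snr_db(s, n)), 2))  # 6.02
```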

To synthesize noisy speech at a specific SNR, the noise has to be rescaled. For a target SNR of q dB, multiply the noise amplitude by \(\alpha\), giving \(\alpha n(t)\); the noise energy then becomes \(\alpha^2\) times its original value, and the formula becomes:

\[
q = SNR(s(t), \alpha n(t)) = 10 \cdot \log_{10} \frac{\sum_{t} s^2(t)}{\alpha^2 \sum_{t} n^2(t)}
\]

Solving for \(\alpha\) gives:

\[
\alpha = \sqrt{\frac{\sum_{t} s^2(t)}{10^{\frac{q}{10}} \sum_{t} n^2(t)}}
\]
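For completeness, the intermediate steps can be written out as follows, using the same symbols as above (q is the target SNR in dB). Divide both sides by 10, take both sides as powers of 10, then isolate \(\alpha^2\):

\[
\frac{q}{10} = \log_{10} \frac{\sum_{t} s^2(t)}{\alpha^2 \sum_{t} n^2(t)}
\quad\Longrightarrow\quad
10^{\frac{q}{10}} = \frac{\sum_{t} s^2(t)}{\alpha^2 \sum_{t} n^2(t)}
\quad\Longrightarrow\quad
\alpha^2 = \frac{\sum_{t} s^2(t)}{10^{\frac{q}{10}} \sum_{t} n^2(t)}
\]

For q = -5 the factor is \(10^{-0.5} \approx 0.316\), which is the `pow(10, -0.5)` term used in the Module 2 code.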

In summary, once the SNR formula is known and a target SNR has been chosen, the noisy speech is synthesized as:

\[
mix(s(t), n(t)) = s(t) + \alpha n(t)
\]
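The scaling and mixing formulas can be combined into one function that works for any target SNR rather than a fixed -5 dB. This is a sketch under the assumption that the speech and noise arrays already have the same length; `mix_at_snr` is a hypothetical name, and the random test signals stand in for real recordings.

```python
import numpy as np


def mix_at_snr(s, n, q_db):
    """Scale noise n so that s + alpha * n has an SNR of q_db; return (mix, alpha)."""
    s = np.asarray(s, dtype=np.float64)
    n = np.asarray(n, dtype=np.float64)
    # alpha = sqrt(sum(s^2) / (10^(q/10) * sum(n^2)))
    alpha = np.sqrt(np.sum(s ** 2) / (10.0 ** (q_db / 10.0) * np.sum(n ** 2)))
    return s + alpha * n, alpha


rng = np.random.default_rng(0)
s = rng.standard_normal(16000)  # one second of stand-in "speech" at 16 kHz
n = rng.standard_normal(16000)  # one second of stand-in noise
mix, alpha = mix_at_snr(s, n, -5.0)

# Recompute the SNR from the scaled noise to confirm it matches the target
achieved = 10.0 * np.log10(np.sum(s ** 2) / np.sum((alpha * n) ** 2))
print(round(float(achieved), 6))  # -5.0
```

Passing the target SNR as a parameter makes it straightforward to generate several datasets (for example -5, 0 and 5 dB) from the same clean speech.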

