|NO.Z.00008|^^ Configuration ^^|Hadoop&Spark.V08|Spark.v08|sparkcore|Spark Standalone cluster mode & history server|

I. History Server Configuration

### --- History Server

~~~ # Configure the history server: spark-defaults.conf

[root@hadoop02 ~]# vim $SPARK_HOME/conf/spark-defaults.conf

# history server

spark.master spark://hadoop02:7077

spark.eventLog.enabled true

spark.eventLog.dir hdfs://hadoop01:9000/spark-eventlog

spark.eventLog.compress true

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.driver.memory 512m

~~~ # Configure the history server: spark-env.sh

[root@hadoop02 ~]# vim $SPARK_HOME/conf/spark-env.sh

export JAVA_HOME=/opt/yanqi/servers/jdk1.8.0_231

export HADOOP_HOME=/opt/yanqi/servers/hadoop-2.9.2

export HADOOP_CONF_DIR=/opt/yanqi/servers/hadoop-2.9.2/etc/hadoop

export SPARK_DIST_CLASSPATH=$(/opt/yanqi/servers/hadoop-2.9.2/bin/hadoop classpath)

export SPARK_MASTER_HOST=hadoop02

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_CORES=1

export SPARK_WORKER_MEMORY=1g

export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.retainedApplications=50 -Dspark.history.fs.logDirectory=hdfs://hadoop01:9000/spark-eventlog"

~~~ # Distribute the files to the other nodes, then restart the cluster

[root@hadoop02 ~]# rsync-script $SPARK_HOME/conf/spark-defaults.conf

[root@hadoop02 ~]# rsync-script $SPARK_HOME/conf/spark-env.sh

[root@hadoop02 ~]# stop-all-spark.sh

[root@hadoop02 ~]# start-all-spark.sh

### --- spark.history.retainedApplications

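~~~ Sets how many application histories are kept in the cache (default 50). When the limit is exceeded, the oldest entries are evicted.

~~~ The cache size does not limit how many applications are displayed. Applications that are not in the cache have to be read from disk, so they load slightly more slowly.

~~~ Prerequisite: the HDFS service must be running, because the event logs are written to HDFS.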
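Event logging does not create its target directory. As far as I know, an application started with `spark.eventLog.enabled true` fails at startup if `spark.eventLog.dir` is missing, and the history server also refuses to start when its log directory does not exist. The following is a minimal sketch of preparing the directory once HDFS is up. The function name `ensure_eventlog_dir` is hypothetical; the path in the usage comment is the one from the configuration above.

```shell
# Create the event log directory in HDFS unless it already exists.
# ensure_eventlog_dir is a hypothetical helper name, not part of Spark or Hadoop.
ensure_eventlog_dir() {
  local dir="$1"
  if hdfs dfs -test -d "$dir"; then
    echo "exists: $dir"
  else
    hdfs dfs -mkdir -p "$dir" && echo "created: $dir"
  fi
}

# Usage on a node with the Hadoop client configured (HDFS must be running):
#   ensure_eventlog_dir hdfs://hadoop01:9000/spark-eventlog
```

Running it before `start-history-server.sh` avoids a startup failure caused by a missing directory.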

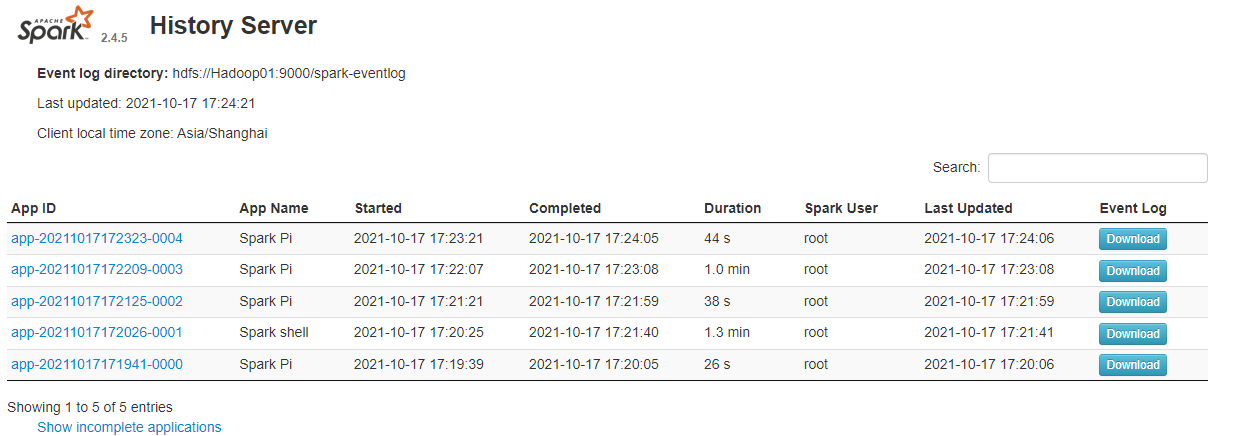

~~~ Start the history server, then run jps and confirm that a HistoryServer process is listed. If the process is not listed, check the corresponding log.

### --- Web UI address: http://hadoop02:18080/

[root@hadoop02 ~]# start-history-server.sh

[root@hadoop02 ~]# jps

HistoryServer
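The jps check can also be scripted. This is a hedged sketch: `history_server_running` and `list_history_apps` are hypothetical helper names, `jps` and `curl` are assumed to be on the PATH, and `/api/v1/applications` is the history server's REST endpoint for listing applications.

```shell
# Succeed when jps lists a HistoryServer JVM on this host.
history_server_running() {
  jps | awk '$2 == "HistoryServer" { found = 1 } END { exit !found }'
}

# Print the JSON list of applications known to the history server.
# The default URL is the web UI address used in this article.
list_history_apps() {
  local base_url="${1:-http://hadoop02:18080}"
  curl -fsS "${base_url}/api/v1/applications"
}

# Usage:
#   history_server_running && list_history_apps
```

An empty JSON list is normal until at least one application has run with event logging enabled.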

Appendix 1: Final Configuration Files

### --- $SPARK_HOME/conf/spark-defaults.conf

[root@hadoop02 ~]# vim $SPARK_HOME/conf/spark-defaults.conf

# history server

spark.master spark://hadoop02:7077

spark.eventLog.enabled true

spark.eventLog.dir hdfs://hadoop01:9000/spark-eventlog

spark.eventLog.compress true

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.driver.memory 512m

### --- $SPARK_HOME/conf/spark-env.sh

[root@hadoop02 ~]# vim $SPARK_HOME/conf/spark-env.sh

export JAVA_HOME=/opt/yanqi/servers/jdk1.8.0_231

export HADOOP_HOME=/opt/yanqi/servers/hadoop-2.9.2

export HADOOP_CONF_DIR=/opt/yanqi/servers/hadoop-2.9.2/etc/hadoop

export SPARK_DIST_CLASSPATH=$(/opt/yanqi/servers/hadoop-2.9.2/bin/hadoop classpath)

export SPARK_MASTER_HOST=hadoop02

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_CORES=1

export SPARK_WORKER_MEMORY=1g

export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.retainedApplications=50 -Dspark.history.fs.logDirectory=hdfs://hadoop01:9000/spark-eventlog"
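Applications write their event logs to `spark.eventLog.dir`, and the history server reads from `spark.history.fs.logDirectory`, so the two files have to point at the same location. The following is a minimal sketch of checking that. The function names are hypothetical, and the parsing assumes the whitespace-separated `key value` layout and the single-line `SPARK_HISTORY_OPTS` shown in this article.

```shell
# Print the value of spark.eventLog.dir from a spark-defaults.conf style file
# (whitespace-separated "key value" lines, as used in this article).
eventlog_dir() {
  awk '$1 == "spark.eventLog.dir" { print $2 }' "$1"
}

# Print the value of -Dspark.history.fs.logDirectory from a spark-env.sh style file.
history_log_dir() {
  grep -o 'spark\.history\.fs\.logDirectory=[^ "]*' "$1" | head -n 1 | cut -d= -f2-
}

# Succeed only when both values are non-empty and identical.
# This is a strict string comparison, so it also flags differences in host-name case.
check_eventlog_dirs() {
  local written served
  written=$(eventlog_dir "$1")
  served=$(history_log_dir "$2")
  if [ -n "$written" ] && [ "$written" = "$served" ]; then
    echo "OK: $written"
  else
    echo "MISMATCH: eventLog.dir='$written' history.fs.logDirectory='$served'"
    return 1
  fi
}

# Usage:
#   check_eventlog_dirs "$SPARK_HOME/conf/spark-defaults.conf" "$SPARK_HOME/conf/spark-env.sh"
```

Because the comparison is strict, a reported mismatch is a prompt to look at the two values, not proof that the setup is broken.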
