LLMs Learn Task Heuristics from Demonstrations: A Heuristic-Driven Prompting Strategy for Document-Level Event Argument Extraction

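1. Overview

There has been a lot of research on constructing CoT-based prompts, for example: CoT (Wei et al., 2022), Automate-CoT (Shum et al., 2023), Auto-CoT (Zhang et al., 2023), Iter-CoT (Sun et al., 2023), Active-CoT (Diao et al., 2023).

This paper attempts to explain why such prompts work: through supervised ICL (in-context learning), the LLM learns heuristics suited to the task, and this is what lets it reach the expected performance.

Building on this explanation, the paper proposes an automatic CoT construction method based on heuristics and analogy, which brings improvements across multiple tasks.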

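2. Verifying the effectiveness of heuristics

2.1 The relationship between training data and high-quality prompts

Hypothesis: if LLMs really do learn task-appropriate heuristics from the training data, then a high-quality prompt should contain many of the heuristics present in those examples.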

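Validation experiment: on three datasets, the authors did the following:

- Provide multiple examples to GPT-4, have it identify the heuristics they contain, and count those heuristics.

- Take the prompts built by six strong methods (CoT (Wei et al., 2022), Automate-CoT (Shum et al., 2023), Auto-CoT (Zhang et al., 2023), Iter-CoT (Sun et al., 2023), Active-CoT (Diao et al., 2023), Boosted (Pitis et al., 2023)), give them to GPT-4 as well, and have it identify and count the heuristics. The prompt used for GPT-4 here is the same as in the first step.

- Apply the same procedure to randomly constructed prompts, which serve as the baseline.

- Compare the counts obtained in the first three steps.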

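The results are as follows:

The experiment shows that the number of heuristics contained in high-quality prompts is roughly the same as the number contained in the provided examples.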

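2.2 The effect of prompt complexity

Hypothesis: if LLMs could not learn heuristics from the training data, then a more complex prompt should perform about the same as a simple one.

Validation experiment: construct simple and complex prompts, run both on the same dataset, and compare the results.

- Simple prompt: every example is prompted with the same heuristic.

- Complex prompt: the authors used two different ways of constructing the prompt.

- The two kinds of prompt were compared on the StrategyQA and SST-2 datasets. The results are as follows:

Note: the paper does not describe the experimental details, so the following is a guess. The context given to the LLM consists of the prompt plus n examples, and the output is the result. The results in the figures indicate that the complex prompt construction outperforms the single-heuristic construction.

Result: the CoT-based prompt generation method performs better.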

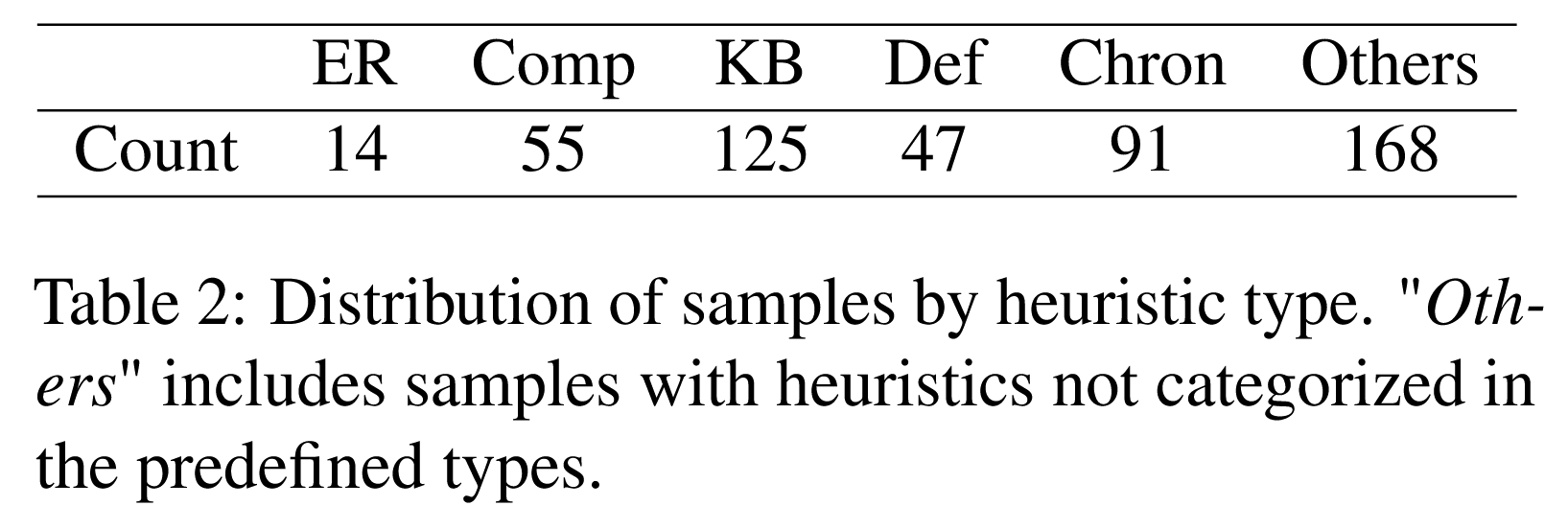

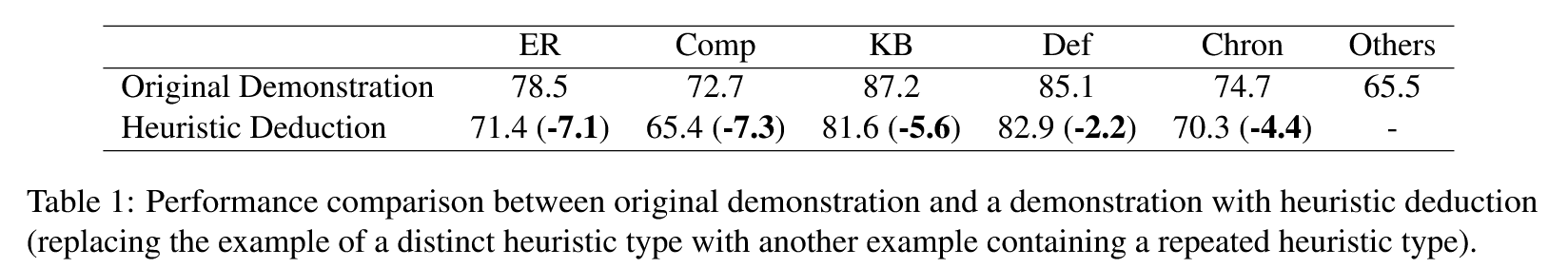

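2.3 The effect of reducing the number of heuristics

Experiment:

- Dataset: StrategyQA

- Prompt construction method: Automate-CoT ("Automatic prompt augmentation and selection with chain-of-thought from labeled data", Shum et al., 2023)

- GPT-4 is used to identify the heuristics that may appear in StrategyQA: empathetic reasoning (ER), comparison (Comp), knowledge-based (KB), definition-based (Def), and chronological (Chron)

- The classification results are as follows:

- For each example, one heuristic is removed and another one is repeated in its place, so that the prompt length stays roughly the same. Predictions are made with gpt-4-1106-preview.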
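The replacement step in the last bullet can be sketched as follows. This is only a minimal illustration, assuming each demonstration in the prompt has already been tagged with one heuristic category; the `Demo` type and the `ablate_heuristic` function are hypothetical names, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Demo:
    heuristic: str  # assumed tag, e.g. "ER", "Comp", "KB", "Def", "Chron"
    text: str       # the demonstration itself (question, rationale, answer)


def ablate_heuristic(demos, removed, repeated):
    """Replace every demo tagged `removed` with a demo tagged `repeated`.

    The number of demos is unchanged, so the prompt length stays
    roughly the same while one heuristic disappears from the prompt.
    """
    donors = [d for d in demos if d.heuristic == repeated]
    if not donors:
        raise ValueError(f"no demo uses heuristic {repeated!r}")
    ablated = []
    used = 0
    for demo in demos:
        if demo.heuristic == removed:
            # Cycle through the donor demos to fill each gap.
            ablated.append(donors[used % len(donors)])
            used += 1
        else:
            ablated.append(demo)
    return ablated


demos = [Demo("ER", "demo-er"), Demo("Comp", "demo-comp"), Demo("KB", "demo-kb")]
ablated = ablate_heuristic(demos, removed="ER", repeated="KB")
print([d.heuristic for d in ablated])
```

Keeping the demonstration count fixed is the point of the design: any drop in accuracy can then be attributed to the missing heuristic rather than to a shorter prompt.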

3. Method

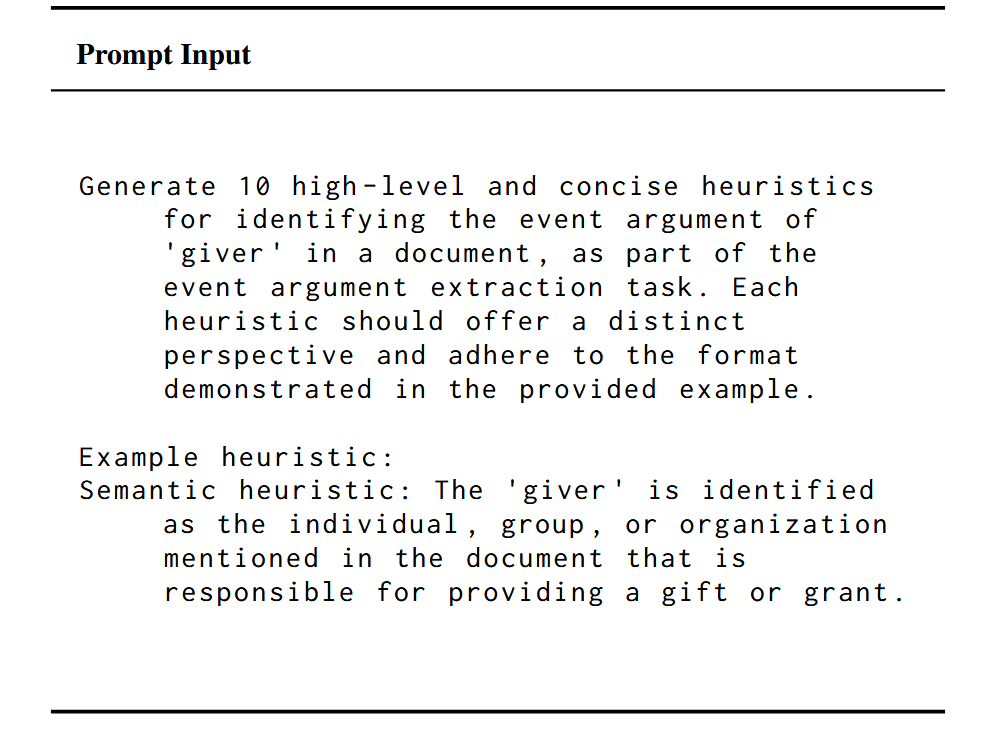

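3.1 Heuristic-based prompt construction

The earlier experiments showed that the LLM learns specific heuristics from examples and thereby achieves strong results. The authors therefore propose putting the heuristics directly into the prompt, instead of supplying examples and leaving the LLM to infer the heuristics implicitly.

This requires choosing suitable heuristics.

Method:

- Heuristic generation: the authors give GPT-4 a description of the task and have it generate 10 heuristics for each task.

- Selection: the authors validate the heuristics on 1% of the training set (the validation criterion is not described in detail) and keep the top 3. Selection raises accuracy by 7.17 percentage points, from 26.52% before selection to 33.69% after.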
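The selection step can be sketched as a plain top-k ranking. Since the validation criterion is not described in detail, the scoring function here is an assumption: `score_fn` stands for "accuracy of a prompt using this heuristic on the 1% validation split", and the scores in `toy_scores` are made-up numbers for illustration only.

```python
def select_top_heuristics(heuristics, score_fn, k=3):
    """Rank candidate heuristics by a validation score and keep the best k."""
    scored = [(score_fn(h), h) for h in heuristics]
    # Stable sort: candidates with equal scores keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [h for _, h in scored[:k]]


# Hypothetical validation scores, NOT results from the paper.
toy_scores = {
    "semantic": 0.34,
    "syntactic": 0.31,
    "dependency": 0.29,
    "positional": 0.12,
}
top = select_top_heuristics(list(toy_scores), toy_scores.get, k=3)
print(top)

# The gain quoted above, in percentage points.
gain = round(33.69 - 26.52, 2)
print(gain)
```

In practice `score_fn` would wrap an LLM call over the validation examples, which is the expensive part; evaluating on only 1% of the training set keeps that cost small.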

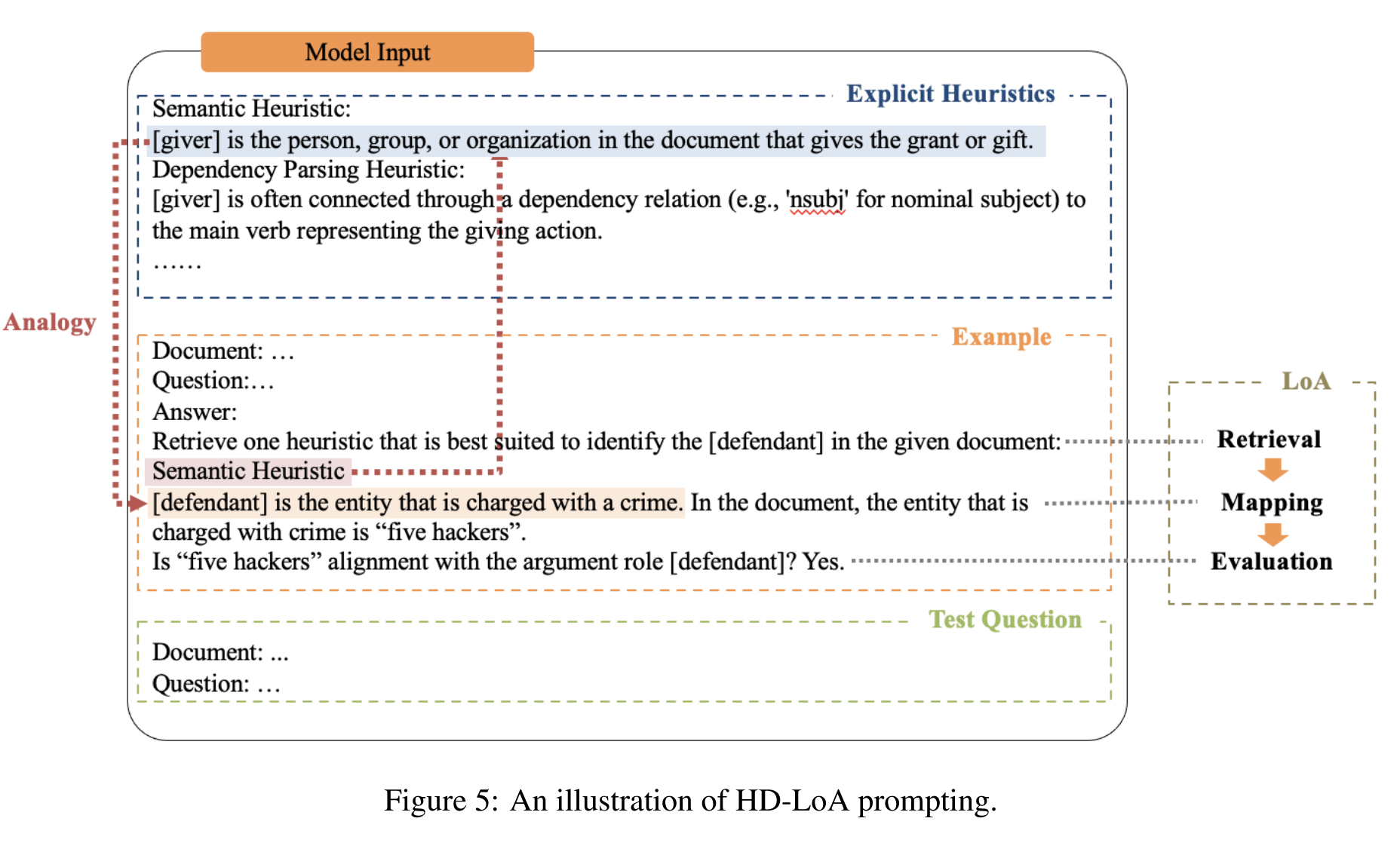

3.2 Analogy prompting

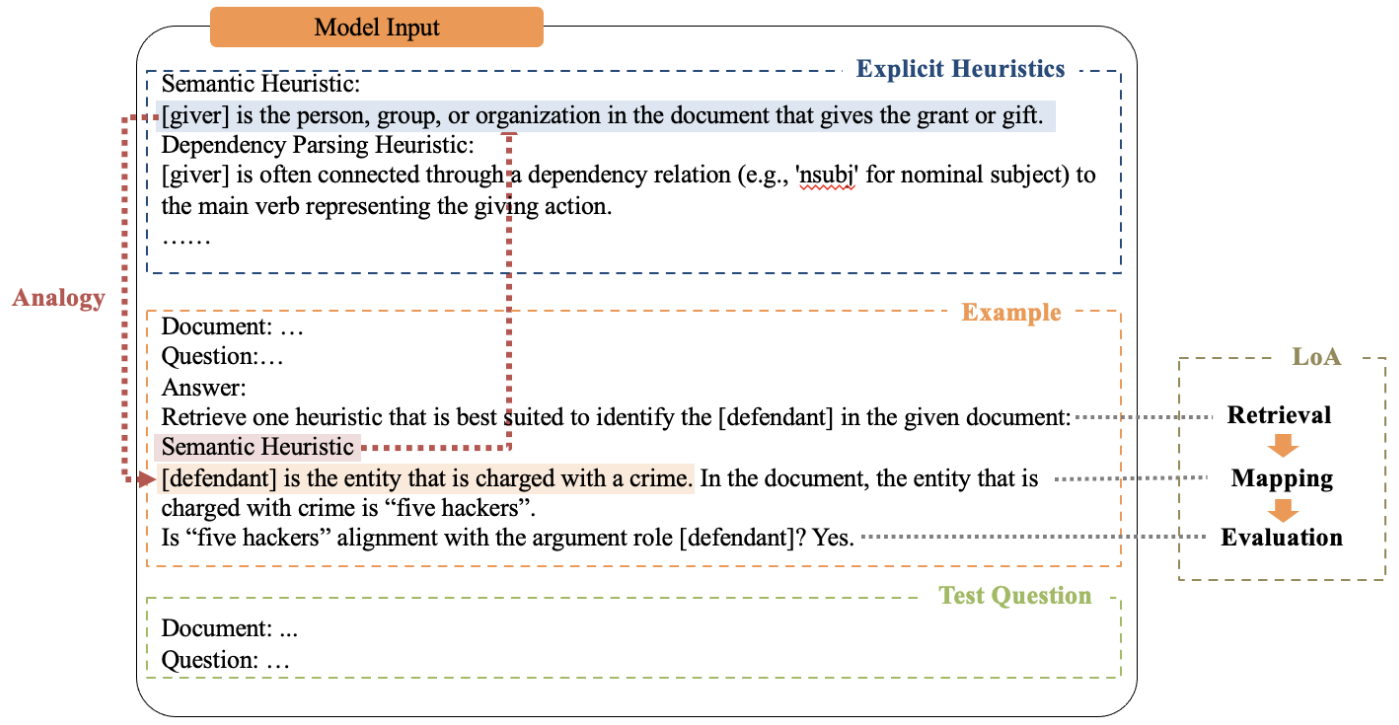

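Event argument extraction has to deal with event types that were never seen in the demonstrations. To handle this, the authors guide the LLM to reason by analogy and produce a matching, heuristic-bearing prompt for the new event. The process has three stages:

- Retrieval

- Mapping

- Evaluation

The prompt structure is shown in the figure:

Here is an example from the RAMS dataset: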

Your task is Event Argument Extraction. In this task, you will be provided with a document that describes an event and the goal is to extract the event arguments that correspond to each argument role associated with the event. The terminologies for this task is as follows:

Event trigger: the main word that most clearly expresses an event occurrence, typically a verb or a noun. The trigger word is located between special tokens "<t>" and "</t>" in the document, and only the event argument explicitly linked to the trigger word should be considered.

Event argument: an entity mention, temporal expression or value that serves as a participant or attribute with a specific role in an event. Event arguments should be quoted exactly as they appear in the given document.

Argument role: the relationship between an argument to the event in which it participates.

Heuristics: serving as guiding rules for extracting event arguments.

Specifically, you will use the heuristic provided in the heuristic list to guide identify event arguments, and re-evaluate the identified argument candidates to get the final answer.

heuristic list:

[

Semantic Heuristic: [giver] is the person, group, or organization in the document that gives the grant or gift.

Syntactic Heuristic: The [giver] may be recognized by analyzing sentence structure, often appearing before prepositional phrases starting with 'to' that introduce the recipient (e.g., "X gives Y to Z", X is the 'giver').

Dependency Parsing Heuristic: In parsing the sentence structure, the [giver] is often connected through a dependency relation (e.g., 'nsubj' for nominal subject) to the main verb representing the giving action.

]

Example task:

Question: Extract the event arguments of giver, beneficiary, and recipient in the "transaction.transaction.giftgrantprovideaid" event in the provided document, with the trigger word being "granted", highlighted between "<t>" and "</t>". When pinpointing each event argument, it's crucial to quote the entity exactly as it appears in the text. If an event argument is not explicitly mentioned or cannot be directly associated with the event indicated by the trigger word, please respond with "not specified".

Document: a news document

Trigger sentence: "The access to the research center in the city was <t>granted</t> by the administrator. The man, Ripley Johnson, earned it."

Answer:

Elaborate the meaning of event type and its argument roles:

"transaction.transaction.giftgrantprovideaid": The event involves a transfer of money or resources in the form of a gift, grant, or provision of aid, signaled by the action of granting.

[giver]: the giver is the person, group, or organization that provides or grants money, resources, or access in the event.

[beneficiary]: the beneficiary is the party who ultimately benefits from the transaction.

[recipient]: the recipient is the entity that receives the money, resources, or access granted in the event.

Recognizing [giver] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [giver] in the given document: Semantic Heuristic and Syntactic Heuristic.

Step 2: Apply selected heuristics to identify [giver] independently.

Step 2.1: Identify the [giver] based on Semantic Heuristic: "[giver] is the person, group, or organization that gives the grant or gift in the document". Applying this heuristic to the document, the entity that gives access of the research center is "administrator".

Step 2.2: Identify the [giver] based on Syntactic Heuristic: "The [giver] may be recognized by analyzing sentence structure, often appearing before prepositional phrases starting with 'to' that introduce the recipient (e.g., 'X gives Y to Z', X is the 'giver')". Applying this heuristic to the given document, the entity that granted access to the research center is 'research center'.

Step 3: Reevaluate argument candidates: ["administrator", "research center"]

Is argument "administrator" alignment with the argument role [giver]? Yes, because "administrator" is directly responsible for the action of granting, establishing their role as the provider of access in the event.

Is argument "research center" alignment with the argument role [giver]? No, because "research center" is the place that access has been granted to, but it doesn't give access.

[giver]: "administrator"

Recognizing [beneficiary] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [beneficiary] in the given document: Semantic Heuristic.

Step 2: Apply selected heuristics to identify [beneficiary] independently.

Step 2.1: Identify the [beneficiary] based on Semantic Heuristic: "[beneficiary] is the entity that ultimately benefits from the gift or grant". Applying this heuristic to the given document, the entity that ultimately benefits from the grant is "not specified".

Step 3: Reevaluate argument candidate: ["not specified"]

Is argument "not specified" alignment with the argument role [beneficiary]? Yes, because the [beneficiary] is not explicitly mentioned so "not specified" is correct.

[beneficiary]: "not specified"

Recognizing [recipient] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [recipient] in the given document: Semantic Heuristic and Dependency Parsing Heuristic.

Step 2: Apply selected heuristics to identify [recipient] independently.

Step 2.1: Identify the [recipient] based on Semantic Heuristic: "[recipient] is the entity that receives the gift or grant". Applying heuristic f1 to the given document, the entity that receives the gift or grant is "Ripley Johnson".

Step 2.2: Identify the [recipient] based on Dependency Parsing Heuristic: "[recipient] is often highlighted in the sentence through a dependency relation that denotes the receiver of the action, such as 'dobj' (direct object) for direct transactions linked to the main verb of the event". Applying this heuristic to the given document, the entity connected to the verb 'granted' through a dobj relation is "Ripley Johnson".

Step 3: Reevaluate argument candidate: ["Ripley Johnson"]

Is argument "Ripley Johnson" alignment with the argument role [recipient]? Yes, because phrase "earned it" implies that "Ripley Johnson" was the intended recipient of the access, aligning with the role of [recipient] in the context of the event.

[recipient]: "Ripley Johnson"

Target task:

Question: Extract the event arguments of judgecourt, crime, defendant, and place in the "justice.judicialconsequences.na" event in the provided document, with the trigger word being "extradited", highlighted between "<t>" and "</t>" in the news document. When pinpointing each event argument, it's crucial to quote the entity exactly as it appears in the text. If an event argument is not explicitly mentioned or cannot be directly associated with the event indicated by the trigger word, please respond with "not specified".

Document: MADRID Two Indian men arrested in Spain for smuggling Russian anti - aircraft missiles were <t> extradited </t> to the United States , Spanish military police said in a statement on Thursday . Spanish police detained the pair in Barcelona in 2014 along with two Pakistani men , who have already been extradited , as part of a joint operation - dubbed Operation Yoga - with the U.S. Drug Enforcement Administration ( DEA ) to bust a smuggling ring based in the Catalonia region . The group had offered the Russian - built Igla missiles to foreign paramilitary groups .

Trigger sentence: "MADRID Two Indian men arrested in Spain for smuggling Russian anti - aircraft missiles were <t> extradited </t> to the United States , Spanish military police said in a statement on Thursday ."

Prioritize the identification of event arguments within the specified trigger sentence. If an event argument is not explicitly mentioned, please answer "not specified".
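The prompt shown above has four parts: a task description, a heuristic list, a worked example task, and the target task. A minimal sketch of assembling such a prompt is given here; `build_prompt` and its parameters are hypothetical names chosen for illustration, and the strings are abridged placeholders rather than the full prompt text.

```python
def build_prompt(task_description, heuristics, example_task, target_task):
    """Join the four prompt sections in the order used in the sample above.

    `heuristics` is a list of (name, rule) pairs; the bracketed list
    layout mirrors the "heuristic list:" block of the sample prompt.
    """
    heuristic_lines = "\n".join(f"{name}: {rule}" for name, rule in heuristics)
    sections = [
        task_description,
        "heuristic list:\n[\n" + heuristic_lines + "\n]",
        "Example task:\n" + example_task,
        "Target task:\n" + target_task,
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    task_description="Your task is Event Argument Extraction. ...",
    heuristics=[
        ("Semantic Heuristic", "[giver] is the person, group, or organization ..."),
        ("Syntactic Heuristic", "The [giver] may be recognized by ..."),
    ],
    example_task="Question: ...\nDocument: ...\nAnswer: ...",
    target_task="Question: ...\nDocument: ...",
)
print(prompt)
```

Only the target task changes from one document to the next, so the first three sections can be built once per dataset and reused.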

In effect, the authors give a single example that guides the LLM to produce its own CoT plan and then complete the task. The result is as follows:

Elaborate the meaning of event type and its argument roles:

"justice.judicialconsequences.na": The event involves a court or legal judgement, followed by sanctions or consequences, often signaled by the action of extraditing.

[judgecourt]: the entity or group, often a court or legal system, that executes the judgment or sanction in the event.

[crime]: the action, behavior, or circumstance that has led to the legal judgement or consequence.

[defendant]: the person or entity that is being subjected to the legal judgment or sanction.

[place]: the location where the crime occurred, or from where the defendant was extradited.

Recognizing [judgecourt] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [judgecourt] in the given document: Semantic Heuristic.

Step 2: Identify the [judgecourt] based on Semantic Heuristic: "[judgecourt] is the entity or group, often a court that executes the judgement." Applying this heuristic to the given document, the entity that extradites the defendants is the "Spanish military police".

[judgecourt]: "Spanish military police"

Recognizing [crime] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [crime] in the given document: Semantic Heuristic.

Step 2: Apply selected heuristics to identify [crime] independently. Identify the [crime] based on Semantic Heuristic: "[crime] is the action, behavior, or circumstance that has led to the legal judgment or consequence." Thus, the crime leading to extradition is "smuggling Russian anti - aircraft missiles".

[crime]: "smuggling Russian anti - aircraft missiles"

Recognizing [defendant] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [defendant] in the given document: Semantic Heuristic.

Step 2: Apply selected heuristics to identify [defendant] independently. Identify the [defendant] based on Semantic Heuristic: "[defendant] is the person or entity that is being subjected to the legal judgment or sanction." Thus, the defendant in this document is "Two Indian men".

[defendant]: "Two Indian men"

Recognizing [place] in the given document:

Step 1: Select one or two heuristics in the heuristic list that are most suitable to identify the [place] in the given document: Semantic Heuristic.

Step 2: Apply selected heuristics to identify [place] independently. Identify the [place] based on Semantic Heuristic: "[place] is the location where the crime occurred, or from where the defendant was extradited." Thus, the place mentioned in the extradition event in this document is "Spain".

[place]: "Spain"
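To score an output like the one above, the final answers have to be pulled out of the reasoning text. The sketch below assumes that final answers always appear on their own line in the form `[role]: "value"`, as they do in this sample; `parse_arguments` is a hypothetical helper, not part of the paper.

```python
import re

# A final-answer line: a role in square brackets, a colon, a quoted value.
ANSWER_LINE = re.compile(r'^\[(?P<role>[^\]]+)\]:\s*"(?P<value>[^"]*)"\s*$')


def parse_arguments(output):
    """Collect {role: value} from lines shaped like [role]: "value".

    Role definitions such as `[crime]: the action, ...` are skipped
    because their text is not wrapped in double quotes.
    """
    arguments = {}
    for line in output.splitlines():
        match = ANSWER_LINE.match(line.strip())
        if match:
            arguments[match.group("role")] = match.group("value")
    return arguments


sample = """[judgecourt]: the entity or group, often a court or legal system.
Step 1: Select one or two heuristics in the heuristic list ...
[judgecourt]: "Spanish military police"
[place]: "Spain"
"""
print(parse_arguments(sample))
```

A value of "not specified" is returned like any other string, so the caller decides whether to treat it as an empty argument.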

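4. Experimental results

The authors evaluate the whole method on the event argument extraction task. The goal of this task is to extract structured data from unstructured text and label it with the roles the user asks for.

For example: given a piece of text, we need to extract the structured tuple <Attacker, Victim>.

The task is split into two subtasks:

- Identify the spans that may be the Attacker or the Victim

- Classify the spans identified in the first subtask, labeling each one correctly as Attacker or Victim

Evaluation metrics: the standard metrics for event argument extraction are used.

- Arg-I: whether the event argument is correctly identified

- Arg-C: whether the event argument is correctly classified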
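The two metrics can be illustrated with a small F1 computation. This sketch assumes the common convention that Arg-I counts a prediction as correct when its span matches a gold argument, and Arg-C additionally requires the role to match; the post does not spell out the exact matching rule, and `arg_scores` is a hypothetical helper. The example spans are borrowed from the sample prompt in Section 3.2.

```python
def f1(predicted, gold):
    """F1 between two sets of items."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def arg_scores(predicted, gold):
    """Return (Arg-I F1, Arg-C F1) for sets of (span, role) pairs."""
    # Arg-I ignores the role: only the extracted span has to match.
    arg_i = f1({span for span, _ in predicted}, {span for span, _ in gold})
    # Arg-C needs both the span and the role to match.
    arg_c = f1(predicted, gold)
    return arg_i, arg_c


gold = {("administrator", "giver"), ("Ripley Johnson", "recipient")}
predicted = {("administrator", "giver"), ("Ripley Johnson", "beneficiary")}
print(arg_scores(predicted, gold))
```

Here both spans are found, so Arg-I is perfect, while one role is wrong, so Arg-C is lower. Arg-C can never exceed Arg-I under this convention.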

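Datasets: DocEE and RAMS

Baselines:

- Standard: "Large language models are few-shot clinical information extractors"

- CoT: "Chain of thought prompting elicits reasoning in large language models" (Wei et al., 2022)

Results:

- Compared with standard few-shot prompting, LLM + HD-LoA performs much better.

- Compared with conventional CoT prompting, the method brings a moderate improvement.

Finally, the authors run an ablation study that removes the two modules described in 3.1 and 3.2, to verify the effectiveness of the method.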
