Installing a Kafka Cluster on Linux


Environment preparation

centosOS2 10.3.13.197

centosOS3 10.3.13.194

centosOS1 10.3.13.213

Configure the system hosts file on every node

sudo vim /etc/hosts

10.3.13.213 node1

10.3.13.197 node2

10.3.13.194 node3
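Every node needs all three entries, so it can help to check the file after editing it. The following is a minimal sketch, not part of the original setup: the function name `missing_hosts` is hypothetical, and it assumes the node1/node2/node3 names shown above.

```shell
# List the expected node names that have no entry in a hosts file.
# The file defaults to /etc/hosts; pass another path to check a copy.
missing_hosts() {
  hosts_file="${1:-/etc/hosts}"
  for name in node1 node2 node3; do
    # Skip comment lines; the first field is the IP, later fields are names.
    awk -v n="$name" '
      $1 ~ /^#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$hosts_file" || echo "$name"
  done
}
```

Running `missing_hosts` with no argument on each machine should print nothing once /etc/hosts is complete.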

A JDK must be installed

java -version # check the installed version

Install ZooKeeper

Download the zookeeper-3.4.14.tar.gz package

Extract it:

tar -zxf ./zookeeper-3.4.14.tar.gz

Create the directory that stores ZooKeeper's data and state

mkdir -p /usr/local/zookeeper/zookeeper-3.4.14/zkdata/

Rename the sample configuration file

# root @ localhost in /usr/local/zookeeper/zookeeper-3.4.14/conf

mv zoo_sample.cfg zoo.cfg

Edit zoo.cfg

dataDir=/usr/local/zookeeper/zookeeper-3.4.14/zkdata/

dataLogDir=/usr/local/zookeeper/zookeeper-3.4.14/zklog

clientPort=2181

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

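In the zkdata directory on each node, create a file named myid that contains a single integer matching that node's server.N entry in zoo.cfg: 1 on node1, 2 on node2, 3 on node3.

These numbers identify the ZooKeeper servers. In this setup every host also runs one Kafka broker, so ZooKeeper servers and Kafka brokers map one-to-one by host.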
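The myid file can also be derived from the node name rather than typed by hand. This is a minimal sketch under stated assumptions: the helper names `myid_for_host` and `write_myid` are hypothetical, and the node name is passed explicitly because the machines' own hostnames (centosOS1 and so on) differ from the node1/node2/node3 aliases.

```shell
# Hypothetical helper: map a node alias such as "node2" to its ZooKeeper id "2".
myid_for_host() {
  zk_id="${1#node}"
  # Reject names without the "node" prefix or with an empty suffix.
  if [ "$zk_id" = "$1" ] || [ -z "$zk_id" ]; then
    echo "unexpected node name: $1" >&2
    return 1
  fi
  # Reject suffixes that are not purely numeric.
  case "$zk_id" in
    *[!0-9]*) echo "unexpected node name: $1" >&2; return 1 ;;
  esac
  printf '%s\n' "$zk_id"
}

# Write the id into the data directory (defaults to the zkdata path used above).
write_myid() {
  data_dir="${2:-/usr/local/zookeeper/zookeeper-3.4.14/zkdata}"
  new_id="$(myid_for_host "$1")" || return 1
  mkdir -p "$data_dir"
  printf '%s\n' "$new_id" > "$data_dir/myid"
}
```

For example, run `write_myid node1` on 10.3.13.213 and `write_myid node2` on 10.3.13.197.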

Add ZooKeeper to the PATH environment variable

vim ~/.bash_profile

PATH=$PATH:$HOME/bin:/usr/local/src/cmake/bin:/usr/local/zookeeper/zookeeper-3.4.14/bin

export PATH

source ~/.bash_profile

Start ZooKeeper

zkServer.sh start

# zkServer.sh also accepts stop, restart, and status

Check the startup log:

zookeeper.out

Finally, confirm that every node started correctly

10.3.13.213

$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: follower

10.3.13.197

$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: leader

10.3.13.194

$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: follower
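The same check can be run from a single machine. The sketch below is an illustration, not part of the original procedure: it assumes `nc` (netcat) is installed and that ZooKeeper's `srvr` four-letter command is reachable on port 2181. The function names are hypothetical.

```shell
# Extract the "Mode:" value from the output of ZooKeeper's srvr command.
parse_zk_mode() {
  awk -F': ' '$1 == "Mode" { print $2 }'
}

# Query one server. Assumes nc is installed and the client port is reachable;
# some nc variants need an extra timeout flag to close the connection.
zk_mode() {
  echo srvr | nc "$1" "${2:-2181}" | parse_zk_mode
}

# Print the role of every node in the ensemble.
check_ensemble() {
  for zk_host in node1 node2 node3; do
    printf '%s: %s\n' "$zk_host" "$(zk_mode "$zk_host")"
  done
}
```

A healthy three-node ensemble reports exactly one leader and two followers, as in the output above.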

Install Kafka

1. Standalone deployment

Extract the archive

tar -zxvf kafka_2.11-2.2.0.tgz

Add Kafka to the PATH. The directory to add is:

/usr/local/kafka/kafka_2.11-2.2.0/bin

Append it to PATH in the profile:

vim ~/.bash_profile

Apply the change immediately:

source ~/.bash_profile

Create the data directory:

mkdir /usr/local/kafka/kafka_2.11-2.2.0/data

ZooKeeper configuration in standalone mode (only one server entry may be set):

server.1=kafka_node1:2888:3888

Start ZooKeeper

$ zkServer.sh start

$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: standalone

Start Kafka

kafka-server-start.sh /usr/local/kafka/kafka_2.11-2.2.0/config/server.properties

Stop Kafka

Stop the Kafka broker first, then ZooKeeper; the broker needs ZooKeeper to shut down cleanly.

$ kafka-server-stop.sh

$ zkServer.sh stop

2. Distributed Kafka deployment

Configuration file: server.properties

# root @ localhost in /usr/local/kafka/kafka_2.11-2.2.0/config

$ vim server.properties

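Edit the configuration file

# Unique ID of this Kafka broker; must differ on every node
broker.id=0

# Allow topics to be deleted
delete.topic.enable=true

# Listener for a non-SASL cluster; the host name differs on every node
listeners=PLAINTEXT://node1:9092

# Number of threads that handle network requests
num.network.threads=10

# Number of threads that handle disk I/O requests
num.io.threads=20

# Socket send buffer size in bytes
socket.send.buffer.bytes=102400

# Socket receive buffer size in bytes
socket.receive.buffer.bytes=102400

# Maximum size of a single request in bytes
socket.request.max.bytes=104857600

# Directory where message logs are stored
log.dirs=/usr/local/kafka/kafka_2.11-2.2.0/data

# Default number of partitions per topic
num.partitions=6

# How long messages are retained, in hours (168 hours = 7 days)
log.retention.hours=168

# ZooKeeper connection string
zookeeper.connect=node1:2181,node2:2181,node3:2181

# ZooKeeper connection timeout in milliseconds
zookeeper.connection.timeout.ms=6000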
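Only broker.id and listeners change from node to node, so the same file can be copied to every machine and patched. The following is a minimal sketch: the helper name `set_broker_identity` is hypothetical, and the ids 0, 1, 2 for node1, node2, node3 are an assumption (the original only shows broker.id=0 for node1).

```shell
# Hypothetical helper: set broker.id and listeners in a server.properties file.
# Usage: set_broker_identity <file> <broker-id> <host>
set_broker_identity() {
  conf_tmp="$1.tmp"
  # Rewrite the broker.id line and the listeners line, whether or not the
  # listeners line is still commented out; leave advertised.listeners alone.
  sed \
    -e "s|^broker\.id=.*|broker.id=$2|" \
    -e "s|^#\{0,1\}listeners=.*|listeners=PLAINTEXT://$3:9092|" \
    "$1" > "$conf_tmp" && mv "$conf_tmp" "$1"
}
```

For example, on node2 one might run `set_broker_identity /usr/local/kafka/kafka_2.11-2.2.0/config/server.properties 1 node2`.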

Start ZooKeeper on each of the three nodes

zkServer.sh start

Start Kafka on each of the three nodes

kafka-server-start.sh /usr/local/kafka/kafka_2.11-2.2.0/config/server.properties

To run it as a background process:

nohup /usr/local/kafka/kafka_2.11-2.2.0/bin/kafka-server-start.sh /usr/local/kafka/kafka_2.11-2.2.0/config/server.properties &

List the topics

kafka-topics.sh --list --zookeeper node1:2181,node2:2181,node3:2181
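Listing topics on a fresh cluster returns nothing, so a short end-to-end check is useful. The sketch below wraps the Kafka 2.2.0 command-line tools in functions. The topic name `smoke-test` is hypothetical, and the broker addresses assume node2 and node3 listen on port 9092 in the same way as node1.

```shell
ZK_CONNECT="node1:2181,node2:2181,node3:2181"
BROKERS="node1:9092,node2:9092,node3:9092"   # assumed listener addresses
TOPIC="smoke-test"                            # hypothetical topic name

# Create a topic replicated across all three brokers.
create_topic() {
  kafka-topics.sh --create --zookeeper "$ZK_CONNECT" \
    --replication-factor 3 --partitions 6 --topic "$TOPIC"
}

# Show partition leaders and replicas for the topic.
describe_topic() {
  kafka-topics.sh --describe --zookeeper "$ZK_CONNECT" --topic "$TOPIC"
}

# Send one message, then read one message back.
send_one() {
  echo "hello" | kafka-console-producer.sh --broker-list "$BROKERS" --topic "$TOPIC"
}
read_one() {
  kafka-console-consumer.sh --bootstrap-server "$BROKERS" --topic "$TOPIC" \
    --from-beginning --max-messages 1
}
```

Calling `create_topic`, `describe_topic`, `send_one`, and `read_one` in that order exercises topic creation, replication, producing, and consuming.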

Install a Kafka monitoring tool

1. References

Kafka Eagle https://www.cnblogs.com/smartloli/p/5829395.html

https://blog.csdn.net/qq_19524879/article/details/82848797

https://blog.csdn.net/u014532217/article/details/79069841

2. Download Kafka Eagle

http://www.kafka-eagle.org/ kafka-eagle-bin-1.3.3.tar.gz

https://docs.kafka-eagle.org/2.Install/2.Installing.html

Install the monitoring tool on 10.3.13.197

tar -zxf kafka-eagle-web-1.3.3-bin.tar.gz

3. Add Kafka Eagle to the environment variables

vim ~/.bash_profile

export KE_HOME=/usr/local/kafka-eagle/kafka-eagle-web-1.3.3

PATH=$PATH:$HOME/bin:/usr/local/src/cmake/bin:/usr/local/zookeeper/zookeeper-3.4.14/bin:/usr/local/kafka/kafka_2.11-2.2.0/bin:$KE_HOME/bin

export PATH

Apply the change

$ source ~/.bash_profile

4. Edit system-config.properties in the conf directory

# root @ localhost in /usr/local/kafka-eagle/kafka-eagle-web-1.3.3/conf/system-config.properties

######################################

# multi zookeeper&kafka cluster list

######################################

kafka.eagle.zk.cluster.alias=cluster1

cluster1.zk.list=node1:2181,node2:2181,node3:2181

######################################

# zk client thread limit

######################################

kafka.zk.limit.size=25

######################################

# kafka eagle webui port

######################################

kafka.eagle.webui.port=8048

######################################

# kafka offset storage

######################################

cluster1.kafka.eagle.offset.storage=kafka

cluster2.kafka.eagle.offset.storage=zk

######################################

# enable kafka metrics

######################################

kafka.eagle.metrics.charts=true

kafka.eagle.sql.fix.error=true

######################################

# kafka sql topic records max

######################################

kafka.eagle.sql.topic.records.max=5000

######################################

# alarm email configure

######################################

kafka.eagle.mail.enable=true

kafka.eagle.mail.sa=alert_sa

kafka.eagle.mail.username=alert_sa@163.com

kafka.eagle.mail.password=mqslimczkdqabbbh

kafka.eagle.mail.server.host=smtp.163.com

kafka.eagle.mail.server.port=25

######################################

# alarm im configure

######################################

#kafka.eagle.im.dingding.enable=true

#kafka.eagle.im.dingding.url=https://oapi.dingtalk.com/robot/send?access_token=

#kafka.eagle.im.wechat.enable=true

#kafka.eagle.im.wechat.token=https://qyapi.weixin.qq.com/cgi-bin/gettoken?corpid=xxx&corpsecret=xxx

#kafka.eagle.im.wechat.url=https://qyapi.weixin.qq.com/cgi-bin/message/send?access_token=

#kafka.eagle.im.wechat.touser=

#kafka.eagle.im.wechat.toparty=

#kafka.eagle.im.wechat.totag=

#kafka.eagle.im.wechat.agentid=

######################################

# delete kafka topic token

######################################

kafka.eagle.topic.token=keadmin

######################################

# kafka sasl authenticate

######################################

cluster1.kafka.eagle.sasl.enable=false

cluster1.kafka.eagle.sasl.protocol=SASL_PLAINTEXT

cluster1.kafka.eagle.sasl.mechanism=PLAIN

######################################

# kafka jdbc driver address

######################################

kafka.eagle.driver=com.mysql.jdbc.Driver

kafka.eagle.url=jdbc:mysql://10.3.13.250:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

kafka.eagle.username=root

kafka.eagle.password=111111

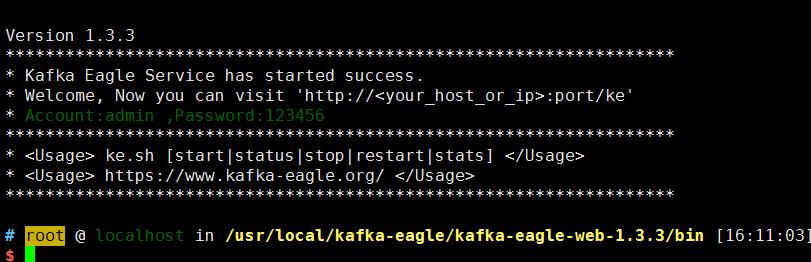

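5. Start Kafka Eagle (ke)

Once the database connection is configured, Kafka Eagle creates its database automatically on first start.

Make the startup script executable:

sudo chmod +x ke.sh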

./ke.sh start
