spring-kafka producer

2. Environment setup

One caveat: the following entries must be added to the local hosts file:

10.3.13.213 node1

10.3.13.197 node2

10.3.13.194 node3

Otherwise the client fails with an error because it cannot resolve the broker addresses.
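A quick way to confirm the hosts entries took effect is to ask the JVM to resolve the three names before starting the application. The sketch below is only an illustration: the class name `HostCheck` and its `canResolve` helper are made up for this post, and it assumes the brokers advertise themselves as node1, node2 and node3.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

class HostCheck {

    /** Returns true if the JVM can resolve the given host name or IP literal. */
    static boolean canResolve(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Host names taken from the hosts entries listed above.
        for (String host : new String[] {"node1", "node2", "node3"}) {
            System.out.println(host + " resolvable: " + canResolve(host));
        }
    }
}
```

If any of the names prints `false`, the hosts file is the first place to look.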

Create a Spring Boot project in IntelliJ IDEA

For the dependencies, select Web and Kafka.

3. pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.5.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.kafka.example</groupId>

<artifactId>kafka-demo</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>kafka-demo</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

common: shared code module

1. BeanUtils.java: serialization and deserialization

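package com.utils.common;

import java.io.ByteArrayInputStream;

import java.io.ByteArrayOutputStream;

import java.io.IOException;

import java.io.ObjectInputStream;

import java.io.ObjectOutputStream;

public class BeanUtils {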

private BeanUtils() {

}

/**

* Serializes an object to a byte array

* @param obj

* @return

*/

public static byte[] bean2Byte(Object obj) {

byte[] bytes = null;

try {

ByteArrayOutputStream byteArray = new ByteArrayOutputStream();

ObjectOutputStream outputStream = new ObjectOutputStream(byteArray);

outputStream.writeObject(obj);

outputStream.flush();

bytes = byteArray.toByteArray();

} catch (IOException ex) {

System.out.println(ex.getMessage());

}

return bytes;

}

/**

* Deserializes a byte array back into an object

* @param bytes

* @return

*/

public static Object byte2Obj(byte[] bytes) {

Object obj = null;

try {

ByteArrayInputStream byteArrayInputStream = new ByteArrayInputStream(bytes);

ObjectInputStream inputStream = new ObjectInputStream(byteArrayInputStream);

obj = inputStream.readObject();

} catch (Exception ex) {

System.out.println(ex.getMessage());

}

return obj;

}

}
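To see the two helpers working together, here is a small round-trip sketch using the same JDK serialization mechanism. It is not the BeanUtils class itself: `RoundTripDemo` and its nested `Message` class are hypothetical stand-ins (`Message` plays the role of LogInfo), and unlike BeanUtils it closes the streams with try-with-resources and rethrows failures instead of printing them.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

class RoundTripDemo {

    /** Hypothetical stand-in for LogInfo; any Serializable class works. */
    static class Message implements Serializable {
        private static final long serialVersionUID = 1L;
        final String logType;
        final String body;

        Message(String logType, String body) {
            this.logType = logType;
            this.body = body;
        }
    }

    /** Serializes an object to bytes; the stream is closed before the buffer is read. */
    static byte[] toBytes(Object obj) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(obj);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    /** Deserializes bytes produced by toBytes back into an object. */
    static Object fromBytes(byte[] bytes) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("deserialization failed", e);
        }
    }

    public static void main(String[] args) {
        Message original = new Message("ERROR", "<log/>");
        Message copy = (Message) fromBytes(toBytes(original));
        System.out.println(copy.logType + " " + copy.body);
    }
}
```

Throwing instead of returning null makes a failed serialization visible to the caller, which matters once these bytes are handed to Kafka.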

2. CommonApplication.java: application entry point

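import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication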

public class CommonApplication {

public static void main(String[] args) {

SpringApplication.run(CommonApplication.class, args);

}

}

3. DateUtils.java: date/time utility

package com.utils.common;

import java.util.Calendar;

import java.util.Date;

public class DateUtils {

/**

* Returns a Date whose fields, when read in the local time zone, show the current UTC wall-clock time

* @return

*/

public static Date getUtcDate(){

Calendar calendar=Calendar.getInstance();// current local time

int zoneOffset=calendar.get(Calendar.ZONE_OFFSET);// time zone offset in milliseconds

int dstOffset=calendar.get(Calendar.DST_OFFSET);// daylight saving offset in milliseconds

calendar.add(Calendar.MILLISECOND,-(zoneOffset+dstOffset));

Date date=calendar.getTime();

return date;

}

}
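Since the project targets Java 1.8, the same need can also be met with java.time, which carries the zone explicitly instead of shifting the instant. This is an alternative sketch, not part of the original module; the class name `UtcTimeDemo` and its two methods are assumptions made for illustration.

```java
import java.time.ZoneOffset;
import java.time.ZonedDateTime;
import java.util.Date;

class UtcTimeDemo {

    /** Current moment expressed as a UTC date-time (offset +00:00). */
    static ZonedDateTime nowUtc() {
        return ZonedDateTime.now(ZoneOffset.UTC);
    }

    /** Views an existing java.util.Date in UTC without changing the instant it represents. */
    static ZonedDateTime toUtc(Date date) {
        return date.toInstant().atZone(ZoneOffset.UTC);
    }

    public static void main(String[] args) {
        System.out.println(nowUtc());
        System.out.println(toUtc(new Date(0L)));
    }
}
```

A `java.util.Date` always stores milliseconds since the epoch in UTC, so only the formatting step needs to know about time zones.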

4. LogInfo.java: entity class for incoming log records

package com.utils.common;

import java.io.Serializable;

public class LogInfo implements Serializable {

public String logType;

public String xmlString;

public String calledSource;

public String getLogType() {

return logType;

}

public void setLogType(String logType) {

this.logType = logType;

}

public String getCalledSource() {

return calledSource;

}

public void setCalledSource(String calledSource) {

this.calledSource = calledSource;

}

public String getXmlString() {

return xmlString;

}

public void setXmlString(String xmlString) {

this.xmlString = xmlString;

}

}

5. LogUploadResult.java: entity class for the log upload result

package com.utils.common;

public class LogUploadResult {

public LogUploadResult(boolean isSuccess, ServiceLogUploadResult errorCode) {

this.isSuccess = isSuccess;

this.errorCode = errorCode;

}

private boolean isSuccess;

private String message;

public ServiceLogUploadResult errorCode;

public boolean isSuccess() {

return isSuccess;

}

public void setSuccess(boolean success) {

isSuccess = success;

}

public String getMessage() {

return message;

}

public void setMessage(String message) {

this.message = message;

}

public ServiceLogUploadResult getErrorCode() {

return errorCode;

}

public void setErrorCode(ServiceLogUploadResult errorCode) {

this.errorCode = errorCode;

}

}

6. ServiceLogUploadResult.java: result-code enum for log processing

package com.utils.common;

public enum ServiceLogUploadResult {

INVALID_IP_ADDRESS("Invalid IP", 1),

DEVICE_NOT_FOUND("Registered device not found", 2),

DEVICE_NOT_REGISTERED("Device not registered", 3),

UPLOAD_SUCCESSFUL("Upload successful", 4),

UPLOAD_UNSUCCESSFUL("Upload failed", 5),

INVALID_LOG_TYPE("Invalid log type", 6),

UNKNOWN("Unknown error", 99);

private ServiceLogUploadResult(String description, int code) {

this.code = code;

this.description = description;

}

public String getDescription() {

return description;

}

public void setDescription(String description) {

this.description = description;

}

public int getCode() {

return code;

}

public void setCode(int code) {

this.code = code;

}

private String description;

private int code;

}

Kafka producer code module

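1. application.yml: configures the Kafka broker addresses and the serializers (keys are sent as strings, values are serialized from the object to a byte array)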

spring:

  kafka:

    bootstrap-servers: 10.3.13.213:9092,10.3.13.197:9092,10.3.13.194:9092

    producer:

      key-serializer: org.apache.kafka.common.serialization.StringSerializer

      value-serializer: com.kafka.example.kafka.EncodeingKafka

      buffer-memory: 33554432

2. DecodeingKafka.java: implements Kafka's Deserializer interface

package com.kafka.example.kafka;

import com.utils.common.BeanUtils;

import org.apache.kafka.common.serialization.Deserializer;

import java.util.Map;

/**

* Implements Kafka's Deserializer interface

*/

public class DecodeingKafka implements Deserializer<Object> {

@Override

public void configure(Map<String, ?> map, boolean b) {

}

@Override

public Object deserialize(String s, byte[] bytes) {

return BeanUtils.byte2Obj(bytes);

}

@Override

public void close() {

}

}

3. EncodeingKafka.java: implements Kafka's Serializer interface

package com.kafka.example.kafka;

import com.utils.common.BeanUtils;

import org.apache.kafka.common.serialization.Serializer;

import java.util.Map;

/**

* Implements Kafka's Serializer interface

*/

public class EncodeingKafka implements Serializer<Object> {

@Override

public void configure(Map<String, ?> map, boolean b) {

}

@Override

public byte[] serialize(String s, Object o) {

return BeanUtils.bean2Byte(o);

}

@Override

public void close() {

}

}

4. KafkaSendResultHandler.java: callback invoked when a send succeeds or fails

package com.kafka.example.kafka;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.springframework.kafka.support.ProducerListener;

import org.springframework.stereotype.Component;

@Component

public class KafkaSendResultHandler implements ProducerListener {

@Override

public void onSuccess(ProducerRecord producerRecord, RecordMetadata recordMetadata) {

System.out.println("Message sent successfully");

}

@Override

public void onError(ProducerRecord producerRecord, Exception exception) {

System.out.println("Message send failed");

}

}

5. KafkaProducerController.java: sends the message

package com.kafka.example.kafkademo;

import com.kafka.example.kafka.KafkaSendResultHandler;

import com.utils.common.LogInfo;

import com.utils.common.LogUploadResult;

import com.utils.common.ServiceLogUploadResult;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.context.annotation.ComponentScan;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@ComponentScan(basePackages = "com.kafka.example.kafka")

@RestController

@RequestMapping("ServiceLog")

public class KafkaProducerController {

private final static Logger logger= LoggerFactory.getLogger(KafkaProducerController.class);

// Send messages asynchronously

// KafkaTemplate sends asynchronously by default

@Autowired

private KafkaSendResultHandler kafkaSendResultHandler;

@Autowired

private KafkaTemplate<String, LogInfo> kafkaTemplate;

@RequestMapping("DoAddLog")

public LogUploadResult doAddLog(LogInfo logInfo){

LogUploadResult result=new LogUploadResult(false, ServiceLogUploadResult.UNKNOWN);

try {

if(logInfo==null){

logger.error("logInfo is null");

result.setMessage("logInfo is null");

return result;

}

kafkaTemplate.setProducerListener(kafkaSendResultHandler);

kafkaTemplate.send("log_topic",logInfo);

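// send() is asynchronous: this only confirms the record was handed to the producer

result.setSuccess(true);

result.setErrorCode(ServiceLogUploadResult.UPLOAD_SUCCESSFUL);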

return result;

}catch (Exception ex){

logger.error("Failed to send log to Kafka", ex);

result.setSuccess(false);

result.setMessage("upload unsuccessful");

result.setErrorCode(ServiceLogUploadResult.UPLOAD_UNSUCCESSFUL);

}

return result;

}

}

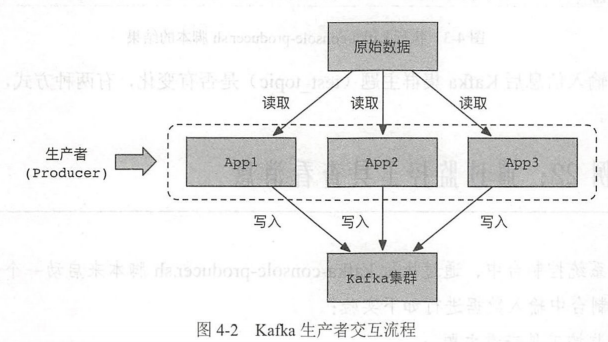

The Kafka producer reads the raw data in real time, applies the business logic in code, and then writes the messages to the Kafka cluster.

Sending messages to a Kafka topic

KafkaTemplate sends messages asynchronously, and a send callback can be registered.

To send synchronously, simply call get() on the future returned by send().
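The difference between the two modes can be sketched with plain JDK futures. Note that this does not use spring-kafka: the `send` method below is a hypothetical stand-in for `KafkaTemplate.send()` that simply completes on another thread, and the class name `SendModesDemo` is made up. With the real template the synchronous form would look like `kafkaTemplate.send("log_topic", logInfo).get(10, TimeUnit.SECONDS)`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class SendModesDemo {

    /** Hypothetical stand-in for KafkaTemplate.send(): completes on another thread. */
    static CompletableFuture<String> send(String topic, String payload) {
        return CompletableFuture.supplyAsync(() -> topic + ":" + payload);
    }

    /** Asynchronous style: register a callback and return without waiting. */
    static CompletableFuture<String> sendAsync(String topic, String payload) {
        return send(topic, payload).whenComplete((ack, error) -> {
            if (error != null) {
                System.out.println("send failed: " + error.getMessage());
            } else {
                System.out.println("send acknowledged: " + ack);
            }
        });
    }

    /** Synchronous style: block until the send is acknowledged or the timeout expires. */
    static String sendSync(String topic, String payload) {
        try {
            return send(topic, payload).get(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for send", e);
        } catch (ExecutionException | TimeoutException e) {
            throw new IllegalStateException("send failed", e);
        }
    }

    public static void main(String[] args) {
        // join() is only here so the demo does not exit before the callback runs.
        sendAsync("log_topic", "async message").join();
        System.out.println("sync result: " + sendSync("log_topic", "sync message"));
    }
}
```

Passing a timeout to get() is a deliberate choice: a plain get() would block the request thread indefinitely if the brokers are unreachable.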

Sending a custom message body with Kafka: https://blog.csdn.net/chen20111/article/details/79320557
