Kubernetes 1.26.0 集群部署Prometheus监控

前言

该存储库收集 Kubernetes 清单、Grafana仪表板和Prometheus 规则,结合文档和脚本,使用Prometheus Operator提供易于操作的端到端 Kubernetes 集群监控。

这个项目的内容是用jsonnet写的。

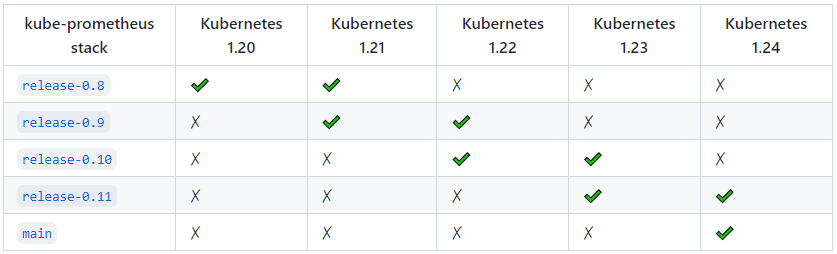

兼容性

支持以下 Kubernetes 版本并在我们在各自分支中针对这些版本进行测试时正常工作。但请注意,其他版本可能有效!

这个项目的内容是用jsonnet写的。这个项目既可以被描述为一个包,也可以被描述为一个库。

此包中包含的组件:

- The Prometheus Operator

- Highly available Prometheus

- Highly available Alertmanager

- Prometheus node-exporter

- Prometheus Adapter for Kubernetes Metrics APIs

- kube-state-metrics

- Grafana

这个堆栈用于集群监控,因此它被预先配置为从所有 Kubernetes 组件收集指标。除此之外,它还提供一组默认的仪表板和警报规则。许多有用的仪表板和警报来自kubernetes-mixin 项目,与该项目类似,它提供可组合的 jsonnet 作为库,供用户根据自己的需要进行定制。

先决条件

您将需要一个 Kubernetes 集群,仅此而已!默认情况下,假定 kubelet 使用令牌身份验证和授权,否则 Prometheus 需要一个客户端证书,这使它可以完全访问 kubelet,而不仅仅是指标。令牌认证和授权允许更细粒度和更容易的访问控制。

这意味着 kubelet 配置必须包含这些标志:

--authentication-token-webhook=true此标志启用ServiceAccount令牌可用于对 kubelet 进行身份验证。这也可以通过将 kubelet 配置值设置为 来authentication.webhook.enabled启用true。--authorization-mode=Webhook此标志使 kubelet 将使用 API 执行 RBAC 请求,以确定是否允许请求实体(在本例中为 Prometheus)访问资源,特别是该项目的/metrics端点。这也可以通过将 kubelet 配置值设置为 来authorization.mode启用Webhook。

该堆栈通过部署Prometheus Adapter提供资源指标。此适配器是一个扩展 API 服务器,Kubernetes 需要启用此功能,否则适配器没有效果,但仍会部署。

一、首先部署k8s集群

参考地址:https://www.cnblogs.com/yangzp/p/16911078.html

[root@master ~]# kubectl get node NAME STATUS ROLES AGE VERSION master Ready control-plane 21h v1.26.0 node1 Ready <none> 21h v1.26.0 node2 Ready <none> 21h v1.26.0

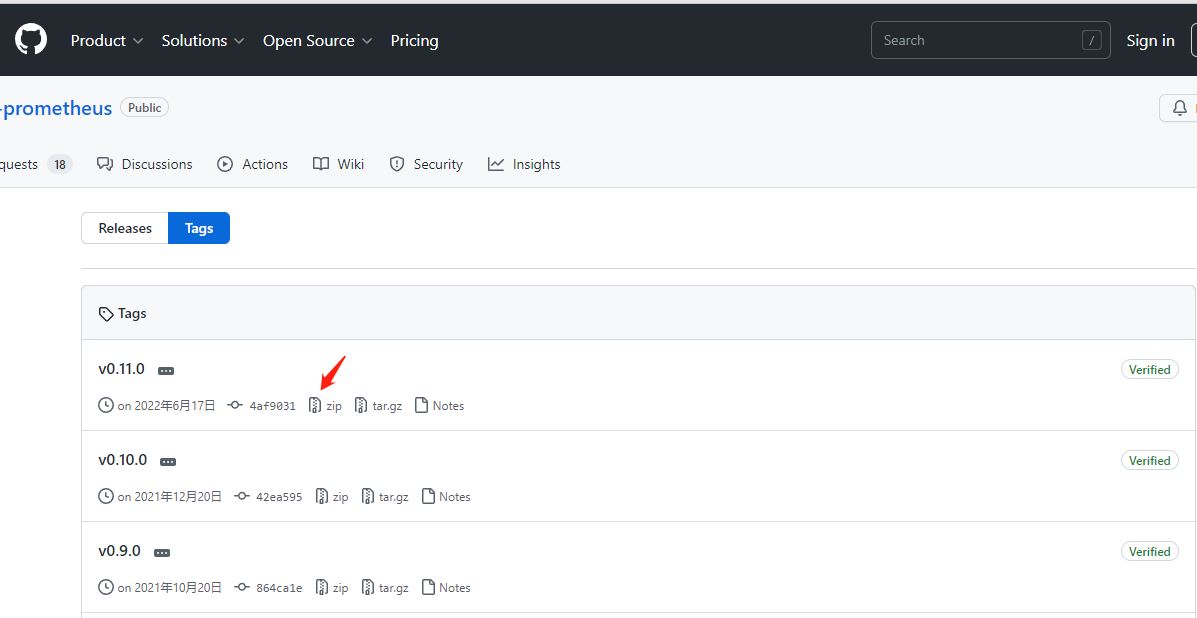

二、下载kube-Prometheus文件

官网地址:https://github.com/prometheus-operator/kube-prometheus/tags

下载:

wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.11.0.zip

解压:

unzip v0.11.0.zip

三、安装

kubectl create -f manifests/setup

待定前面的容器启动后执行

kubectl create -f manifests/

注:等待所有容器启动成功后,即可访问!

安装过程中可能会有个别镜像下载失败的情况,可以使用以下方法单独下载:

1、查看pod状态

kubectl describe pod {podname} -n monitoring(名称空间)

2、在dockerhub上面搜索需要下载的镜像到指定服务器,网址:https://hub.docker.com/

3、修改镜像tag

格式:docker image tag 源镜像:tag 目标镜像:tag

次此出现:

docker pull v5cn/prometheus-adapter:v0.9.1 docker image tag v5cn/prometheus-adapter:v0.9.1 k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1 docker pull landv1001/kube-state-metrics:v2.5.0 docker image tag landv1001/kube-state-metrics:v2.5.0 k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0

四、访问

端口转发grafana: nohup kubectl --address 0.0.0.0 --namespace monitoring port-forward svc/grafana 3000 > nohupcmd.out 2>&1 &

查看所有pod状态:

[root@master ~]# kubectl get pod -A -owide NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES calico-apiserver calico-apiserver-5c555fdcd5-9vh74 1/1 Running 1 (98s ago) 22h 10.244.219.68 master <none> <none> calico-apiserver calico-apiserver-5c555fdcd5-n8tsb 1/1 Running 12 (5m41s ago) 100m 10.244.166.148 node1 <none> <none> calico-system calico-kube-controllers-8cd47c9d7-n2vb4 1/1 Running 1 (98s ago) 107m 10.244.219.70 master <none> <none> calico-system calico-node-8xm5f 1/1 Running 31 (5m38s ago) 22h 192.168.1.156 node1 <none> <none> calico-system calico-node-hcqvd 1/1 Running 1 (98s ago) 22h 192.168.1.155 master <none> <none> calico-system calico-node-n2qbs 1/1 Running 17 (97m ago) 22h 192.168.1.157 node2 <none> <none> calico-system calico-typha-6b47dbb5c8-6b86d 1/1 Running 15 (97m ago) 22h 192.168.1.157 node2 <none> <none> calico-system calico-typha-6b47dbb5c8-cwwx6 1/1 Running 27 (5m39s ago) 22h 192.168.1.156 node1 <none> <none> kube-system coredns-5bbd96d687-rg486 1/1 Running 1 (98s ago) 107m 10.244.219.69 master <none> <none> kube-system coredns-5bbd96d687-tf9dm 1/1 Running 3 (17m ago) 100m 10.244.166.147 node1 <none> <none> kube-system etcd-master 1/1 Running 1 (98s ago) 22h 192.168.1.155 master <none> <none> kube-system kube-apiserver-master 1/1 Running 1 (98s ago) 22h 192.168.1.155 master <none> <none> kube-system kube-controller-manager-master 1/1 Running 1 (98s ago) 22h 192.168.1.155 master <none> <none> kube-system kube-proxy-8qqg5 1/1 Running 0 22h 192.168.1.156 node1 <none> <none> kube-system kube-proxy-9qpck 1/1 Running 0 22h 192.168.1.157 node2 <none> <none> kube-system kube-proxy-zjpwx 1/1 Running 1 (98s ago) 22h 192.168.1.155 master <none> <none> kube-system kube-scheduler-master 1/1 Running 1 (98s ago) 22h 192.168.1.155 master <none> <none> monitoring alertmanager-main-0 2/2 Running 0 97m 10.244.104.24 node2 <none> <none> monitoring alertmanager-main-1 2/2 Running 1 (43m ago) 102m 10.244.166.141 node1 <none> <none> monitoring alertmanager-main-2 2/2 Running 0 97m 10.244.104.25 node2 <none> <none> monitoring blackbox-exporter-78b4bfdf67-kssjd 3/3 Running 0 100m 10.244.166.145 node1 <none> <none> monitoring grafana-86c9f7b457-g9c4n 1/1 Running 0 100m 10.244.166.143 node1 <none> <none> monitoring kube-state-metrics-f4d87bdfb-gx6ms 3/3 Running 0 100m 10.244.166.146 node1 <none> <none> monitoring node-exporter-8fgqt 2/2 Running 0 4h30m 192.168.1.157 node2 <none> <none> monitoring node-exporter-kcx59 2/2 Running 0 4h30m 192.168.1.156 node1 <none> <none> monitoring node-exporter-nqnhn 2/2 Running 2 (98s ago) 4h30m 192.168.1.155 master <none> <none> monitoring prometheus-adapter-8694794d86-8bfsk 1/1 Running 13 (5m38s ago) 107m 10.244.166.140 node1 <none> <none> monitoring prometheus-adapter-8694794d86-x4grw 1/1 Running 0 100m 10.244.104.26 node2 <none> <none> monitoring prometheus-k8s-0 2/2 Running 9 (5m42s ago) 102m 10.244.166.142 node1 <none> <none> monitoring prometheus-k8s-1 2/2 Running 0 96m 10.244.104.27 node2 <none> <none> monitoring prometheus-operator-867dbbcfd9-mkmdp 2/2 Running 0 100m 10.244.166.144 node1 <none> <none> tigera-operator tigera-operator-7795f5d79b-nrrjg 1/1 Running 28 (109s ago) 22h 192.168.1.156 node1 <none> <none>

浏览器访问:

http://master主机IP:3000

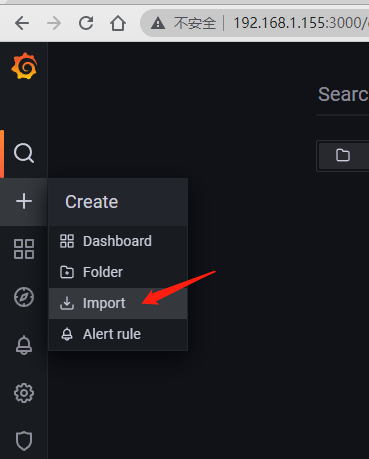

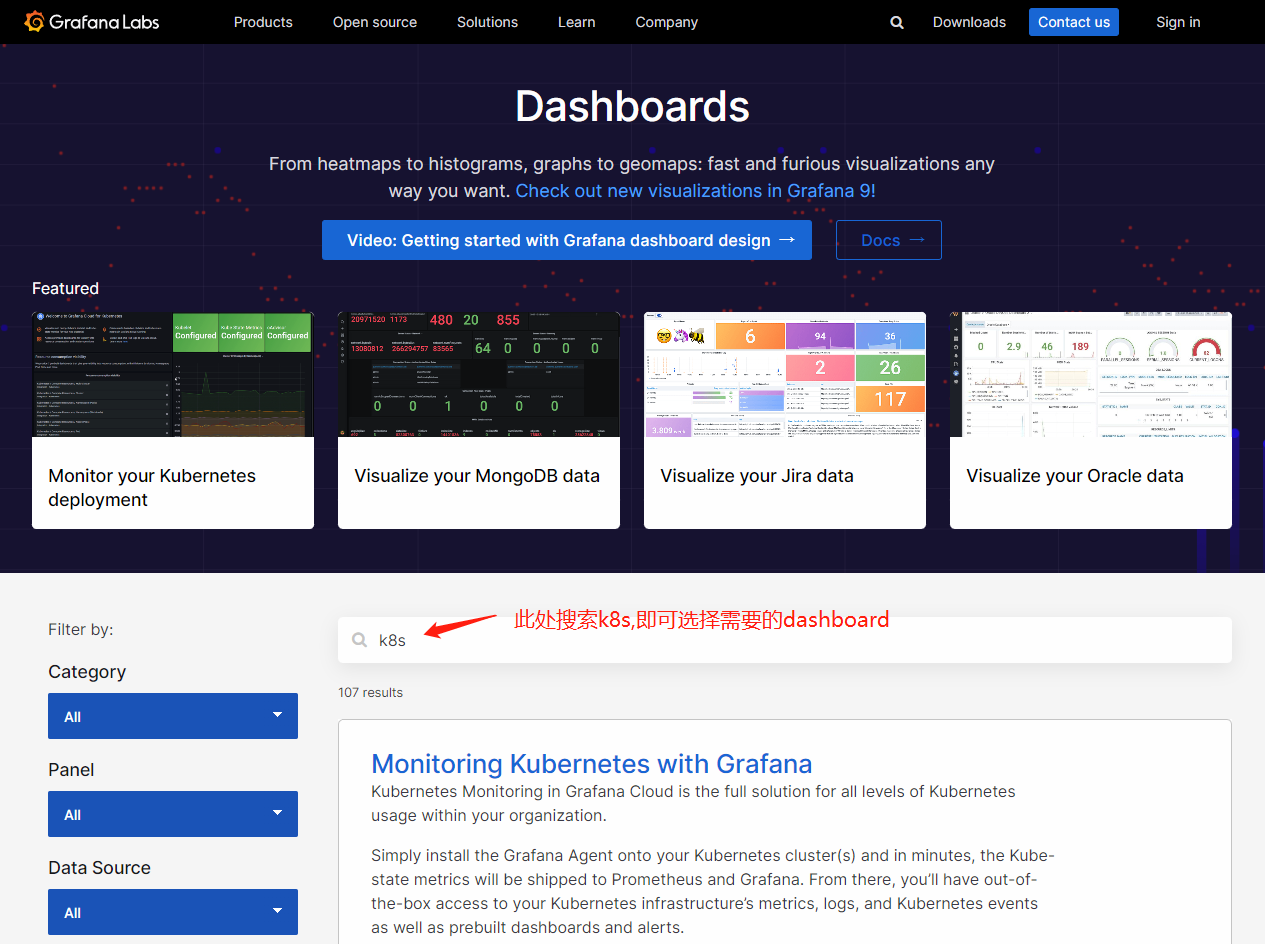

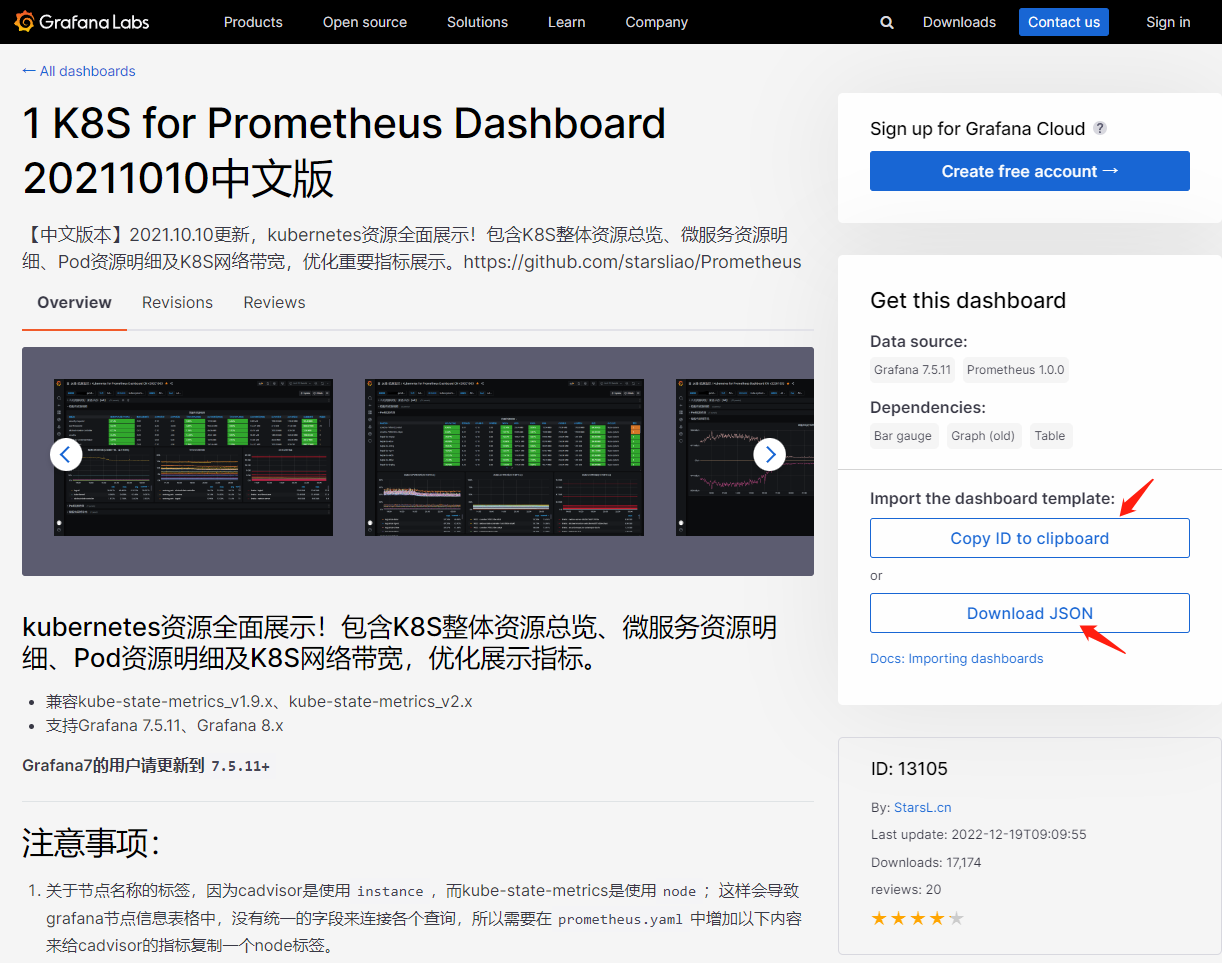

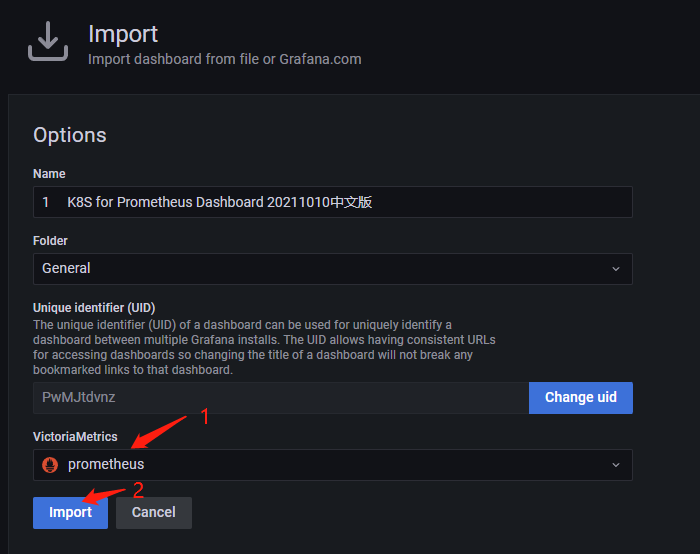

4.1 导入Prometheus Dashboard

grafana访问地址:https://grafana.com/grafana/dashboards/

五、卸载

kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup

浙公网安备 33010602011771号

浙公网安备 33010602011771号