Kafka Basic Operations

1. KafkaServer Management

1. Startup script analysis

/usr/local/kafka/bin/kafka-server-start.sh

#!/bin/bash

if [ $# -lt 1 ];

then

echo "USAGE: $0 [-daemon] server.properties [--override property=value]*"

exit 1

fi

# The check above prints the usage message and exits if fewer than one argument is passed

base_dir=$(dirname $0) # base_dir is the directory that contains this script

if [ "x$KAFKA_LOG4J_OPTS" = "x" ]; then

export KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:$base_dir/../config/log4j.properties"

fi

# If KAFKA_LOG4J_OPTS is empty, point -Dlog4j.configuration at the default file, using the fixed form file:{path to file}

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

fi

# If KAFKA_HEAP_OPTS is empty, set both the initial and the maximum heap size to 1 GB

EXTRA_ARGS=${EXTRA_ARGS-'-name kafkaServer -loggc'}

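# If EXTRA_ARGS is unset, it defaults to '-name kafkaServer -loggc'. This is similar to ${EXTRA_ARGS:-'-name kafkaServer -loggc'}, except that the ':-' form also applies the default when the variable is set but empty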

COMMAND=$1

case $COMMAND in

-daemon)

EXTRA_ARGS="-daemon "$EXTRA_ARGS

shift

;;

*)

;;

esac

exec $base_dir/kafka-run-class.sh $EXTRA_ARGS kafka.Kafka "$@"

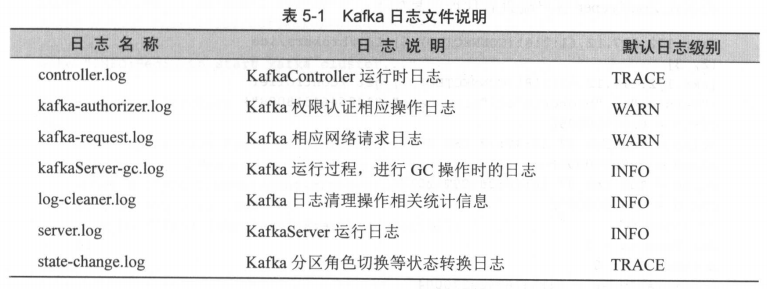
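The difference between `${VAR-default}` and `${VAR:-default}`, and the way the script strips a leading `-daemon`, can be tried in isolation. The following is a minimal sketch; `build_args` is a hypothetical helper introduced here for illustration, and it only echoes the argument list that the real script would pass to kafka-run-class.sh.

```shell
#!/bin/bash
# build_args is a hypothetical helper, not part of Kafka. It only echoes the
# arguments that kafka-server-start.sh would hand to kafka-run-class.sh.
build_args() {
    local extra
    # "-" substitutes the default only when EXTRA_ARGS is unset.
    extra=${EXTRA_ARGS-'-name kafkaServer -loggc'}
    if [ "$1" = "-daemon" ]; then
        extra="-daemon $extra"
        shift   # drop -daemon so that $* starts at server.properties
    fi
    echo "$extra kafka.Kafka $*"
}

unset EXTRA_ARGS
build_args -daemon config/server.properties
# prints: -daemon -name kafkaServer -loggc kafka.Kafka config/server.properties

EXTRA_ARGS=""
build_args config/server.properties
# EXTRA_ARGS is set but empty, so "-" keeps the empty value

echo "${EXTRA_ARGS:-fallback}"
# prints: fallback (":-" also replaces an empty value)
```

In practice this means that exporting an empty EXTRA_ARGS before calling the startup script suppresses the default `-name kafkaServer -loggc` arguments.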

2. Log files

After startup, the broker writes its log files to the $KAFKA_HOME/logs directory.

3. Starting the Kafka cluster

#!/bin/bash

brokers="server-1 server-2 server-3"

KAFKA_HOME="/usr/local/kafka"

echo "INFO: Begin to start kafka cluster ... "

for broker in $brokers

do

echo "INFO:Start kafka on ${broker}"

ssh $broker -C "source /etc/profile ; sh ${KAFKA_HOME}/bin/kafka-server-start.sh -daemon ${KAFKA_HOME}/config/server.properties "

if [ $? -eq 0 ]; then

echo "INFO:[${broker}] Start successfully"

fi

done

echo "INFO:Kafka cluster starts successfully !"

4. Shutting down the Kafka cluster

#!/bin/bash

brokers="server-1 server-2 server-3"

KAFKA_HOME="/usr/local/kafka"

echo "INFO: Begin to shutdown kafka cluster ... "

for broker in $brokers

do

echo "INFO:Shutdown kafka on ${broker}"

ssh $broker -C "sh ${KAFKA_HOME}/bin/kafka-server-stop.sh"

if [ $? -eq 0 ]; then

echo "INFO:[${broker}] shutdown successfully"

fi

done

echo "INFO:Kafka cluster shutdown successfully !"
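Both cluster scripts print the final success message even when an ssh call fails on one of the brokers. A minimal sketch of a loop that counts failures instead is given below. `run_on_cluster` and `REMOTE_RUNNER` are hypothetical names introduced for illustration; the runner defaults to ssh and can be swapped for a dry run.

```shell
#!/bin/bash
# Sketch: run one command on every broker and count the failures.
# REMOTE_RUNNER is a hypothetical hook (defaults to ssh) that receives
# the broker name and the command string.
REMOTE_RUNNER=${REMOTE_RUNNER:-ssh}

run_on_cluster() {
    local cmd=$1
    shift
    local failed=0 broker
    for broker in "$@"; do
        if "$REMOTE_RUNNER" "$broker" "$cmd"; then
            echo "INFO:[${broker}] OK"
        else
            echo "ERROR:[${broker}] failed"
            failed=$((failed + 1))
        fi
    done
    # The exit status is the number of brokers on which the command failed.
    return "$failed"
}

# Example call (not executed here):
# run_on_cluster "sh /usr/local/kafka/bin/kafka-server-stop.sh" server-1 server-2 server-3
```

A caller can then print the cluster-wide success message only when `run_on_cluster` returns 0.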

2. Topic Management

Kafka provides the kafka-topics.sh tool script for topic-related operations.

The script contains a single line of code: exec $(dirname $0)/kafka-run-class.sh kafka.admin.TopicCommand "$@"

1. Creating a topic

1. Commands

kafka-topics.sh --create --zookeeper 172.16.1.228:2181,172.16.1.229:2181,172.16.1.230:2181 --replication-factor 2 --partitions 3 --topic kafka-action

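kafka-topics.sh --create --zookeeper 172.16.1.228:2181,172.16.1.229:2181,172.16.1.230:2181/kafka --replication-factor 2 --partitions 3 --topic kafka-action

The second form uses the ZooKeeper chroot path /kafka. To use it, zookeeper.connect in server.properties must be changed to include the same path.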
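In a ZooKeeper connect string the chroot path is written once, after the last host:port pair, not after every host. The sketch below assembles such a string; `zk_connect` is a hypothetical helper introduced here for illustration.

```shell
#!/bin/bash
# zk_connect is a hypothetical helper: it joins hosts into a ZooKeeper connect
# string, appending the port to each host and an optional chroot at the end.
# Usage: zk_connect PORT CHROOT HOST...
zk_connect() {
    local port=$1 chroot=$2 result="" host
    shift 2
    for host in "$@"; do
        # Add a comma only when result already holds at least one host.
        result="${result:+$result,}${host}:${port}"
    done
    echo "${result}${chroot}"
}

zk_connect 2181 /kafka 172.16.1.228 172.16.1.229 172.16.1.230
# prints: 172.16.1.228:2181,172.16.1.229:2181,172.16.1.230:2181/kafka
```

Passing an empty string as the chroot yields the plain form used in the first command.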

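2. Parameters

--zookeeper: the ZooKeeper connection string; required.

--partitions: sets the number of partitions of the topic; required.

--replication-factor: sets the number of replicas of each partition; also required. The replication factor cannot exceed the number of available brokers.

--config: sets topic-level configuration overrides.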
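Kafka rejects a replication factor that is larger than the number of available brokers, so a wrapper script can check this before calling the tool. The following is a minimal sketch; `build_create_cmd` is a hypothetical helper, and the broker count is passed in by the caller rather than discovered from the cluster.

```shell
#!/bin/bash
# build_create_cmd is a hypothetical wrapper: it refuses a replication factor
# larger than the broker count and otherwise prints the create command.
# Usage: build_create_cmd ZK TOPIC PARTITIONS REPLICAS BROKER_COUNT
build_create_cmd() {
    local zk=$1 topic=$2 partitions=$3 replicas=$4 brokers=$5
    if [ "$replicas" -gt "$brokers" ]; then
        echo "ERROR: replication factor $replicas exceeds broker count $brokers" >&2
        return 1
    fi
    echo "kafka-topics.sh --create --zookeeper $zk --replication-factor $replicas --partitions $partitions --topic $topic"
}

# Three partitions, two replicas each, on a three-broker cluster.
build_create_cmd 172.16.1.228:2181 kafka-action 3 2 3
```

The function only prints the command, which makes it easy to review before running it against a real cluster.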

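3. Where the data is stored

1. After the topic is created, the corresponding partition directories are created under ${log.dirs}

${log.dirs} is configured in /usr/local/kafka/config/server.properties

2. The metadata can be viewed in ZooKeeper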

[zk: localhost:2181(CONNECTED) 0] ls /brokers/topics/kafka-action/partitions

[0, 1, 2]

[zk: localhost:2181(CONNECTED) 1] get /brokers/topics/kafka-action

{"version":1,"partitions":{"2":[0,1],"1":[2,0],"0":[1,2]}}

cZxid = 0xa500003533

ctime = Mon Jul 04 15:14:27 CST 2022

mZxid = 0xa500003533

mtime = Mon Jul 04 15:14:27 CST 2022

pZxid = 0xa500003535

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 58

numChildren = 1
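The "partitions" field in the output above maps each partition id to the ids of the brokers that hold a replica. Since partition directories are named topic-partition, the assignment tells which directories to expect on each broker. The sketch below hard-codes the assignment shown above; `partition_dirs_on_broker` is a hypothetical helper introduced for illustration.

```shell
#!/bin/bash
# Replica assignment copied from the ZooKeeper output above, written as
# partition:broker,broker entries.
assignment="0:1,2 1:2,0 2:0,1"

# Print the partition directories expected under ${log.dirs} on one broker.
partition_dirs_on_broker() {
    local topic=$1 broker=$2 entry partition replicas r
    for entry in $assignment; do
        partition=${entry%%:*}     # text before the colon
        replicas=${entry#*:}       # text after the colon
        for r in $(echo "$replicas" | tr ',' ' '); do
            if [ "$r" = "$broker" ]; then
                echo "${topic}-${partition}"
            fi
        done
    done
}

partition_dirs_on_broker kafka-action 0
# prints:
# kafka-action-1
# kafka-action-2
```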

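2. Deleting a topic

1. Two approaches

1. Manual deletion

First, delete the topic's partition directories under ${log.dirs} on every node

rm -rf /tmp/kafka-logs/kafka-action*

Then delete the topic's metadata nodes in ZooKeeper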

rmr /config/topics/kafka-action

rmr /brokers/topics/kafka-action

2. Deleting with the kafka-topics.sh script

kafka-topics.sh --delete --zookeeper 172.16.1.228:2181,172.16.1.229:2181,172.16.1.230:2181 --topic kafka-action

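If delete.topic.enable=true is set in server.properties, the topic is deleted directly. If it is false, the command only creates a node with the same name as the topic under /admin/delete_topics in ZooKeeper; to remove the topic completely, the topic directories under ${log.dirs} must be deleted manually.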
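Before running the delete command it can help to check how delete.topic.enable is set on a broker. The following is a minimal sketch; `get_property` is a hypothetical helper that reads a key from a Java-style properties file and is not part of Kafka.

```shell
#!/bin/bash
# get_property is a hypothetical helper: it prints the value of a key from a
# properties file, or "unset" if the key is absent or commented out.
get_property() {
    local file=$1 key=$2 value
    value=$(awk -F= -v k="$key" '
        { name = $1; gsub(/^[ \t]+|[ \t]+$/, "", name) }
        name == k {
            sub(/^[^=]*=/, "")                    # keep everything after the first "="
            v = $0
            gsub(/^[ \t]+|[ \t]+$/, "", v)        # trim surrounding blanks
        }
        END { print v }' "$file")
    echo "${value:-unset}"
}

# Example call (not executed here):
# get_property /usr/local/kafka/config/server.properties delete.topic.enable
```

If the key appears more than once, the last occurrence wins. A result of "unset" means the broker falls back to its built-in default, which depends on the Kafka version.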

3. Viewing topics

1. Listing all topics

kafka-topics.sh --list --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230

2. Viewing information about a specific topic

kafka-topics.sh --describe --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --topic kafkaTopicTest

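With the --topic parameter, only the specified topic is shown.

Without the --topic parameter, information about all topics is shown.

3. Viewing partitions whose replicas are still synchronizing (under-replicated partitions)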

kafka-topics.sh --describe --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --under-replicated-partitions

4. Viewing partitions without a leader

kafka-topics.sh --describe --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --unavailable-partitions

5. Viewing topics with overridden configuration

kafka-topics.sh --describe --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --topics-with-overrides

4. Modifying a topic

1. Modifying topic configuration

1. Changing the topic's max.message.bytes

kafka-topics.sh --alter --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --topic yangjianbo --config max.message.bytes=204800

2. Changing the topic's segment.bytes

kafka-topics.sh --alter --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --topic yangjianbo --config segment.bytes=204800

3. Removing the topic's segment.bytes override

kafka-topics.sh --alter --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --topic yangjianbo --delete-config segment.bytes

2. Adding partitions

kafka-topics.sh --alter --zookeeper 172.16.1.228,172.16.1.229,172.16.1.230 --topic yangjianbo --partitions 5

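3. Kafka does not support reducing the number of partitions

3. Producer Operations

Kafka ships with the kafka-console-producer.sh script, which runs a Kafka producer from the terminal to send messages to Kafka.

The script requires two parameters: broker-list and topic.

1. Starting the producer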

kafka-console-producer.sh --broker-list 172.16.1.228:9092,172.16.1.229:9092,172.16.1.230:9092 --topic yangjianbo --property parse.key=true

With parse.key=true, the key and the value are separated by a tab by default. To change the separator, use the following command:

kafka-console-producer.sh --broker-list 172.16.1.228:9092,172.16.1.229:9092,172.16.1.230:9092 --topic yangjianbo --property parse.key=true --property key.separator=' '

Enter a batch of messages in the console.
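With parse.key=true, each line typed into the console is split into a key and a value at the separator. The sketch below illustrates that split for a single line. `split_record` is a hypothetical function written for illustration, not Kafka code, and it assumes that the split happens at the first occurrence of the separator and that a line without a separator is an error.

```shell
#!/bin/bash
# split_record is a hypothetical illustration, not Kafka code: it splits one
# input line into key and value at the first occurrence of the separator.
split_record() {
    local line=$1 sep=$2 key value
    case $line in
        *"$sep"*)
            key=${line%%"$sep"*}     # text before the first separator
            value=${line#*"$sep"}    # text after the first separator
            echo "key=$key value=$value"
            ;;
        *)
            echo "ERROR: no key separator found in: $line" >&2
            return 1
            ;;
    esac
}

split_record "user1 hello world" " "
# prints: key=user1 value=hello world
```

Because only the first separator is used, a space separator still allows spaces inside the value, but not inside the key.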

Verify that the messages were sent successfully:

kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 172.16.1.228:9092,172.16.1.229:9092,172.16.1.230:9092 --topic yangjianbo --time -1
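With --time -1, GetOffsetShell prints the latest offset of every partition, one topic:partition:offset line per partition. Assuming no records have been removed from the start of the logs, the sum of these offsets is the number of messages written to the topic. A minimal sketch of that sum follows; `sum_latest_offsets` is a hypothetical helper, and the sample offsets are made up rather than taken from a real cluster.

```shell
#!/bin/bash
# Sums the third field of GetOffsetShell-style lines (topic:partition:offset)
# read from standard input.
sum_latest_offsets() {
    awk -F: '{ total += $3 } END { print total + 0 }'
}

# The offsets below are made-up sample values, not output from a real cluster.
printf '%s\n' "yangjianbo:0:4" "yangjianbo:1:3" "yangjianbo:2:5" | sum_latest_offsets
# prints: 12
```

On a live cluster the output of the GetOffsetShell command above can be piped into `sum_latest_offsets` directly.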

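2. Creating topics

If the target topic does not exist when the producer sends a message, it is created automatically, provided that auto.create.topics.enable=true is set. This is a broker-side setting and belongs in the configuration file /usr/local/kafka/config/server.properties.

3. Viewing messages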

kafka-run-class.sh kafka.tools.DumpLogSegments --files /tmp/kafka-logs/yangjianbo-1/00000000000000000000.log

--files: required.

4. Producer performance testing tool

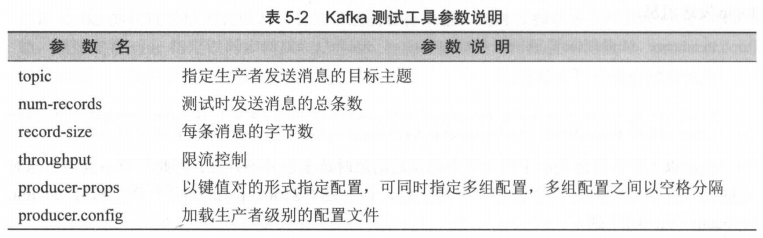

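Use the kafka-producer-perf-test.sh script.

1. Parameters of the Kafka test tool

Note: when throughput is less than 0, no throttling is applied. When it is greater than 0, the producer thread is blocked for a while whenever the number of messages sent so far divided by the elapsed time (rounded) exceeds this value.

When it is equal to 0, the producer is blocked indefinitely as soon as the blocking condition is detected after a message has been sent.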
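The throttling rule can be written down as a small decision function. The sketch below is a hypothetical restatement of the rule described above using integer arithmetic, not the tool's source code; `should_throttle` and its argument order are assumptions made for illustration.

```shell
#!/bin/bash
# should_throttle is a hypothetical sketch of the rule described above.
# Arguments: target throughput, amount sent so far, elapsed seconds.
# Returns 0 (true) when the producer should pause, 1 otherwise.
should_throttle() {
    local target=$1 sent=$2 elapsed=$3
    if [ "$target" -lt 0 ]; then
        return 1    # negative target: throttling is disabled
    fi
    if [ "$elapsed" -le 0 ]; then
        return 1    # no elapsed time yet, so no rate can be computed
    fi
    # Integer division gives the rounded-down rate achieved so far.
    [ $((sent / elapsed)) -gt "$target" ]
}

if should_throttle 1000 5000 2; then
    echo "pause"    # 5000 / 2 = 2500, which is above the target of 1000
fi
```

With a target of 0, any positive rate exceeds the target, which corresponds to the always-blocked case mentioned above.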

2. The performance test command is as follows:

kafka-producer-perf-test.sh --num-records 1000000 --record-size 1000 --topic yangjianbo --throughput 1000000 --producer-props bootstrap.servers=172.16.1.228:9092,172.16.1.229:9092,172.16.1.230:9092 acks=all

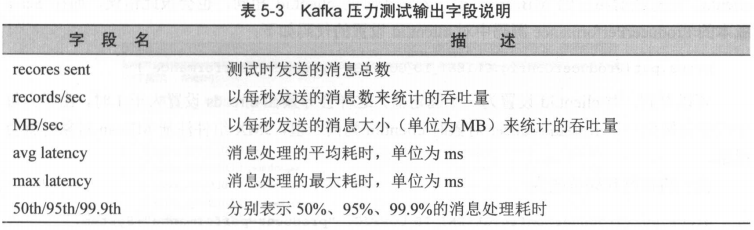

3. Result analysis

91681 records sent, 18332.5 records/sec (17.48 MB/sec), 1104.8 ms avg latency, 1693.0 max latency.

119408 records sent, 23881.6 records/sec (22.78 MB/sec), 1281.0 ms avg latency, 2683.0 max latency.

122368 records sent, 24473.6 records/sec (23.34 MB/sec), 1361.4 ms avg latency, 2747.0 max latency.

120508 records sent, 24101.6 records/sec (22.99 MB/sec), 1317.5 ms avg latency, 2858.0 max latency.

143748 records sent, 28749.6 records/sec (27.42 MB/sec), 1177.4 ms avg latency, 2684.0 max latency.

140704 records sent, 28140.8 records/sec (26.84 MB/sec), 1140.8 ms avg latency, 2672.0 max latency.

89170 records sent, 17834.0 records/sec (17.01 MB/sec), 1062.2 ms avg latency, 5298.0 max latency.

118607 records sent, 23721.4 records/sec (22.62 MB/sec), 1726.1 ms avg latency, 5815.0 max latency.

1000000 records sent, 23029.270202 records/sec (21.96 MB/sec), 1341.48 ms avg latency, 5815.00 ms max latency, 1218 ms 50th, 3874 ms 95th, 5671 ms 99th, 5802 ms 99.9th.
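The MB/sec figure in each line can be recomputed from the records/sec figure and the record size (1000 bytes in the command above), assuming 1 MB = 1024 × 1024 bytes. The sketch below does this with awk; `records_to_mb_per_sec` is a hypothetical helper introduced for illustration.

```shell
#!/bin/bash
# Convert a records/sec figure to MB/sec for a given record size in bytes,
# taking 1 MB as 1024 * 1024 bytes.
records_to_mb_per_sec() {
    awk -v rps="$1" -v size="$2" 'BEGIN { printf "%.2f\n", rps * size / (1024 * 1024) }'
}

records_to_mb_per_sec 23029.270202 1000
# prints: 21.96

records_to_mb_per_sec 18332.5 1000
# prints: 17.48
```

Both results match the summary line and the first progress line of the output, which confirms that the reported bandwidth is simply the record rate multiplied by the record size.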

4. Consumer Operations

