hive常用操作命令

创建表

创建内部表

create table article

( 列名 类型

) row format delimited fields terminated by '\n'; //列分隔符

创建外部表并从hdfs上载入数据

create external table rating_table_e ( userId STRING, movieId STRING, rating STRING, timestamp STRING ) row format delimited fields terminated by ',' stored as textfile location '/user/root/rating_table'; //外部表不需要导入数据但是需要指定文件路径

创建分区表

create external table rating_table_p ( userId STRING, movieId STRING, rating STRING ) partitioned by (dt STRING) row format delimited fields terminated by ',' lines terminated by '\n';

查看分区数: show partitions rating_table_p;

创建分桶表

create external table rating_table_b

(

userId INT,

movieId STRING,

rating STRING

)

clustered by (userId) into 32 buckets;

开启分桶功能:

set hive.enforce.bucketing = true;

灌数据:

insert overwrite table rating_table_b

select userid, movieid, rating from rating_table_e;

数据抽样:

select * from rating_table_b tablesample(bucket x out of y on id);

查看表的详细信息

desc formatted table_name;

//建表时在最后一行加上该语句可是使该表在载入数据时跳过第一行数据 tblproperties("skip.header.line.count"="1");

数据类型转换

cast(列名 as 新类型)

表中数据的导入和导出

导出

//本地 insert overwrite local directory '/home/badou/hive_test_3/data' select userid, title, rating from behavior_table; //hdfs insert overwrite directory '/behavior_table' select userid, title, rating from behavior_table;

overwrite:覆盖数据

导入

//导入本地数据到内部表中 load data local inpath '/home/badou/Documents/code/mr/The_man_of_property.txt' into table article;

//导入本地数据到分区表中

load data local inpath '/home/badou/hive_test/ml-latest-small/2009-12.data' overwrite into table rating_table_p partition(dt='2009-12');

join on

create table behavior_table as select r.userid, m.movieid, m.title, r.rating from movie_table_e m join rating_table_e r on m.movieid = r.movieid limit 10;

group by

select pageid, age, count(1) from pv_users group by pageid, age;

group by 和 partition by 区别

select user_id,count(order_id) as ord_cnt from orders group by user_id limit 20;

select user_id, count(order_id) over(partition by user_id) --【只对count结果聚合】 from orders limit 20;

笛卡尔积

select t1.user_id as u1,t2.user_id as u2 from (select user_id from dc)t1 join (select user_id from dc)t2 //join过程没有on条件

case when

//如果列order_dow的值是'0'则新建列dow_0的值是1,否则新建列dow_0的值是0 case order_dow when '0' then 1 else 0 end as dow_0

if

//如果条件值为真则为第一值,否则为第二个值 if(days_since_prior_order='','0.0',days_since_prior_order) as dt

相关设置

set hive.cli.print.header=true; --打印表头

排序

sort by 在同一个reduce中进行排序

order by 全局排序,一般是只有一个reduce

collect_list和collect_set

它们都是将分组中的某列转为一个数组返回,不同的是collect_list不去重而collect_set去重。

//收集用户所有的订单放到一个数组里面 select user_id,collect_list(order_id) as order_ids from orders group by user_id limit 10;

concat_ws:将两个字段进行字符串拼接

//(分割符,列1,列2) concat_ws('_',user_id,order_id)

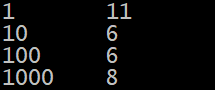

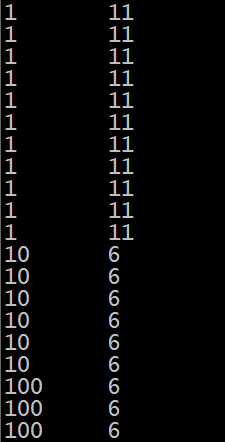

rank(),row_number(),dense_rank()

rank():排序相同时会重复,总数不变

row_number():会根据顺序计算,连续值

dense_rank():排序相同时会重复,总数会减少

select user_id,order_dow,rank() over(partition by user_id order by cast(order_dow as int) desc) as rk from rank_test;

--结果:

user_id order_dow rk

1 4 1

1 4 1

1 4 1

1 4 1

1 3 5

1 3 5

1 2 7

1 2 7

1 1 9

1 1 9

1 1 9

select user_id,order_dow,row_number() over(partition by user_id order by cast(order_dow as int) desc) as rk from rank_test;

user_id order_dow rk

1 4 1

1 4 2

1 4 3

1 4 4

1 3 5

1 3 6

1 2 7

1 2 8

1 1 9

1 1 10

1 1 11

select user_id,order_dow,dense_rank() over(partition by user_id order by cast(order_dow as int) desc) as rk from rank_test;

user_id order_dow rk

1 4 1

1 4 1

1 4 1

1 4 1

1 3 2

1 3 2

1 2 3

1 2 3

1 1 4

1 1 4

1 1 4

时间格式变化

import time import sys file_name = sys.argv[1] with open(file_name, 'r') as fd: for line in fd: ss = line.strip().split(',') if len(ss) == 4: user_id = ss[0].strip() movie_id = ss[1].strip() rating = ss[2].strip() timestamp = ss[3].strip() time_local = time.localtime(int(timestamp)) dt = time.strftime("%Y-%m", time_local) print '\t'.join([user_id, movie_id, rating, dt])

自定义函数(使用transform)

UDF:一进一出

UDAF:多进一出

UDTF:一进多出

实例1:把表中的两列拼成一列

python脚本定义udf函数:

import sys for line in sys.stdin: ss = line.strip().split('\t') if len(ss) >= 2: print '_'.join([ss[0].strip(),ss[1].strip()])

hive命令:

add file /home/badou/hive_test/udf/transform.py; select transform(movieid,title) using "python transform.py" as uuu from movie_table_e limit 10;

实例2:wordcount

创建表:

create external table docs_table (line STRING);

载入数据:

load data local inpath '/home/badou/hive_test_3/data/wc.data' overwrite into table docs;

python定义函数

mapper.py

reducer.py

hive命令

add file /home/badou/hive_test_3/transform_wc/mapper.py; add file /home/badou/hive_test_3/transform_wc/reducer.py; insert overwrite table word_count select transform(wc_map.word, wc_map.count) using 'python reducer.py' from ( select transform(line) using 'python mapper.py' as word, count from docs_table cluster by word ) wc_map;

参考资料

八斗大数据

浙公网安备 33010602011771号

浙公网安备 33010602011771号