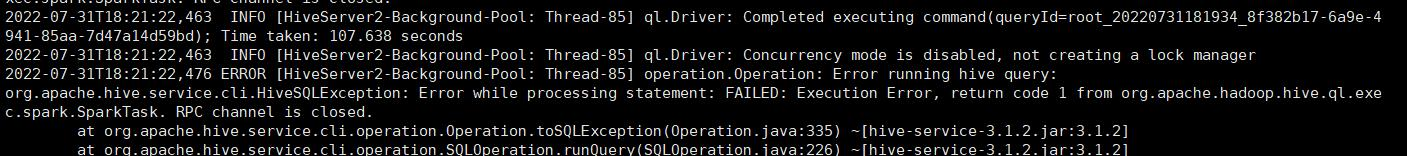

ERROR [HiveServer2-Background-Pool: Thread-85] spark.SparkTask: Failed to execute spark task, with exception 'java.lang.IllegalStateException(RPC channel is closed.)'

A Hive job running on YARN failed.

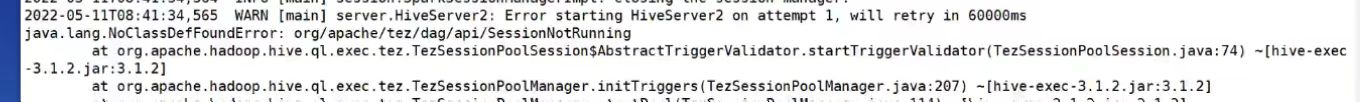

This is Hive's error log.

Insufficient resources caused dynamic resource allocation to fail, so tune with care!!!!

This is the error reported when the execution failed:

ERROR [HiveServer2-Background-Pool: Thread-85] spark.SparkTask: Failed to execute spark task, with exception 'java.lang.IllegalStateException(RPC channel is closed.)'

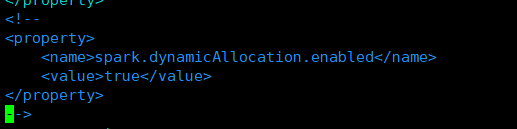

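Cause 1: I had added the following property to hive-site.xml. It enables Spark dynamic resource allocation, which lets Spark adjust the resources it occupies (the number of executors) at runtime: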

<property>
  <name>spark.dynamicAllocation.enabled</name>
  <value>true</value>
</property>
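To confirm whether this property is actually switched on in a given hive-site.xml, the file can be parsed directly. A minimal Python sketch, assuming the file uses the usual Hadoop configuration/property/name/value layout; read_hadoop_conf and dynamic_allocation_enabled are hypothetical helpers, not part of Hive or Hadoop:

```python
import xml.etree.ElementTree as ET

def read_hadoop_conf(xml_text: str) -> dict:
    """Collect name -> value pairs from a Hadoop-style configuration XML string."""
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            # A missing <value> element is treated as an empty string.
            conf[name.strip()] = (prop.findtext("value") or "").strip()
    return conf

def dynamic_allocation_enabled(conf: dict) -> bool:
    """Spark treats spark.dynamicAllocation.enabled as false when it is unset."""
    return conf.get("spark.dynamicAllocation.enabled", "false").lower() == "true"

sample = """<configuration>
  <property>
    <name>spark.dynamicAllocation.enabled</name>
    <value>true</value>
  </property>
</configuration>"""

print(dynamic_allocation_enabled(read_hadoop_conf(sample)))
```

For a real installation, pass the contents of your own hive-site.xml instead of the inline sample.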

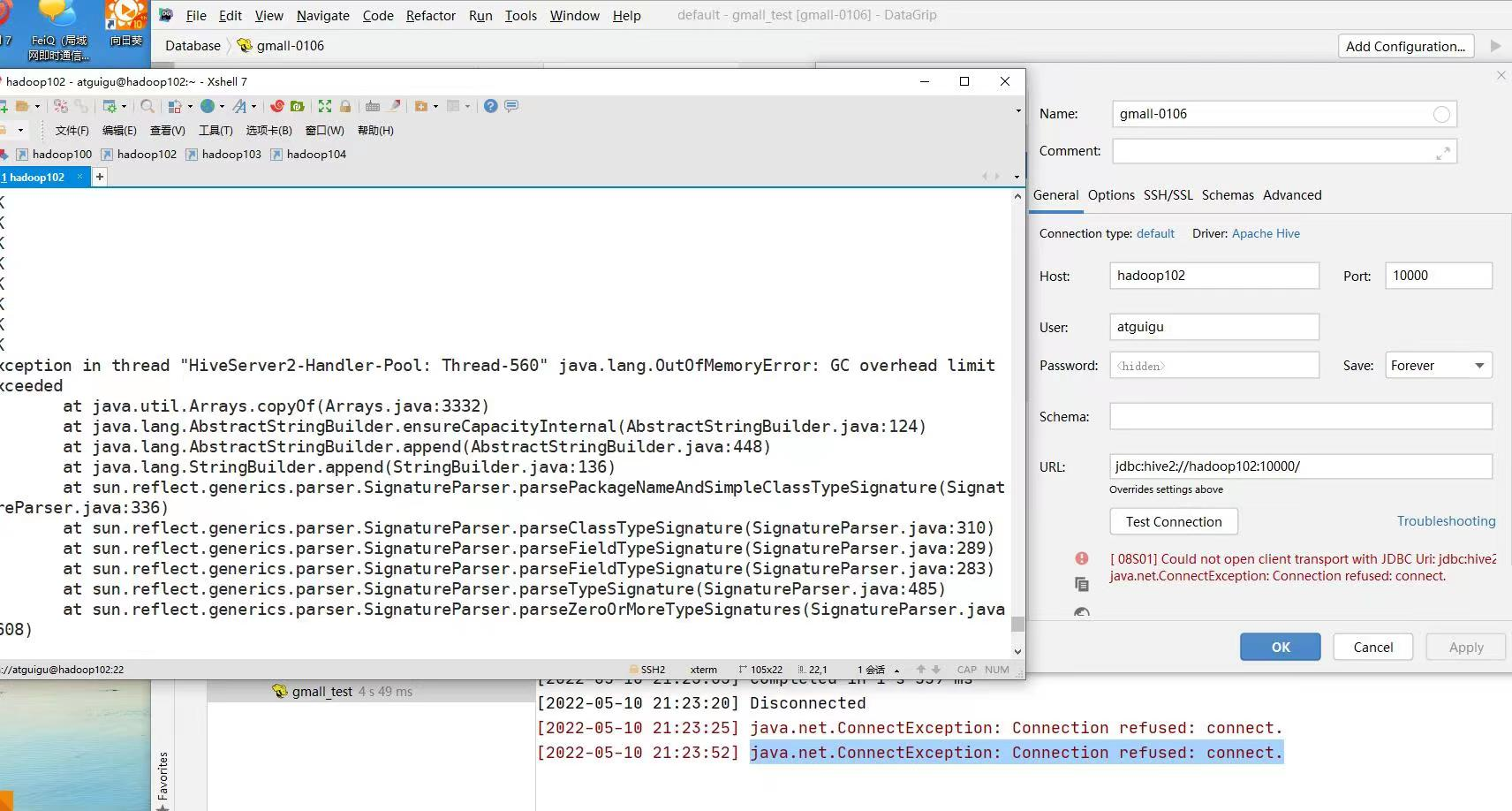

Cause 2: insufficient resources (OOM)

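Check the maximum heap size of the running process with the following command (replace PID with the ID of the process to inspect):

jinfo -flag MaxHeapSize PID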
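On a HotSpot JDK, jinfo prints the flag as -XX:MaxHeapSize=N, with N in bytes. A small Python sketch that converts that output to MB, assuming this output format; parse_max_heap_mb and max_heap_mb are hypothetical helpers, and max_heap_mb needs a JDK's jinfo on the PATH:

```python
import re
import subprocess

def parse_max_heap_mb(jinfo_output: str) -> int:
    """Extract MaxHeapSize (bytes) from jinfo output and convert it to MB."""
    match = re.search(r"-XX:MaxHeapSize=(\d+)", jinfo_output)
    if match is None:
        raise ValueError(f"MaxHeapSize not found in: {jinfo_output!r}")
    return int(match.group(1)) // (1024 * 1024)

def max_heap_mb(pid: int) -> int:
    """Run 'jinfo -flag MaxHeapSize PID' and return the heap limit in MB."""
    out = subprocess.run(
        ["jinfo", "-flag", "MaxHeapSize", str(pid)],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_max_heap_mb(out)

print(parse_max_heap_mb("-XX:MaxHeapSize=268435456"))  # 256
```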

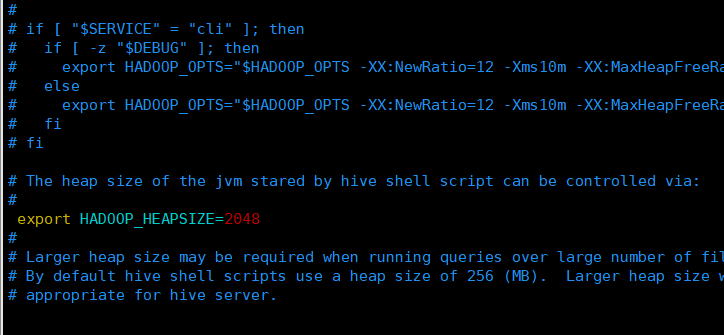

This is the heap memory that Hadoop allocates to HiveServer2. Increasing this parameter also improves HiveServer2's connection time.

Location: /root/module/hive-3.1.2/bin/hive-config.sh

Alternatively, edit hive-env.sh in the conf directory of the Hive installation.
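Assuming the parameter shown in the screenshot is HADOOP_HEAPSIZE (the heap size, in MB, that Hive's launcher scripts pass to the JVM), the edit to hive-env.sh can be scripted. A minimal Python sketch; set_env_export is a hypothetical helper and 2048 is only an example value, not a recommendation:

```python
import re

def set_env_export(script: str, var: str, value: str) -> str:
    """Set 'export VAR=value' in shell-env text.

    The first existing 'export VAR=...' line, even a commented-out one such as
    '# export VAR=...', is replaced; otherwise a new line is appended.
    """
    pattern = re.compile(
        rf"^[ \t]*#?[ \t]*export[ \t]+{re.escape(var)}=.*$", re.MULTILINE
    )
    new_line = f"export {var}={value}"
    if pattern.search(script):
        # A function replacement avoids backslash/group escapes in `value`.
        return pattern.sub(lambda _m: new_line, script, count=1)
    separator = "" if not script or script.endswith("\n") else "\n"
    return f"{script}{separator}{new_line}\n"

env_text = "# export HADOOP_HEAPSIZE=1024\n"
print(set_env_export(env_text, "HADOOP_HEAPSIZE", "2048"), end="")
```

Write the result back to hive-env.sh and restart HiveServer2 so the new heap takes effect; jinfo can then confirm the new MaxHeapSize.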

Cause 3: YARN allocates too few resources to containers

Adjust the following parameters in /root/ha/hadoop-3.1.3/etc/hadoop/yarn-site.xml:

<!-- Minimum and maximum memory (MB) a single YARN container may be allocated -->
<property>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>512</value>
</property>
<property>
  <name>yarn.scheduler.maximum-allocation-mb</name>
  <value>12288</value>
</property>

<!-- Physical memory (MB) on each NodeManager that YARN may allocate to containers -->
<property>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>12288</value>
</property>
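Whether an executor fits depends on how its memory request compares with these three limits. A rough Python sketch, assuming Spark 2.x defaults on YARN (per-executor overhead = max(10% of executor memory, 384 MB)) and CapacityScheduler-style rounding of each request up to a multiple of yarn.scheduler.minimum-allocation-mb; the helper names and executor sizes are illustrative, and the driver/ApplicationMaster container, which also needs room, is ignored:

```python
import math

def executor_container_mb(executor_memory_mb: int,
                          overhead_factor: float = 0.10,
                          overhead_min_mb: int = 384) -> int:
    """Executor heap plus off-heap overhead, as Spark 2.x requests it by default."""
    overhead = max(int(executor_memory_mb * overhead_factor), overhead_min_mb)
    return executor_memory_mb + overhead

def executors_per_node(executor_memory_mb: int,
                       min_alloc_mb: int = 512,    # yarn.scheduler.minimum-allocation-mb
                       max_alloc_mb: int = 12288,  # yarn.scheduler.maximum-allocation-mb
                       node_mb: int = 12288) -> int:  # yarn.nodemanager.resource.memory-mb
    request = executor_container_mb(executor_memory_mb)
    if request > max_alloc_mb:
        # Spark checks this before submitting; such a request cannot be granted.
        raise ValueError(f"request of {request} MB exceeds maximum-allocation-mb={max_alloc_mb}")
    # Assumed scheduler behaviour: round up to a multiple of the minimum allocation.
    container = math.ceil(request / min_alloc_mb) * min_alloc_mb
    return node_mb // container

for mem in (1024, 4096):
    print(mem, "MB executor ->", executors_per_node(mem), "executors per node")
```

With the values above, a 4096 MB executor asks for 4505 MB, which rounds up to a 4608 MB container, so only two fit on a 12288 MB node.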

Cause 4: HiveServer2 connection timeout

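In the Hive conf directory, /opt/module/hive-3.1.2/conf, the file hive-default.xml.template lists the property that controls this wait time. The template is for reference only and Hive ignores changes made to it, so add the property to hive-site.xml in the same directory to lengthen the wait: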

<property>
  <name>hive.server2.sleep.interval.between.start.attempts</name>
  <value>60s</value>
  <description>
    Expects a time value with unit (d/day, h/hour, m/min, s/sec, ms/msec, us/usec, ns/nsec), which is msec if not specified.
    The time should be in between 0 msec (inclusive) and 9223372036854775807 msec (inclusive).
    Amount of time to sleep between HiveServer2 start attempts. Primarily meant for tests
  </description>
</property>
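The description above defines the value format: a number with an optional unit, milliseconds by default, between 0 and 9223372036854775807 ms. As an illustration, a Python sketch that normalizes such values to milliseconds, following only the unit spellings listed in the description (Hive's own parser is not reproduced here and may accept more); parse_hive_time_ms is a hypothetical helper:

```python
import re

# Unit spellings copied from the property description; Hive's own parser may
# accept more variants, so treat this as an illustration only.
_MS_PER_UNIT = {
    "d": 86_400_000, "day": 86_400_000,
    "h": 3_600_000, "hour": 3_600_000,
    "m": 60_000, "min": 60_000,
    "s": 1_000, "sec": 1_000,
    "ms": 1, "msec": 1,
}
# Sub-millisecond units: divide, truncating toward zero.
_UNITS_PER_MS = {"us": 1_000, "usec": 1_000, "ns": 1_000_000, "nsec": 1_000_000}
_MAX_MS = 9_223_372_036_854_775_807

def parse_hive_time_ms(text: str) -> int:
    """Convert a value such as '60s' or '500' (msec by default) to milliseconds."""
    match = re.fullmatch(r"\s*(\d+)\s*([A-Za-z]*)\s*", text)
    if match is None:
        raise ValueError(f"not a time value: {text!r}")
    amount = int(match.group(1))
    unit = match.group(2).lower() or "msec"
    if unit in _MS_PER_UNIT:
        ms = amount * _MS_PER_UNIT[unit]
    elif unit in _UNITS_PER_MS:
        ms = amount // _UNITS_PER_MS[unit]
    else:
        raise ValueError(f"unknown time unit: {unit!r}")
    if ms > _MAX_MS:  # \d+ already rules out negative values
        raise ValueError(f"{text!r} is out of range")
    return ms

print(parse_hive_time_ms("60s"))  # 60000
```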

浙公网安备 33010602011771号
