NFS Dynamic Storage Provisioning in k8s


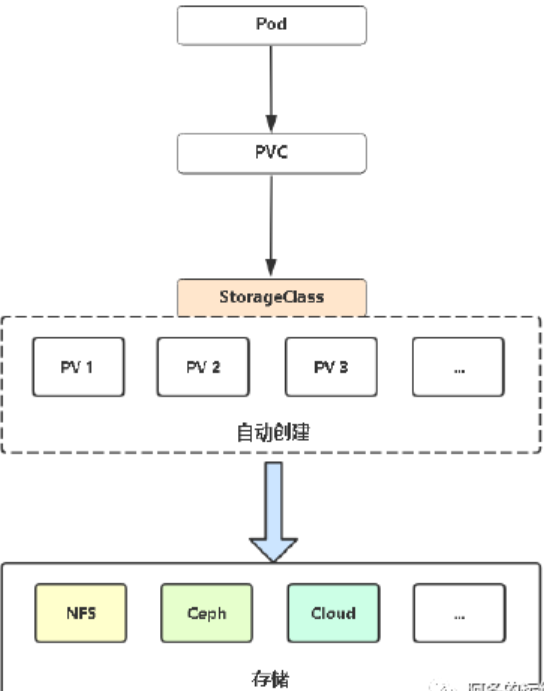

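Compared with static provisioning, dynamic provisioning has these advantages:

- The administrator does not need to create a large number of PVs in advance as storage resources.
- With static provisioning, a user's PVC can only be satisfied if some pre-created PV offers at least the requested capacity and supports the requested access mode. With dynamic provisioning, a PV is created to fit each claim, so no such pre-matching is needed.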
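To make the static-provisioning constraint concrete, here is a simplified Python sketch of the matching a PVC goes through when only pre-created PVs exist. It is a hypothetical model, not the Kubernetes binder. The classes `PV` and `PVC` and the function `find_static_match` are made up for illustration. The sketch only compares capacity (in GiB) and access modes, and like the real PV controller it prefers the smallest PV that fits. It ignores storage classes, label selectors and volume modes.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class PV:
    name: str
    capacity_gi: int
    access_modes: FrozenSet[str]


@dataclass
class PVC:
    request_gi: int
    access_modes: FrozenSet[str]


def find_static_match(claim: PVC, volumes: List[PV]) -> Optional[PV]:
    """Return the smallest pre-created PV that can satisfy the claim, or None."""
    candidates = [
        pv
        for pv in volumes
        # The PV must be at least as large as the request...
        if pv.capacity_gi >= claim.request_gi
        # ...and must support every access mode the claim asks for.
        and claim.access_modes <= pv.access_modes
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)


pool = [
    PV("pv-small-rwo", 2, frozenset({"ReadWriteOnce"})),
    PV("pv-10g-rwx", 10, frozenset({"ReadWriteMany"})),
    PV("pv-20g-rwx", 20, frozenset({"ReadWriteMany"})),
]
match = find_static_match(PVC(5, frozenset({"ReadWriteMany"})), pool)
print(match.name if match else None)  # pv-10g-rwx
too_big = find_static_match(PVC(50, frozenset({"ReadWriteMany"})), pool)
print(too_big)  # None: an administrator would have to create a larger PV first
```

With dynamic provisioning, the second claim would not stay unmatched. A new PV sized for it would be created on demand.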

This article uses NFS as the storage backend for dynamic provisioning. The steps are as follows:

1. Prepare the environment

| IP | Role |
| --- | --- |
| 192.168.73.138 | k8s-master |
| 192.168.73.139 | k8s-node1 |
| 192.168.73.140 | k8s-node2 |
| 192.168.73.136 | nfs (NFS server installed here) |

2. Set up the NFS service

Install the NFS server. NFS needs two packages, nfs-utils and rpcbind; installing nfs-utils also installs rpcbind:

```shell
[root@bogon harbor]# yum install nfs-utils -y
```

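2.1 Edit the exports file

Add the shared directories to /etc/exports, one directory per line. Each line has three parts: the directory comes first, followed by the client(s), with configuration options in parentheses after each client. The format is: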

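```
<NFS share path> <client IP or name>(<option1>,<option2>,...,<optionN>)

# example: /home/nfs 192.168.64.134(rw,sync,fsid=0) 192.168.64.135(rw,sync,fsid=0)
# Part 1: /home/nfs, the local directory to share.
# Part 2: the hosts allowed to access it: a single IP (192.168.64.134) or a subnet (192.168.64.0/24); "*" means all hosts.
# Part 3: the options:
#   rw means read-write, ro means read-only;
#   sync: synchronous mode, writes are committed to disk before the server replies;
#   async: the server may reply before data reaches disk, and data in memory is written to disk periodically;
#   no_root_squash: root on the client keeps full root privileges on the shared directory, just as on a local directory. Insecure, not recommended;
#   root_squash: the opposite of no_root_squash; client root is mapped to an unprivileged anonymous user, i.e. root is restricted;
#   all_squash: every NFS user, whoever it is, is mapped to a single unprivileged anonymous user;
#   anonuid/anongid: used together with root_squash and all_squash to set the uid and gid that squashed users are mapped to; this uid and gid should exist in the server's /etc/passwd;
#   fsid=0: (NFSv4) exports /home/nfs as the root of the shared file system tree.

# A simpler example that shares /home/nfs with all hosts:
/home/nfs *(rw,sync,no_root_squash)
```

In this setup, create /opt/k8s on the NFS server and export it to the 192.168.73.0/24 subnet:

```shell
[root@bogon harbor]# mkdir /opt/k8s
[root@bogon harbor]# vim /etc/exports
```

Line added to /etc/exports:

```
/opt/k8s 192.168.73.0/24(rw,no_root_squash,no_all_squash,sync)
```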
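The three-part line format can be illustrated with a small Python sketch. It splits one exports line into its path and (client, options) pairs, then checks whether a given node IP is covered by the client spec. The function names `parse_exports_line` and `client_allowed` are hypothetical. This is only an illustration and not how nfs-utils parses the file. It handles `*`, single IPs and CIDR subnets, but not hostnames, wildcards such as `*.example.com`, netgroups, quoted paths or line continuations.

```python
import ipaddress
from typing import List, Tuple


def parse_exports_line(line: str) -> Tuple[str, List[Tuple[str, List[str]]]]:
    """Split '<path> <client>(<opt>,...) ...' into the path and (client, options) pairs."""
    line = line.split("#", 1)[0].strip()  # drop a trailing comment
    if not line:
        raise ValueError("empty or comment-only line")
    path, *entries = line.split()
    clients = []
    for entry in entries:
        host, _, rest = entry.partition("(")
        options = rest.rstrip(")").split(",") if rest else []
        clients.append((host, options))
    return path, clients


def client_allowed(client_spec: str, ip: str) -> bool:
    """True if ip is covered by '*', a single IP, or a CIDR block such as 192.168.73.0/24."""
    if client_spec == "*":
        return True
    return ipaddress.ip_address(ip) in ipaddress.ip_network(client_spec, strict=False)


path, clients = parse_exports_line("/opt/k8s 192.168.73.0/24(rw,no_root_squash,no_all_squash,sync)")
print(path, clients)
spec = clients[0][0]
for node_ip in ("192.168.73.139", "192.168.73.140", "10.0.0.5"):
    print(node_ip, client_allowed(spec, node_ip))
```

Both k8s nodes fall inside 192.168.73.0/24, which they must, because the kubelet on each node mounts the share for the pods scheduled there.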

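2.2 Enable the services at boot and start them

```shell
[root@bogon harbor]# systemctl enable rpcbind.service
[root@bogon harbor]# systemctl enable nfs-server.service
[root@bogon harbor]# systemctl start nfs
[root@bogon harbor]# systemctl start rpcbind
[root@bogon harbor]# ps -ef|grep nfs
nfsnobo+  57673  57599  0 11:04 ?        00:00:00 nginx: worker process
nfsnobo+  58737  58578  0 11:05 ?        00:00:00 nginx: worker process
root      61199   7659  0 15:14 pts/0    00:00:00 grep --color=auto nfs
```

The two `nginx: worker process` lines match only because they run as the `nfsnobody` user (truncated to `nfsnobo+`); they are not NFS processes. `systemctl status nfs-server` and `exportfs -v` are more direct checks that the server is running and exporting /opt/k8s.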

2.3 Disable the firewall


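Then test from a client machine: install nfs-utils, mount the share and check it:

```shell
yum install nfs-utils -y                       # install the client
mount -t nfs 192.168.73.136:/opt/k8s /mnt/     # test mount
cd /mnt
df -h                                          # check whether the mount succeeded
```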

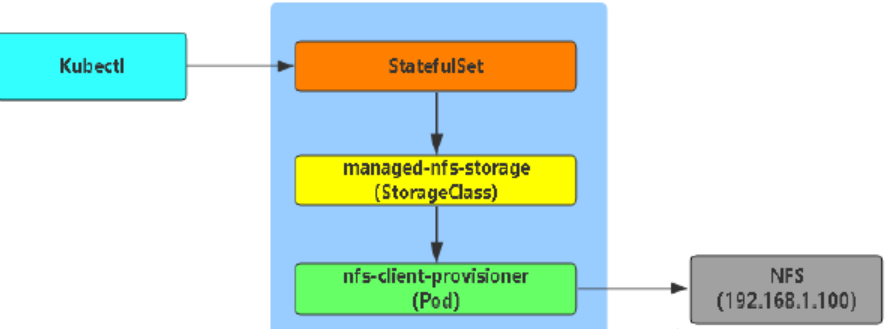

3. Deploy dynamic PersistentVolume provisioning

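The core of the Dynamic Provisioning mechanism is the StorageClass API object.

A StorageClass declares the storage plugin (the provisioner) that automatically creates PVs for claims referencing that class.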
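The division of labor between a StorageClass and its provisioner can be sketched as a toy Python reconciliation loop. A provisioner only acts on unbound claims whose StorageClass names it, so the provisioner name declared in a StorageClass must match the name the running provisioner answers to. This is a simplified, hypothetical model with made-up names (`Claim`, `Volume`, `provision_pending`). Binding is done inline here instead of by the PV controller. A random UUID stands in for the claim UID that Kubernetes uses in the `pvc-<uid>` PV name.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Claim:
    namespace: str
    name: str
    storage_class: str
    request: str
    volume_name: Optional[str] = None  # filled in once the claim is bound


@dataclass
class Volume:
    name: str
    claim_ref: str
    capacity: str
    storage_class: str


def provision_pending(claims: List[Claim], classes: Dict[str, str], my_name: str) -> List[Volume]:
    """Create a PV for every unbound claim whose StorageClass points at this provisioner.

    `classes` maps StorageClass name -> provisioner name.
    """
    created = []
    for claim in claims:
        if claim.volume_name is not None:
            continue  # already bound, nothing to do
        if classes.get(claim.storage_class) != my_name:
            continue  # unknown class, or another provisioner is responsible
        pv = Volume(
            name=f"pvc-{uuid.uuid4()}",  # Kubernetes uses the claim's UID here
            claim_ref=f"{claim.namespace}/{claim.name}",
            capacity=claim.request,
            storage_class=claim.storage_class,
        )
        claim.volume_name = pv.name  # simplification: the PV controller does the binding
        created.append(pv)
    return created


classes = {"managed-nfs-storage": "fuseim.pri/ifs"}
claims = [
    Claim("default", "my-pvc", "managed-nfs-storage", "5Gi"),
    Claim("default", "other-pvc", "some-other-class", "1Gi"),
]
for pv in provision_pending(claims, classes, my_name="fuseim.pri/ifs"):
    print(pv.claim_ref, pv.capacity, pv.name.startswith("pvc-"))
print([c.volume_name is not None for c in claims])
```

A second pass over the same claims creates nothing, because the first claim is already bound and the second belongs to a different class.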

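The manifests for NFS-backed data persistence are based on:

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

That repository has since been archived; the NFS client provisioner is now maintained as kubernetes-sigs/nfs-subdir-external-provisioner.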

3.1 Deploy the storage provisioner

The provisioner creates PVs dynamically in response to PVC requests.

```shell
[root@k8s-master nfs-client]# cat deployment.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1   # was extensions/v1beta1, which Kubernetes 1.16+ no longer serves
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  selector:           # required by apps/v1; must match the pod template labels
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.73.136
            - name: NFS_PATH
              value: /opt/k8s
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.73.136
            path: /opt/k8s
```

Create it:

```shell
[root@k8s-master nfs-client]# kubectl apply -f deployment.yaml
serviceaccount/nfs-client-provisioner created
deployment.extensions/nfs-client-provisioner created
[root@k8s-master nfs-client]# kubectl get deploy
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
nfs-client-provisioner   1/1     1            1           61s
[root@k8s-master nfs-client]# kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-595f657497-9k878   1/1     Running   0          99s
```

3.2 Deploy the StorageClass

```shell
[root@k8s-master nfs-client]# cat class.yaml
```

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "true"   # "true": keep a deleted volume's data as an archived- directory; "false": delete it
```

Create it:

```shell
[root@k8s-master nfs-client]# kubectl apply -f class.yaml
[root@k8s-master nfs-client]# kubectl get sc
NAME                  PROVISIONER      AGE
managed-nfs-storage   fuseim.pri/ifs   142m
```

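3.3 Set up RBAC permissions

A ServiceAccount is an account used by the processes running in a pod; it gives those processes an identity. The manifest below creates the provisioner's ServiceAccount and binds two sets of permissions to it: a ClusterRole for managing PVs, PVCs, StorageClasses and events, and a Role on endpoints, which the provisioner uses for leader election.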

```shell
[root@k8s-master nfs-client]# cat rbac.yaml
```

```yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Create the resources:

```shell
[root@k8s-master nfs-client]# kubectl apply -f rbac.yaml
```
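How the ClusterRole rules grant access can be modeled in a few lines of Python. The sketch below is a simplified, hypothetical evaluator: `RUNNER_RULES`, `allowed` and `_covers` are made-up names. It checks whether any rule covers an (API group, resource, verb) triple and treats `*` as a wildcard. It ignores resourceNames, non-resource URLs and role aggregation. The rules are transcribed by hand from the `nfs-client-provisioner-runner` ClusterRole in rbac.yaml.

```python
from typing import Dict, List

# Rules of the nfs-client-provisioner-runner ClusterRole, transcribed by hand.
RUNNER_RULES: List[Dict[str, List[str]]] = [
    {"apiGroups": [""], "resources": ["persistentvolumes"],
     "verbs": ["get", "list", "watch", "create", "delete"]},
    {"apiGroups": [""], "resources": ["persistentvolumeclaims"],
     "verbs": ["get", "list", "watch", "update"]},
    {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["events"],
     "verbs": ["create", "update", "patch"]},
]


def _covers(values: List[str], wanted: str) -> bool:
    """A rule field matches if it lists the value explicitly or contains '*'."""
    return "*" in values or wanted in values


def allowed(rules: List[Dict[str, List[str]]], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is purely additive: access is granted if any single rule matches."""
    return any(
        _covers(r["apiGroups"], api_group)
        and _covers(r["resources"], resource)
        and _covers(r["verbs"], verb)
        for r in rules
    )


print(allowed(RUNNER_RULES, "", "persistentvolumes", "create"))          # True
print(allowed(RUNNER_RULES, "", "persistentvolumeclaims", "delete"))     # False
print(allowed(RUNNER_RULES, "storage.k8s.io", "storageclasses", "watch"))  # True
```

On a live cluster, the same question can be put to the API server directly, for example with `kubectl auth can-i create persistentvolumes --as=system:serviceaccount:default:nfs-client-provisioner`.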

3.4 Deploy a pod to verify dynamic provisioning

```shell
[root@k8s-master nfs-client]# cat pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
      volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumes:
    - name: www
      persistentVolumeClaim:
        claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  storageClassName: "managed-nfs-storage"   # must match the name of the StorageClass created above
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```
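The `5Gi` in the claim is a Kubernetes resource quantity. The `Gi` suffix is binary (2^30 bytes), while `G` is decimal (10^9 bytes). The nfs-client provisioner records this size on the PV, but the NFS directory it creates carries no quota, so the limit is not enforced. Below is a minimal Python sketch of the conversion. It assumes integer values with an optional suffix and does not handle fractional values such as `1.5Gi`, exponents or milli-units. `quantity_to_bytes` is a hypothetical helper, not part of any Kubernetes client library.

```python
import re

# Binary suffixes are powers of 1024, decimal suffixes powers of 1000.
SUFFIX_FACTORS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
    "": 1,
}


def quantity_to_bytes(quantity: str) -> int:
    """Convert an integer quantity such as '5Gi' or '500M' to bytes."""
    match = re.fullmatch(r"(\d+)([KMGTPE]i|[kMGTPE])?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.group(1), match.group(2) or ""
    return int(number) * SUFFIX_FACTORS[suffix]


print(quantity_to_bytes("5Gi"))  # 5368709120
print(quantity_to_bytes("5G"))   # 5000000000
```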

Create them:

```shell
[root@k8s-master nfs-client]# kubectl apply -f pod.yaml
[root@k8s-master nfs-client]# kubectl get pod,pv,pvc
NAME                                          READY   STATUS    RESTARTS   AGE
pod/my-pod                                    1/1     Running   0          18s
pod/nfs-client-provisioner-595f657497-9k878   1/1     Running   0          13m

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS          REASON   AGE
persistentvolume/pvc-9886df29-dd4f-11e9-b836-000c297f21d7   5Gi        RWX            Delete           Bound    default/my-pvc   managed-nfs-storage            5s

NAME                           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
persistentvolumeclaim/my-pvc   Bound    pvc-9886df29-dd4f-11e9-b836-000c297f21d7   5Gi        RWX            managed-nfs-storage   18s
```

The PVC is Bound to a PV that the provisioner created automatically; no PV was created by hand.
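On the NFS server, each provisioned volume is a subdirectory of /opt/k8s. In the external-storage nfs-client provisioner source, the directory name is `<namespace>-<pvc name>-<pv name>`. When the PV is deleted and `archiveOnDelete` is not set to "false", the directory is renamed to `archived-<that name>` instead of being removed. The Python sketch below only reproduces that naming, to show where `my-pvc`'s data should end up. Treat the exact scheme as an assumption and confirm it with `ls /opt/k8s` on 192.168.73.136, since other provisioner versions may differ. `provisioned_dir` and `archived_dir` are hypothetical helpers.

```python
import posixpath

EXPORT_PATH = "/opt/k8s"  # NFS_PATH from deployment.yaml


def provisioned_dir(namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory created for a new PV: <export>/<namespace>-<pvc>-<pv>."""
    return posixpath.join(EXPORT_PATH, "-".join([namespace, pvc_name, pv_name]))


def archived_dir(provisioned: str) -> str:
    """Name the directory is renamed to when the PV is deleted and archiving is on."""
    return posixpath.join(EXPORT_PATH, "archived-" + posixpath.basename(provisioned))


pv = "pvc-9886df29-dd4f-11e9-b836-000c297f21d7"  # PV name from the kubectl output
data_dir = provisioned_dir("default", "my-pvc", pv)
print(data_dir)
print(archived_dir(data_dir))
```

Files that nginx in `my-pod` reads from /usr/share/nginx/html live in that directory on the NFS server.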

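3.5 Troubleshooting commands

When setting up dynamic provisioning, errors usually occur while creating the nfs-client-provisioner and the test pod. Use `kubectl describe pod <name>` to view the details and events of the failing pod. If the PVC stays Pending, `kubectl describe pvc my-pvc` and the provisioner's logs (`kubectl logs <provisioner pod name>`) usually show the cause.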
