Chongqing-Shanghai manual failover disaster recovery plan: execution steps

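I. Background

The Chongqing data center will lose power on January 1. To keep the hp internal systems available during the outage, backup machines need to be installed in Shanghai.

The Chongqing and Shanghai servers can reach each other over the hp VPN, but the link speeds differ enormously. For example, an iperf3 test between 153 and 154 inside Chongqing measures about 99M/s, roughly gigabit speed, while a test between 153 in Chongqing and 247 in Shanghai measures only about 6M/s. To avoid slowing down everyday access, the plan for now is a manual switchover ahead of the power outage.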
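
To get a feel for what the two iperf3 figures mean in practice, here is a rough sketch that estimates how long the same payload takes on each link. It assumes "M/s" means megabytes per second, and the 10 GB payload size is a hypothetical value chosen only for illustration.

```python
def transfer_seconds(size_mb: float, rate_mb_per_s: float) -> float:
    """Seconds needed to move size_mb megabytes at a sustained rate."""
    if rate_mb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_mb / rate_mb_per_s


LAN_RATE = 99.0            # 153 <-> 154, from the iperf3 test (assumed MB/s)
VPN_RATE = 6.0             # 153 <-> 247, from the iperf3 test (assumed MB/s)
PAYLOAD_MB = 10 * 1024     # hypothetical 10 GB payload, not from the source

lan = transfer_seconds(PAYLOAD_MB, LAN_RATE)
vpn = transfer_seconds(PAYLOAD_MB, VPN_RATE)
print(f"LAN: {lan / 60:.1f} min, VPN: {vpn / 60:.1f} min, ratio: {vpn / lan:.1f}x")
```

The ratio does not depend on the payload size, which is why serving everyday traffic across the VPN is avoided.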

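When there is no outage, all hp front ends access the Chongqing back end, and mysql, es and redis all use the Chongqing nodes. The Shanghai database runs master-master replication (mycat is switched to Chongqing, so access speed is unaffected). The Shanghai redis cluster has 3 master nodes installed, but with all slots migrated to the Chongqing nodes. Shanghai es has 3 nodes installed, configured with node.data: false

When Chongqing loses power (this plan cannot handle an unplanned outage), the evening before the outage move the es shards to Shanghai, migrate the redis cluster slots to Shanghai, start the back end on 247 in Shanghai, and point all hp front ends at 247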

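II. Installing and preparing the es and redis cluster nodes

Before migrating redis and es data, it is best to shut down the novaback and .net servers; otherwise it is unclear whether they are affected during the migration

1. Install 3 redis nodes on 247 in Shanghai and add them as masters to the Chongqing cluster on 153, 154 and 162

2. Server cluster

Server redis nodes

node-i(15.32.134.247) 7007,7008,7009

Disable the firewall on 247: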

systemctl stop iptables

systemctl stop firewalld

Check the firewall status:

systemctl status iptables

systemctl status firewalld

3. Install gcc

redis is installed from source, so gcc must be installed before running make for redis. Run the following command to install gcc:

yum -y install gcc gcc-c++ libstdc++-devel

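4. Install ruby

Run the following commands to install ruby 2.5. If the ruby version is too old, the redis cluster cannot be set up, because redis-trib.rb is a ruby script that depends on the redis gem.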

yum install -y centos-release-scl-rh

yum install -y rh-ruby25

scl enable rh-ruby25 bash

Verify the ruby version:

ruby -v

Finally, run the following command:

gem install redis

5. Configure the redis nodes

(1) node-i(15.32.134.247)

(a). Install redis

cd /home/tools

Download redis-4.0.11, the latest version at the time, from the redis website https://redis.io/, or run wget http://download.redis.io/releases/redis-4.0.11.tar.gz

Extract redis:

tar -zxvf redis-4.0.11.tar.gz

Create the redis directories:

mkdir -p /home/tools/redis-i

mkdir -p /home/tools/redis-cluster/7007 /home/tools/redis-cluster/7008 /home/tools/redis-cluster/7009

Enter the redis-4.0.11 directory and run make to install redis into /home/tools/redis-i:

make install PREFIX=/home/tools/redis-i

Copy redis.conf into each redis cluster node directory:

cp redis.conf /home/tools/redis-cluster/7007

cp redis.conf /home/tools/redis-cluster/7008

cp redis.conf /home/tools/redis-cluster/7009

Enter /home/tools/redis-i and copy the generated bin directory into each redis cluster node directory:

cp -r bin /home/tools/redis-cluster/7007

cp -r bin /home/tools/redis-cluster/7008

cp -r bin /home/tools/redis-cluster/7009

Edit redis.conf for nodes 7007, 7008 and 7009 as follows:

7007:

bind 15.32.134.247

protected-mode no

port 7007

daemonize yes

cluster-enabled yes

cluster-node-timeout 15000

7008:

bind 15.32.134.247

protected-mode no

port 7008

daemonize yes

cluster-enabled yes

cluster-node-timeout 15000

7009:

bind 15.32.134.247

protected-mode no

port 7009

daemonize yes

cluster-enabled yes

cluster-node-timeout 15000
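
The three configurations differ only in the port, so the changed settings can be generated rather than typed three times. The sketch below prints only the settings listed above; it does not edit the files, and the helper names node_settings and render are made up for this example.

```python
CLUSTER_DIR = "/home/tools/redis-cluster"
BIND_IP = "15.32.134.247"
PORTS = (7007, 7008, 7009)


def node_settings(port: int, bind_ip: str = BIND_IP) -> dict:
    """Settings that differ from the stock redis.conf for one cluster node."""
    return {
        "bind": bind_ip,
        "protected-mode": "no",
        "port": str(port),
        "daemonize": "yes",
        "cluster-enabled": "yes",
        "cluster-node-timeout": "15000",
    }


def render(settings: dict) -> str:
    """Render settings as redis.conf lines, one 'key value' pair per line."""
    return "\n".join(f"{key} {value}" for key, value in settings.items()) + "\n"


for port in PORTS:
    print(f"# {CLUSTER_DIR}/{port}/redis.conf")
    print(render(node_settings(port)))
```

The printed lines still have to replace the matching lines in each copied redis.conf; appending them without removing the defaults would leave duplicate directives.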

(b). Start the redis nodes

Start nodes 7007, 7008 and 7009:

In each of /home/tools/redis-cluster/7007, /home/tools/redis-cluster/7008 and /home/tools/redis-cluster/7009, run the following command:

./bin/redis-server ./redis.conf

Use ps to check the running redis nodes:

ps -ef|grep redis

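6. Once 7007, 7008 and 7009 have all started normally, add them as master nodes to the Chongqing redis cluster on 153, 154 and 162

First run the following commands on server 153 to view the current Chongqing redis cluster and its nodes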

[root@novacq1 redis-4.0.11]# /root/tools/redis-4.0.11/src/redis-trib.rb info 15.99.72.153:7001

15.99.72.153:7001 (ccd2166e...) -> 52222 keys | 5461 slots | 1 slaves.

15.99.72.153:7002 (ff5aa484...) -> 52351 keys | 5461 slots | 1 slaves.

15.99.72.154:7003 (9cdb8de1...) -> 52472 keys | 5462 slots | 1 slaves.

[OK] 157045 keys in 3 masters.

9.59 keys per slot on average.

[root@novacq1 7001]# bin/redis-cli -c -h 15.99.72.153 -p 7001

15.99.72.153:7001>

15.99.72.153:7001>

15.99.72.153:7001>

15.99.72.153:7001> cluster nodes

388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006@17006 slave 9cdb8de1ca0b97694e845d344f1e4327cca48561 0 1577441148000 6 connected

ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001@17001 myself,master - 0 1577441146000 1 connected 0-5460

ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002@17002 master - 0 1577441147949 7 connected 10923-16383

c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005@17005 slave ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 0 1577441150956 7 connected

b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004@17004 slave ccd2166eb56df50ece8988f790977f61289b0084 0 1577441149954 4 connected

9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003@17003 master - 0 1577441148951 3 connected 5461-10922

15.99.72.153:7001> quit

Then, on 153, add 7007, 7008 and 7009 on 247 to the cluster. In the commands below, the first ip:port is the new node to add and the second ip:port is any node already in the cluster

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb add-node 15.32.134.247:7007 15.99.72.153:7001

>>> Adding node 15.32.134.247:7007 to cluster 15.99.72.153:7001

>>> Performing Cluster Check (using node 15.99.72.153:7001)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 15.32.134.247:7007 to make it join the cluster.

[OK] New node added correctly.

[root@novacq1 7001]#

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb add-node 15.32.134.247:7008 15.99.72.153:7001

>>> Adding node 15.32.134.247:7008 to cluster 15.99.72.153:7001

>>> Performing Cluster Check (using node 15.99.72.153:7001)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007

slots: (0 slots) master

0 additional replica(s)

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 15.32.134.247:7008 to make it join the cluster.

[OK] New node added correctly.

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb add-node 15.32.134.247:7009 15.99.72.153:7001

>>> Adding node 15.32.134.247:7009 to cluster 15.99.72.153:7001

>>> Performing Cluster Check (using node 15.99.72.153:7001)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 5c1d9192e2da819af92d4df8546967be54d1c5b1 15.32.134.247:7008

slots: (0 slots) master

0 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007

slots: (0 slots) master

0 additional replica(s)

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 15.32.134.247:7009 to make it join the cluster.

[OK] New node added correctly.

After adding 7007, 7008 and 7009, check the current state of the redis cluster again:

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb info 15.99.72.153:7001

15.99.72.153:7001 (ccd2166e...) -> 52222 keys | 5461 slots | 1 slaves.

15.99.72.153:7002 (ff5aa484...) -> 52351 keys | 5461 slots | 1 slaves.

15.32.134.247:7007 (8dbea4fa...) -> 0 keys | 0 slots | 0 slaves.

15.99.72.154:7003 (9cdb8de1...) -> 52472 keys | 5462 slots | 1 slaves.

15.32.134.247:7008 (5c1d9192...) -> 0 keys | 0 slots | 0 slaves.

15.32.134.247:7009 (963bb18d...) -> 0 keys | 0 slots | 0 slaves.

[OK] 157045 keys in 6 masters.

9.59 keys per slot on average.

[root@novacq1 7001]# bin/redis-cli -c -h 15.99.72.153 -p 7001

15.99.72.153:7001>

15.99.72.153:7001>

15.99.72.153:7001> cluster nodes

ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002@17002 master - 0 1577441805356 7 connected 10923-16383

b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004@17004 slave ccd2166eb56df50ece8988f790977f61289b0084 0 1577441803352 4 connected

8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007@17007 master - 0 1577441804603 10 connected

9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003@17003 master - 0 1577441801348 3 connected 5461-10922

ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001@17001 myself,master - 0 1577441805000 1 connected 0-5460

388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006@17006 slave 9cdb8de1ca0b97694e845d344f1e4327cca48561 0 1577441801000 6 connected

5c1d9192e2da819af92d4df8546967be54d1c5b1 15.32.134.247:7008@17008 master - 0 1577441801000 9 connected

963bb18d24abff0543811b293551efe74a59db45 15.32.134.247:7009@17009 master - 0 1577441804420 0 connected

c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005@17005 slave ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 0 1577441806358 7 connected

15.99.72.153:7001> quit

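The output above shows that the 3 new masters 7007, 7008 and 7009 have no slots assigned. As long as Chongqing has power, this should in theory not affect query speed, and even killing all 3 redis nodes on 247 does not affect use of the 7001--7006 cluster (probably because masters without slots do not take part in the vote, so losing them does not count toward the majority of failed masters that makes the whole redis cluster unavailable)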
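
The same check can be done programmatically on the cluster nodes output. The sketch below is a minimal parser, assuming the field order shown in the output above (id, address, flags, master id, ping, pong, epoch, link state, then slots); the function names are made up for this example and the sample is a subset of the lines printed earlier.

```python
def parse_cluster_nodes(text: str) -> list:
    """Parse CLUSTER NODES output into a list of dicts."""
    nodes = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip blank or truncated lines
        nodes.append({
            "id": fields[0],
            "addr": fields[1].split("@")[0],   # drop the cluster bus port
            "flags": fields[2].split(","),
            "master_id": fields[3],
            "slots": fields[8:],               # empty when no slots are assigned
        })
    return nodes


def empty_masters(nodes: list) -> list:
    """Addresses of masters that currently own no slots."""
    return [n["addr"] for n in nodes if "master" in n["flags"] and not n["slots"]]


SAMPLE = """\
ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002@17002 master - 0 1577441805356 7 connected 10923-16383
8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007@17007 master - 0 1577441804603 10 connected
b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004@17004 slave ccd2166eb56df50ece8988f790977f61289b0084 0 1577441803352 4 connected
"""

print(empty_masters(parse_cluster_nodes(SAMPLE)))
```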

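7. Next, migrate the slots. The evening before the Chongqing outage, move the slots of the Chongqing masters (7001 and 7002 on 153, 7003 on 154; 162 only hosts slaves) to 7007, 7008 and 7009 on 247

Step one: move the 5461 slots of 15.99.72.153:7001 (ccd2166e...) -> 52223 keys | 5461 slots | 1 slaves. to 7007 on 247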

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb reshard 15.32.134.247:7007

>>> Performing Cluster Check (using node 15.32.134.247:7007)

M: 8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007

slots: (0 slots) master

0 additional replica(s)

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 963bb18d24abff0543811b293551efe74a59db45 15.32.134.247:7009

slots: (0 slots) master

0 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: 5c1d9192e2da819af92d4df8546967be54d1c5b1 15.32.134.247:7008

slots: (0 slots) master

0 additional replica(s)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5461

What is the receiving node ID? 8dbea4fac4dc3fb133fa4e946c44584cf895cd02

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:ccd2166eb56df50ece8988f790977f61289b0084

Source node #2:done

......

Moving slot 5454 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5455 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5456 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5457 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5458 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5459 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5460 from ccd2166eb56df50ece8988f790977f61289b0084

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 0 from 15.99.72.153:7001 to 15.32.134.247:7007:

[ERR] Calling MIGRATE: ERR Syntax error, try CLIENT (LIST | KILL | GETNAME | SETNAME | PAUSE | REPLY)

Fixing the error

cd /root/tools/redis-4.0.11/src

Then vim redis-trib.rb

Change source.r.client.call to source.r.call on the two lines that contain it

begin

source.r.client.call(["migrate",target.info[:host],target.info[:port],"",0,@timeout,:keys,*keys])

rescue => e

if o[:fix] && e.to_s =~ /BUSYKEY/

xputs "*** Target key exists. Replacing it for FIX."

source.r.client.call(["migrate",target.info[:host],target.info[:port],"",0,@timeout,:replace,:keys,*keys])

Then save the file

Then run /root/tools/redis-4.0.11/src/redis-trib.rb reshard 15.32.134.247:7007 again

It fails again, with the following error

[root@novacq1 src]# /root/tools/redis-4.0.11/src/redis-trib.rb reshard 15.32.134.247:7007

>>> Performing Cluster Check (using node 15.32.134.247:7007)

M: 8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007

slots: (0 slots) master

0 additional replica(s)

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 963bb18d24abff0543811b293551efe74a59db45 15.32.134.247:7009

slots: (0 slots) master

0 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: 5c1d9192e2da819af92d4df8546967be54d1c5b1 15.32.134.247:7008

slots: (0 slots) master

0 additional replica(s)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

[WARNING] Node 15.32.134.247:7007 has slots in importing state (0).

[WARNING] Node 15.99.72.153:7001 has slots in migrating state (0).

[WARNING] The following slots are open: 0

>>> Check slots coverage...

[OK] All 16384 slots covered.

*** Please fix your cluster problems before resharding

Fix

First go to server 247. The number 0 in the error [WARNING] The following slots are open: 0 above is important and is used below

[root@novasha1 7007]# bin/redis-cli -h 15.32.134.247 -c -p 7007

15.32.134.247:7007> cluster setslot 0 stable

OK

15.32.134.247:7007>

Then run the same on server 153

[root@novacq1 7001]# bin/redis-cli -h 15.99.72.153 -c -p 7001

15.99.72.153:7001> cluster setslot 0 stable

OK

15.99.72.153:7001>

Then, on 153, run /root/tools/redis-4.0.11/src/redis-trib.rb fix 15.32.134.247:7007

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb fix 15.32.134.247:7007

>>> Performing Cluster Check (using node 15.32.134.247:7007)

M: 8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007

slots: (0 slots) master

0 additional replica(s)

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 963bb18d24abff0543811b293551efe74a59db45 15.32.134.247:7009

slots: (0 slots) master

0 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: 5c1d9192e2da819af92d4df8546967be54d1c5b1 15.32.134.247:7008

slots: (0 slots) master

0 additional replica(s)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
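
When more than one slot is left open, the slot numbers and the affected nodes can be pulled out of the warnings instead of being read by eye. The sketch below only builds the cluster setslot ... stable commands as text and assumes the warning format printed above; the function names are made up for this example.

```python
import re

# Matches lines such as:
# [WARNING] Node 15.32.134.247:7007 has slots in importing state (0).
NODE_WARNING = re.compile(
    r"Node (\S+):(\d+) has slots in (?:importing|migrating) state \(([\d,]+)\)"
)
OPEN_SLOTS = re.compile(r"The following slots are open: ([\d,]+)")


def open_slots(check_output: str) -> list:
    """Slot numbers reported as open by the cluster check."""
    match = OPEN_SLOTS.search(check_output)
    return [int(s) for s in match.group(1).split(",")] if match else []


def stable_commands(check_output: str) -> list:
    """One 'cluster setslot <n> stable' command per affected node and slot."""
    commands = []
    for host, port, slots in NODE_WARNING.findall(check_output):
        for slot in slots.split(","):
            commands.append(
                f"bin/redis-cli -h {host} -p {port} cluster setslot {slot} stable"
            )
    return commands


SAMPLE = """\
[WARNING] Node 15.32.134.247:7007 has slots in importing state (0).
[WARNING] Node 15.99.72.153:7001 has slots in migrating state (0).
[WARNING] The following slots are open: 0
"""

print(open_slots(SAMPLE))
for command in stable_commands(SAMPLE):
    print(command)
```

Each printed command is meant to be run from the redis directory of the node it names, as in the interactive sessions above.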

Then reshard again

/root/tools/redis-4.0.11/src/redis-trib.rb reshard 15.32.134.247:7007

[root@novacq1 7001]# /root/tools/redis-4.0.11/src/redis-trib.rb reshard 15.32.134.247:7007

>>> Performing Cluster Check (using node 15.32.134.247:7007)

M: 8dbea4fac4dc3fb133fa4e946c44584cf895cd02 15.32.134.247:7007

slots: (0 slots) master

0 additional replica(s)

S: b7c383c0663afd90a2e66b830b648180de8ce882 15.99.72.154:7004

slots: (0 slots) slave

replicates ccd2166eb56df50ece8988f790977f61289b0084

M: 963bb18d24abff0543811b293551efe74a59db45 15.32.134.247:7009

slots: (0 slots) master

0 additional replica(s)

S: c0dd3cddd8b2a5f842c46848830701b7fdc0a27c 15.99.72.162:7005

slots: (0 slots) slave

replicates ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c

M: ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c 15.99.72.153:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 388bd7613d5eb7334fb2734dd2cb842f01cdae58 15.99.72.162:7006

slots: (0 slots) slave

replicates 9cdb8de1ca0b97694e845d344f1e4327cca48561

M: 5c1d9192e2da819af92d4df8546967be54d1c5b1 15.32.134.247:7008

slots: (0 slots) master

0 additional replica(s)

M: ccd2166eb56df50ece8988f790977f61289b0084 15.99.72.153:7001

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 9cdb8de1ca0b97694e845d344f1e4327cca48561 15.99.72.154:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5461

What is the receiving node ID? 8dbea4fac4dc3fb133fa4e946c44584cf895cd02

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:ccd2166eb56df50ece8988f790977f61289b0084

Source node #2:done

Moving slot 5457 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5458 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5459 from ccd2166eb56df50ece8988f790977f61289b0084

Moving slot 5460 from ccd2166eb56df50ece8988f790977f61289b0084

Do you want to proceed with the proposed reshard plan (yes/no)? yes

The data migration can now proceed; it takes quite a while.

Moving slot 203 from 15.99.72.153:7001 to 15.32.134.247:7007: .........

Moving slot 204 from 15.99.72.153:7001 to 15.32.134.247:7007: ........

Moving slot 205 from 15.99.72.153:7001 to 15.32.134.247:7007: ......

Moving slot 206 from 15.99.72.153:7001 to 15.32.134.247:7007: ...............

Moving slot 207 from 15.99.72.153:7001 to 15.32.134.247:7007: ..........

Moving slot 208 from 15.99.72.153:7001 to 15.32.134.247:7007: ........

Moving slot 209 from 15.99.72.153:7001 to 15.32.134.247:7007: .....

Moving slot 210 from 15.99.72.153:7001 to 15.32.134.247:7007: ..............

Moving slot 211 from 15.99.72.153:7001 to 15.32.134.247:7007: .............

Moving slot 212 from 15.99.72.153:7001 to 15.32.134.247:7007: ..........

Moving slot 213 from 15.99.72.153:7001 to 15.32.134.247:7007: .........

Moving slot 214 from 15.99.72.153:7001 to 15.32.134.247:7007: ............

Moving slot 5460 from 421123bf7fb3a4061e34cab830530d87b21148ee

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 5045 from 15.99.72.165:7004 to 15.15.181.147:7008:

[ERR] Calling MIGRATE: ERR Target instance replied with error: BUSYKEY Target key name already exists.

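If reshard fails with the error above, I have not found a good fix yet. My current workaround is to run flushdb directly on 15.99.72.165:7004 and then migrate again, after which the error no longer appears. Note that flushdb deletes every key on that node, so this only suits data that can be rebuilt.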
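
Step 7 only walks through the first move (7001 to 7007). A possible plan for all three masters is sketched below. The node ids and slot counts come from the output above, but the pairing of 7003 with 7008 and 7002 with 7009 is an assumption, and so is the use of the --from, --to and --slots options of redis-trib.rb reshard, which should be checked against the script's help output before use. The code only prints commands.

```python
REDIS_TRIB = "/root/tools/redis-4.0.11/src/redis-trib.rb"

# (address, node id, slot count) of the Chongqing masters, from the info output.
SOURCES = [
    ("15.99.72.153:7001", "ccd2166eb56df50ece8988f790977f61289b0084", 5461),
    ("15.99.72.154:7003", "9cdb8de1ca0b97694e845d344f1e4327cca48561", 5462),
    ("15.99.72.153:7002", "ff5aa484136e7504d9ae4a8e6d795cf1ed4c3a0c", 5461),
]

# (address, node id) of the Shanghai masters; the pairing order is assumed.
TARGETS = [
    ("15.32.134.247:7007", "8dbea4fac4dc3fb133fa4e946c44584cf895cd02"),
    ("15.32.134.247:7008", "5c1d9192e2da819af92d4df8546967be54d1c5b1"),
    ("15.32.134.247:7009", "963bb18d24abff0543811b293551efe74a59db45"),
]


def build_plan(sources: list, targets: list) -> list:
    """Pair each source master with one target and move all of its slots."""
    if len(sources) != len(targets):
        raise ValueError("need exactly one target per source")
    plan = []
    for (src_addr, src_id, slots), (dst_addr, dst_id) in zip(sources, targets):
        command = (
            f"{REDIS_TRIB} reshard --from {src_id} --to {dst_id} "
            f"--slots {slots} {dst_addr}"
        )
        plan.append({"from": src_addr, "to": dst_addr, "slots": slots, "command": command})
    return plan


plan = build_plan(SOURCES, TARGETS)
for step in plan:
    print(f"# {step['from']} -> {step['to']} ({step['slots']} slots)")
    print(step["command"])
```

Running the moves one at a time and checking the cluster after each keeps a failed migration confined to a single source node.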

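2. Install 3 es nodes on 247 in Shanghai and have them discover the Chongqing es cluster

To avoid split brain, the number of master-eligible nodes should be odd, for example 3. Because Chongqing is the site that will lose power, I configured 2 master-eligible nodes in Shanghai and 1 in Chongqing (if Shanghai were the site losing power, it should be 1 in Shanghai and 2 in Chongqing)

This ensures the es cluster in Shanghai stays usable when Chongqing loses power

discovery.zen.minimum_master_nodes is (N/2)+1 using integer division, where N is the number of master-eligible nodes. For example, with 3 master-eligible nodes: 3/2+1=2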

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2
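
The quorum rule and the choice of where to place the master-eligible nodes can be checked with a few lines of arithmetic. This is a sketch of the reasoning only; the site names in the dictionary are labels chosen for this example.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Quorum size: (N / 2) + 1 with integer division."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def survives(site_counts: dict, lost_site: str) -> bool:
    """True if the remaining sites still hold a quorum of master-eligible nodes."""
    total = sum(site_counts.values())
    remaining = total - site_counts[lost_site]
    return remaining >= minimum_master_nodes(total)


layout = {"chongqing": 1, "shanghai": 2}   # placement described above

print(minimum_master_nodes(3))
print(survives(layout, "chongqing"))
print(survives(layout, "shanghai"))
```

With this layout a Chongqing outage leaves 2 of 3 master-eligible nodes, which meets the quorum of 2, while a Shanghai outage would leave only 1 and the cluster could not elect a master.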

First install a master_sha node on 247

mkdir -p /home/tools/elasticsearch/conf

cd /home/tools/elasticsearch/conf

touch es1.yml

vim es1.yml

# Cluster name; must be identical on all nodes

cluster.name: "nova_es"

# Node name

node.name: master_sha

# Master-eligible node

node.master: true

node.data: false

http.cors.enabled: true

http.cors.allow-origin: "*"

network.bind_host: 0.0.0.0

network.publish_host: 15.32.134.247

network.host: 15.32.134.247

http.port: 9200

transport.tcp.port: 9300

# Addresses of the master-eligible nodes

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 6s

Then run

docker run -d --name es5_6_13master_sha -p 9200:9200 -p 9300:9300 --privileged=true -v /home/tools/elasticsearch/conf/es1.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /home/tools/elasticsearch/data:/usr/share/elasticsearch/data elasticsearch:5.6.13

Then install a node2_sha node on 247

mkdir -p /home/tools/elasticsearch2/conf

cd /home/tools/elasticsearch2/conf

touch es2.yml

vim es2.yml

# Cluster name; must be identical on all nodes

cluster.name: "nova_es"

# Node name

node.name: node2_sha

# Master-eligible node (the second Shanghai master)

node.master: true

node.data: false

http.cors.enabled: true

http.cors.allow-origin: "*"

network.bind_host: 0.0.0.0

network.publish_host: 15.32.134.247

network.host: 15.32.134.247

http.port: 9201

transport.tcp.port: 9301

# Addresses of the master-eligible nodes

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 6s

Then run

docker run -d --name es5_6_13node2_sha -p 9201:9201 -p 9301:9301 --privileged=true -v /home/tools/elasticsearch2/conf/es2.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /home/tools/elasticsearch2/data:/usr/share/elasticsearch/data elasticsearch:5.6.13

Then install a node3_sha node on 247

mkdir -p /home/tools/elasticsearch3/conf

cd /home/tools/elasticsearch3/conf

touch es3.yml

vim es3.yml

# Cluster name; must be identical on all nodes

cluster.name: "nova_es"

# Node name

node.name: node3_sha

# Not master-eligible

node.master: false

node.data: false

http.cors.enabled: true

http.cors.allow-origin: "*"

network.bind_host: 0.0.0.0

network.publish_host: 15.32.134.247

network.host: 15.32.134.247

http.port: 9202

transport.tcp.port: 9302

# Addresses of the master-eligible nodes

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 6s

Then run

docker run -d --name es5_6_13node3_sha -p 9202:9202 -p 9302:9302 --privileged=true -v /home/tools/elasticsearch3/conf/es3.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /home/tools/elasticsearch3/data:/usr/share/elasticsearch/data elasticsearch:5.6.13

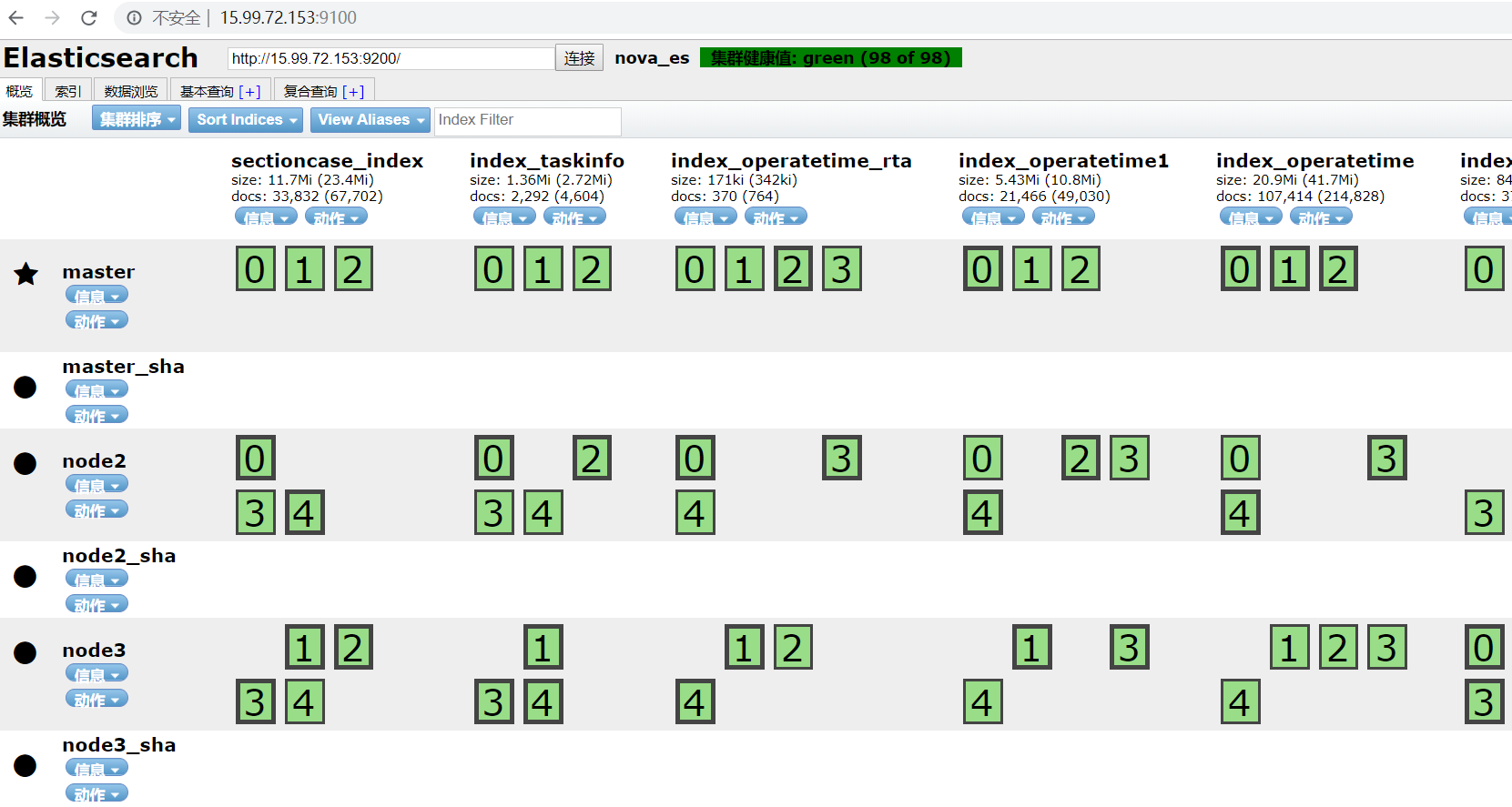

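After the 3 nodes on 247 in Shanghai start, no shards are allocated to them because node.data is false, so Chongqing query speed is unaffected while there is no outage. When Chongqing is about to lose power, set node.data: true, move the shards onto the 3 Shanghai nodes on 247, and then shut down the 3 Chongqing es nodes one at a time. As the figure shows, there are currently no shards on the 3 Shanghai nodes

Because 247 in Shanghai now has the new master-eligible nodes master_sha and node2_sha, the es configuration on the three Chongqing nodes 153, 154 and 162 must also be updated to add them to the discovery hosts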

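On 153, run vim /usr/local/elasticsearch/conf/es1.yml

# Cluster name; must be identical on all nodes

cluster.name: "nova_es"

## Name of this node

node.name: master

## Master-eligible node

node.master: true

## Whether this node stores data

node.data: true

## head plugin settings

http.cors.enabled: true

http.cors.allow-origin: "*"

## IPs allowed to connect; all are allowed here

network.bind_host: 0.0.0.0

## Address this node publishes to other nodes; set to the ip of the machine hosting master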

network.publish_host: 15.99.72.153

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 6s

On 154, run vim /usr/local/elasticsearch/conf/es2.yml

# Cluster name; must be identical on all nodes

cluster.name: "nova_es"

## Node name

node.name: node2

## Not master-eligible

node.master: false

node.data: true

http.cors.enabled: true

http.cors.allow-origin: "*"

network.bind_host: 0.0.0.0

network.publish_host: 15.99.72.154

## Addresses of the master-eligible nodes

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 6s

On 162, run vim /usr/local/elasticsearch/conf/es3.yml

# Cluster name; must be identical on all nodes

cluster.name: "nova_es"

## Node name

node.name: node3

### Not master-eligible

node.master: false

node.data: true

http.cors.enabled: true

http.cors.allow-origin: "*"

network.bind_host: 0.0.0.0

network.publish_host: 15.99.72.162

### Addresses of the master-eligible nodes

discovery.zen.ping.unicast.hosts: ["15.99.72.153:9300","15.32.134.247:9300","15.32.134.247:9301"]

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 6s

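Then restart the es nodes on 162, 154 and 153, in that order

The es nodes on 153, 154 and 162 all have their memory set to 6G, so the 3 nodes on 247 need to be set to 6G as well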

find /var/lib/docker/ -name jvm.options

[root@novasha1 conf]# find /var/lib/docker/ -name jvm.options

/var/lib/docker/overlay2/04bc8036e827a3a680db855af6192f361d6c3cc3188b7c16c526cce68e2317e8/diff/etc/elasticsearch/jvm.options

/var/lib/docker/overlay2/02a81caa346ccbb1f679fda0ac0d62a24a7d6294dc2ef6cdf58561d45f5ed11f/merged/etc/elasticsearch/jvm.options

/var/lib/docker/overlay2/ee0c306d601667c46a28ccea80eb6c8d695bd77fed6234a5505b88fea5213612/merged/etc/elasticsearch/jvm.options

/var/lib/docker/overlay2/a5c71e8d499a9d15ba5adc5f3e629eecc3f842c5d940674ea9d0c8d511fe68e4/diff/etc/elasticsearch/jvm.options

/var/lib/docker/overlay2/a5c71e8d499a9d15ba5adc5f3e629eecc3f842c5d940674ea9d0c8d511fe68e4/merged/etc/elasticsearch/jvm.options

[root@novasha1 conf]# vim /var/lib/docker/overlay2/04bc8036e827a3a680db855af6192f361d6c3cc3188b7c16c526cce68e2317e8/diff/etc/elasticsearch/jvm.options

Change the heap size in it to 6G

Restart the 3 es nodes on 247
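
The edit itself amounts to replacing the -Xms and -Xmx lines in jvm.options. A minimal sketch of that replacement follows; the sample file content is illustrative rather than copied from the container, and set_heap is a name made up for this example.

```python
import re

HEAP_FLAG = re.compile(r"^(-Xm[sx])\S+$")


def set_heap(jvm_options: str, size: str) -> str:
    """Return jvm.options text with initial and maximum heap set to size."""
    lines = []
    for line in jvm_options.splitlines():
        match = HEAP_FLAG.match(line.strip())
        # Rewrite only uncommented -Xms / -Xmx lines; keep everything else.
        lines.append(f"{match.group(1)}{size}" if match else line)
    return "\n".join(lines) + "\n"


SAMPLE = "# heap settings\n-Xms2g\n-Xmx2g\n-XX:+UseConcMarkSweepGC\n"  # illustrative

print(set_heap(SAMPLE, "6g"))
```

Files under /var/lib/docker/overlay2 belong to image and container layers, so edits there can be lost when a container is recreated. Passing -e ES_JAVA_OPTS="-Xms6g -Xmx6g" to docker run may be a more durable alternative, provided the image in use honors that variable.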

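3. Master-master replication between the Chongqing and Shanghai mysql servers

Previously all mysql servers were in Chongqing: 153 and 154 ran master-master replication, and 162 was a slave of 153

This now changes to master-master replication between 154 and 247 in Shanghai, with 153 and 162 as slaves of 154

① First run stop slave; on 154 (because mycat is currently writing to 154)

Then run the following on 154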

use nova;

flush tables with read lock;

② Once seconds_behind_master shows 0 on both 153 and 162

run stop slave; on both 153 and 162

③ Then, on 154, run docker exec -it mysql5_7_24 bash

Back up the data inside the container: mysqldump -uroot -p nova -e --max_allowed_packet=1048576 --net_buffer_length=16384 > /nova_20191230.sql

Then exit the mysql container and, on 154, run docker cp mysql5_7_24:/nova_20191230.sql /

Then run tar -zcvf nova_20191230.sql.tar.gz nova_20191230.sql

Then scp nova_20191230.sql.tar.gz root@15.32.134.247:/home

This copies the database backup file to the Shanghai server 247

④ Install mysql on the Shanghai server 247

mkdir -p /home/tools/mysql/conf

cd /home/tools/mysql/conf/

vim my.cnf

Write the following content

[mysql]

default-character-set=utf8

[mysqld]

skip-name-resolve

interactive_timeout = 120

wait_timeout = 3600

max_allowed_packet = 64M

log-bin=mysql-bin

server-id=247

character-set-server=utf8

innodb_buffer_pool_size = 1024M

innodb_lock_wait_timeout = 500

transaction_isolation = READ-COMMITTED

log-slave-updates

auto-increment-increment = 2

auto-increment-offset = 2

default-time_zone = '+8:00'

expire_logs_days=30
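
The auto-increment-increment and auto-increment-offset settings keep the two masters from generating the same primary key. The sketch below lists the ids each side would hand out; offset 2 for 247 comes from the configuration above, while offset 1 for 154 is an assumption, since the 154 configuration is not shown here.

```python
def auto_increment_values(offset: int, increment: int, count: int) -> list:
    """First count ids a server generates: offset, offset + increment, ..."""
    return [offset + i * increment for i in range(count)]


ids_154 = auto_increment_values(offset=1, increment=2, count=5)  # assumed offset
ids_247 = auto_increment_values(offset=2, increment=2, count=5)  # from my.cnf above

print("154:", ids_154)
print("247:", ids_247)
print("overlap:", sorted(set(ids_154) & set(ids_247)))
```

Because the increment equals the number of masters and each master has a different offset, the two sequences never collide, even if both sides accept writes at the same time.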

Then run

docker run --name mysql5_7_24 -p 3306:3306 -v /home/tools/mysql/conf:/etc/mysql/conf.d -v /home/tools/mysql/log:/var/log/mysql -v /home/tools/mysql/data:/var/lib/mysql --privileged=true -e MYSQL_ROOT_PASSWORD='Nova!123456' -d mysql:5.7.24

Then, on 247, run docker cp /home/nova_20191230.sql.tar.gz mysql5_7_24:/

Then run docker exec -it mysql5_7_24 bash to enter the container

Then run tar -zxvf nova_20191230.sql.tar.gz

Inside the mysql container on 247, run

mysql -uroot -p

Then

mysql> use nova

mysql> source /nova_20191230.sql

⑤导入完成后开始配置247和154的主主关系

先配置247的

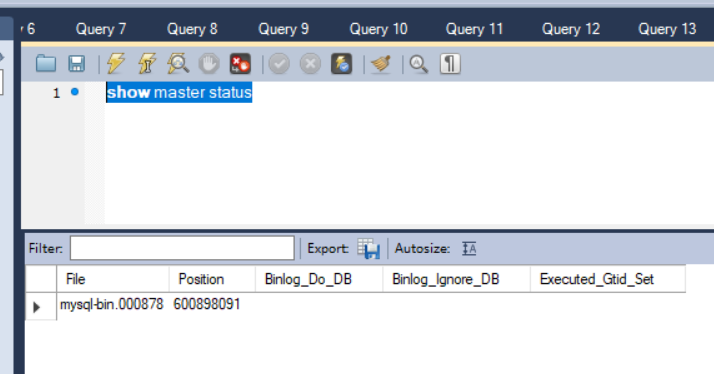

在154 执行 show master status

然后在247执行

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

change master to master_host='15.99.72.154',master_user='backup',master_password='123456',master_log_file='mysql-bin.000878',master_log_pos=600898091;

Then

start slave;

show slave status
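
The log file and position in the change master statement must be the values reported by show master status on the master side. To avoid copying them by hand, the statement can be assembled from those two values, as in the sketch below; change_master_sql is a name made up for this example, and the values are the ones used above.

```python
def change_master_sql(host: str, user: str, password: str,
                      log_file: str, log_pos: int) -> str:
    """Build a change master statement from show master status values."""
    return (
        f"change master to master_host='{host}',master_user='{user}',"
        f"master_password='{password}',master_log_file='{log_file}',"
        f"master_log_pos={int(log_pos)};"
    )


# File and Position as reported by show master status on 154.
statement = change_master_sql(
    host="15.99.72.154",
    user="backup",
    password="123456",
    log_file="mysql-bin.000878",
    log_pos=600898091,
)
print(statement)
```

The position is only valid while writes on the master are blocked, which is why 154 stays under flush tables with read lock until both directions are configured.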

Then configure replication on 154

First run show slave status on 154. It shows that replication from 153 was configured previously, so run

stop slave;

Then run reset slave all;

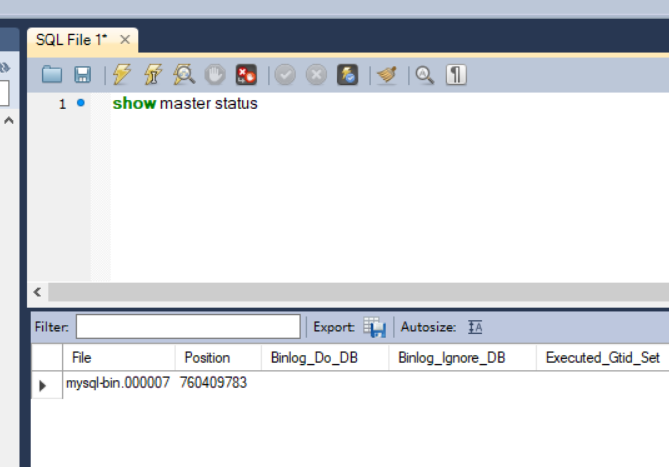

Then, on 247, run show master status

Then create the replication user on 247

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

GRANT REPLICATION SLAVE ON *.* to 'backup'@'%' identified by '123456';

Then run the following on 154

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

change master to master_host='15.32.134.247',master_user='backup',master_password='123456',master_log_file='mysql-bin.000007',master_log_pos=760409783;

Then

start slave;

show slave status

Then unlock 154

use nova;

unlock tables;

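⑥ Now change the replication configuration of 162: it used to be a slave of 153 and must now become a slave of 154

First run stop slave; on 162

Then run reset slave all; to clear the replication relationship previously configured on 162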

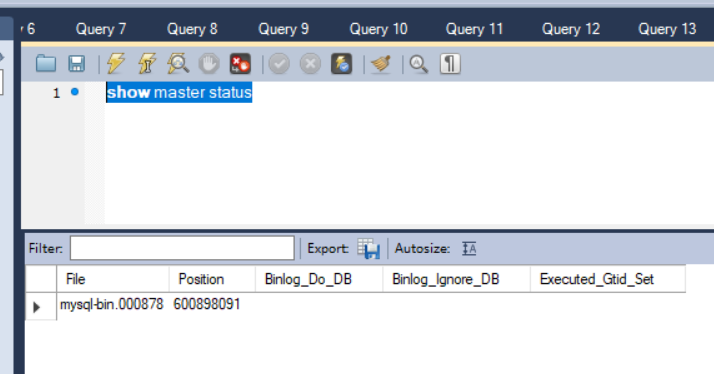

Then, on 154, run show master status

Then run the following on 162

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

change master to master_host='15.99.72.154',master_user='backup',master_password='123456',master_log_file='mysql-bin.000878',master_log_pos=600898091;

Then

start slave;

show slave status
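
After each start slave, the fields that matter in the show slave status output are Slave_IO_Running, Slave_SQL_Running and Seconds_Behind_Master. The sketch below checks them in the vertical (\G) output format; the sample text is illustrative, not captured from these servers, and the function names are made up for this example.

```python
def parse_slave_status(text: str) -> dict:
    """Parse 'show slave status\\G' output into a field -> value dict."""
    status = {}
    for line in text.splitlines():
        if ":" in line:
            key, _, value = line.partition(":")
            status[key.strip()] = value.strip()
    return status


def replication_healthy(status: dict, max_lag: int = 0) -> bool:
    """Both threads running and lag within max_lag seconds."""
    if status.get("Slave_IO_Running") != "Yes":
        return False
    if status.get("Slave_SQL_Running") != "Yes":
        return False
    lag = status.get("Seconds_Behind_Master")
    return lag is not None and lag.isdigit() and int(lag) <= max_lag


SAMPLE = """\
                  Master_Host: 15.99.72.154
             Slave_IO_Running: Yes
            Slave_SQL_Running: Yes
        Seconds_Behind_Master: 0
"""  # illustrative output

print(replication_healthy(parse_slave_status(SAMPLE)))
```

A Seconds_Behind_Master of NULL means replication is not running, so the check treats it as unhealthy.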
