mycat 1.6.6.1安装以及配置docker 安装mysql 5.7.24 双主多从读写分离主主切换

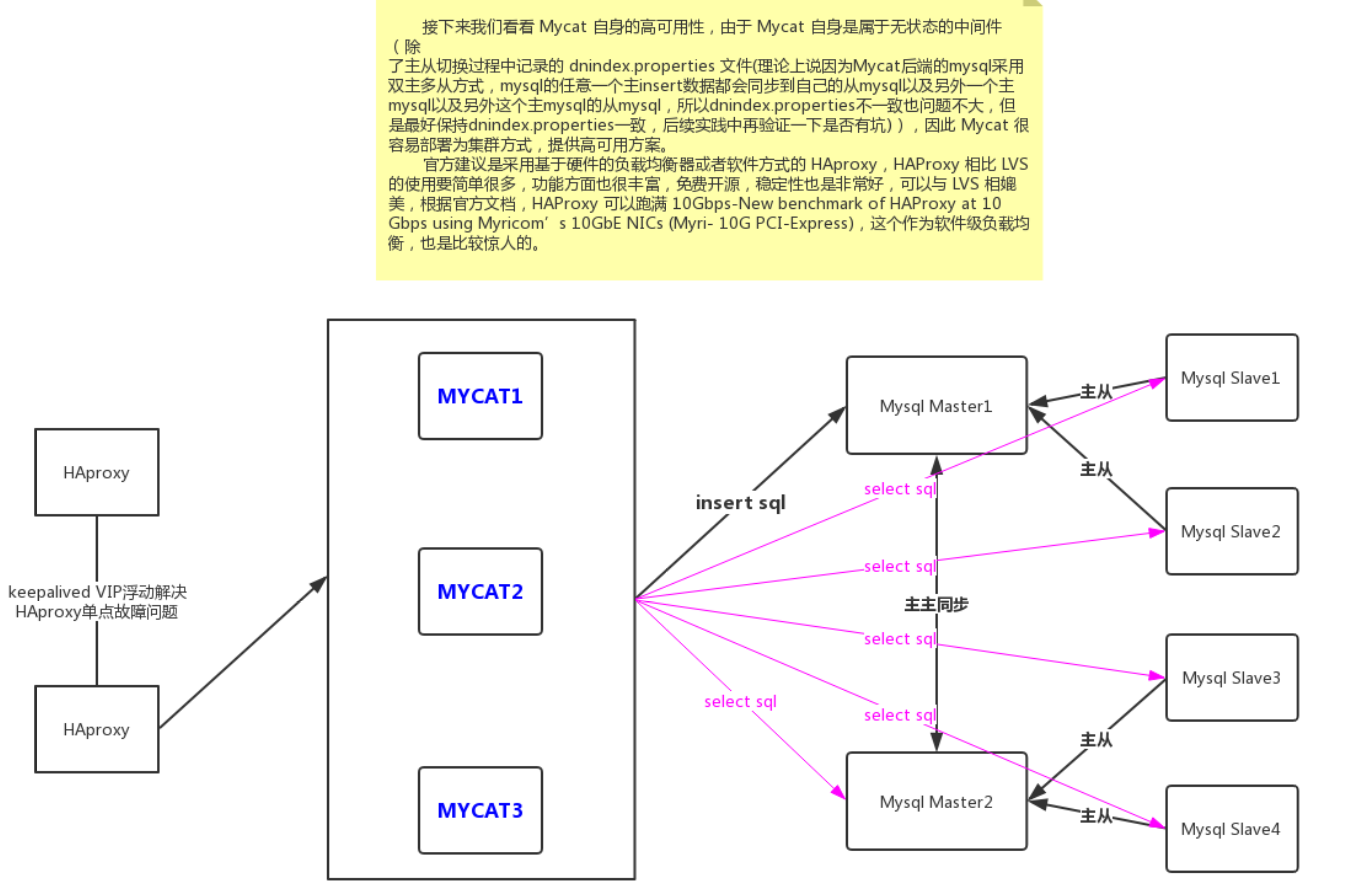

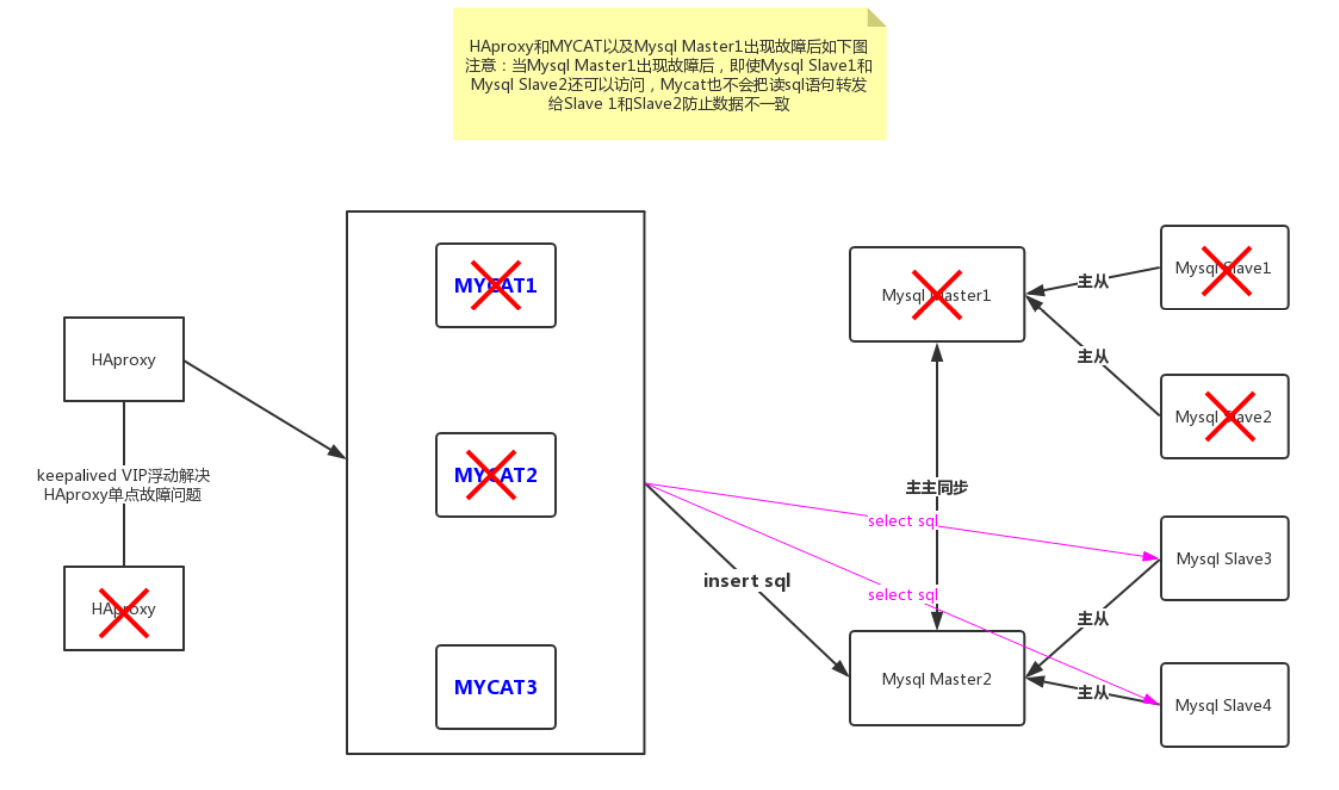

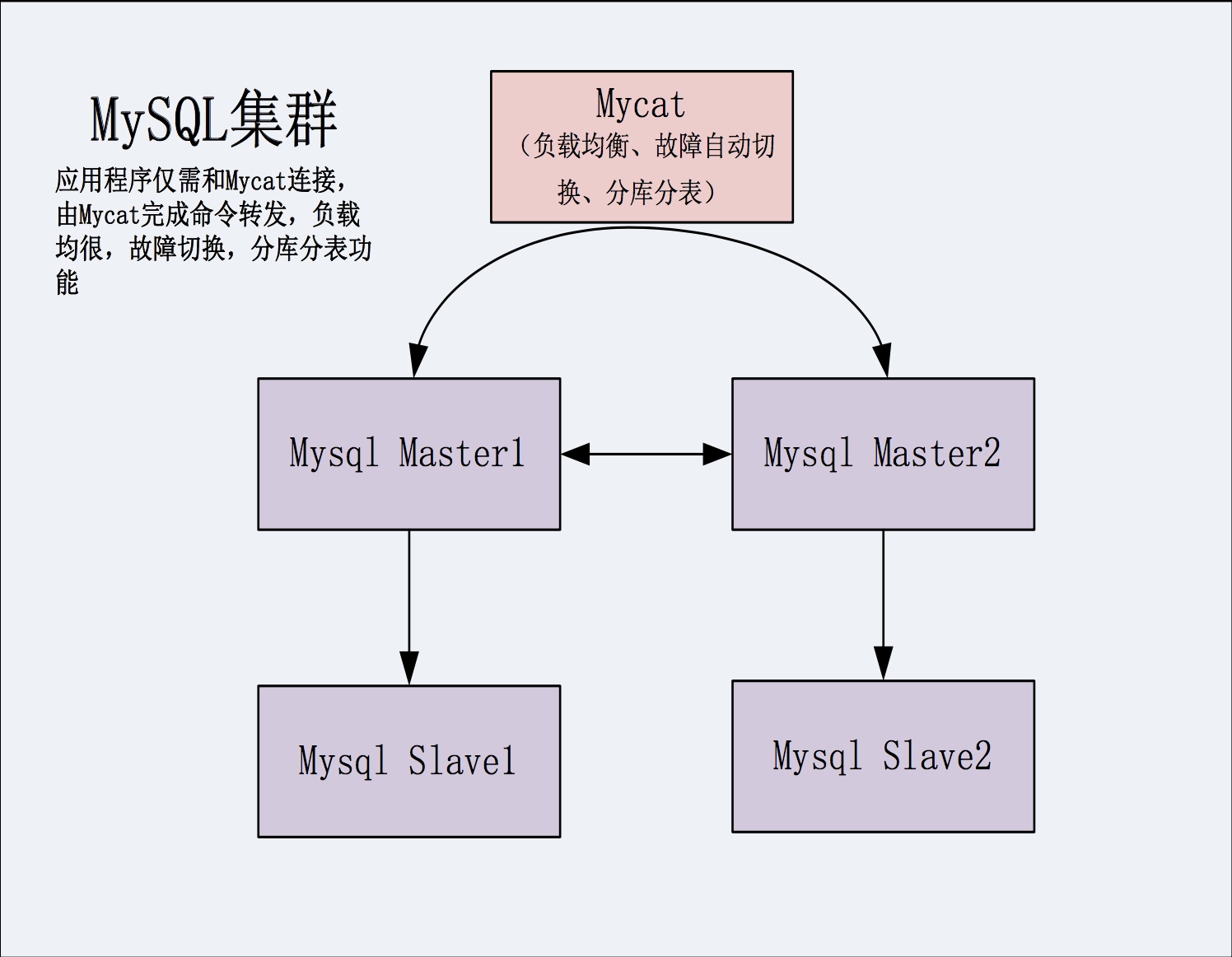

mycat和mysql的高可用参考如下两个图

简介:应用程序仅需要连接HAproxy或者mycat,后端服务器的读写分离由mycat进行控制,后端服务器数据的同步由MySQL主从同步进行控制。

服务器主机规划

| IP | 功能 | 备注 |

|---|---|---|

| 192.168.0.200 | Mysql Master1 | Mysql Master1端口3306 |

| 192.168.0.199 | mycat1 ,Mysql Slave1 | mycat1端口8066 ,Mysql Slave1端口3306 |

| 192.168.0.198 | mycat2 ,Mysql Slave2 | mycat2端口8066 ,Mysql Slave2端口3306 |

| 192.168.0.170 | Mysql Master2 | Mysql Master2端口3306 |

| 192.168.0.169 | Mysql Slave3 | Mysql Slave3端口3306 |

| 192.168.0.168 | Mysql Slave4 | Mysql Slave4端口3306 |

安装MySQL数据库

1)使用docker安装完成mysql5.7.24,我规划的是3台:

192.168.0.200(Master1)

192.168.0.199(Slave1)

192.168.0.198(Slave2)

2)配置三台机器的my.cnf配置文件

我三台机器的配置文件都是/usr/local/mysql/conf/my.cnf

3)设置三台主从服务器配置

vi /usr/local/mysql/conf/my.cnf

[mysql]

default-character-set=utf8

[mysqld]

interactive_timeout = 120

wait_timeout = 120

max_allowed_packet = 32M

log-bin=mysql-bin

server-id=200

character-set-server=utf8

log-slave-updates

auto-increment-increment = 2

auto-increment-offset = 1

default-time_zone = '+8:00'

vi /usr/local/mysql/conf/my.cnf

[mysql]

default-character-set=utf8

[mysqld]

interactive_timeout = 120

wait_timeout = 120

max_allowed_packet = 32M

log-bin=mysql-bin

server-id=199

character-set-server=utf8

default-time_zone = '+8:00'

vi /usr/local/mysql/conf/my.cnf

[mysql]

default-character-set=utf8

[mysqld]

interactive_timeout = 120

wait_timeout = 120

max_allowed_packet = 32M

log-bin=mysql-bin

server-id=198

character-set-server=utf8

default-time_zone = '+8:00'

3)创建主从服务器容器

在200,199,198上运行启动mysql

docker run --name mysql5_7_24 -p 3306:3306 -v /usr/local/mysql/conf:/etc/mysql/conf.d -v /usr/local/mysql/log:/var/log/mysql -v /usr/local/mysql/data:/var/lib/mysql --privileged=true -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7.24

docker run --name mysql5_7_24 -p 3306:3306 -v /usr/local/mysql/conf:/etc/mysql/conf.d -v /usr/local/mysql/log:/var/log/mysql -v /usr/local/mysql/data:/var/lib/mysql --privileged=true -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7.24

docker run --name mysql5_7_24 -p 3306:3306 -v /usr/local/mysql/conf:/etc/mysql/conf.d -v /usr/local/mysql/log:/var/log/mysql -v /usr/local/mysql/data:/var/lib/mysql --privileged=true -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7.24

接下来配置主从

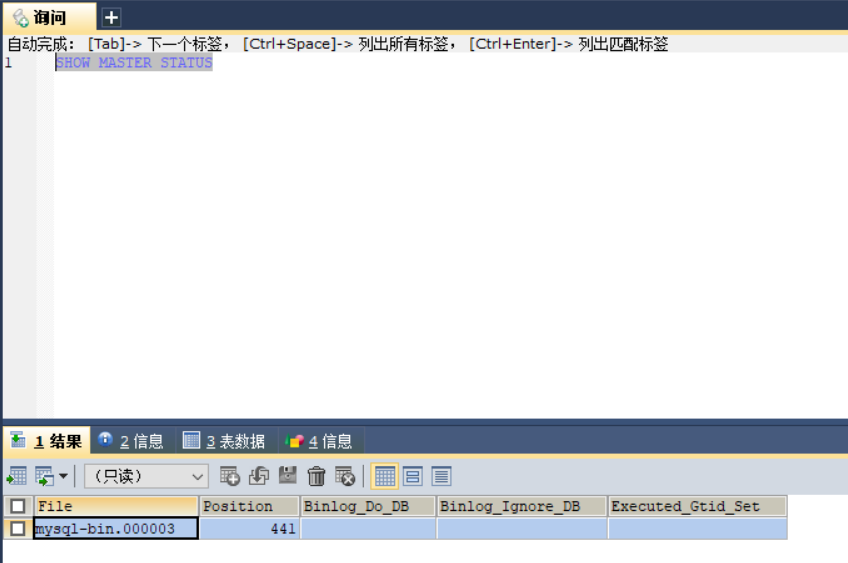

4)登录200主服务器的mysql,查询master的状态

200主库创建用户

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

GRANT REPLICATION SLAVE ON *.* to 'backup'@'%' identified by '123456';

5)登录199和198从服务器的mysql,设置与主服务器相关的配置参数

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

change master to master_host='192.168.0.200',master_user='backup',master_password='123456',master_log_file='mysql-bin.000003',master_log_pos=441;

master_host为docker的地址不能写127.0.0.1

master_user是在主库创建的用户

master_log_pos是主库show master status;查询出的Position

然后在199和198上启动服务

start slave;

查看服务状态

show slave status;

Waiting for master to send event 就是成功了

Connecting to master 多半是连接不通

之后主库的修改都能同步到从库了

接下来安装Mysql Master2和Mysql Slave3和Mysql Slave4使用docker安装完成mysql5.7.24,我规划的是3台:

192.168.0.170(Master1)

192.168.0.169(Slave3)

192.168.0.168(Slave4)

具体安装方法和上面一致,我只贴出170,169,168的mysql 配置文件如下

170的配置文件

vi /usr/local/mysql/conf/my.cnf

[mysql]

default-character-set=utf8

[mysqld]

interactive_timeout = 120

wait_timeout = 120

max_allowed_packet = 32M

log-bin=mysql-bin

server-id=170

character-set-server=utf8

log-slave-updates

auto-increment-increment = 2

auto-increment-offset = 2

default-time_zone = '+8:00'

169的配置文件

vi /usr/local/mysql/conf/my.cnf

[mysql]

default-character-set=utf8

[mysqld]

interactive_timeout = 120

wait_timeout = 120

max_allowed_packet = 32M

log-bin=mysql-bin

server-id=169

character-set-server=utf8

default-time_zone = '+8:00'

168的配置文件

vi /usr/local/mysql/conf/my.cnf

[mysql]

default-character-set=utf8

[mysqld]

interactive_timeout = 120

wait_timeout = 120

max_allowed_packet = 32M

log-bin=mysql-bin

server-id=168

character-set-server=utf8

default-time_zone = '+8:00'

依照200,199,198配置好170,169,168的主从同步后,接下来我们配置

200和170的主主同步:

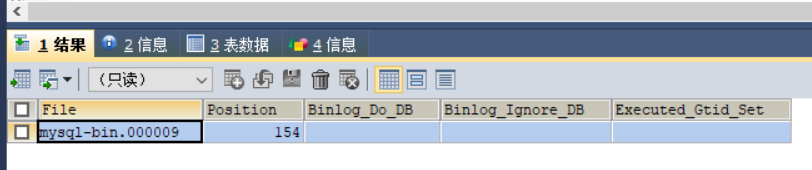

先查询170的 SHOW MASTER STATUS

然后在200这个mysql上执行如下语句

然后执行 start slave;

然后执行show slave status; 查看同步状态

然后再先查询200的 SHOW MASTER STATUS

然后在170这个mysql上执行如下语句

然后执行 start slave;

然后执行show slave status; 查看同步状态,现在170和200配置成功主主同步了,但是我的200,199,198是比170,169,168晚安装2个多月,所以170上面有几万条记录需要通过mysqldump和source手动同步到200上,发现大量数据不一致的时候可以分别在170和200上把主主同步停了,stop slave; 再执行下面步骤具体步骤如下:

这个时候如果发现170和200数据大量不同步,可以采取下面的方式解决

解决步骤如下:

先确保170和200都执行了stop slave;停止主主同步

1.先进入主库170,进行锁表,防止数据写入(也可以用SQLyog执行flush tables with read lock;等等语句,我用windows的cmd连docker安装的mysql5.7.24大概1分钟就自动退出)

C:\Users\1111>mysql -uroot -p -h192.168.0.170

Enter password: ****

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 129

Server version: 5.7.24-log MySQL Community Server (GPL)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

使用命令锁定为只读(必须use指定数据库不然锁定只读不生效):

mysql> use novadb2;

mysql> flush tables with read lock;

注意:该处是锁定为只读状态,语句不区分大小写

2.进行数据备份

把170的数据备份到novadb2_20190212.sql文件

mysqldump -uroot -p -h192.168.0.170 novadb2 -e --max_allowed_packet=1048576 --net_buffer_length=16384 >C:\nova_work_document\novaold_mysqldb_backup\novadb2_20190212.sql

这里注意一点:数据库备份一定要定期进行,可以用shell脚本或者python脚本,都比较方便,确保数据万无一失

3.停止从库200的状态

mysql> stop slave;

4.然后到从库200执行mysql命令,导入数据备份

mysql> use novadb2

mysql> source C:\nova_work_document\novaold_mysqldb_backup\novadb2_20190212.sql

5.查看master 170的状态

6.设置从库200同步,注意该处的同步点,就是170主库show master status信息里的| File| Position两项

SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

change master to master_host='192.168.0.170',master_user='backup',master_password='123456',master_log_file='mysql-bin.000011',master_log_pos=1382;

7.重新开启从200的同步

mysql> start slave;

9.查看200的同步状态

mysql> show slave status\G 查看:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

同理按照上面步骤在170上也配置从200同步,启动start slave;

好了,同步完成啦

最后把主库170解除只读锁定

mysql> use novadb2;

mysql> unlock tables;

mycat安装

cd /root

wget http://dl.mycat.io/1.6.6.1/Mycat-server-1.6.6.1-release-20181031195535-linux.tar.gztar -zxvf Mycat-server-1.6.6.1-release-20181031195535-linux.tar.gz

如果没有配置jdk那么执行 tar -zxvf jdk-8u131-linux-x64.gz

并且在/etc/profile的最后加上

JAVA_HOME=/root/jdk1.8.0_131

JRE_HOME=/root/jdk1.8.0_131/jre

MYCAT_HOME=/root/mycat

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$MYCAT_HOME/bin

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export JAVA_HOME JRE_HOME MYCAT_HOME PATH CLASSPATH

然后执行source /etc/profile使配置生效

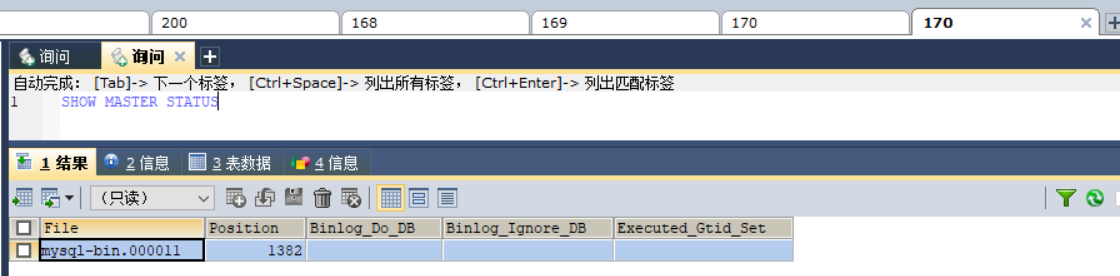

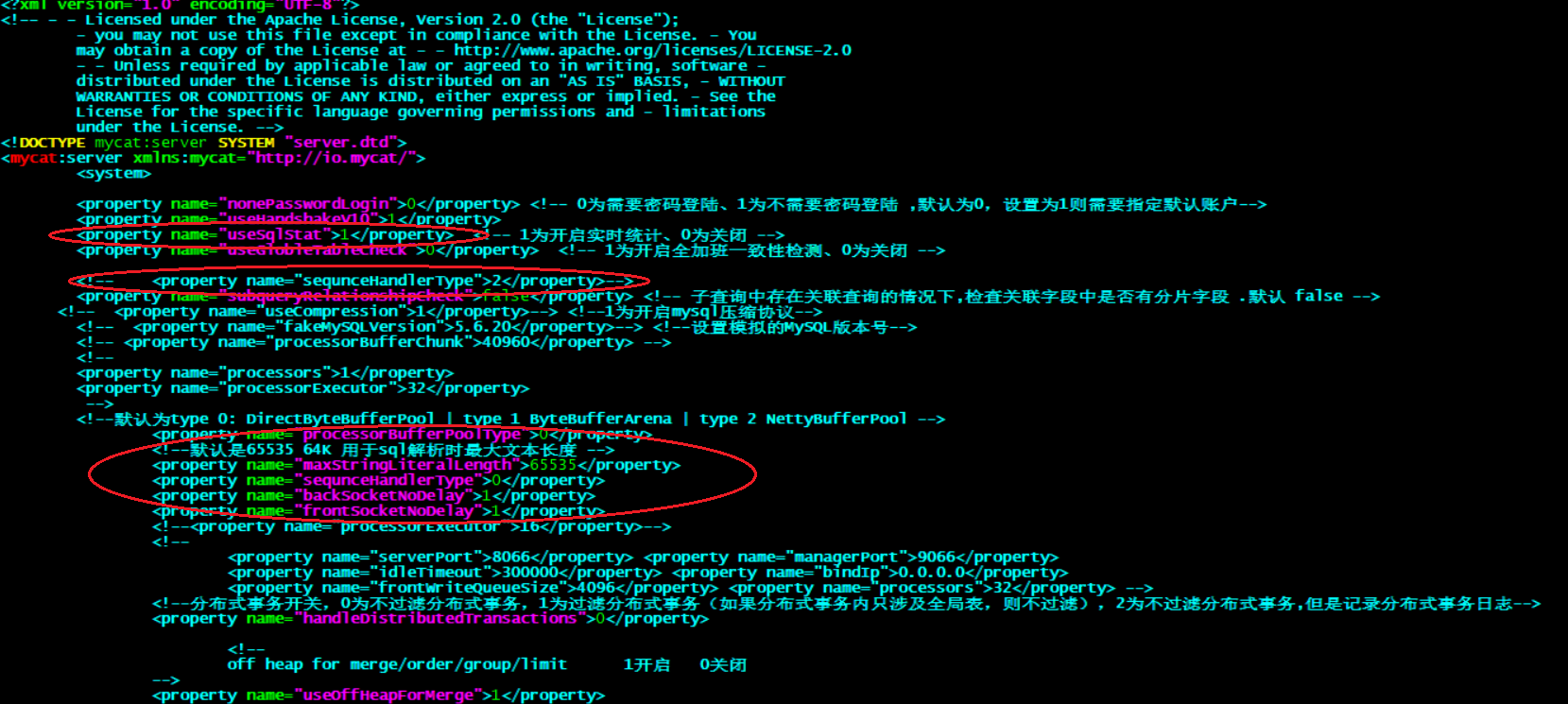

修改server.xml

进入/root/mycat/conf

cp server.xml server2019_bak.xml

vim server.xml

然后对如下截图的几个地方进行修改

接下来修改schema.xml

cp schema.xml schema2019_bak.xml

vim schema.xml

修改为如下内容

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

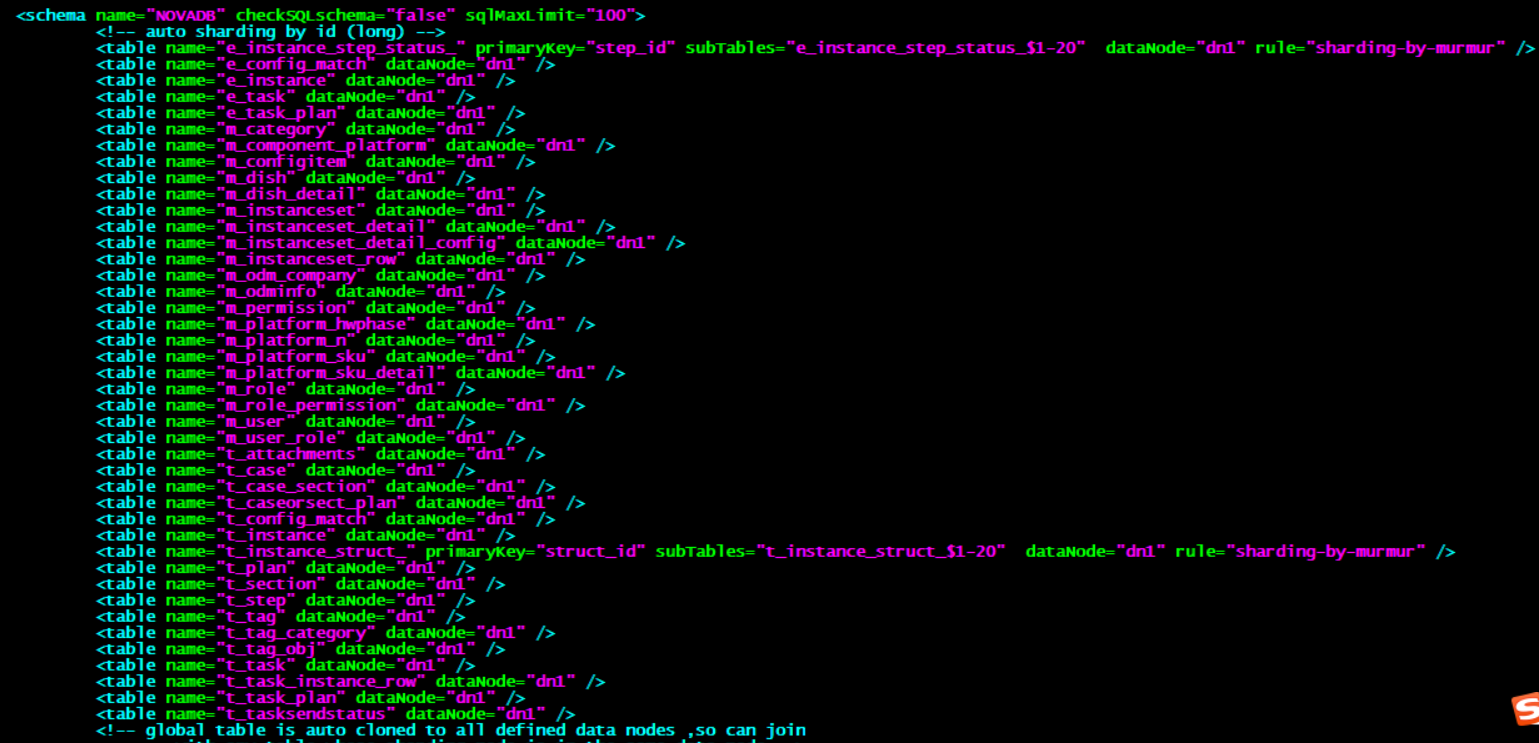

<schema name="NOVADB" checkSQLschema="false" sqlMaxLimit="100">

<!-- auto sharding by id (long) -->

<table name="e_instance_step_status_" primaryKey="step_id" subTables="e_instance_step_status_$1-20" dataNode="dn1" rule="sharding-by-murmur" />

<table name="e_config_match" dataNode="dn1" />

<table name="e_instance" dataNode="dn1" />

<table name="e_task" dataNode="dn1" />

<table name="e_task_plan" dataNode="dn1" />

<table name="m_category" dataNode="dn1" />

<table name="m_component_platform" dataNode="dn1" />

<table name="m_configitem" dataNode="dn1" />

<table name="m_dish" dataNode="dn1" />

<table name="m_dish_detail" dataNode="dn1" />

<table name="m_instanceset" dataNode="dn1" />

<table name="m_instanceset_detail" dataNode="dn1" />

<table name="m_instanceset_detail_config" dataNode="dn1" />

<table name="m_instanceset_row" dataNode="dn1" />

<table name="m_odm_company" dataNode="dn1" />

<table name="m_odminfo" dataNode="dn1" />

<table name="m_permission" dataNode="dn1" />

<table name="m_platform_hwphase" dataNode="dn1" />

<table name="m_platform_n" dataNode="dn1" />

<table name="m_platform_sku" dataNode="dn1" />

<table name="m_platform_sku_detail" dataNode="dn1" />

<table name="m_role" dataNode="dn1" />

<table name="m_role_permission" dataNode="dn1" />

<table name="m_user" dataNode="dn1" />

<table name="m_user_role" dataNode="dn1" />

<table name="t_attachments" dataNode="dn1" />

<table name="t_case" dataNode="dn1" />

<table name="t_case_section" dataNode="dn1" />

<table name="t_caseorsect_plan" dataNode="dn1" />

<table name="t_config_match" dataNode="dn1" />

<table name="t_instance" dataNode="dn1" />

<table name="t_instance_struct_" primaryKey="struct_id" subTables="t_instance_struct_$1-20" dataNode="dn1" rule="sharding-by-murmur" />

<table name="t_plan" dataNode="dn1" />

<table name="t_section" dataNode="dn1" />

<table name="t_step" dataNode="dn1" />

<table name="t_tag" dataNode="dn1" />

<table name="t_tag_category" dataNode="dn1" />

<table name="t_tag_obj" dataNode="dn1" />

<table name="t_task" dataNode="dn1" />

<table name="t_task_instance_row" dataNode="dn1" />

<table name="t_task_plan" dataNode="dn1" />

<table name="t_tasksendstatus" dataNode="dn1" />

<!-- global table is auto cloned to all defined data nodes ,so can join

with any table whose sharding node is in the same data node -->

<!--<table name="company" primaryKey="ID" type="global" dataNode="dn1,dn2,dn3" />

<table name="goods" primaryKey="ID" type="global" dataNode="dn1,dn2" />-->

<!-- random sharding using mod sharind rule -->

<!--<table name="hotnews" primaryKey="ID" autoIncrement="true" dataNode="dn1,dn2,dn3"

rule="mod-long" />-->

<!-- <table name="dual" primaryKey="ID" dataNode="dnx,dnoracle2" type="global"

needAddLimit="false"/> <table name="worker" primaryKey="ID" dataNode="jdbc_dn1,jdbc_dn2,jdbc_dn3"

rule="mod-long" /> -->

<!--<table name="employee" primaryKey="ID" dataNode="dn1,dn2"

rule="sharding-by-intfile" />

<table name="customer" primaryKey="ID" dataNode="dn1,dn2"

rule="sharding-by-intfile">

<childTable name="orders" primaryKey="ID" joinKey="customer_id"

parentKey="id">

<childTable name="order_items" joinKey="order_id"

parentKey="id" />

</childTable>

<childTable name="customer_addr" primaryKey="ID" joinKey="customer_id"

parentKey="id" />

</table>-->

<!-- <table name="oc_call" primaryKey="ID" dataNode="dn1$0-743" rule="latest-month-calldate"

/> -->

</schema>

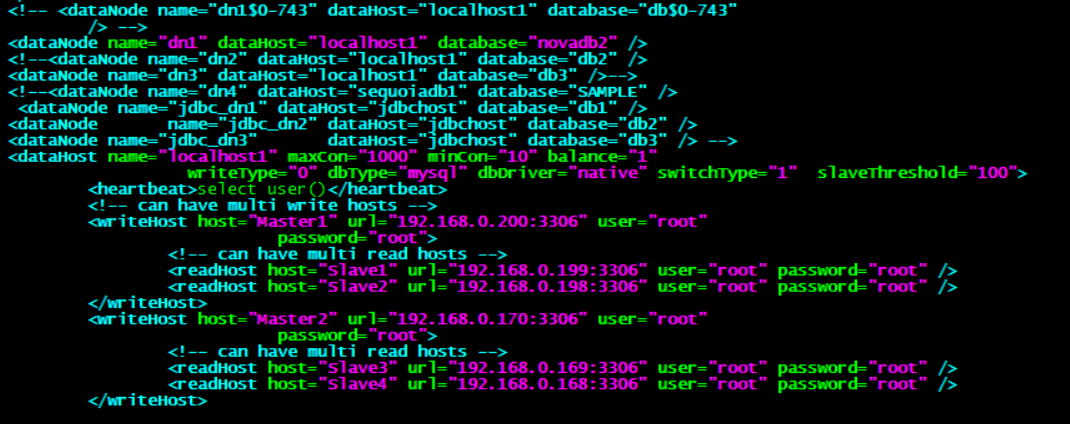

<!-- <dataNode name="dn1$0-743" dataHost="localhost1" database="db$0-743"

/> -->

<dataNode name="dn1" dataHost="localhost1" database="novadb2" />

<!--<dataNode name="dn2" dataHost="localhost1" database="db2" />

<dataNode name="dn3" dataHost="localhost1" database="db3" />-->

<!--<dataNode name="dn4" dataHost="sequoiadb1" database="SAMPLE" />

<dataNode name="jdbc_dn1" dataHost="jdbchost" database="db1" />

<dataNode name="jdbc_dn2" dataHost="jdbchost" database="db2" />

<dataNode name="jdbc_dn3" dataHost="jdbchost" database="db3" /> -->

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="1"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="Master1" url="192.168.0.200:3306" user="root"

password="root">

<!-- can have multi read hosts -->

<readHost host="Slave1" url="192.168.0.199:3306" user="root" password="root" />

<readHost host="Slave2" url="192.168.0.198:3306" user="root" password="root" />

</writeHost>

<writeHost host="Master2" url="192.168.0.170:3306" user="root"

password="root">

<!-- can have multi read hosts -->

<readHost host="Slave3" url="192.168.0.169:3306" user="root" password="root" />

<readHost host="Slave4" url="192.168.0.168:3306" user="root" password="root" />

</writeHost>

<!--<writeHost host="hostS1" url="localhost:3316" user="root"

password="123456" />-->

<!-- <writeHost host="hostM2" url="localhost:3316" user="root" password="123456"/> -->

</dataHost>

<!--

<dataHost name="sequoiadb1" maxCon="1000" minCon="1" balance="0" dbType="sequoiadb" dbDriver="jdbc">

<heartbeat> </heartbeat>

<writeHost host="hostM1" url="sequoiadb://1426587161.dbaas.sequoialab.net:11920/SAMPLE" user="jifeng" password="jifeng"></writeHost>

</dataHost>

<dataHost name="oracle1" maxCon="1000" minCon="1" balance="0" writeType="0" dbType="oracle" dbDriver="jdbc"> <heartbeat>select 1 from dual</heartbeat>

<connectionInitSql>alter session set nls_date_format='yyyy-mm-dd hh24:mi:ss'</connectionInitSql>

<writeHost host="hostM1" url="jdbc:oracle:thin:@127.0.0.1:1521:nange" user="base" password="123456" > </writeHost> </dataHost>

<dataHost name="jdbchost" maxCon="1000" minCon="1" balance="0" writeType="0" dbType="mongodb" dbDriver="jdbc">

<heartbeat>select user()</heartbeat>

<writeHost host="hostM" url="mongodb://192.168.0.99/test" user="admin" password="123456" ></writeHost> </dataHost>

<dataHost name="sparksql" maxCon="1000" minCon="1" balance="0" dbType="spark" dbDriver="jdbc">

<heartbeat> </heartbeat>

<writeHost host="hostM1" url="jdbc:hive2://feng01:10000" user="jifeng" password="jifeng"></writeHost> </dataHost> -->

<!-- <dataHost name="jdbchost" maxCon="1000" minCon="10" balance="0" dbType="mysql"

dbDriver="jdbc"> <heartbeat>select user()</heartbeat> <writeHost host="hostM1"

url="jdbc:mysql://localhost:3306" user="root" password="123456"> </writeHost>

</dataHost> -->

</mycat:schema>

其中关键部分如下截图

balance="1": 全部的readHost与stand by writeHost参与select语句的负载均衡。writeType="0": 所有写操作发送到配置的第一个writeHost,第一个挂了切到还生存的第二个 writeHost,重新启动后以切换后的为准,切换记录在配置文件中:dnindex.properties 。switchType="1": 1 默认值,自动切换。

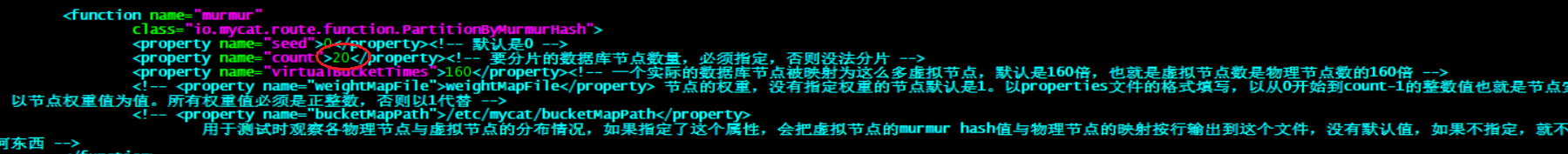

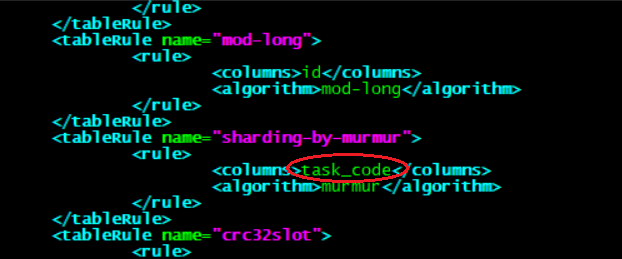

接下来修改rule.xml文件配置里面的一致性hash算法

vim rule.xml

主要需要根据你的分表修改如下截图的内容

这个图是修改需要分表的字段

![]()

这个图是修改为一共多少个分表

启动mycat服务

mycat start

[root@localhost conf]# ss -lntup |egrep '(8066|9066)'

tcp LISTEN 0 100 :::9066 :::* users:(("java",pid=12589,fd=94))

tcp LISTEN 0 100 :::8066 :::* users:(("java",pid=12589,fd=98))

验证mycat服务是否正常

第一步:使用mysql的客户端连接mycat

C:\Windows\system32>mysql -uroot -p123456 -h192.168.0.199 -P8066

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 26

Server version: 5.6.29-mycat-1.6.6.1-release-20181031195535 MyCat Server (OpenCloudDB)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+----------+

| DATABASE |

+----------+

| NOVADB |

+----------+

1 row in set (0.00 sec)

mysql> use NOVADB;

Database changed

mysql> select * from t_tag;

+----+-------------+-------------+---------------+---------------------+

| id | tag_name | category_id | category_name | createtime |

+----+-------------+-------------+---------------+---------------------+

| 1 | aaa | 1 | bb | 2019-01-17 16:38:30 |

| 2 | bbb | 1 | bb | 2019-01-17 16:38:48 |

| 3 | adasdqw | 1 | bb | 2019-01-18 11:23:22 |

| 5 | ww | 27 | qq | 2019-01-21 18:14:24 |

| 6 | dasdsad | 28 | dsadsa | 2019-01-23 13:57:47 |

| 7 | gfdgf | 29 | gffd | 2019-01-23 14:01:51 |

| 8 | N | 30 | automation | 2019-01-23 15:15:45 |

| 9 | ccccccc | 31 | wang | 2019-01-23 16:04:56 |

| 11 | ww | 35 | BB | 2019-01-23 17:07:30 |

| 12 | dasdsadsa | 36 | dasdasd | 2019-01-24 18:43:16 |

| 13 | 22222222 | 37 | 1111111111 | 2019-01-24 18:43:16 |

| 14 | 44444444444 | 38 | 3333333333 | 2019-01-24 18:43:16 |

+----+-------------+-------------+---------------+---------------------+

12 rows in set (0.00 sec)

第二步:也可以通过连接9066这个mycat的监控管理端口查看Mycat的读写情况

C:\Windows\system32>mysql -uroot -p123456 -h192.168.0.199 -P9066

mysql> show @@datasource;

+----------+---------+-------+---------------+------+------+--------+------+------+---------+-----------+------------+

| DATANODE | NAME | TYPE | HOST | PORT | W/R | ACTIVE | IDLE | SIZE | EXECUTE | READ_LOAD | WRITE_LOAD |

+----------+---------+-------+---------------+------+------+--------+------+------+---------+-----------+------------+

| dn1 | Master1 | mysql | 192.168.0.200 | 3306 | W | 0 | 10 | 1000 | 106 | 0 | 19 |

| dn1 | Master2 | mysql | 192.168.0.170 | 3306 | W | 0 | 1 | 1000 | 93 | 15 | 0 |

| dn1 | Slave1 | mysql | 192.168.0.199 | 3306 | R | 0 | 6 | 1000 | 100 | 16 | 0 |

| dn1 | Slave2 | mysql | 192.168.0.198 | 3306 | R | 0 | 7 | 1000 | 102 | 18 | 0 |

| dn1 | Slave3 | mysql | 192.168.0.169 | 3306 | R | 0 | 7 | 1000 | 103 | 19 | 0 |

| dn1 | Slave4 | mysql | 192.168.0.168 | 3306 | R | 0 | 7 | 1000 | 100 | 16 | 0 |

+----------+---------+-------+---------------+------+------+--------+------+------+---------+-----------+------------+

6 rows in set (0.00 sec)

参考

故障汇总

第一次配置的时候mysql的Master1端和Master2没有配置log-slave-updates导致,slave1和slave2上没有Master1端的数据。

解释:

从库开启log-bin参数,如果直接往Master1写数据,是可以记录log-bin日志的,但是Master1通过I0线程读取主主同步Master2二进制日志文件,然后通过SQL线程写入的数据,是不会记录binlog日志的。也就是说主主从库Master1从主库Master2上复制的数据,是不写入主主从库Master1的binlog日志的。所以主主从库Master1做为其他从库的主库时需要在配置文件中添加log-slave-updates参数。Master1和Master2都一样

解决办法:

[mysqld]

log-slave-updates

浙公网安备 33010602011771号

浙公网安备 33010602011771号