Python Web Scraping in Practice

1. Scraping Videos from Qiushibaike (糗事百科)

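First find the page and work out where the videos come from. A regular expression pulls out the video links, then BeautifulSoup (the "beautiful soup" library) gets each video's title, which is used as the file name when downloading.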

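```python
import os
import re

import requests
from bs4 import BeautifulSoup

url = "https://www.qiushibaike.com/video/"
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36"}

resp = requests.get(url, headers=headers)
text = resp.content.decode('utf-8')
soup = BeautifulSoup(text, 'html.parser')

# The src values have no scheme, so prepend "https:";
# the non-greedy (.*?) stops at the first closing quote
links = ["https:" + src for src in re.findall(r'<source src="(.*?)" type=\'video/mp4\' />', text)]

# Each post's title is the <span> inside <div class="content">
titles = [div.find('span').text.strip() for div in soup.find_all('div', class_="content")]

os.makedirs("video", exist_ok=True)  # create the output folder if it is missing
# Pair titles and links by position (assumes both appear in the same order)
for count, (title, link) in enumerate(zip(titles, links), start=1):
    video = requests.get(link, headers=headers)
    # Replace characters that Windows does not allow in file names
    safe_title = re.sub(r'[\\/:*?"<>|]', '_', title)
    with open(os.path.join("video", safe_title + ".mp4"), "wb") as file:
        file.write(video.content)
    print("Downloaded video", count)
print("All videos downloaded")
```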
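The regex for the video links should use a non-greedy group `(.*?)` rather than `(.*)`. When minified HTML puts several `<source>` tags on one line, a greedy group runs on to the last match and returns one long, wrong string. A minimal offline sketch, assuming a made-up HTML snippet in place of the live page:

```python
import re

# Hypothetical markup: two <source> tags on one line, as minified pages often have
html = ("<source src=\"//v.example.com/a.mp4\" type='video/mp4' /> "
        "<source src=\"//v.example.com/b.mp4\" type='video/mp4' />")

greedy = re.findall(r'<source src="(.*)" type=\'video/mp4\' />', html)
lazy = re.findall(r'<source src="(.*?)" type=\'video/mp4\' />', html)

print(len(greedy))  # → 1 (the greedy group spans both tags)
print(lazy)         # → ['//v.example.com/a.mp4', '//v.example.com/b.mp4']

# Prepend the scheme, as the scraper does
links = ["https:" + src for src in lazy]
```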

2. Scraping Lianjia Second-Hand Home Sales

This one mainly uses BeautifulSoup for parsing and a MySQL database for storage.

```python
import requests
import mysql.connector
from bs4 import BeautifulSoup


class LianJia:
    def __init__(self):
        # Local MySQL connection from the original post; change to your own settings
        self.mydb = mysql.connector.connect(host='localhost', user='root', password='fengge666', database='hotel')
        self.mycursor = self.mydb.cursor()
        # Beijing closed-deal listing pages; {0} is the page number
        self.url = "https://bj.lianjia.com/chengjiao/pg{0}/"
        self.headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36'}

    def send_request(self, url):
        resp = requests.get(url, headers=self.headers)
        # A Response is truthy only for status codes below 400
        return resp if resp else None

    def parse_content(self, resp):
        lst = []
        bs = BeautifulSoup(resp.text, 'html.parser')
        ul = bs.find('ul', class_='listContent')
        for item in ul.find_all('li'):
            title = item.find('div', class_='title').text
            houseInfo = item.find('div', class_='houseInfo').text
            data = item.find('div', class_='dealDate').text
            money = item.find('div', class_='totalPrice').text
            flood = item.find('div', class_='positionInfo').text
            price = item.find('div', class_='unitPrice').text
            # Two inner spans, stored as current_money and current_data
            span_lst = item.find('span', class_='dealCycleTxt').find_all('span')
            agent = item.find('a', class_='agent_name').text
            lst.append((title, houseInfo, data, money, flood, price,
                        span_lst[0].text, span_lst[1].text, agent))
        # Store this page's rows in the database
        self.write_mysql(lst)

    def write_mysql(self, lst):
        sql = ("insert into house (title,houseInfo,data,money,flood,price,current_money,current_data,agent) "
               "values (%s,%s,%s,%s,%s,%s,%s,%s,%s)")
        self.mycursor.executemany(sql, lst)
        self.mydb.commit()

    def start(self):
        # Crawl pages 1-10, then close the connection
        for i in range(1, 11):
            resp = self.send_request(self.url.format(i))
            if resp:
                self.parse_content(resp)
        self.mydb.close()


if __name__ == '__main__':
    lianjia = LianJia()
    lianjia.start()
```
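`write_mysql` sends a whole page of rows in one `executemany` call with placeholders, so the driver does the quoting instead of the rows being pasted into the SQL string. The same batch-insert pattern can be tried without a MySQL server using Python's built-in `sqlite3`; note that `sqlite3` uses `?` placeholders where `mysql.connector` uses `%s`. The three-column table and its values below are a hypothetical, trimmed stand-in for the real `house` table:

```python
import sqlite3

# In-memory database standing in for MySQL (hypothetical, trimmed "house" table)
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE house (title TEXT, money TEXT, agent TEXT)")

# One page of parsed rows: a list of tuples, one per listing (made-up values)
rows = [
    ("Listing A", "500", "Agent 1"),
    ("Listing B", "620", "Agent 2"),
]

# executemany runs the parameterized INSERT once per tuple
cur.executemany("INSERT INTO house (title, money, agent) VALUES (?, ?, ?)", rows)
conn.commit()

count = cur.execute("SELECT COUNT(*) FROM house").fetchone()[0]
print(count)  # → 2
```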

3. Scraping Job Listings from a Recruitment Site (jobui.com)

This one mainly uses BeautifulSoup to analyze the pages, extract the relevant fields and save them to an .xlsx file, crawling the listings page by page.

```python
import time

import openpyxl
import requests
from bs4 import BeautifulSoup

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36"}
lst = []  # collected rows: [job title, first desc span, second desc span, link]


def send_request(company_id, page):
    url = 'https://www.jobui.com/company/{0}/jobs/p{1}'.format(company_id, page)
    resp = requests.get(url, headers=headers)
    return resp.text


def parse_html(html):
    bs = BeautifulSoup(html, 'html.parser')
    for item in bs.find_all('div', class_='c-job-list'):
        name = item.find('h3').text
        span_tag = item.find('div', class_='job-desc').find_all('span')
        # Job links are site-relative, so prefix the domain
        url = 'https://www.jobui.com' + item.find('a', class_='job-name')['href']
        lst.append([name, span_tag[0].text, span_tag[1].text, url])


def save(lst):
    wk = openpyxl.Workbook()
    sheet = wk.active
    for item in lst:
        sheet.append(item)
    wk.save('招聘信息.xlsx')


def start(company_id, pages):
    for page in range(1, pages + 1):
        parse_html(send_request(company_id, page))
        time.sleep(2)  # pause between pages to avoid hammering the site
    save(lst)


if __name__ == '__main__':
    company_id = '10375749'  # company ID taken from the jobui.com URL
    pages = 3
    start(company_id, pages)
```
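`start` is a simple pagination loop: fetch pages 1 to N, parse each one, and pause between requests. The sketch below pulls that loop into a reusable function that returns its rows instead of appending to a module-level `lst`, so calling it twice does not mix results. `crawl_pages`, `fetch` and `parse` are hypothetical names, and the fake fetcher stands in for `send_request` so the loop can be tried offline:

```python
import time
from typing import Callable, List


def crawl_pages(fetch: Callable[[int], str],
                parse: Callable[[str], List[list]],
                pages: int,
                delay: float = 2.0) -> List[list]:
    """Fetch pages 1..pages, parse each one, and collect all rows."""
    rows: List[list] = []
    for page in range(1, pages + 1):
        rows.extend(parse(fetch(page)))
        if page < pages:
            time.sleep(delay)  # wait before requesting the next page
    return rows


# Offline stand-ins for send_request / parse_html
def fake_fetch(page: int) -> str:
    return f"job-{page}a,job-{page}b"


def fake_parse(html: str) -> List[list]:
    return [[name] for name in html.split(",")]


result = crawl_pages(fake_fetch, fake_parse, pages=3, delay=0)
print(result)
```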

4. Scraping the QQ Music Charts

The chart data is delivered as JSON, so we analyze the JSON structure, pull out the fields we want, and save them to the database.

```python
import requests
import mysql.connector


def get_request():
    # Session-specific values in this URL (g_tk, sign, loginUin) may expire and need refreshing
    url = "https://u.y.qq.com/cgi-bin/musics.fcg?-=getUCGI9057052357882678&g_tk=130572444&sign=zzan8er9xsqr1dg0y3e7df30d14b15a2b335cedcd0d6c6c883f&loginUin=1751520702&hostUin=0&format=json&inCharset=utf8&outCharset=utf-8&notice=0&platform=yqq.json&needNewCode=0&data=%7B%22detail%22%3A%7B%22module%22%3A%22musicToplist.ToplistInfoServer%22%2C%22method%22%3A%22GetDetail%22%2C%22param%22%3A%7B%22topId%22%3A4%2C%22offset%22%3A0%2C%22num%22%3A20%2C%22period%22%3A%222020-07-23%22%7D%7D%2C%22comm%22%3A%7B%22ct%22%3A24%2C%22cv%22%3A0%7D%7D"
    resp = requests.get(url)
    return resp.json()


def parse_data():
    data_json = get_request()
    # The song list is nested under detail -> data -> data -> song
    lst_song = data_json['detail']['data']['data']['song']
    return [(item['rank'], item['title'], item['singerName']) for item in lst_song]


def save():
    mydb = mysql.connector.connect(host='localhost', user='root', password='fengge666', database='python_database')
    mycursor = mydb.cursor()
    # No column list, so song_table must have exactly three columns in this order
    sql = 'insert into song_table values(%s,%s,%s)'
    mycursor.executemany(sql, parse_data())
    mydb.commit()
    print(mycursor.rowcount, 'rows inserted')
    mydb.close()


if __name__ == '__main__':
    save()
```
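Most of that long URL is the `data` query parameter, which is URL-encoded JSON describing the request: the chart `topId`, the `offset`, how many songs (`num`) and which `period`. Decoding it with the standard library shows which knobs exist. This sketch only inspects and re-encodes the parameter string copied from the URL; whether the server accepts a modified request without a fresh `sign` is not something the post tests:

```python
import json
from urllib.parse import quote, unquote

# The data= parameter copied from the request URL
data_param = ("%7B%22detail%22%3A%7B%22module%22%3A%22musicToplist.ToplistInfoServer%22%2C"
              "%22method%22%3A%22GetDetail%22%2C%22param%22%3A%7B%22topId%22%3A4%2C"
              "%22offset%22%3A0%2C%22num%22%3A20%2C%22period%22%3A%222020-07-23%22%7D%7D%2C"
              "%22comm%22%3A%7B%22ct%22%3A24%2C%22cv%22%3A0%7D%7D")

# URL-decode, then parse the JSON payload
payload = json.loads(unquote(data_param))
print(payload["detail"]["param"])
# → {'topId': 4, 'offset': 0, 'num': 20, 'period': '2020-07-23'}

# Change a field and re-encode it as a compact, URL-safe string
payload["detail"]["param"]["num"] = 50
new_param = quote(json.dumps(payload, separators=(",", ":")))
```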

5. Querying 12306 Train Information

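Here, too, the work is parsing JSON. The only tricky part is mapping station names to their short codes: find the file that lists every station with its code, parse it, and build a dictionary from it.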

Then extract the fields we need from the ticket data and save them to an .xlsx file.

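The finished program works like this: enter the departure station, the arrival station and the travel date, and it finds the matching trains and their details for you.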
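The station lookup relies on `station_name.js`, where (as the regex in the code assumes) each station appears as a Chinese name followed by `|` and an uppercase code. A minimal sketch that builds both directions of the mapping in one pass over a single download. The sample string is a hand-written excerpt in that assumed layout, not real downloaded data; the HDP and SJP codes are the ones saved in the cookie of the original request:

```python
import re

# Hypothetical excerpt in the assumed station_name.js layout
js_text = "var station_names ='@hda|邯郸|HDP|handan|hd|0@sjz|石家庄|SJP|shijiazhuang|sjz|1';"


def build_station_maps(text):
    """Return (name -> code, code -> name) dictionaries from station_name.js text."""
    pairs = re.findall(r'([\u4e00-\u9fa5]+)\|([A-Z]+)', text)
    name_to_code = dict(pairs)
    code_to_name = {code: name for name, code in pairs}
    return name_to_code, code_to_name


name_to_code, code_to_name = build_station_maps(js_text)
print(name_to_code["邯郸"])  # → HDP
print(code_to_name["SJP"])   # → 石家庄
```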

```python
import re

import openpyxl
import requests

UA = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36'
STATION_URL = "https://kyfw.12306.cn/otn/resources/js/framework/station_name.js?station_version=1.9151"


def get_stations():
    """Download station_name.js once and return (name -> code, code -> name)."""
    resp = requests.get(STATION_URL, headers={'User-Agent': UA})
    resp.encoding = 'utf-8'
    stations = re.findall(r'([\u4e00-\u9fa5]+)\|([A-Z]+)', resp.text)
    name_to_code = dict(stations)
    code_to_name = {code: name for name, code in stations}
    return name_to_code, code_to_name


def send_request(begin, end, date, name_to_code):
    url = ('https://kyfw.12306.cn/otn/leftTicket/query?leftTicketDTO.train_date={0}'
           '&leftTicketDTO.from_station={1}&leftTicketDTO.to_station={2}&purpose_codes=ADULT'
           ).format(date, name_to_code[begin], name_to_code[end])
    headers = {'User-Agent': UA,
               # The original post pasted a full Cookie header from a browser session here;
               # such cookies expire, so copy a current one from your own browser
               'Cookie': 'YOUR_COOKIE_HERE'}
    resp = requests.get(url, headers=headers)
    resp.encoding = 'utf-8'
    return resp


def pare_json(resp, city):
    data_lst = resp.json()['data']['result']
    lst = [['Train', 'From', 'To', 'First class', 'Second class', 'Soft sleeper',
            'Hard sleeper', 'Hard seat', 'Date', 'Departure', 'Arrival']]
    for item in data_lst:
        d = item.split('|')
        # d[3] train number, d[6]/d[7] from/to station codes, d[8]/d[9] departure/arrival time,
        # d[13] travel date, d[23] soft sleeper, d[28] hard sleeper, d[29] hard seat,
        # d[30] second class, d[31] first class
        lst.append([d[3], city.get(d[6], d[6]), city.get(d[7], d[7]), d[31], d[30],
                    d[23], d[28], d[29], d[13], d[8], d[9]])
    return lst


def start(begin, end, date):
    name_to_code, code_to_name = get_stations()
    lst = pare_json(send_request(begin, end, date, name_to_code), code_to_name)
    wk = openpyxl.Workbook()
    sheet = wk.active
    for item in lst:
        sheet.append(item)
    wk.save('车票查询.xlsx')


if __name__ == '__main__':
    begin = input('From station: ')
    end = input('To station: ')
    date = input('Date (YYYY-MM-DD): ')
    start(begin, end, date)
```
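Each entry in `['data']['result']` is one long `|`-separated string, and `pare_json` picks fields by position. Naming those positions makes the mapping easier to check, and easier to update if 12306 changes its layout. The indices below come from the comments in the code; `FIELDS` and `parse_record` are hypothetical names, and the sample record is synthetic, built only to exercise the function:

```python
# Field positions taken from the comments in pare_json (12306 may change them)
FIELDS = {
    "train": 3, "from": 6, "to": 7, "depart": 8, "arrive": 9, "date": 13,
    "soft_sleeper": 23, "hard_sleeper": 28, "hard_seat": 29,
    "second_class": 30, "first_class": 31,
}


def parse_record(record: str, code_to_name: dict) -> dict:
    """Split one result string and return the named fields."""
    d = record.split("|")
    row = {key: d[idx] for key, idx in FIELDS.items()}
    # Station fields are codes; translate them to names when known
    row["from"] = code_to_name.get(row["from"], row["from"])
    row["to"] = code_to_name.get(row["to"], row["to"])
    return row


# Synthetic record: 32 empty fields, filled in only where FIELDS points
parts = [""] * 32
parts[3], parts[6], parts[7] = "K123", "HDP", "SJP"
parts[8], parts[9], parts[13] = "08:00", "09:30", "20200719"
parts[30] = "有"
row = parse_record("|".join(parts), {"HDP": "邯郸", "SJP": "石家庄"})
print(row["train"], row["from"], row["to"], row["second_class"])
```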

6. Scraping a Novel from Biquge (笔趣阁)

With some time on my hands, I remembered a fantasy novel I read years ago, so why not grab the whole thing!

```python
import os
import re

import requests
from bs4 import BeautifulSoup

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36'}
url = 'https://www.xsbiquge.com/12_12735/'  # table-of-contents page
ht = 'https://www.xsbiquge.com'             # site root, prefixed to each chapter href
folder = '校园纯情霸主小说'

r = requests.get(url, headers=headers)
soup = BeautifulSoup(r.content.decode('utf-8'), 'html.parser')
os.makedirs(folder, exist_ok=True)

toc = soup.find('div', id='list')
# The <dt> holds the book title; the last two characters are dropped
Title = toc.dt.text[:-2].strip()
print(Title)

for item in toc.find_all('dd'):
    link = ht + item.a['href']
    name = item.a.text
    page = requests.get(link, headers=headers)
    chapter = BeautifulSoup(page.content.decode('utf-8'), 'html.parser')
    # Replace spaces with line breaks so paragraphs start on new lines
    text = chapter.find('div', id='content').text.replace(' ', '\n')
    # Strip characters illegal in file names; 'w' avoids duplicated text on reruns
    safe_name = re.sub(r'[\\/:*?"<>|]', '_', name)
    with open(os.path.join(folder, safe_name + '.txt'), 'w', encoding='utf-8') as f:
        f.write(text)
    print("Finished scraping " + name)
```

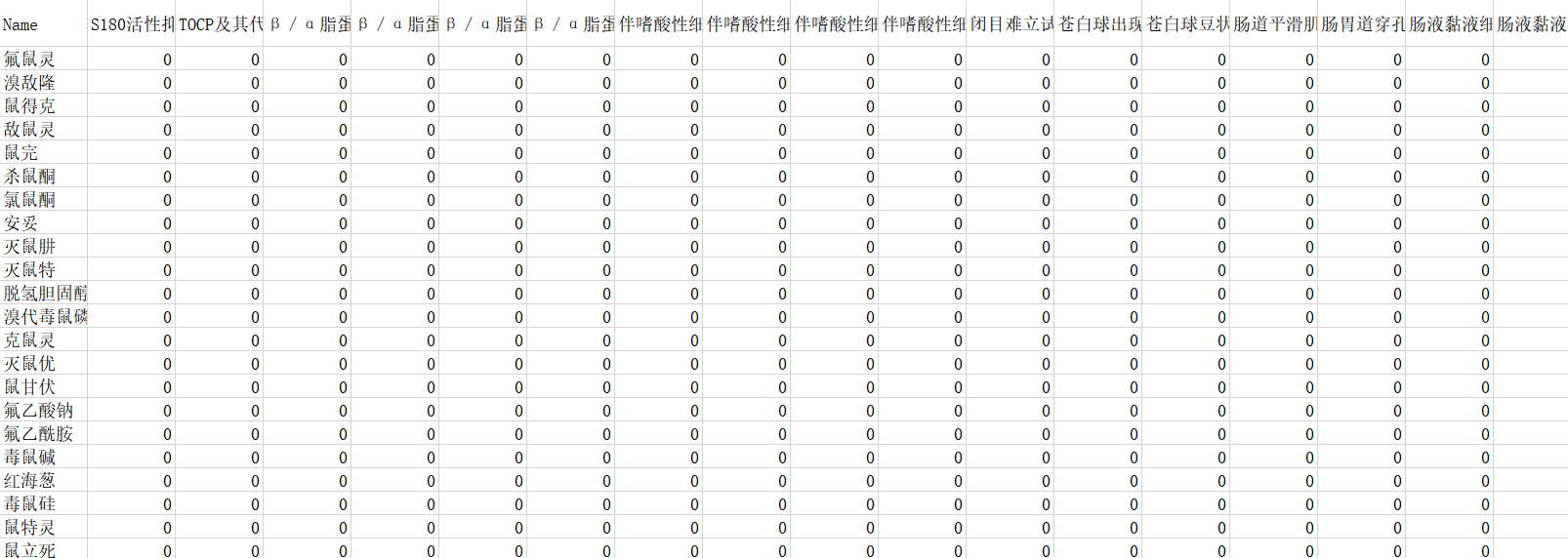
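The chapter links are built by gluing the site root onto each `href`. `urllib.parse.urljoin` does the same job but also copes with page-relative links and full URLs, and chapter titles can contain characters such as `?` or `:` that Windows does not allow in file names. A minimal sketch of both helpers; `chapter_url` and `safe_filename` are hypothetical names, and the hrefs and title are made-up examples:

```python
import re
from urllib.parse import urljoin

BASE = "https://www.xsbiquge.com/12_12735/"  # table-of-contents page from the script


def chapter_url(href: str) -> str:
    """Resolve an href from the chapter list against the contents page URL."""
    return urljoin(BASE, href)


def safe_filename(name: str) -> str:
    """Replace characters that Windows forbids in file names with '_'."""
    return re.sub(r'[\\/:*?"<>|]', "_", name).strip()


print(chapter_url("/12_12735/1.html"))  # root-relative link
print(chapter_url("2.html"))            # page-relative link
print(safe_filename("第1章 开始?"))
```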

7. Extracting Poison Characteristics and Symptoms

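This is a project I am working on with my teacher. For each poison, its descriptive text is matched against a list of symptoms, and the result is a table in which a symptom is marked 1 if the poison has it and 0 otherwise.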
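At its core this is a keyword-presence check: for every symptom term, test whether it occurs in the poison's description. A stripped-down, per-term sketch with made-up text and terms (the real terms come from the second-level library workbooks in `lst_xlsx`); `symptom_flags` is a hypothetical name. Python's `in` operator is the safe test here, whereas a check like `str.find(term) > 0` misses a term that appears at the very start of the text, because `find` returns 0 for that match:

```python
def symptom_flags(text: str, terms: list) -> list:
    """Return 1 for each term found in text, else 0."""
    return [1 if term in text else 0 for term in terms]


# Made-up description and symptom terms
description = "headache, nausea, vomiting; coma in severe cases"
terms = ["headache", "vomiting", "rash"]

flags = symptom_flags(description, terms)
print(flags)                              # → [1, 1, 0]
print(description.find("headache") > 0)  # → False: the match at index 0 is missed
```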

```python
import csv

import openpyxl
import xlrd

# Second-level symptom libraries, one workbook per category
lst_xlsx = ['二级其他检查.xlsx', '二级库呼吸.xlsx', '二级库尿液检查.xlsx', '二级库循环.xlsx', '二级库泌尿生殖.xlsx',
            '二级库消化.xlsx', '二级库甲状腺.xlsx', '二级库皮肤.xlsx', '二级库眼部.xlsx', '二级库神经.xlsx',
            '二级库粪便检查.xlsx', '二级库肌电图.xlsx', '二级库肝功能肾功能.xlsx', '二级库胃部检查.xlsx',
            '二级库脑电图.xlsx', '二级库血液检查.xlsx', '二级心电图.xlsx', '其它.xlsx']
name_lst = []     # poison names
content_lst = []  # concatenated description text for each poison


def get_dw():
    """Read poison names and description text from duwuxiangqing.xls."""
    table = xlrd.open_workbook('duwuxiangqing.xls').sheet_by_name('Sheet1')
    for i in range(2, table.nrows):
        name_lst.append(table.cell(i, 2).value)
        # Columns 13, 14, 17 and 18 hold the descriptive text
        content_lst.append(''.join(str(table.cell(i, c).value) for c in (13, 14, 17, 18)))


def load_columns():
    """Return every column of every library as a list of its non-empty cell values."""
    columns = []
    for item in lst_xlsx:
        sheet = openpyxl.load_workbook(item).active
        for col in sheet.iter_cols(min_row=1, max_row=sheet.max_row, values_only=True):
            columns.append([str(v) for v in col if v is not None])
    return columns


def head():
    columns = load_columns()  # load the libraries once, not once per poison
    title = ["Name"] + [v for col in columns for v in col]
    ans_lst = []
    for p, (name, text) in enumerate(zip(name_lst, content_lst)):
        print("Processing poison #" + str(p))
        row = [name]
        for col in columns:
            # Every term in a column gets 1 if any term of that column occurs in the text
            flag = "1" if any(term in text for term in col) else "0"
            row.extend([flag] * len(col))
        ans_lst.append(row)
    write_title(title, ans_lst)


def write_title(title, ans):
    # newline='' prevents blank lines on Windows; utf-8-sig lets Excel detect the encoding
    with open('data.csv', 'w', encoding='utf-8-sig', newline='') as f:
        csv_writer = csv.writer(f)
        csv_writer.writerow(title)  # header row
        csv_writer.writerows(ans)   # one row per poison


if __name__ == '__main__':
    get_dw()
    head()
```

Partial screenshot of data.csv:

