MNN Study Notes (1): KL-divergence-based 8-bit quantization in MNN, method and code walkthrough

MNN offers two methods for 8-bit quantization, KL_divergence and ADMM. This post covers the KL-divergence-based method.

Building and running MNN

Build:

cd MNN
mkdir build
cd build
cmake -DMNN_BUILD_QUANTOOLS=ON ..
make -j4

Run:

./quantized.out origin.mnn quan.mnn preprocessConfig.json

Configuration parameters:

{
    "format":"RGB",
    "mean":[
        127.5,
        127.5,
        127.5
    ],
    "normal":[
        0.00784314,
        0.00784314,
        0.00784314
    ],
    "width":224,
    "height":224,
    "path":"path/to/images/",
    "used_image_num":500,
    "feature_quantize_method":"KL",
    "weight_quantize_method":"MAX_ABS"
}
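The `mean` and `normal` entries describe per-channel input normalization. Here is a minimal sketch, assuming the tool applies `(pixel - mean) * normal` to each channel, which is what maps 0..255 onto roughly -1..1 with the values above. The function name `preprocess` is hypothetical and not part of MNN.

```python
import numpy as np

# Values copied from the config above; 0.00784314 is about 1 / 127.5.
mean = np.array([127.5, 127.5, 127.5])
normal = np.array([0.00784314, 0.00784314, 0.00784314])


def preprocess(pixels):
    """Normalize an (H, W, 3) array of 0..255 pixel values channel by channel."""
    return (pixels.astype(np.float64) - mean) * normal


# A single pixel whose three channels are the minimum, midpoint and maximum.
image = np.array([[[0.0, 127.5, 255.0]]])
out = preprocess(image)
print(out)  # roughly [[[-1, 0, 1]]]
```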

KL_divergence is the default quantization method.

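How MNN quantization works:

A few figures from TensorRT help illustrate the idea. Converting FP32 to INT8 means that each value of a tensor is represented with 8 bits instead of 32. The simplest approach is linear quantization: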

FP32 Tensor (T) = scale_factor(sf) * 8-bit Tensor(t) + FP32_bias (b)

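Experiments show that the bias is not actually needed. Dropping it gives:

T = sf * t

Here sf is the scaling factor of each tensor in each layer. In practice, MNN computes a separate scaling factor for every channel of a convolution.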
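To make the per-channel scaling factor concrete, here is a minimal sketch that assumes a max-abs rule (like the MAX_ABS weight method named in the config) and a weight layout of (out_channels, in_channels, kh, kw). The helper names are hypothetical; this is not MNN's implementation.

```python
import numpy as np


def per_channel_scales(weights):
    """One scaling factor per output channel: max |w| in that channel / 127."""
    flat = np.abs(weights).reshape(weights.shape[0], -1)
    return flat.max(axis=1) / 127.0


def quantize_per_channel(weights, scales):
    """Divide every channel by its own scale, round, and store as int8."""
    shape = (-1,) + (1,) * (weights.ndim - 1)  # broadcast scales over the channel axis
    q = np.round(weights / scales.reshape(shape))
    return np.clip(q, -127, 127).astype(np.int8)


rng = np.random.default_rng(0)
weights = rng.standard_normal((4, 3, 3, 3))
scales = per_channel_scales(weights)
q = quantize_per_channel(weights, scales)

# Dequantize with T = sf * t, one sf per channel.
restored = q * scales.reshape(-1, 1, 1, 1)
print(scales, np.abs(restored - weights).max())
```

With rounding to the nearest integer, the reconstruction error of each value is at most half of its channel's scaling factor.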

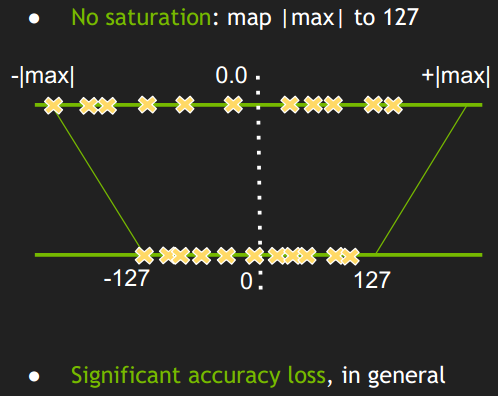

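The simple approach maps -|MAX| and |MAX| of a tensor to -127 and 127, with a linear mapping in between. This mapping is unsaturated, and it can lose a lot of precision: a few outliers stretch the range, so most values crowd into a small number of integer levels. MNN and TensorRT do the following instead:

Rather than mapping |MAX| to 127, they choose a threshold |T| and map ±|T| to ±127. Values whose magnitude exceeds |T| saturate to ±127. The common practice today is to evaluate candidate thresholds with the KL divergence and pick the T that minimizes it.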
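The difference between the unsaturated and the saturated mapping can be sketched as follows. The data, the single outlier, and the hand-picked threshold of 4.0 are all made up for illustration; in MNN the threshold comes from the KL search, not from a constant.

```python
import numpy as np


def quantize(values, threshold):
    """Map [-threshold, threshold] linearly onto [-127, 127], saturating outside."""
    scale = threshold / 127.0
    q = np.clip(np.round(values / scale), -127, 127).astype(np.int8)
    return q, scale


rng = np.random.default_rng(0)
values = np.append(rng.standard_normal(10000), 40.0)  # the last entry is an outlier

q_max, s_max = quantize(values, np.abs(values).max())  # unsaturated: T = |MAX|
q_clip, s_clip = quantize(values, 4.0)                 # saturated: T = 4.0

# Mean squared reconstruction error over the bulk of the data (outlier excluded).
err_max = np.mean((q_max * s_max - values)[:-1] ** 2)
err_clip = np.mean((q_clip * s_clip - values)[:-1] ** 2)
print(err_max, err_clip)
```

The outlier itself is reconstructed badly in the saturated case (it becomes 127 * s_clip), which is the trade-off the threshold search has to balance.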

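1. What is KL divergence

KL (Kullback-Leibler) divergence describes the difference between two probability distributions P and Q. It is widely used in probability theory and information theory. In information theory, D(P||Q) is the information lost when the distribution Q is used to approximate the true distribution P, where P is the true distribution and Q is the approximating distribution.

Definition of KL divergence:

D(P||Q) = Σ_i P(i) * log(P(i) / Q(i))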
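A small numeric example may help here. The sketch below evaluates D(P||Q) = Σ P(i) * log(P(i) / Q(i)) for two made-up two-bin distributions and shows that the measure is not symmetric. The function name `kl` is only for this illustration.

```python
import numpy as np


def kl(p, q):
    """D(P||Q) = sum_i P(i) * log(P(i) / Q(i)), for strictly positive P and Q."""
    p = np.asarray(p, dtype=np.float64)
    q = np.asarray(q, dtype=np.float64)
    return float(np.sum(p * np.log(p / q)))


P = [0.5, 0.5]
Q = [0.9, 0.1]

d_pq = kl(P, Q)  # about 0.5108
d_qp = kl(Q, P)  # about 0.3681
print(d_pq, d_qp, kl(P, P))
```

D(P||P) is 0, and swapping the arguments changes the result, so the choice of which distribution plays the role of the reference P matters.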

Python sample code:

import numpy as np

NUM_BINS = 2048    # resolution of the reference histogram P
TARGET_BINS = 128  # number of int8 magnitudes, 0..127


def get_distribution(values, num_bins=NUM_BINS):
    # Histogram of |values| over [0, max|values|], normalized to sum to 1.
    magnitudes = np.fabs(values)
    interval = num_bins / magnitudes.max()  # bins per unit of magnitude
    index = np.minimum((magnitudes * interval).astype(int), num_bins - 1)
    distribution = np.bincount(index, minlength=num_bins).astype(np.float64)
    return distribution / distribution.sum()


def kl_divergence(P, Q):
    # D(P||Q). Bins with P[i] == 0 contribute nothing; bins with Q[i] == 0
    # but P[i] != 0 add a fixed penalty of 1 instead of infinity.
    KL = 0.0
    for p, q in zip(P, Q):
        if p == 0.0:
            continue
        if q == 0.0:
            KL += 1.0
        else:
            KL += p * np.log(p / q)
    return KL


def find_threshold(distribution, target_bins=TARGET_BINS):
    # Try every candidate threshold bin k and keep the one with the smallest KL.
    best_kl, best_k = np.inf, target_bins
    for k in range(target_bins, len(distribution)):
        # Reference P: the first k bins, with the clipped tail folded into the last one.
        reference = distribution[:k].copy()
        reference[k - 1] += distribution[k:].sum()

        interval = k / float(target_bins)

        # Merge the k bins into target_bins bins (fractional overlap at both edges).
        quantized = np.zeros(target_bins)
        for i in range(target_bins):
            start = i * interval
            end = (i + 1) * interval
            left_upper = int(np.ceil(start))
            right_lower = int(np.floor(end))
            if left_upper > start:
                quantized[i] += (left_upper - start) * distribution[left_upper - 1]
            if right_lower < end:
                quantized[i] += (end - right_lower) * distribution[right_lower]
            quantized[i] += distribution[left_upper:right_lower].sum()

        # Expand the target_bins bins back to k bins. Only source bins that were
        # non-empty receive mass, matching the C++ implementation shown later.
        expanded = np.zeros(k)
        for i in range(target_bins):
            start = i * interval
            end = (i + 1) * interval
            left_upper = int(np.ceil(start))
            right_lower = int(np.floor(end))
            left_scale = left_upper - start if left_upper > start else 0.0
            right_scale = end - right_lower if right_lower < end else 0.0
            use_left = left_scale > 0 and distribution[left_upper - 1] != 0
            use_right = right_scale > 0 and distribution[right_lower] != 0

            count = float(np.count_nonzero(distribution[left_upper:right_lower]))
            if use_left:
                count += left_scale
            if use_right:
                count += right_scale
            if count == 0:
                continue

            expand_value = quantized[i] / count
            if use_left:
                expanded[left_upper - 1] += expand_value * left_scale
            if use_right:
                expanded[right_lower] += expand_value * right_scale
            for j in range(left_upper, right_lower):
                if distribution[j] != 0:
                    expanded[j] += expand_value

        temp_kl = kl_divergence(reference, expanded)
        if temp_kl < best_kl:
            best_kl, best_k = temp_kl, k
    return best_k, best_kl


def test():
    P = np.random.standard_normal(96 * 3 * 11 * 11)
    distribution = get_distribution(P)
    print('empty bins: {}'.format(int(np.sum(distribution == 0.0))))
    k, kl = find_threshold(distribution)
    print('kl: {}, index: {}'.format(kl, k))


if __name__ == "__main__":
    test()

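During quantization, the true distribution P of each tensor is collected into a histogram with 2048 bins. Q approximates P using only the 128 levels [0, 127] available to int8 magnitudes.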

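How MNN computes kl_divergence

1. Obtaining the true distribution P:

As the quantization command and its config show, 500 images are used to model the distribution of real data. A forward pass over these 500 images gives the distribution at every layer. The entry point is in Calibration.cpp: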

void Calibration::_computeFeatureScaleKL() {
    _computeFeatureMapsRange();
    _collectFeatureMapsDistribution();

    _scales.clear();
    for (auto& iter : _featureInfo) {
        AUTOTIME;
        _scales[iter.first] = iter.second->finishAndCompute();
    }
    //_featureInfo.clear();//No need now
}

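_computeFeatureMapsRange records, for every convolution layer, the maximum and minimum values seen in each channel during the forward passes. _collectFeatureMapsDistribution then uses each channel's maximum to build a 2048-bin histogram and counts how many values fall into each bin.

Inside finishAndCompute, _computeThreshold searches for the minimum KL divergence to find the most suitable threshold T: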
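The two passes over the calibration data can be sketched in NumPy as follows. This is only an illustration: it assumes activations arrive as (N, C, H, W) batches and tracks only the largest magnitude per channel, whereas the MNN functions named above work on the network's own tensors. The function names here are hypothetical.

```python
import numpy as np

NUM_BINS = 2048


def channel_ranges(batches):
    """Pass 1: largest |activation| per channel over all batches."""
    max_abs = None
    for batch in batches:
        cur = np.abs(batch).max(axis=(0, 2, 3))
        max_abs = cur if max_abs is None else np.maximum(max_abs, cur)
    return max_abs


def channel_histograms(batches, max_abs, num_bins=NUM_BINS):
    """Pass 2: per-channel histogram of |activation| over [0, max_abs[c]]."""
    hist = np.zeros((len(max_abs), num_bins))
    for batch in batches:
        for c in range(len(max_abs)):
            mags = np.abs(batch[:, c]).ravel()
            counts, _ = np.histogram(mags, bins=num_bins, range=(0.0, max_abs[c]))
            hist[c] += counts
    return hist


# Random data stands in for the feature maps of the calibration images.
rng = np.random.default_rng(0)
batches = [rng.standard_normal((2, 3, 8, 8)) for _ in range(5)]
max_abs = channel_ranges(batches)
hist = channel_histograms(batches, max_abs)
print(hist.shape, hist.sum(axis=1))
```

The range has to be known before the histogram can be filled, which is why the data is traversed twice.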

int TensorStatistic::_computeThreshold(const std::vector<float>& distribution) {
    const int targetBinNums = 128;
    int threshold           = targetBinNums;

    if (mThresholdMethod == THRESHOLD_KL) {
        float minKLDivergence   = 10000.0f;
        float afterThresholdSum = 0.0f;
        std::for_each(distribution.begin() + targetBinNums, distribution.end(),
                      [&](float n) { afterThresholdSum += n; });
        for (int i = targetBinNums; i < mBinNumber; ++i) {
            std::vector<float> quantizedDistribution(targetBinNums);
            std::vector<float> candidateDistribution(i);
            std::vector<float> expandedDistribution(i);

            std::copy(distribution.begin(), distribution.begin() + i, candidateDistribution.begin());
            candidateDistribution[i - 1] += afterThresholdSum;
            afterThresholdSum -= distribution[i];

            const float binInterval = (float)i / (float)targetBinNums;

            // merge i bins to target bins
            for (int j = 0; j < targetBinNums; ++j) {
                const float start = j * binInterval;
                const float end   = start + binInterval;

                const int leftUpper = static_cast<int>(std::ceil(start));
                if (leftUpper > start) {
                    const float leftScale = leftUpper - start;
                    quantizedDistribution[j] += leftScale * distribution[leftUpper - 1];
                }
                const int rightLower = static_cast<int>(std::floor(end));
                if (rightLower < end) {
                    const float rightScale = end - rightLower;
                    quantizedDistribution[j] += rightScale * distribution[rightLower];
                }
                std::for_each(distribution.begin() + leftUpper, distribution.begin() + rightLower,
                              [&](float n) { quantizedDistribution[j] += n; });
            }

            // expand target bins to i bins
            for (int j = 0; j < targetBinNums; ++j) {
                const float start = j * binInterval;
                const float end   = start + binInterval;

                float count         = 0;
                const int leftUpper = static_cast<int>(std::ceil(start));
                float leftScale     = 0.0f;
                if (leftUpper > start) {
                    leftScale = leftUpper - start;
                    if (distribution[leftUpper - 1] != 0) {
                        count += leftScale;
                    }
                }
                const int rightLower = static_cast<int>(std::floor(end));
                float rightScale     = 0.0f;
                if (rightLower < end) {
                    rightScale = end - rightLower;
                    if (distribution[rightLower] != 0) {
                        count += rightScale;
                    }
                }
                std::for_each(distribution.begin() + leftUpper, distribution.begin() + rightLower, [&](float n) {
                    if (n != 0) {
                        count += 1;
                    }
                });

                if (count == 0) {
                    continue;
                }
                const float toExpandValue = quantizedDistribution[j] / count;
                if (leftUpper > start && distribution[leftUpper - 1] != 0) {
                    expandedDistribution[leftUpper - 1] += toExpandValue * leftScale;
                }
                if (rightLower < end && distribution[rightLower] != 0) {
                    expandedDistribution[rightLower] += toExpandValue * rightScale;
                }
                for (int k = leftUpper; k < rightLower; ++k) {
                    if (distribution[k] != 0) {
                        expandedDistribution[k] += toExpandValue;
                    }
                }
            }

            const float curKL = _klDivergence(candidateDistribution, expandedDistribution);
            // std::cout << "=====> KL: " << i << " ==> " << curKL << std::endl;
            if (curKL < minKLDivergence) {
                minKLDivergence = curKL;
                threshold       = i;
            }
        }
    } else if (mThresholdMethod == THRESHOLD_MAX) {
        threshold = mBinNumber - 1;
    } else {
        // TODO, support other method
        MNN_ASSERT(false);
    }
    return threshold;
}
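_computeThreshold returns a bin index, not a scaling factor. Below is a rough sketch of how such an index could be turned into sf. It assumes the bin width is max|x| / 2048 and that the center of the threshold bin is taken as the clipping value T. The bin-center convention and the name `threshold_to_scale` are assumptions, since that step is not part of the code shown above.

```python
def threshold_to_scale(threshold_bin, max_abs, num_bins=2048):
    """Convert a threshold bin index into a scaling factor sf = T / 127."""
    bin_width = max_abs / num_bins                  # magnitude covered by one bin
    clip_value = (threshold_bin + 0.5) * bin_width  # assumed: center of the threshold bin
    return clip_value / 127.0


# Made-up numbers: a channel whose largest magnitude is 6.0 and whose
# KL search picked bin 1023, so T lands near the middle of the range.
sf = threshold_to_scale(1023, 6.0)
print(sf)
```

With T = sf * t, any activation whose magnitude exceeds 127 * sf then saturates to ±127.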
