Implementing VGG19 in PyTorch to train a classifier on custom images

1. The VGG19 model, implemented with PyTorch 1.1.0

# coding:utf-8
import torch
import torch.nn as nn


class vgg19_Net(nn.Module):
    # in_img_rgb:  number of input channels (3 for RGB, 1 for grayscale)
    # in_img_size: number of output channels of the first convolution (despite its name)
    # out_class:   number of output classes
    # in_fc_size:  flattened feature size fed into fc17; it depends on the input resolution
    def __init__(self, in_img_rgb=3, in_img_size=64, out_class=1000, in_fc_size=25088):
        super(vgg19_Net, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=in_img_rgb, out_channels=in_img_size, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(in_img_size, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=in_img_size, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        )
        self.conv5 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv6 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv7 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv8 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        )
        self.conv9 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv10 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv11 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv12 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        )
        self.conv13 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv14 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv15 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU()
        )
        self.conv16 = nn.Sequential(
            nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        )
        self.fc17 = nn.Sequential(
            nn.Linear(in_features=in_fc_size, out_features=4096, bias=True),
            nn.ReLU(),
            nn.Dropout(p=0.5)
        )
        self.fc18 = nn.Sequential(
            nn.Linear(in_features=4096, out_features=4096, bias=True),
            nn.ReLU(),
            nn.Dropout(p=0.5)
        )
        self.fc19 = nn.Sequential(
            nn.Linear(in_features=4096, out_features=out_class, bias=True)
        )
        # Plain Python lists are enough here: every block is already registered
        # as an attribute above, so its parameters are tracked by nn.Module.
        self.conv_list = [self.conv1, self.conv2, self.conv3, self.conv4, self.conv5, self.conv6, self.conv7, self.conv8,
                          self.conv9, self.conv10, self.conv11, self.conv12, self.conv13, self.conv14, self.conv15, self.conv16]
        self.fc_list = [self.fc17, self.fc18, self.fc19]

    def forward(self, x):
        for conv in self.conv_list:
            x = conv(x)
        # Flatten to (batch, features) before the fully connected layers
        fc = x.view(x.size(0), -1)
        # Uncomment to check the value that in_fc_size must have
        # print("vgg19_model_fc:", fc.size(1))
        for fc_item in self.fc_list:
            fc = fc_item(fc)
        return fc


# Check whether a GPU is available
CUDA = torch.cuda.is_available()
print(CUDA)

# 1-channel 32x32 inputs, 13 classes; five 2x2 poolings reduce 32x32 to 1x1, so in_fc_size = 512
if CUDA:
    vgg19_model = vgg19_Net(in_img_rgb=1, in_img_size=32, out_class=13, in_fc_size=512).cuda()
else:
    vgg19_model = vgg19_Net(in_img_rgb=1, in_img_size=32, out_class=13, in_fc_size=512)
print(vgg19_model)

# Optimizer
optimizer = torch.optim.Adam(vgg19_model.parameters())

# Loss function; it takes one-hot targets.
# Alternative: nn.CrossEntropyLoss(), which expects class-index targets instead.
loss_func = nn.MultiLabelSoftMarginLoss()


# Return the i-th mini-batch of the training data
def batch_training_data(x_train, y_train, batch_size, i):
    n = len(x_train)
    left_limit = batch_size * i
    right_limit = left_limit + batch_size
    if n >= right_limit:
        return x_train[left_limit:right_limit, :, :, :], y_train[left_limit:right_limit, :]
    else:
        # Last, partial batch
        return x_train[left_limit:, :, :, :], y_train[left_limit:, :]
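The value of in_fc_size has to match the flattened output of conv16, which is why the constructor default is 25088 while the 32x32 model above is built with 512. The following is a minimal sketch of that arithmetic, assuming square inputs and the five 2x2, stride-2 max-pooling layers defined above; the helper name flattened_size is hypothetical and is not part of the original code.

```python
def flattened_size(img_size, num_pools=5, last_channels=512):
    """Feature count after the conv stack, for a square img_size x img_size input.

    Assumes 3x3 convolutions with padding=1 (spatial size unchanged) and
    num_pools max-pooling layers with kernel_size=2, stride=2, ceil_mode=False.
    """
    side = img_size
    for _ in range(num_pools):
        side //= 2  # each pooling halves the side length, rounding down
    return last_channels * side * side


print(flattened_size(32))   # 512, the in_fc_size used for 32x32 inputs
print(flattened_size(224))  # 25088, the constructor default
```

For any other input resolution, the same value can also be read at run time by uncommenting the print statement in forward.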

2. Main training script, using a custom data set

# coding:utf-8
import time
import os
import torch
import numpy as np
from data_processing import get_DS
# from CNN_nework_model import cnn_face_discern_model
from vgg19_model import optimizer, vgg19_model, loss_func, batch_training_data, CUDA
from sklearn.metrics import accuracy_score

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
st = time.time()

# Load the data and split it into a training set and a test set.
# Note: the slices below take the first 960 samples for training and the
# 50 samples from index 1250 onward for testing, which is not an exact 8:2 split.
img_resize = 32
x_, y_, y_true, label = get_DS(img_resize)
label_number = len(label)
x_train, y_train = x_[:960, :, :, :].reshape((960, 1, img_resize, img_resize)), y_[:960, :]
x_test, y_test = x_[1250:, :, :, :].reshape((50, 1, img_resize, img_resize)), y_[1250:, :]
y_test_label = y_true[1250:]
print(time.time() - st)
print(x_train.shape, x_test.shape)

batch_size = 128
# Number of batches per epoch, rounded up so that the partial last batch is
# kept and no empty batch is produced when the size divides evenly
n = (len(x_train) + batch_size - 1) // batch_size

for epoch in range(100):
    for batch in range(n):
        x_training, y_training = batch_training_data(x_train, y_train, batch_size, batch)
        batch_x = torch.from_numpy(x_training).float()
        batch_y = torch.from_numpy(y_training).float()
        if CUDA:
            batch_x = batch_x.cuda()
            batch_y = batch_y.cuda()
        out = vgg19_model(batch_x)
        loss = loss_func(out, batch_y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Measure accuracy on the test set every 5 epochs
    if epoch % 5 == 0:
        x_test_tst = torch.from_numpy(x_test).float()
        if CUDA:
            x_test_tst = x_test_tst.cuda()
        vgg19_model.eval()  # disable Dropout and use the running BatchNorm statistics
        with torch.no_grad():
            y_pred = vgg19_model(x_test_tst)
        vgg19_model.train()  # switch back to training mode
        y_predict = np.argmax(y_pred.cpu().numpy(), axis=1)
        # print(y_test_label, "\n", y_predict)
        acc = accuracy_score(y_test_label, y_predict)
        print("loss={} aucc={}".format(loss.item(), acc))

# Save the model
# torch.save(vgg19_model.state_dict(), 'save_torch_model/face_image_recognition_model.pkl')
# Load the model
# vgg19_model.load_state_dict(torch.load('params.pkl'))
# Two ways to save a model:
# https://blog.csdn.net/u012436149/article/details/68948816/
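The commented-out save and load lines above can be tried in isolation. The following is a minimal sketch of a state_dict round trip, assuming a tiny stand-in network (the hypothetical make_model helper) and an in-memory buffer instead of a .pkl file on disk; for the real model, vgg19_model and a file path would take their place.

```python
import io

import torch
import torch.nn as nn


def make_model():
    # Small stand-in network, used only to keep the example fast
    return nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))


trained = make_model()

# Save only the parameters (the state_dict), not the whole module object
buffer = io.BytesIO()
torch.save(trained.state_dict(), buffer)

# Load: rebuild the same architecture first, then copy the parameters in
buffer.seek(0)
restored = make_model()
restored.load_state_dict(torch.load(buffer))
restored.eval()  # switch to inference mode before predicting

same = all(
    torch.equal(a, b)
    for a, b in zip(trained.state_dict().values(), restored.state_dict().values())
)
print(same)
```

Saving the state_dict rather than the pickled module means the class definition must be available when loading, but the file does not depend on the exact module path of the class.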

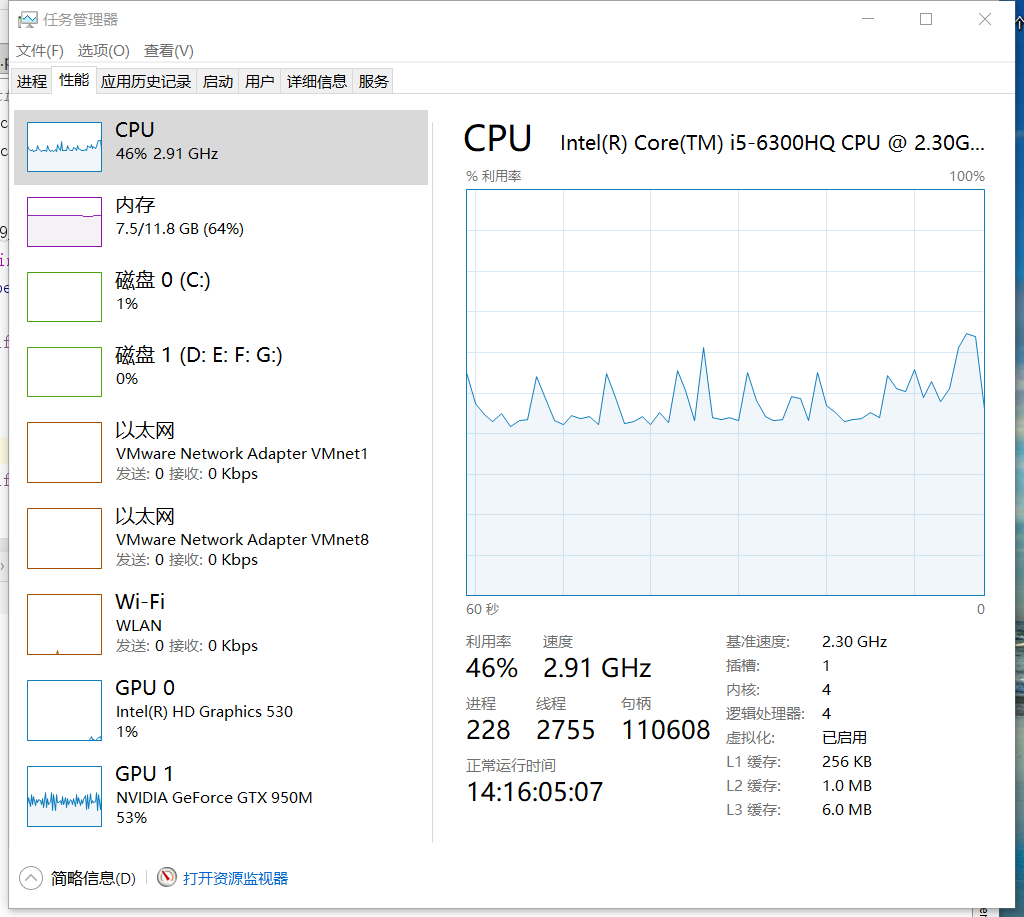

3. Enabling GPU training
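The scripts above switch to the GPU by testing a CUDA flag and calling .cuda() on the model and on each batch. An equivalent pattern, sketched here with a small stand-in network rather than vgg19_model, picks a torch.device once and moves everything to it, so the same code runs with or without a GPU.

```python
import torch
import torch.nn as nn

# Pick the GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-in network for illustration only; vgg19_Net would be moved the same way
model = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, padding=1),
    nn.ReLU(),
).to(device)

# Inputs must live on the same device as the model parameters
batch = torch.zeros(4, 1, 32, 32, device=device)
out = model(batch)
print(tuple(out.shape), out.device.type)
```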

4. Training output

True
vgg19_Net(
  (conv1): Sequential(
    (0): Conv2d(1, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv2): Sequential(
    (0): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv3): Sequential(
    (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv4): Sequential(
    (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv5): Sequential(
    (0): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv6): Sequential(
    (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv7): Sequential(
    (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv8): Sequential(
    (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv9): Sequential(
    (0): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv10): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv11): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv12): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv13): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv14): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv15): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv16): Sequential(
    (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (fc17): Sequential(
    (0): Linear(in_features=512, out_features=4096, bias=True)
    (1): ReLU()
    (2): Dropout(p=0.5)
  )
  (fc18): Sequential(
    (0): Linear(in_features=4096, out_features=4096, bias=True)
    (1): ReLU()
    (2): Dropout(p=0.5)
  )
  (fc19): Sequential(
    (0): Linear(in_features=4096, out_features=13, bias=True)
  )
)

0.7689414024353027
(960, 1, 32, 32) (50, 1, 32, 32)
loss=0.29043662548065186 aucc=0.06
loss=0.25410425662994385 aucc=0.12
loss=0.21404671669006348 aucc=0.26
loss=0.1869402676820755 aucc=0.38
loss=0.18018770217895508 aucc=0.4
loss=0.16859599947929382 aucc=0.36
loss=0.161808043718338 aucc=0.58
loss=0.14093756675720215 aucc=0.44
loss=0.1079934760928154 aucc=0.58
loss=0.08194318413734436 aucc=0.76
loss=0.07743502408266068 aucc=0.62
loss=0.05460016056895256 aucc=0.86
loss=0.058618828654289246 aucc=0.74
loss=0.05457763373851776 aucc=0.72
loss=0.045047733932733536 aucc=0.82
loss=0.040015704929828644 aucc=0.86
loss=0.024097014218568802 aucc=0.88
loss=0.02294076606631279 aucc=0.82
loss=0.10601243376731873 aucc=0.78
loss=0.006499956361949444 aucc=0.94

Process finished with exit code 0

