Deploying ELK + Filebeat to Monitor Spring Boot Logs

ELK Server-Side Deployment

1. Install Docker Compose (not covered here)

2. Configure docker-compose.yml

```bash
cd /root/elk
vi docker-compose.yml
```

```yaml
version: "3"
services:
  es-master:
    container_name: es-master
    hostname: es-master
    image: elasticsearch:7.5.1
    restart: always
    ports:
      - 9200:9200
      - 9300:9300
    volumes:
      - ./elasticsearch/master/conf/es-master.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/master/data:/usr/share/elasticsearch/data
      - ./elasticsearch/master/logs:/usr/share/elasticsearch/logs
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"

  es-slave1:
    container_name: es-slave1
    image: elasticsearch:7.5.1
    restart: always
    ports:
      - 9201:9200
      - 9301:9300
    volumes:
      - ./elasticsearch/slave1/conf/es-slave1.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/slave1/data:/usr/share/elasticsearch/data
      - ./elasticsearch/slave1/logs:/usr/share/elasticsearch/logs
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"

  es-slave2:
    container_name: es-slave2
    image: elasticsearch:7.5.1
    restart: always
    ports:
      - 9202:9200
      - 9302:9300
    volumes:
      - ./elasticsearch/slave2/conf/es-slave2.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/slave2/data:/usr/share/elasticsearch/data
      - ./elasticsearch/slave2/logs:/usr/share/elasticsearch/logs
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"

  kibana:
    container_name: kibana
    hostname: kibana
    image: kibana:7.5.1
    restart: always
    ports:
      - 5601:5601
    volumes:
      - ./kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
    environment:
      # The Kibana image reads settings from upper-case env vars (elasticsearch.hosts -> ELASTICSEARCH_HOSTS)
      - ELASTICSEARCH_HOSTS=http://es-master:9200
    depends_on:
      - es-master
      - es-slave1
      - es-slave2

  logstash:
    container_name: logstash
    hostname: logstash
    image: logstash:7.5.1
    command: logstash -f ./conf/logstash-filebeat.conf
    restart: always
    volumes:
      # Map the pipeline config and SSL files into the container
      - ./logstash/conf/logstash-filebeat.conf:/usr/share/logstash/conf/logstash-filebeat.conf
      - ./logstash/ssl:/usr/share/logstash/ssl
    environment:
      - elasticsearch.hosts=http://es-master:9200
      # Fixes the Logstash monitoring connection error (the image's default points at http://elasticsearch:9200)
      - xpack.monitoring.elasticsearch.hosts=http://es-master:9200
    ports:
      - 5044:5044
    depends_on:
      - es-master
      - es-slave1
      - es-slave2
```
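Compose's short port syntax is `HOST:CONTAINER`. All three Elasticsearch nodes therefore listen on 9200 (HTTP) and 9300 (transport) inside their own containers and differ only in the host-side ports. The following minimal Python sketch makes that explicit for the port lists above. The helper names are hypothetical, and only the plain two-part form used in this file is handled.

```python
from typing import Dict, List, Tuple


def parse_port_mapping(entry: str) -> Tuple[int, int]:
    """Split a short-syntax entry such as "9201:9200" into (host_port, container_port).

    Only the plain "HOST:CONTAINER" form used in this compose file is handled;
    entries with an IP prefix or a "/udp" suffix are out of scope for this sketch.
    """
    host, container = entry.split(":")
    return int(host), int(container)


# Port lists copied from the docker-compose.yml above.
ES_PORTS: Dict[str, List[str]] = {
    "es-master": ["9200:9200", "9300:9300"],
    "es-slave1": ["9201:9200", "9301:9300"],
    "es-slave2": ["9202:9200", "9302:9300"],
}


def container_http_ports(services: Dict[str, List[str]]) -> Dict[str, int]:
    """Container-side HTTP port per node (the first mapping in each list)."""
    return {name: parse_port_mapping(ports[0])[1] for name, ports in services.items()}


if __name__ == "__main__":
    for name, ports in ES_PORTS.items():
        host, container = parse_port_mapping(ports[0])
        print(f"{name}: host :{host} -> container :{container} (http.port inside the container: {container})")
```

Whatever `http.port` a node's elasticsearch.yml sets must equal the container-side number, or the published host port will point at nothing.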

3. Configure Elasticsearch

```bash
cd /root/elk
mkdir -p elasticsearch/master/conf elasticsearch/slave1/conf elasticsearch/slave2/conf
```
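The compose file bind-mounts a `conf`, `data` and `logs` directory for every Elasticsearch node, plus Kibana and Logstash config directories. Below is a minimal Python sketch that creates the whole tree in one go, assuming the `/root/elk` root used throughout this post. The function names are hypothetical. The official Elasticsearch image runs as uid 1000, so `data` and `logs` may also need to be writable by that user.

```python
from pathlib import Path
from typing import List

ES_NODES = ["master", "slave1", "slave2"]


def planned_dirs(root: Path) -> List[Path]:
    """Every host directory the compose file bind-mounts (or that holds a mounted file)."""
    dirs = [root / "elasticsearch" / node / sub
            for node in ES_NODES
            for sub in ("conf", "data", "logs")]
    dirs += [root / "kibana" / "conf", root / "logstash" / "conf", root / "logstash" / "ssl"]
    return dirs


def create_layout(root: Path) -> None:
    for d in planned_dirs(root):
        # parents=True behaves like `mkdir -p`; exist_ok=True makes reruns harmless
        d.mkdir(parents=True, exist_ok=True)


if __name__ == "__main__":
    create_layout(Path("/root/elk"))
```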

Create the config file `elasticsearch/master/conf/es-master.yml`:

```yaml
# Cluster name
cluster.name: es-cluster
# Node name
node.name: es-master
# Whether this node is master-eligible
node.master: true
# Whether this node stores data (true by default)
node.data: false
# Bind address
network.host: 0.0.0.0
# HTTP port for client requests
http.port: 9200
# TCP port for node-to-node transport
transport.port: 9300
# Cluster discovery
discovery.seed_hosts:
  - es-master
  - es-slave1
  - es-slave2
# Names or IPs of the master-eligible nodes counted in the very first master election
cluster.initial_master_nodes:
  - es-master
# Allow cross-origin (CORS) requests
http.cors.enabled: true
http.cors.allow-origin: "*"
# Security (authentication)
xpack.security.enabled: false
#http.cors.allow-headers: "Authorization"
```

Create the config file `elasticsearch/slave1/conf/es-slave1.yml`:

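```yaml
# Cluster name
cluster.name: es-cluster
# Node name
node.name: es-slave1
# Whether this node is master-eligible
node.master: true
# Whether this node stores data (true by default)
node.data: true
# Bind address
network.host: 0.0.0.0
# HTTP port inside the container; docker-compose publishes it on host port 9201
http.port: 9200
# TCP port for node-to-node transport (defaults to 9300; published on host port 9301)
#transport.port: 9300
# Cluster discovery
discovery.seed_hosts:
  - es-master
  - es-slave1
  - es-slave2
# Names or IPs of the master-eligible nodes counted in the very first master election
cluster.initial_master_nodes:
  - es-master
# Allow cross-origin (CORS) requests
http.cors.enabled: true
http.cors.allow-origin: "*"
# Security (authentication)
xpack.security.enabled: false
#http.cors.allow-headers: "Authorization"
```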

Create the config file `elasticsearch/slave2/conf/es-slave2.yml`:

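```yaml
# Cluster name
cluster.name: es-cluster
# Node name
node.name: es-slave2
# Whether this node is master-eligible
node.master: true
# Whether this node stores data (true by default)
node.data: true
# Bind address
network.host: 0.0.0.0
# HTTP port inside the container; docker-compose publishes it on host port 9202
http.port: 9200
# TCP port for node-to-node transport (defaults to 9300; published on host port 9302)
#transport.port: 9300
# Cluster discovery
discovery.seed_hosts:
  - es-master
  - es-slave1
  - es-slave2
# Names or IPs of the master-eligible nodes counted in the very first master election
cluster.initial_master_nodes:
  - es-master
# Allow cross-origin (CORS) requests
http.cors.enabled: true
http.cors.allow-origin: "*"
# Security (authentication)
xpack.security.enabled: false
#http.cors.allow-headers: "Authorization"
```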
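Taken together, the three node configs give a cluster with three master-eligible nodes and two data nodes, because `node.data: false` is set only on es-master. Elasticsearch 7 elects a master by a strict majority of its voting configuration. `cluster.initial_master_nodes` only matters when the cluster is bootstrapped for the first time. Below is a small Python sketch of that arithmetic, assuming all three nodes have joined and are in the voting configuration. The names are hypothetical.

```python
from typing import Dict, List, Tuple

# (node.master, node.data) as set in es-master.yml, es-slave1.yml and es-slave2.yml.
ROLES: Dict[str, Tuple[bool, bool]] = {
    "es-master": (True, False),
    "es-slave1": (True, True),
    "es-slave2": (True, True),
}


def master_eligible(roles: Dict[str, Tuple[bool, bool]]) -> List[str]:
    return sorted(name for name, (master, _) in roles.items() if master)


def data_nodes(roles: Dict[str, Tuple[bool, bool]]) -> List[str]:
    return sorted(name for name, (_, data) in roles.items() if data)


def quorum(voting_nodes: int) -> int:
    """Votes needed for a master election: a strict majority of the voting configuration."""
    return voting_nodes // 2 + 1


if __name__ == "__main__":
    eligible = master_eligible(ROLES)
    print("master-eligible:", eligible)
    print("data nodes:", data_nodes(ROLES))
    # Assumes all three nodes have joined and are in the voting configuration.
    print("votes needed:", quorum(len(eligible)), "of", len(eligible))
```

With three voting nodes the quorum is two, so the cluster can still elect a master after losing any one node.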

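Fix the Elasticsearch startup error `max virtual memory areas vm.max_map_count [65530] is too low`. This is a kernel setting, so it is changed on the Docker host.

Open `/etc/sysctl.conf` (`vi /etc/sysctl.conf`) and add this line:

```
vm.max_map_count=655360
```

Reload the parameters:

```bash
sysctl -p
```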
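Elasticsearch's bootstrap check requires `vm.max_map_count` to be at least 262144. The value 655360 used above clears that bar comfortably. The following minimal Python sketch reads the live value from `/proc` and compares it against that minimum. It is a Linux-only sketch, and the helper names are hypothetical.

```python
from pathlib import Path

# Minimum accepted by Elasticsearch's bootstrap check for vm.max_map_count.
ES_MIN_MAX_MAP_COUNT = 262144


def read_max_map_count(path: Path = Path("/proc/sys/vm/max_map_count")) -> int:
    """Read the current kernel value (Linux only)."""
    return int(path.read_text().strip())


def is_sufficient(value: int, minimum: int = ES_MIN_MAX_MAP_COUNT) -> bool:
    return value >= minimum


if __name__ == "__main__":
    current = read_max_map_count()
    status = "OK" if is_sufficient(current) else "too low, raise it in /etc/sysctl.conf"
    print(f"vm.max_map_count={current}: {status}")
```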

4. Configure Kibana

```bash
cd /root/elk
mkdir -p kibana/conf
```

Create the config file `kibana/conf/kibana.yml`:

```yaml
# Default Kibana configuration for docker target
server.name: kibana
server.host: "0"
elasticsearch.hosts: [ "http://****:9200" ]
xpack.monitoring.ui.container.elasticsearch.enabled: true
```
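The Kibana Docker image can also take these settings from environment variables. Kibana's Docker documentation describes the naming rule: upper-case the setting name and replace dots with underscores, so `elasticsearch.hosts` becomes `ELASTICSEARCH_HOSTS`. The minimal Python sketch below applies that rule to produce docker-compose `environment:` entries. The helper names are hypothetical.

```python
from typing import Dict, List


def kibana_env_var(setting: str) -> str:
    """Convert a kibana.yml setting name into the env var name the Kibana Docker image reads."""
    return setting.upper().replace(".", "_")


def compose_environment(settings: Dict[str, str]) -> List[str]:
    """Render settings as docker-compose `environment:` list entries."""
    return [f"{kibana_env_var(key)}={value}" for key, value in settings.items()]


if __name__ == "__main__":
    for line in compose_environment({"elasticsearch.hosts": "http://es-master:9200",
                                     "server.name": "kibana"}):
        print(f"      - {line}")
```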

5. Configure Logstash

```bash
cd /root/elk
mkdir -p logstash/conf
```

Create the config file `logstash/conf/logstash-filebeat.conf`:

```conf
input {
  # Receive events from Beats
  beats {
    # Listening port
    port => "5044"
    #ssl_certificate_authorities => ["/usr/share/logstash/ssl/ca.crt"]
    #ssl_certificate => "/usr/share/logstash/ssl/server.crt"
    #ssl_key => "/usr/share/logstash/ssl/server.key"
    #ssl_verify_mode => "force_peer"
  }
}

# Parsing/filter plugins; more than one can be listed
filter {
  # COMBINEDAPACHELOG matches Apache access-log lines; events it cannot parse are tagged _grokparsefailure
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
  geoip {
    source => "clientip"
  }
}

output {
  # Send events to Elasticsearch
  elasticsearch {
    hosts => ["http://****:9200"]
    index => "%{[fields][service]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
  }
  # Also print every event to stdout for debugging
  stdout {
    codec => rubydebug
  }
}
```
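The `index` setting builds each event's index name from three parts: the `fields.service` value sent by Filebeat, the Beat version that Filebeat puts in `@metadata`, and the event date. The date comes from `@timestamp` evaluated in UTC. In a UTC+8 deployment, logs written before 08:00 local time therefore land in the previous day's index. The following Python sketch approximates that expansion for one event. The event dict shape and the function name are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def index_name(event: dict) -> str:
    """Approximate how Logstash expands
    "%{[fields][service]}-%{[@metadata][version]}-%{+YYYY.MM.dd}" for one event."""
    service = event["fields"]["service"]      # set by the `fields:` block in filebeat.yml
    version = event["@metadata"]["version"]   # Beats put their own version here
    # %{+YYYY.MM.dd} formats @timestamp, which Logstash evaluates in UTC
    day = event["@timestamp"].astimezone(timezone.utc).strftime("%Y.%m.%d")
    return f"{service}-{version}-{day}"


if __name__ == "__main__":
    cst = timezone(timedelta(hours=8))  # e.g. a server in UTC+8
    event = {
        "fields": {"service": "205-api.aftersalesservices"},
        "@metadata": {"version": "7.9.1"},
        # 07:30 local time on Sept 21 is still Sept 20 in UTC
        "@timestamp": datetime(2020, 9, 21, 7, 30, tzinfo=cst),
    }
    print(index_name(event))  # 205-api.aftersalesservices-7.9.1-2020.09.20
```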

6. Start ELK

```bash
cd /root/elk
docker-compose up --build -d
```
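The containers take a while to form a cluster after `docker-compose up`. The following minimal Python sketch polls the standard `_cluster/health` endpoint until all three nodes have joined and the status is not `red` (red means some primary shard is unassigned). It assumes es-master is reachable on `localhost:9200` from where the script runs. The function names, timeout and poll interval are hypothetical choices.

```python
import json
import time
import urllib.request


def cluster_ready(health: dict, expected_nodes: int = 3) -> bool:
    """True once every node has joined and no primary shard is unassigned (status is not red)."""
    return (health.get("status") in ("green", "yellow")
            and health.get("number_of_nodes", 0) >= expected_nodes)


def wait_for_cluster(url: str = "http://localhost:9200/_cluster/health",
                     timeout: float = 120.0) -> dict:
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                health = json.load(resp)
            if cluster_ready(health):
                return health
        except OSError:
            pass  # node not up yet (connection refused/reset); URLError is an OSError
        if time.monotonic() >= deadline:
            raise TimeoutError(f"cluster not ready after {timeout}s")
        time.sleep(5)


if __name__ == "__main__":
    print(wait_for_cluster())
```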

Installing Filebeat

1. Download Filebeat

```bash
wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.9.1-linux-x86_64.tar.gz
tar -xf filebeat-7.9.1-linux-x86_64.tar.gz -C /usr/local/
cd /usr/local/
mv filebeat-7.9.1-linux-x86_64 filebeat
```

2. Configure filebeat.yml

```bash
vim /usr/local/filebeat/filebeat.yml
```

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /home/api.aftersalesservices/logs/*.log
    fields:
      service: "205-api.aftersalesservices"
      log_source: "springboot"
    # Lines that do not start with a date (e.g. stack traces) are appended to the previous line
    multiline.pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
    multiline.negate: true
    multiline.match: after

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.ilm.enabled: false

output.logstash:
  hosts: ["****:5044"]
```
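With `multiline.negate: true` and `multiline.match: after`, every line that does not start with a date is glued onto the preceding event. This keeps a Spring Boot stack trace together with the log line that produced it. The following Python sketch emulates that grouping with the same regex. It ignores Filebeat's `max_lines` and `timeout` limits, and the sample log lines are hypothetical.

```python
import re
from typing import Iterable, List

# Same regex as multiline.pattern in filebeat.yml: a line that starts with a date.
EVENT_START = re.compile(r"^[0-9]{4}-[0-9]{2}-[0-9]{2}")


def group_events(lines: Iterable[str]) -> List[str]:
    """Emulate multiline.negate: true + multiline.match: after.

    Lines that do NOT match the pattern (stack-trace lines, wrapped messages)
    are appended to the preceding event; a matching line starts a new event.
    Filebeat's max_lines and timeout limits are not modelled.
    """
    events: List[List[str]] = []
    for line in lines:
        if EVENT_START.match(line) or not events:
            events.append([line])
        else:
            events[-1].append(line)
    return ["\n".join(event) for event in events]


if __name__ == "__main__":
    # Hypothetical Spring Boot log lines in the default console format.
    sample = [
        "2020-09-20 10:00:00.123  INFO 1 --- [main] com.example.App : Started",
        "2020-09-20 10:00:01.456 ERROR 1 --- [main] com.example.App : Request failed",
        "java.lang.IllegalStateException: boom",
        "\tat com.example.App.handle(App.java:42)",
        "2020-09-20 10:00:02.000  INFO 1 --- [main] com.example.App : Recovered",
    ]
    for event in group_events(sample):
        print(repr(event))
```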

Check the configuration:

```bash
cd /usr/local/filebeat/ && ./filebeat test config
```

3. Register Filebeat as a systemd service

```bash
vim /usr/lib/systemd/system/filebeat.service
```

```ini
[Unit]
Description=Filebeat is a lightweight shipper for log data.
Documentation=https://www.elastic.co/products/beats/filebeat
Wants=network-online.target
After=network-online.target

[Service]
Environment="LOG_OPTS=-e"
Environment="CONFIG_OPTS=-c /usr/local/filebeat/filebeat.yml"
Environment="PATH_OPTS=-path.home /usr/local/filebeat -path.config /usr/local/filebeat -path.data /usr/local/filebeat/data -path.logs /usr/local/filebeat/logs"
ExecStart=/usr/local/filebeat/filebeat $LOG_OPTS $CONFIG_OPTS $PATH_OPTS
Restart=always

[Install]
WantedBy=multi-user.target
```
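In `ExecStart`, systemd replaces an unbraced `$VAR` with the variable's value split at whitespace, so each option becomes its own argument. The following Python sketch approximates the resulting argv from the `Environment=` lines above, which is handy for running the same command by hand in the foreground when the service will not start. It models only whitespace splitting, not systemd's full quoting rules. The names are hypothetical.

```python
from typing import Dict, List

# Environment= values copied from filebeat.service above.
UNIT_ENV: Dict[str, str] = {
    "LOG_OPTS": "-e",
    "CONFIG_OPTS": "-c /usr/local/filebeat/filebeat.yml",
    "PATH_OPTS": ("-path.home /usr/local/filebeat -path.config /usr/local/filebeat "
                  "-path.data /usr/local/filebeat/data -path.logs /usr/local/filebeat/logs"),
}


def exec_start_argv(env: Dict[str, str]) -> List[str]:
    """Approximate the argv systemd builds for
    ExecStart=/usr/local/filebeat/filebeat $LOG_OPTS $CONFIG_OPTS $PATH_OPTS.

    An unbraced $VAR is split on whitespace into separate words, which is what
    str.split() reproduces here (no quoting rules are modelled).
    """
    argv = ["/usr/local/filebeat/filebeat"]
    for name in ("LOG_OPTS", "CONFIG_OPTS", "PATH_OPTS"):
        argv.extend(env[name].split())
    return argv


if __name__ == "__main__":
    print(" ".join(exec_start_argv(UNIT_ENV)))
```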

4. Start the service

```bash
systemctl daemon-reload
systemctl enable filebeat
systemctl start filebeat
```

5. Check the service status

```bash
systemctl status filebeat.service
```

Kibana Configuration

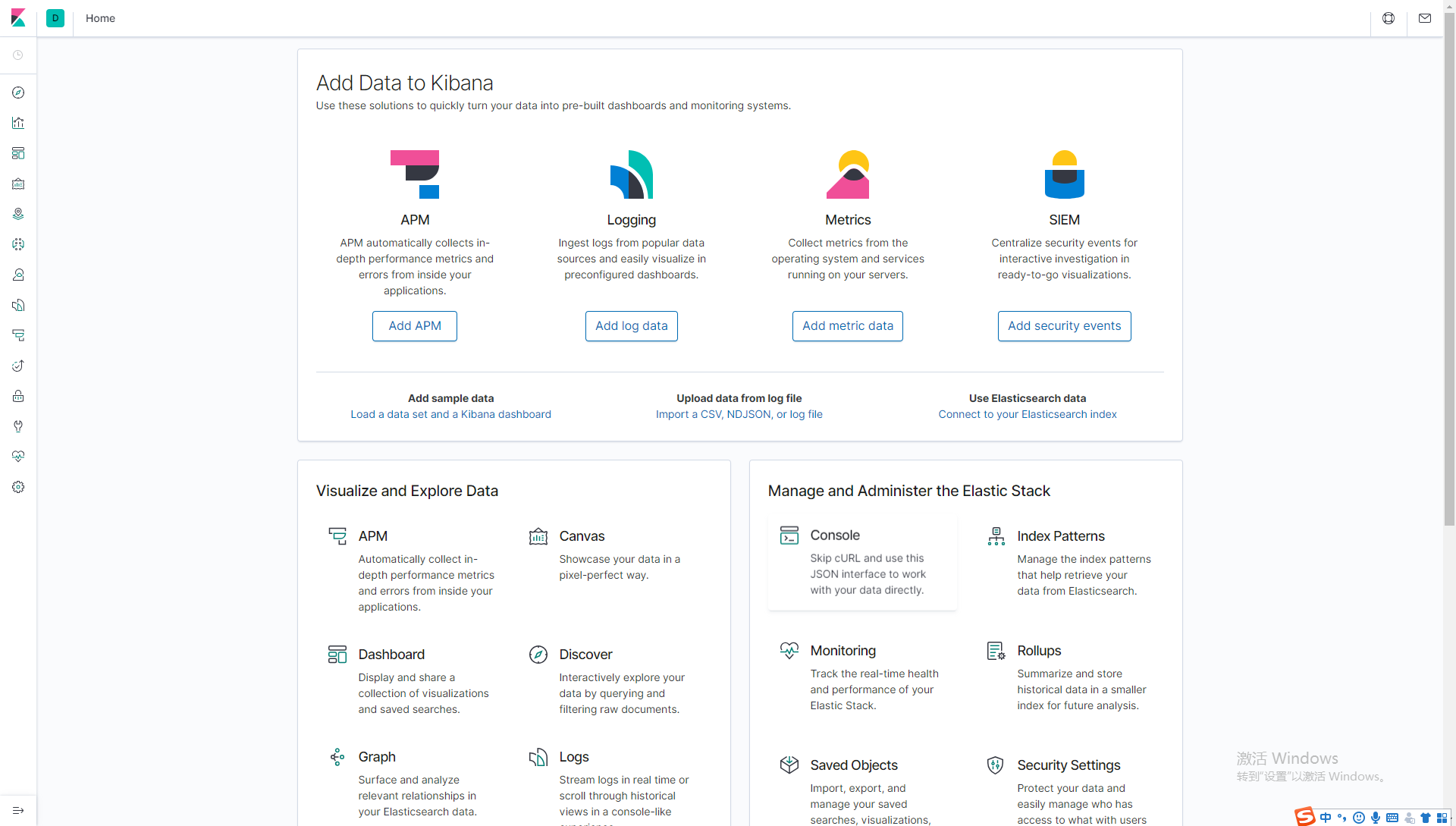

1. Open [Host-IP]:5601 in a browser to reach the Kibana log viewer.

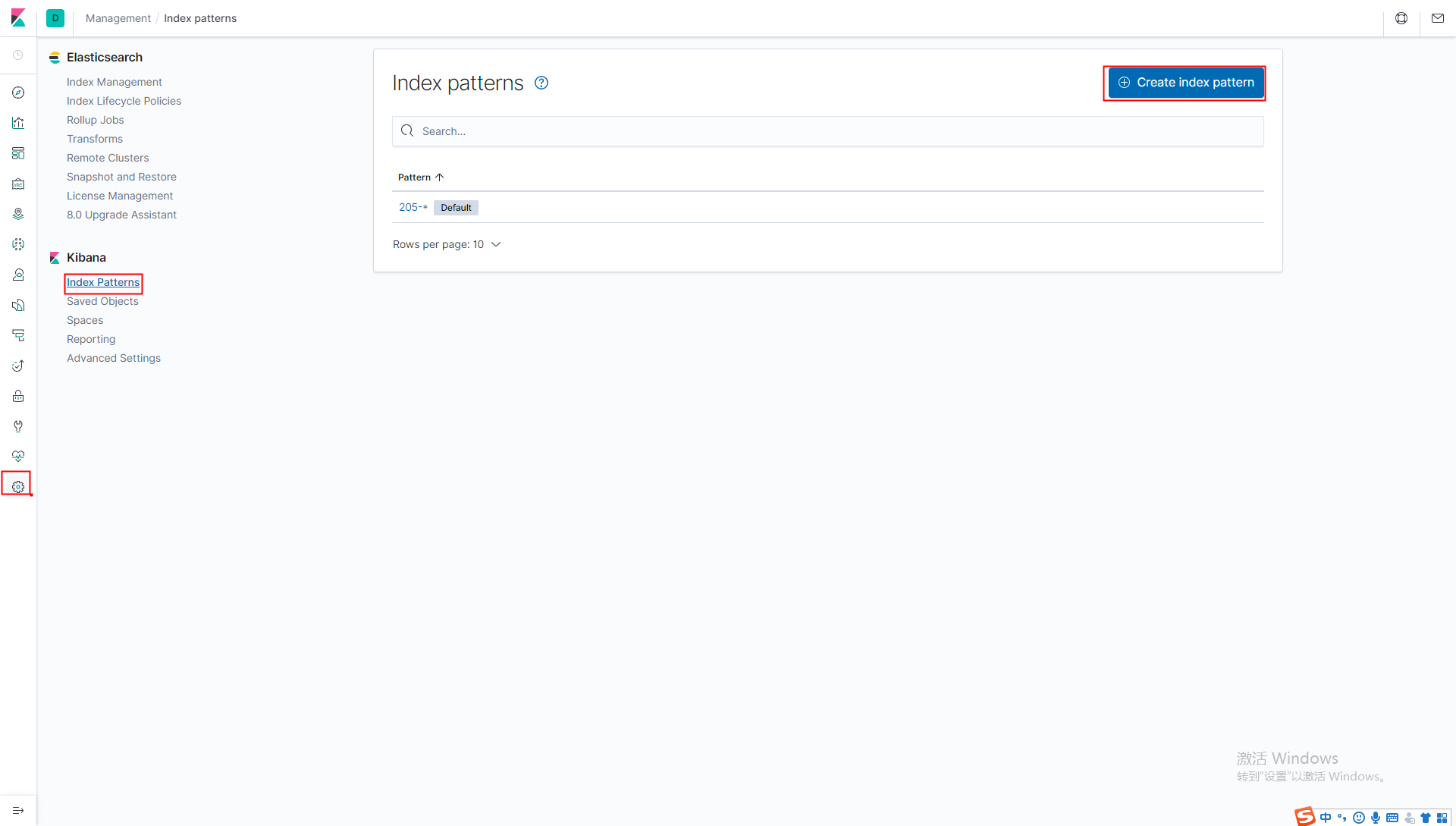

2. Go to "Index Patterns" under the "Management" menu.

3. Create an index pattern.

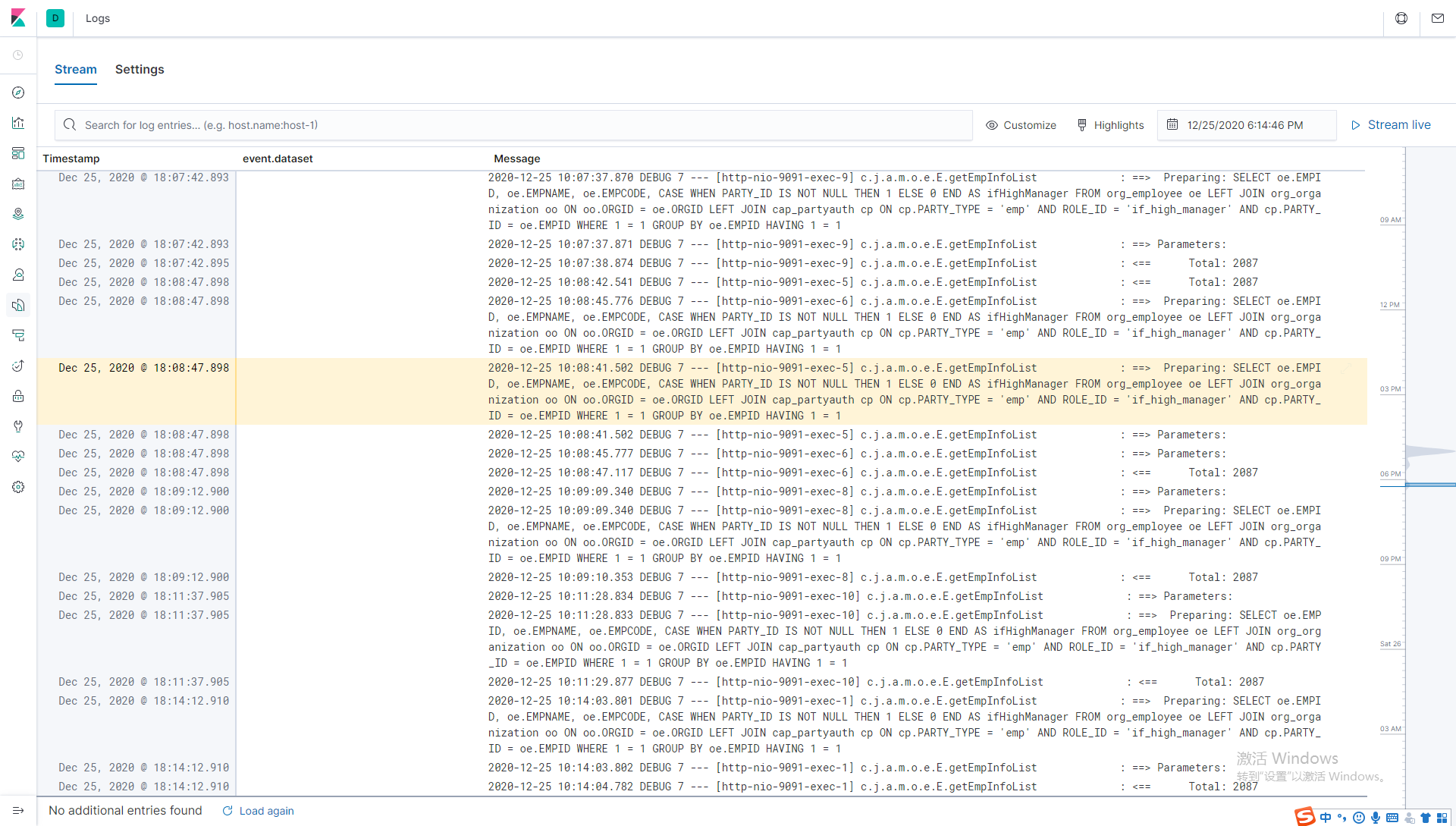

4. View the logs in the Logs app.

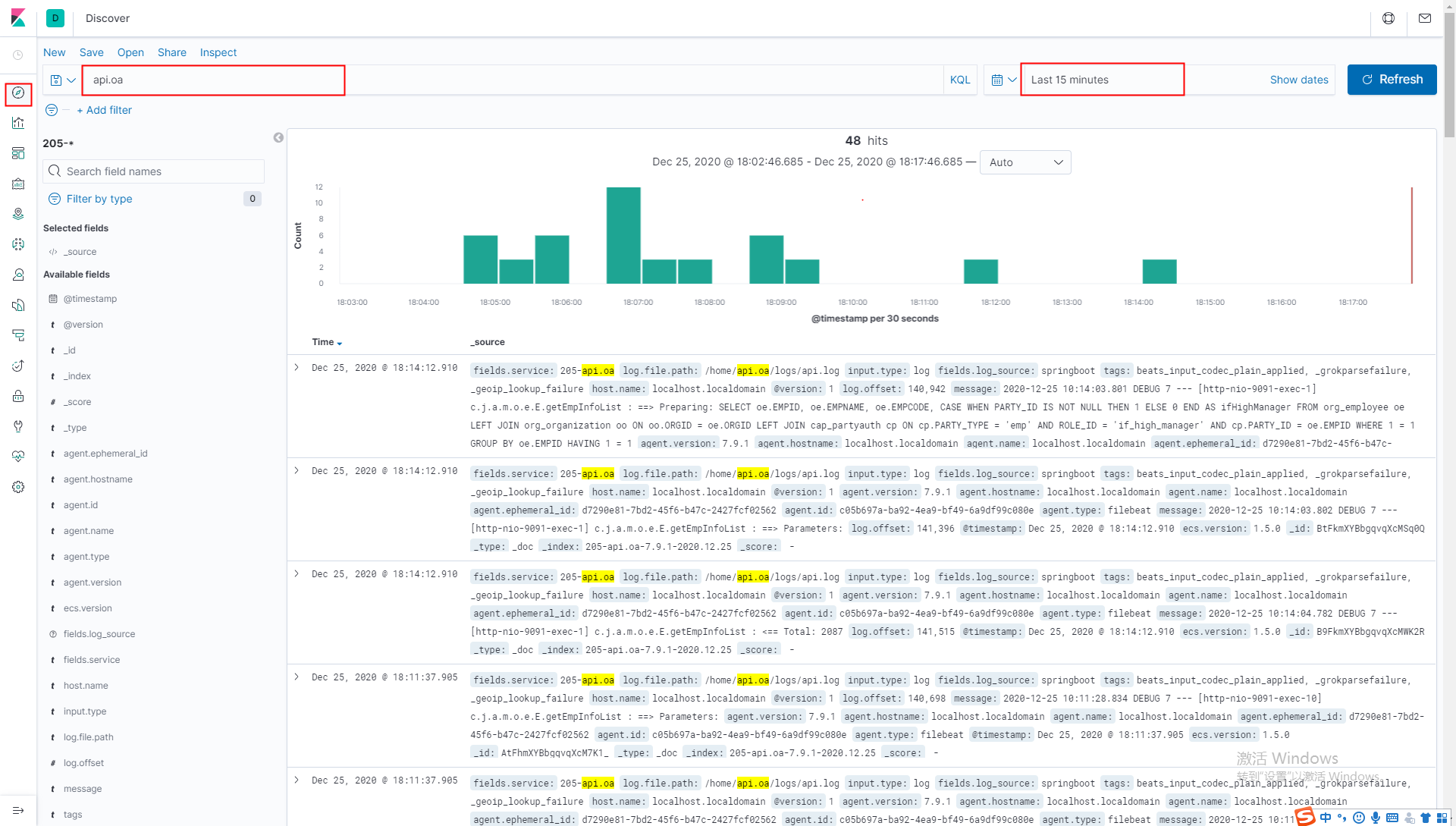

5. View the logs from the home page.
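An index pattern ending in `*` covers every daily index produced by the Logstash `index` setting for one service. The following Python sketch uses `fnmatchcase` to show which indices a pattern such as `205-api.aftersalesservices-*` would pick up. The index names are hypothetical examples. `fnmatch` also understands `?` and `[...]`, but this sketch relies only on `*`.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def matching_indices(pattern: str, indices: Iterable[str]) -> List[str]:
    """Return the index names a "*" wildcard pattern would cover (case-sensitive)."""
    return [name for name in indices if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    # Hypothetical index names as produced by the Logstash `index` setting.
    indices = [
        "205-api.aftersalesservices-7.9.1-2020.09.20",
        "205-api.aftersalesservices-7.9.1-2020.09.21",
        "other-service-7.9.1-2020.09.21",
        ".kibana_1",
    ]
    print(matching_indices("205-api.aftersalesservices-*", indices))
```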

浙公网安备 33010602011771号
