Writing a WordCount program in Spark (Scala)

Code demo

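```scala
package com.spark.wordcount

import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
// Implicit pair-RDD conversions (reduceByKey etc.); only required on Spark versions before 1.3
import org.apache.spark.SparkContext._

object WordCount {

  def main(args: Array[String]): Unit = {
    // Input file location on HDFS
    val inputFile = "hdfs://192.168.10.106:8020/tmp/README.txt"

    // Create a SparkConf, set the application name, and run locally with a single worker thread
    val conf = new SparkConf().setAppName("WordCount").setMaster("local")

    // Create the SparkContext, the entry point for submitting a Spark application
    val sc = new SparkContext(conf)

    // Read the file into an RDD of lines
    val textFile = sc.textFile(inputFile)

    // Transformations: split each line into words, map each word to (word, 1),
    // then sum the counts per word with reduceByKey
    val wordCount = textFile
      .flatMap(line => line.split(" "))
      .map(word => (word, 1))
      .reduceByKey((a, b) => a + b)

    // Action: print each (word, count) pair; in local mode the output appears on the driver console
    wordCount.foreach(println)

    // Stop the SparkContext to end the job
    sc.stop()
  }
}
```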
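To see what the `flatMap` → `map` → `reduceByKey` chain computes without an HDFS cluster or a Spark dependency, here is a minimal sketch of the same pipeline on plain Scala collections. The object name `LocalWordCount`, the method `countWords`, and the sample lines are hypothetical, chosen only for illustration; `groupBy` followed by a sum stands in for `reduceByKey`, which Spark performs across partitions with a shuffle.

```scala
// Hypothetical Spark-free sketch: mirrors the WordCount pipeline with plain Scala collections.
object LocalWordCount {

  // flatMap -> map -> (groupBy + sum, standing in for reduceByKey)
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" "))   // lines -> words
      .map(word => (word, 1))             // word -> (word, 1)
      .groupBy { case (word, _) => word } // collect all pairs sharing a key
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // add up the 1s per word

  def main(args: Array[String]): Unit = {
    val lines = Seq("hello spark", "hello scala")
    // Sort by word so the printed order is deterministic
    countWords(lines).toSeq.sortBy(_._1).foreach(println)
    // prints:
    // (hello,2)
    // (scala,1)
    // (spark,1)
  }
}
```

Unlike `wordCount.foreach(println)` in the Spark job, whose order depends on partitioning, this local version sorts the pairs before printing.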

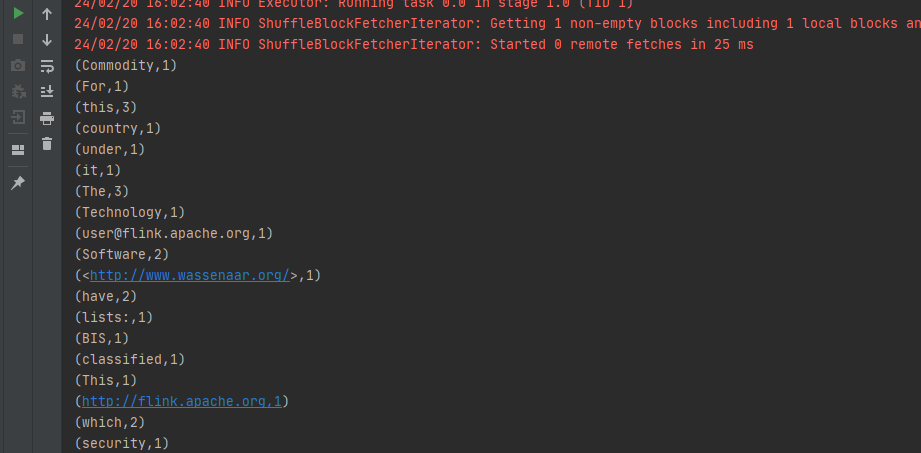

Run results
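One detail worth checking in output like this: `line.split(" ")` splits on single spaces only, so a line with leading or consecutive spaces yields empty strings, and those get counted as a "word" by `reduceByKey`. The sketch below compares the two splitting styles on a made-up line. The object `SplitCheck` and its helpers are hypothetical names for illustration; the `\\s+` regex plus `filter(_.nonEmpty)` is one common alternative, not necessarily what the original author intended.

```scala
// Hypothetical helpers comparing split(" ") with a whitespace-regex split.
object SplitCheck {

  // Splits on each single space: consecutive or leading spaces produce empty tokens
  def naiveWords(line: String): List[String] = line.split(" ").toList

  // Splits on runs of whitespace; filter drops the empty token left by leading whitespace
  def cleanWords(line: String): List[String] = line.split("\\s+").filter(_.nonEmpty).toList

  def main(args: Array[String]): Unit = {
    val line = "  Apache  Spark"
    println(naiveWords(line).mkString("[", "|", "]")) // prints [||Apache||Spark]
    println(cleanWords(line).mkString("[", "|", "]")) // prints [Apache|Spark]
  }
}
```

In the Spark job, the equivalent change would be `textFile.flatMap(line => line.split("\\s+").filter(_.nonEmpty))`; the rest of the pipeline stays the same.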

This article is from cnblogs. Author: whiteY. When reposting, please cite the original link: https://www.cnblogs.com/whiteY/p/18023425

Zhejiang Public Security filing No. 33010602011771