License plate detection with the object_detection API (Part 2)

I. Converting the dataset format

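My dataset contains 400 license plate images: 360 for training and 40 for testing, stored in the train and test folders under D:\PythonNotebook\models-master\research\object_detection\images. Because labelImg saves its annotations as XML files, they have to be converted to a CSV file first and then to TFRecord format. The code that converts the XML files to CSV is: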

    import glob
    import xml.etree.ElementTree as ET

    import pandas as pd

    path = 'images/test'


    def xml_to_csv(path):
        """Collect every labelled object under `path` into one DataFrame."""
        xml_list = []
        for xml_file in glob.glob(path + '/*.xml'):
            tree = ET.parse(xml_file)
            root = tree.getroot()
            for member in root.findall('object'):
                # Positional lookups rely on labelImg's tag order:
                # size = (width, height, depth), member[0] = name,
                # member[4] = bndbox = (xmin, ymin, xmax, ymax).
                value = (root.find('filename').text,
                         int(root.find('size')[0].text),
                         int(root.find('size')[1].text),
                         member[0].text,
                         int(member[4][0].text),
                         int(member[4][1].text),
                         int(member[4][2].text),
                         int(member[4][3].text)
                         )
                xml_list.append(value)
        column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']
        xml_df = pd.DataFrame(xml_list, columns=column_name)
        return xml_df


    def main():
        xml_df = xml_to_csv(path)
        xml_df.to_csv('data/plate_test_labels.csv', index=None)
        print('Successfully converted xml to csv.')


    # Without this guard main() is never called when the script is run.
    if __name__ == '__main__':
        main()

Save this as xml_to_csv.py in D:\PythonNotebook\models-master\research\object_detection. The script that converts the CSV file to a TFRecord file is generate_tfrecord.py, saved in the same folder:

    import io
    import os
    from collections import namedtuple

    import pandas as pd
    import tensorflow as tf  # uses the TensorFlow 1.x API (tf.gfile, tf.python_io)
    from PIL import Image

    from object_detection.utils import dataset_util


    # TO-DO replace this with label map
    def class_text_to_int(row_label):
        if row_label == 'plate':
            return 1
        return None


    def split(df, group):
        """Group the CSV rows so that each image yields one record."""
        data = namedtuple('data', ['filename', 'object'])
        gb = df.groupby(group)
        return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]


    def create_tf_example(group, path):
        with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:
            encoded_jpg = fid.read()
        encoded_jpg_io = io.BytesIO(encoded_jpg)
        image = Image.open(encoded_jpg_io)
        width, height = image.size

        filename = group.filename.encode('utf8')
        image_format = b'jpg'
        xmins = []
        xmaxs = []
        ymins = []
        ymaxs = []
        classes_text = []
        classes = []

        # Box coordinates are stored as fractions of the image size.
        for index, row in group.object.iterrows():
            xmins.append(row['xmin'] / width)
            xmaxs.append(row['xmax'] / width)
            ymins.append(row['ymin'] / height)
            ymaxs.append(row['ymax'] / height)
            classes_text.append(row['class'].encode('utf8'))
            classes.append(class_text_to_int(row['class']))

        tf_example = tf.train.Example(features=tf.train.Features(feature={
            'image/height': dataset_util.int64_feature(height),
            'image/width': dataset_util.int64_feature(width),
            'image/filename': dataset_util.bytes_feature(filename),
            'image/source_id': dataset_util.bytes_feature(filename),
            'image/encoded': dataset_util.bytes_feature(encoded_jpg),
            'image/format': dataset_util.bytes_feature(image_format),
            'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),
            'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),
            'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),
            'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),
            'image/object/class/text': dataset_util.bytes_list_feature(classes_text),
            'image/object/class/label': dataset_util.int64_list_feature(classes),
        }))
        return tf_example


    def main():
        writer = tf.python_io.TFRecordWriter('data/test.record')
        path = 'images/test'
        examples = pd.read_csv('data/plate_test_labels.csv')
        grouped = split(examples, 'filename')
        for group in grouped:
            tf_example = create_tf_example(group, path)
            writer.write(tf_example.SerializeToString())

        writer.close()
        output_path = 'data/test.record'
        print('Successfully created the TFRecords: {}'.format(output_path))


    if __name__ == '__main__':
        main()
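The script divides every pixel coordinate by the image width or height, so the record holds box corners as fractions between 0 and 1. Below is a minimal sketch of that step on its own with a range check added; the helper name `normalize_box` and the checks are illustrative assumptions and are not part of generate_tfrecord.py.

```python
def normalize_box(xmin, ymin, xmax, ymax, width, height):
    """Convert pixel box corners to fractions of the image size."""
    if width <= 0 or height <= 0:
        raise ValueError("image width and height must be positive")
    box = (xmin / width, ymin / height, xmax / width, ymax / height)
    # A labelling mistake (box outside the image, or swapped corners)
    # shows up here as a value outside [0, 1] or as min >= max.
    if not all(0.0 <= v <= 1.0 for v in box):
        raise ValueError("box lies outside the image: {}".format(box))
    if box[0] >= box[2] or box[1] >= box[3]:
        raise ValueError("box has no area: {}".format(box))
    return box


# Hypothetical 400x200 image with a plate at (100, 50)-(300, 150).
print(normalize_box(100, 50, 300, 150, 400, 200))  # (0.25, 0.25, 0.75, 0.75)
```

Running such a check over the CSV rows before writing the record is one way to catch a bad annotation early instead of during training.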

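Run the two scripts in turn. I put both the CSV files and the TFRecord files under object_detection/data. The code above only converts the test set; for the training set, change every occurrence of test in the code to train.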
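Instead of editing the script by hand for each split, the split name can be passed as a parameter. The sketch below is one way to do that using only the standard library; the function names `xml_rows` and `convert_split` are hypothetical, and it looks tags up by name (`size/width`, `bndbox/xmin`, and so on), assuming the usual labelImg Pascal VOC layout, rather than by position as xml_to_csv.py does.

```python
import csv
import glob
import os
import xml.etree.ElementTree as ET

COLUMNS = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']


def xml_rows(xml_dir):
    """Yield one row per labelled object, looking tags up by name."""
    for xml_file in sorted(glob.glob(os.path.join(xml_dir, '*.xml'))):
        root = ET.parse(xml_file).getroot()
        size = root.find('size')
        for obj in root.findall('object'):
            box = obj.find('bndbox')
            yield (
                root.find('filename').text,
                int(size.find('width').text),
                int(size.find('height').text),
                obj.find('name').text,
                int(box.find('xmin').text),
                int(box.find('ymin').text),
                int(box.find('xmax').text),
                int(box.find('ymax').text),
            )


def convert_split(split, image_root='images', out_dir='data'):
    """Write <out_dir>/plate_<split>_labels.csv from <image_root>/<split>/*.xml."""
    os.makedirs(out_dir, exist_ok=True)
    out_path = os.path.join(out_dir, 'plate_{}_labels.csv'.format(split))
    with open(out_path, 'w', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(COLUMNS)
        writer.writerows(xml_rows(os.path.join(image_root, split)))
    return out_path
```

Calling `convert_split('train')` and `convert_split('test')` from the object_detection folder would then write both CSV files with the same column layout that generate_tfrecord.py reads.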

II. Choosing a model and configuration file

On TensorFlow's object detection models page, pick a model. I used ssd_mobilenet_v1_coco.config: click ssd_mobilenet_v1_coco.config to open its source and copy the text. Under D:\PythonNotebook\models-master\research\object_detection create a folder named training, create a text file in it named ssd_mobilenet_v1_coco.config, paste the copied text into it, and make the following changes:

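1. Set each input_path to your own training and test TFRecord files (data/train.record and data/test.record here). Take care not to swap test and train.

2. Set num_classes to the actual number of classes, which is 1 in this post.

3. Reduce batch_size from the original 24 to 1 or 2, because of the machine's limited memory.

4. Delete the following two lines, so that training starts from scratch rather than from the pre-trained COCO checkpoint:

fine_tune_checkpoint: "ssd_mobilenet_v1_coco_11_06_2017/model.ckpt"

from_detection_checkpoint: true

5. Change num_examples in eval_config to 40, the number of samples in the test set.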

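6. In the object_detection/data folder, copy any .pbtxt file, name the copy plate.pbtxt, and replace its contents with:

    item {
      name: "plate"
      id: 1
    }

This gives the label's name and its numeric id. Set label_map_path to the path of plate.pbtxt, which is data/plate.pbtxt here.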
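The id in plate.pbtxt has to match the integer that class_text_to_int returns in generate_tfrecord.py (1 for "plate"). As a rough sketch, the label map text can also be generated from a list of class names so the two stay in step; `label_map_text` is a hypothetical helper, not part of the object_detection API.

```python
def label_map_text(class_names):
    """Build label-map text; ids start at 1 because 0 is reserved for background."""
    items = []
    for class_id, name in enumerate(class_names, start=1):
        items.append('item {{\n  name: "{}"\n  id: {}\n}}\n'.format(name, class_id))
    return '\n'.join(items)


print(label_map_text(['plate']))
```

For the single class used here, this prints the same four-line item shown above.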

The complete configuration file:

    # SSD with Mobilenet v1 configuration for MSCOCO Dataset.
    # Users should configure the fine_tune_checkpoint field in the train config as
    # well as the label_map_path and input_path fields in the train_input_reader and
    # eval_input_reader. Search for "PATH_TO_BE_CONFIGURED" to find the fields that
    # should be configured.

    model {
      ssd {
        num_classes: 1
        box_coder {
          faster_rcnn_box_coder {
            y_scale: 10.0
            x_scale: 10.0
            height_scale: 5.0
            width_scale: 5.0
          }
        }
        matcher {
          argmax_matcher {
            matched_threshold: 0.5
            unmatched_threshold: 0.5
            ignore_thresholds: false
            negatives_lower_than_unmatched: true
            force_match_for_each_row: true
          }
        }
        similarity_calculator {
          iou_similarity {
          }
        }
        anchor_generator {
          ssd_anchor_generator {
            num_layers: 6
            min_scale: 0.2
            max_scale: 0.95
            aspect_ratios: 1.0
            aspect_ratios: 2.0
            aspect_ratios: 0.5
            aspect_ratios: 3.0
            aspect_ratios: 0.3333
          }
        }
        image_resizer {
          fixed_shape_resizer {
            height: 300
            width: 300
          }
        }
        box_predictor {
          convolutional_box_predictor {
            min_depth: 0
            max_depth: 0
            num_layers_before_predictor: 0
            use_dropout: false
            dropout_keep_probability: 0.8
            kernel_size: 1
            box_code_size: 4
            apply_sigmoid_to_scores: false
            conv_hyperparams {
              activation: RELU_6,
              regularizer {
                l2_regularizer {
                  weight: 0.00004
                }
              }
              initializer {
                truncated_normal_initializer {
                  stddev: 0.03
                  mean: 0.0
                }
              }
              batch_norm {
                train: true,
                scale: true,
                center: true,
                decay: 0.9997,
                epsilon: 0.001,
              }
            }
          }
        }
        feature_extractor {
          type: 'ssd_mobilenet_v1'
          min_depth: 16
          depth_multiplier: 1.0
          conv_hyperparams {
            activation: RELU_6,
            regularizer {
              l2_regularizer {
                weight: 0.00004
              }
            }
            initializer {
              truncated_normal_initializer {
                stddev: 0.03
                mean: 0.0
              }
            }
            batch_norm {
              train: true,
              scale: true,
              center: true,
              decay: 0.9997,
              epsilon: 0.001,
            }
          }
        }
        loss {
          classification_loss {
            weighted_sigmoid {
            }
          }
          localization_loss {
            weighted_smooth_l1 {
            }
          }
          hard_example_miner {
            num_hard_examples: 3000
            iou_threshold: 0.99
            loss_type: CLASSIFICATION
            max_negatives_per_positive: 3
            min_negatives_per_image: 0
          }
          classification_weight: 1.0
          localization_weight: 1.0
        }
        normalize_loss_by_num_matches: true
        post_processing {
          batch_non_max_suppression {
            score_threshold: 1e-8
            iou_threshold: 0.6
            max_detections_per_class: 100
            max_total_detections: 100
          }
          score_converter: SIGMOID
        }
      }
    }

    train_config: {
      batch_size: 2
      optimizer {
        rms_prop_optimizer: {
          learning_rate: {
            exponential_decay_learning_rate {
              initial_learning_rate: 0.004
              decay_steps: 800720
              decay_factor: 0.95
            }
          }
          momentum_optimizer_value: 0.9
          decay: 0.9
          epsilon: 1.0
        }
      }
      # Note: The below line limits the training process to 200K steps, which we
      # empirically found to be sufficient enough to train the pets dataset. This
      # effectively bypasses the learning rate schedule (the learning rate will
      # never decay). Remove the below line to train indefinitely.
      num_steps: 200000
      data_augmentation_options {
        random_horizontal_flip {
        }
      }
      data_augmentation_options {
        ssd_random_crop {
        }
      }
    }

    train_input_reader: {
      tf_record_input_reader {
        input_path: "data/train.record"
      }
      label_map_path: "data/plate.pbtxt"
    }

    eval_config: {
      num_examples: 40
      # Note: The below line limits the evaluation process to 10 evaluations.
      # Remove the below line to evaluate indefinitely.
      max_evals: 10
    }

    eval_input_reader: {
      tf_record_input_reader {
        input_path: "data/test.record"
      }
      label_map_path: "data/plate.pbtxt"
      shuffle: false
      num_readers: 1
    }
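Since the edits are spread across a long file, it can help to read the changed fields back before training. The sketch below does this with regular expressions; it is a quick sanity check, not a real protobuf parser, and `config_summary` and the inline `sample` text are made up for illustration.

```python
import re


def config_summary(config_text):
    """Pull a few scalar fields out of pipeline-config text with regexes."""
    def first_int(field):
        pattern = r'^\s*{}:\s*(\d+)'.format(re.escape(field))
        match = re.search(pattern, config_text, re.M)
        return int(match.group(1)) if match else None

    return {
        'num_classes': first_int('num_classes'),
        'batch_size': first_int('batch_size'),
        'num_steps': first_int('num_steps'),
        'num_examples': first_int('num_examples'),
        'input_paths': re.findall(r'input_path:\s*"([^"]+)"', config_text),
        'label_maps': re.findall(r'label_map_path:\s*"([^"]+)"', config_text),
    }


# Shortened stand-in for the config; in practice read the real file instead.
sample = """
num_classes: 1
batch_size: 2
num_steps: 200000
input_path: "data/train.record"
label_map_path: "data/plate.pbtxt"
num_examples: 40
input_path: "data/test.record"
label_map_path: "data/plate.pbtxt"
"""
summary = config_summary(sample)
steps_per_epoch = 360 / summary['batch_size']  # 360 training images in this post
print(summary)
print('passes over the training set at num_steps:', summary['num_steps'] / steps_per_epoch)
```

With 360 training images and a batch size of 2, one pass over the data takes 180 steps, so the default num_steps of 200000 corresponds to roughly 1,100 passes. Training can be stopped much earlier once the loss levels off.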

The next step is to train the model.

III. Training the model

Open Anaconda Prompt, change to the object_detection folder (mine is D:\PythonNotebook\models-master\research\object_detection), and run the following command:

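python model_main.py --logtostderr --train_dir=training/ --pipeline_config_path=training/ssd_mobilenet_v1_coco.config

Note that --train_dir is the flag used by the older train.py script. As far as I know, model_main.py takes the output folder as --model_dir, so if the command is rejected because of an unknown or missing flag, use --model_dir=training/ instead.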

I ran into quite a few problems along the way. The fixes I found online are collected below:

1. No module named pycocotools

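The original pycocotools does not support Windows, but someone has modified the code to add Windows support (link here). Download and extract it, open Anaconda Prompt, change to cocoapi-master\PythonAPI under the extraction path, and run python setup.py install to install it.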
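To confirm that the install took effect before starting a long training run, the import can be checked from the same Anaconda environment. This is a small sketch using the standard library; `is_installed` is a hypothetical helper name.

```python
import importlib.util


def is_installed(module_name):
    """Return True if the top-level module can be found on sys.path."""
    return importlib.util.find_spec(module_name) is not None


print('pycocotools installed:', is_installed('pycocotools'))
```

If this prints False, the package was most likely installed into a different Python environment from the one running the check.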

2. TypeError: can't pickle dict_values objects

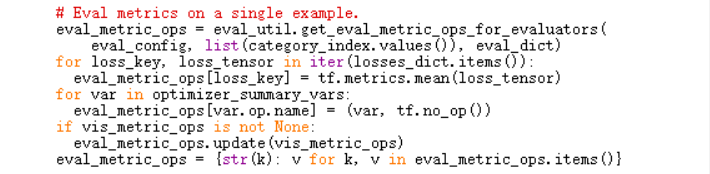

Find the object_detection folder that was copied into the Anaconda installation earlier (mine is D:\Anaconda3\Lib\site-packages\object_detection), open model_lib.py, and change the code as follows:

Wrap category_index.values() in list(), so that it reads list(category_index.values()).
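The reason this works is that in Python 3, dict.values() returns a view object rather than a list, and a view cannot be pickled or deep-copied, whereas a plain list can. The sketch below reproduces the failure outside the library; the shape of `category_index` here is assumed for illustration only.

```python
import copy

category_index = {1: {'id': 1, 'name': 'plate'}}  # shape assumed for illustration

try:
    copy.deepcopy(category_index.values())
except TypeError as err:
    print('deepcopy of dict_values failed:', err)

# Converting the view to a list first makes the copy succeed.
categories = copy.deepcopy(list(category_index.values()))
print(categories)
```

The exact wording of the TypeError differs between Python versions, but the cause is the same.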

Run the same model_main.py command again, and training now starts.

Parts of this post are based on the work of the blogger dy_guox, for which I am grateful.
