Chapter 7: Kubernetes Storage

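1. Why are storage volumes needed?

A containerized deployment typically deals with three kinds of data:

· Initial data required at startup, such as configuration files

· Temporary data produced while running, which must be shared between containers

· Persistent data produced while running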

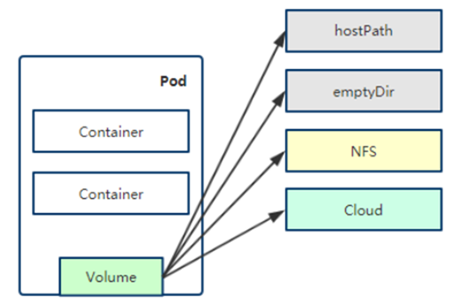

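2. Volume overview

A Kubernetes Volume gives containers the ability to mount external storage.

A Pod can use a Volume only after two things are set: the volume source (spec.volumes) and the mount point (spec.containers.volumeMounts).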
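The pairing of a volume source and a mount point can be checked mechanically. The following is a minimal sketch, assuming a Pod spec has already been loaded into a plain Python dict; the helper name `unresolved_mounts` is hypothetical and not part of any Kubernetes client library.

```python
def unresolved_mounts(pod_spec: dict) -> list:
    """Return (container, volume) pairs whose volumeMounts entry names no declared volume."""
    declared = {volume["name"] for volume in pod_spec.get("volumes", [])}
    missing = []
    for container in pod_spec.get("containers", []):
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append((container["name"], mount["name"]))
    return missing


# Illustrative spec: two containers share one emptyDir volume called "data".
pod_spec = {
    "containers": [
        {"name": "write", "volumeMounts": [{"name": "data", "mountPath": "/data"}]},
        {"name": "read", "volumeMounts": [{"name": "data", "mountPath": "/data"}]},
    ],
    "volumes": [{"name": "data", "emptyDir": {}}],
}

print(unresolved_mounts(pod_spec))  # []
```

An empty result means every mount point refers to a volume source declared at the Pod level, which is the condition the API server enforces when the Pod is created.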

The official documentation lists the supported volume types:

awsElasticBlockStore

azureDisk

azureFile

cephfs

cinder

configMap

csi

downwardAPI

emptyDir

fc (fibre channel)

flexVolume

flocker

gcePersistentDisk

gitRepo (deprecated)

glusterfs

hostPath

iscsi

local

nfs

persistentVolumeClaim

projected

portworxVolume

quobyte

rbd

scaleIO

secret

storageos

vsphereVolume

A rough classification:

1. Local, e.g. emptyDir, hostPath

2. Network, e.g. nfs, cephfs, glusterfs

3. Public cloud, e.g. azureDisk, awsElasticBlockStore

4. Kubernetes resources, e.g. secret, configMap

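3. Ephemeral volumes, node volumes, and network volumes

Ephemeral volume: emptyDir

Creates an empty volume and mounts it into the containers of a Pod. When the Pod is deleted, the volume is deleted with it.

Use case: sharing data between the containers of a Pod

Default working directory of emptyDir on the node:

/var/lib/kubelet/pods/<pod-id>/volumes/kubernetes.io~empty-dir

What kinds of workloads are suited to running multiple containers in one Pod?

In the manifest, emptyDir: {} is an empty value, so the default settings are used.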

    [root@k8s-m1 chp7]# cat emptyDir.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: emptydir
    spec:
      containers:
      - name: write
        image: centos
        command: ["bash","-c","for i in {1..100};do echo $i >> /data/hello;sleep 1;done"]
        volumeMounts:
        - name: data
          mountPath: /data
      - name: read
        image: centos
        command: ["bash","-c","tail -f /data/hello"]
        volumeMounts:
        - name: data
          mountPath: /data
      volumes:
      - name: data
        emptyDir: {}
    [root@k8s-m1 chp7]# kubectl apply -f emptyDir.yml
    pod/emptydir created
    [root@k8s-m1 chp7]# kubectl get po -o wide
    NAME       READY   STATUS    RESTARTS   AGE    IP               NODE     NOMINATED NODE   READINESS GATES
    emptydir   2/2     Running   1          116s   10.244.111.203   k8s-n2   <none>           <none>
    [root@k8s-n2 data]# docker ps |grep emptydir
    cbaf1b92b4a8   centos   "bash -c 'for i in {…"   About a minute ago   Up About a minute   k8s_write_emptydir_default_df40c32a-9f0a-44b7-9c17-89c9e9725da2_3
    bce0f2607620   centos   "bash -c 'tail -f /d…"   7 minutes ago   Up 7 minutes   k8s_read_emptydir_default_df40c32a-9f0a-44b7-9c17-89c9e9725da2_0
    0b804b8db60f   registry.aliyuncs.com/google_containers/pause:3.2   "/pause"   7 minutes ago   Up 7 minutes   k8s_POD_emptydir_default_df40c32a-9f0a-44b7-9c17-89c9e9725da2_0
    [root@k8s-n2 data]# pwd
    /var/lib/kubelet/pods/df40c32a-9f0a-44b7-9c17-89c9e9725da2/volumes/kubernetes.io~empty-dir/data
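The directory shown by pwd follows directly from the Pod UID and the volume name. As a small sketch, assuming the kubelet uses its default root directory /var/lib/kubelet (the function name `empty_dir_host_path` is made up for illustration):

```python
from pathlib import PurePosixPath

# Assumption: the kubelet runs with its default root directory.
KUBELET_PODS_DIR = PurePosixPath("/var/lib/kubelet/pods")


def empty_dir_host_path(pod_uid: str, volume_name: str) -> str:
    """Build the node-side directory that backs an emptyDir volume of a Pod."""
    return str(KUBELET_PODS_DIR / pod_uid / "volumes" / "kubernetes.io~empty-dir" / volume_name)


print(empty_dir_host_path("df40c32a-9f0a-44b7-9c17-89c9e9725da2", "data"))
```

The Pod UID is the same identifier that appears in the container names reported by docker ps, so the directory can be located on the node without entering the Pod.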

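Node volume: hostPath

Mounts a file or directory from the Node's filesystem into the containers of a Pod.

Use case: containers in a Pod need to access files on the host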

    [root@k8s-m1 chp7]# cat hostPath.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: host-path
    spec:
      containers:
      - name: centos
        image: centos
        command: ["bash","-c","sleep 36000"]
        volumeMounts:
        - name: data
          mountPath: /data
      volumes:
      - name: data
        hostPath:
          path: /tmp
          type: Directory
    [root@k8s-m1 chp7]# kubectl apply -f hostPath.yml
    pod/host-path created
    [root@k8s-m1 chp7]# kubectl exec host-path -it bash
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
    [root@host-path data]# pwd
    /data
    [root@host-path data]# touch test.txt
    [root@k8s-m1 ~]# ls -l /tmp/test.txt
    -rw-r--r--. 1 root root 5 8月 18 22:25 /tmp/test.txt

Network volume: NFS

Install the NFS utilities and export a directory from the NFS server:

    yum install nfs-utils -y
    [root@k8s-n2 ~]# mkdir /nfs/k8s -p
    [root@k8s-n2 ~]# vim /etc/exports
    [root@k8s-n2 ~]# cat /etc/exports
    /nfs/k8s 10.0.0.0/24(rw,no_root_squash)

no_root_squash: a client user who accesses the share as root keeps root privileges on it. Without this option (root_squash, the default), root is mapped to the anonymous user, whose UID and GID usually become those of nobody.

    [root@k8s-n2 ~]# systemctl restart nfs
    [root@k8s-n2 ~]# systemctl enable nfs
    Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.
    [root@k8s-n2 ~]# # Test
    [root@k8s-n1 ~]# mount -t nfs 10.0.0.25:/nfs/k8s /mnt/
    [root@k8s-n1 ~]# df -h |grep nfs
    10.0.0.25:/nfs/k8s   26G  5.8G   21G  23% /mnt

View the NFS exports:

    [root@k8s-n2 ~]# showmount -e
    Export list for k8s-n2:
    /nfs/k8s 10.0.0.0/24

Create the application:

    [root@k8s-m1 chp7]# cat nfs-deploy.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-nginx-deploy
    spec:
      selector:
        matchLabels:
          app: nfs-nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nfs-nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
            ports:
            - containerPort: 80
          volumes:
          - name: wwwroot
            nfs:
              server: 10.0.0.25
              path: /nfs/k8s
    [root@k8s-m1 chp7]# kubectl apply -f nfs-deploy.yml
    [root@k8s-m1 chp7]# kubectl get pod -o wide|grep nfs
    nfs-nginx-deploy-848f4597c9-658ws   1/1   Running   0   2m33s   10.244.111.205   k8s-n2   <none>   <none>
    nfs-nginx-deploy-848f4597c9-bzl5w   1/1   Running   0   2m33s   10.244.111.207   k8s-n2   <none>   <none>
    nfs-nginx-deploy-848f4597c9-wz422   1/1   Running   0   2m33s   10.244.111.208   k8s-n2   <none>   <none>

Create an index page on the NFS server; the same file is then visible inside the containers:

    [root@k8s-n2 ~]# echo "hello world" >/nfs/k8s/index.html
    [root@k8s-m1 chp7]# curl 10.244.111.205
    hello world
    [root@k8s-m1 chp7]# kubectl exec nfs-nginx-deploy-848f4597c9-wz422 -it -- bash
    root@nfs-nginx-deploy-848f4597c9-wz422:/# mount|grep k8s
    10.0.0.25:/nfs/k8s on /usr/share/nginx/html type nfs4 (rw,relatime,vers=4.1,rsize=524288,wsize=524288,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.0.0.25,local_lock=none,addr=10.0.0.25)

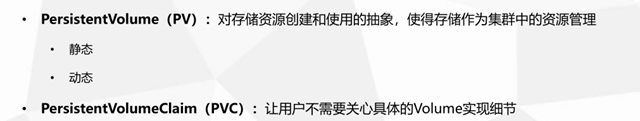

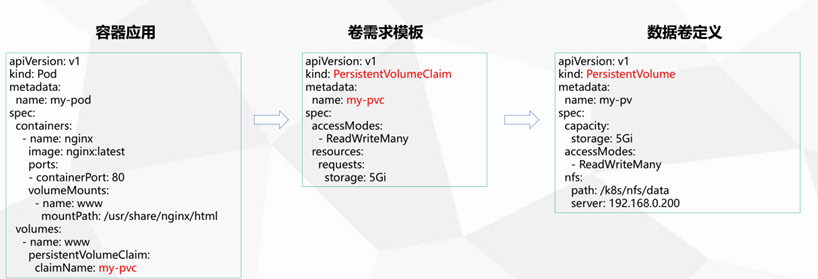

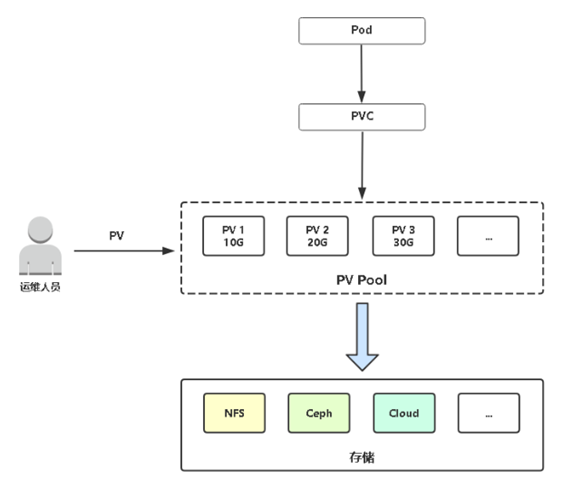

4. Persistent volume overview

5. Static PV provisioning

    [root@k8s-m1 chp7]# cat pv.yml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv0001
    spec:
      capacity:
        storage: 5Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteMany
      persistentVolumeReclaimPolicy: Recycle
      nfs:
        path: /nfs/k8s/pv0001
        server: 10.0.0.25
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv0002
    spec:
      capacity:
        storage: 15Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteMany
      persistentVolumeReclaimPolicy: Recycle
      nfs:
        path: /nfs/k8s/pv0002
        server: 10.0.0.25
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv0003
    spec:
      capacity:
        storage: 30Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteMany
      persistentVolumeReclaimPolicy: Recycle
      nfs:
        path: /nfs/k8s/pv0003
        server: 10.0.0.25

    [root@k8s-m1 chp7]# kubectl apply -f pv.yml
    persistentvolume/pv0001 created
    persistentvolume/pv0002 created
    persistentvolume/pv0003 created
    [root@k8s-m1 chp7]# kubectl get pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    pv0001   5Gi        RWX            Recycle          Available                                   53s
    pv0002   15Gi       RWX            Recycle          Available                                   53s
    pv0003   30Gi       RWX            Recycle          Available                                   53s

    [root@k8s-m1 chp7]# cat pvc-deploy.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pvc-ngnix
    spec:
      selector:
        matchLabels:
          app: pvc-nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: pvc-nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
            ports:
            - containerPort: 80
          volumes:
          - name: wwwroot
            persistentVolumeClaim:
              claimName: my-pvc
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 5Gi

    [root@k8s-m1 chp7]# kubectl apply -f pvc-deploy.yml
    deployment.apps/pvc-ngnix unchanged
    persistentvolumeclaim/my-pvc created
    [root@k8s-m1 chp7]# kubectl get pvc
    NAME     STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    my-pvc   Bound    pv0001   5Gi        RWX                           12m
    [root@k8s-n2 pv0001]# echo "hello pvc" >index.html
    [root@k8s-m1 chp7]# curl 10.244.111.209
    hello pvc

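AccessModes:

AccessModes set how a PV may be accessed, that is, the access rights a user application has to the storage resource. The following modes are available:

ReadWriteOnce (RWO): read-write, but mountable by a single node only

ReadOnlyMany (ROX): read-only, mountable by many nodes

ReadWriteMany (RWX): read-write, mountable by many nodes

RECLAIM POLICY: a PV currently supports three policies:

Retain: keep the data; an administrator must clean it up manually

Recycle: scrub the data in the PV, with an effect equivalent to running rm -rf /ifs/kubernetes/* (this policy is deprecated in current Kubernetes releases in favor of dynamic provisioning)

Delete: delete the backend storage associated with the PV as well

STATUS:

During its lifecycle a PV can be in one of four phases:

Available: the PV is free and not yet bound to any PVC

Bound: the PV has been bound to a PVC

Released: the PVC has been deleted, but the resource has not yet been reclaimed by the cluster

Failed: automatic reclamation of the PV failed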
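The reclaim policies and the PV phases interact once the bound PVC is deleted. The sketch below is a deliberately simplified model of that step, not the controller's actual code; the function name and the `scrub_ok` flag are hypothetical.

```python
from typing import Optional


def phase_after_claim_deleted(reclaim_policy: str, scrub_ok: bool = True) -> Optional[str]:
    """Return the PV phase once its PVC is deleted, or None if the PV object itself is removed.

    Simplified model: only the Recycle branch can fail here.
    """
    if reclaim_policy == "Retain":
        # Data is kept; the PV waits for an administrator to clean it up.
        return "Released"
    if reclaim_policy == "Recycle":
        # The volume is scrubbed and offered again, or marked Failed if scrubbing fails.
        return "Available" if scrub_ok else "Failed"
    if reclaim_policy == "Delete":
        # Both the PV object and its backend storage are removed.
        return None
    raise ValueError(f"unknown reclaim policy: {reclaim_policy}")


for policy in ("Retain", "Recycle", "Delete"):
    print(policy, "->", phase_after_claim_deleted(policy))
```

The Delete branch matches what a dynamically provisioned volume does: after its claim is removed, the PV no longer appears in kubectl get pv.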

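How a PV and a PVC are bound: matching is based mainly on access mode and capacity.

Default behavior: a claim may be given a PV larger than the requested capacity, but never a smaller one.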
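The matching rule can be sketched in a few lines. This is a simplified model that looks only at capacity and access modes and prefers the smallest sufficient PV; the real controller also takes storage class, selectors, and volume mode into account. The `PersistentVolume` dataclass and `pick_pv` function are hypothetical names, and the sample data mirrors the three static PVs of this chapter.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    access_modes: Set[str]


def pick_pv(pvs: List[PersistentVolume], request_gi: int, modes: Set[str]) -> Optional[PersistentVolume]:
    """Pick the smallest PV that is large enough and offers every requested access mode."""
    candidates = [
        pv for pv in pvs
        if pv.capacity_gi >= request_gi and modes <= pv.access_modes
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)


PVS = [
    PersistentVolume("pv0001", 5, {"ReadWriteMany"}),
    PersistentVolume("pv0002", 15, {"ReadWriteMany"}),
    PersistentVolume("pv0003", 30, {"ReadWriteMany"}),
]

print(pick_pv(PVS, 5, {"ReadWriteMany"}).name)   # pv0001
print(pick_pv(PVS, 10, {"ReadWriteMany"}).name)  # pv0002
print(pick_pv(PVS, 40, {"ReadWriteMany"}))       # None
```

A 10Gi request lands on the 15Gi volume, which illustrates the rule that a claim can receive more capacity than it asked for but never less; a 40Gi request matches nothing and the claim would stay Pending.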

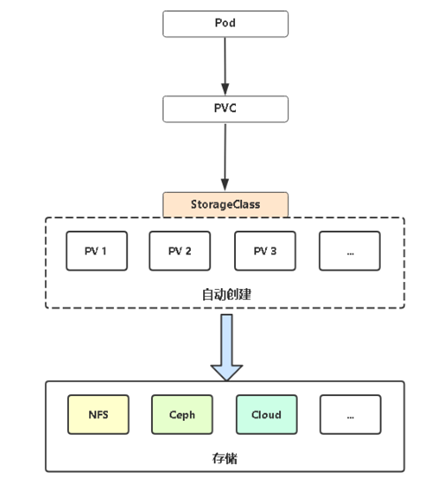

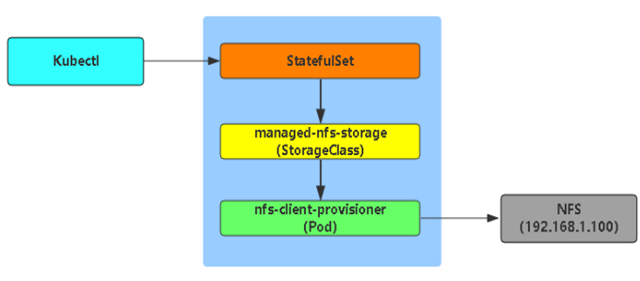

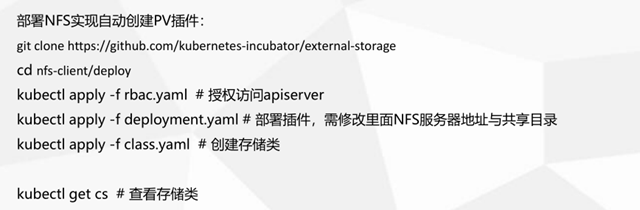

6. Dynamic PV provisioning

7. Example: an application storing data on persistent volumes

RBAC manifest for the provisioner (applied later as rbac.yaml):

    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

    [root@k8s-m1 nfs-client]# cat deployment.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 10.0.0.25
                - name: NFS_PATH
                  value: /nfs/k8s
          volumes:
            - name: nfs-client-root
              nfs:
                server: 10.0.0.25
                path: /nfs/k8s

    [root@k8s-m1 nfs-client]# cat class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME'
    parameters:
      archiveOnDelete: "true"

    [root@k8s-m1 chp7]# cat dynamic-pvc.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: dynamic-pvc-ngnix
    spec:
      selector:
        matchLabels:
          app: dynamic-pvc-nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: dynamic-pvc-nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
            ports:
            - containerPort: 80
          volumes:
          - name: wwwroot
            persistentVolumeClaim:
              claimName: dynamic-pvc
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: dynamic-pvc
    spec:
      storageClassName: managed-nfs-storage
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 20Gi

    [root@k8s-m1 nfs-client]# kubectl apply -f rbac.yaml
    serviceaccount/nfs-client-provisioner created
    [root@k8s-m1 nfs-client]# kubectl apply -f deployment.yaml
    serviceaccount/nfs-client-provisioner created
    deployment.apps/nfs-client-provisioner created
    [root@k8s-m1 nfs-client]# kubectl apply -f class.yaml
    storageclass.storage.k8s.io/managed-nfs-storage created
    [root@k8s-m1 nfs-client]# kubectl get sc
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  2s
    [root@k8s-m1 chp7]# kubectl apply -f dynamic-pvc.yml
    deployment.apps/dynamic-pvc-ngnix created
    persistentvolumeclaim/dynamic-pvc created
    [root@k8s-m1 chp7]# kubectl get po |grep dynamic-pvc
    dynamic-pvc-ngnix-d4d789c68-bbjj9   1/1   Running   0   51s
    dynamic-pvc-ngnix-d4d789c68-l8cjc   1/1   Running   0   51s
    dynamic-pvc-ngnix-d4d789c68-mdm6j   1/1   Running   0   51s
    [root@k8s-m1 chp7]# kubectl get pvc
    NAME          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    dynamic-pvc   Bound    pvc-2c7a9c3b-84ef-452b-9322-2d3530c01014   20Gi       RWX            managed-nfs-storage   74s
    [root@k8s-m1 chp7]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                 STORAGECLASS          REASON   AGE
    pv0001                                     5Gi        RWX            Recycle          Available                                                        3h29m
    pv0002                                     15Gi       RWX            Recycle          Available                                                        3h29m
    pv0003                                     30Gi       RWX            Recycle          Available                                                        3h29m
    pvc-2c7a9c3b-84ef-452b-9322-2d3530c01014   20Gi       RWX            Delete           Bound       default/dynamic-pvc   managed-nfs-storage            110s
    [root@k8s-n2 ~]# echo dynamic-pvc >/nfs/k8s/default-dynamic-pvc-pvc-2c7a9c3b-84ef-452b-9322-2d3530c01014/index.html
    [root@k8s-m1 chp7]# curl 10.244.111.217
    dynamic-pvc
    [root@k8s-m1 chp7]# curl 10.244.111.220
    dynamic-pvc
    [root@k8s-m1 chp7]# kubectl delete -f dynamic-pvc.yml
    deployment.apps "dynamic-pvc-ngnix" deleted
    persistentvolumeclaim "dynamic-pvc" deleted
    [root@k8s-m1 chp7]# kubectl get pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    pv0001   5Gi        RWX            Recycle          Available                                   3h34m
    pv0002   15Gi       RWX            Recycle          Available                                   3h34m
    pv0003   30Gi       RWX            Recycle          Available                                   3h34m

Archive on delete: because the StorageClass sets archiveOnDelete: "true", the data directory is renamed with an archived- prefix instead of being removed.

    [root@k8s-n2 ~]# ls /nfs/k8s/
    archived-default-dynamic-pvc-pvc-2c7a9c3b-84ef-452b-9322-2d3530c01014  index.html  pv0001  pv0002  pv0003
    [root@k8s-m1 chp7]# cat nfs-client/class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME'
    parameters:
      archiveOnDelete: "true"
    [root@k8s-m1 chp7]# kubectl get pv --sort-by={.spec.capacity.storage}
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    pv0001   5Gi        RWX            Recycle          Available                                   3h41m
    pv0002   15Gi       RWX            Recycle          Available                                   3h41m
    pv0003   30Gi       RWX            Recycle          Available                                   3h41m
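The directory names seen on the NFS server in the transcript follow a fixed pattern: namespace, PVC name, and PV name joined by hyphens, with an archived- prefix after deletion. The sketch below reproduces that naming as observed above; the function names are hypothetical, and the pattern is inferred from this transcript rather than taken from the provisioner's source.

```python
def provisioned_dir(namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory created under the NFS export for a dynamically provisioned PV."""
    return f"{namespace}-{pvc_name}-{pv_name}"


def archived_dir(directory: str) -> str:
    """Name of the directory after the claim is deleted with archiveOnDelete set to "true"."""
    return f"archived-{directory}"


pv_name = "pvc-2c7a9c3b-84ef-452b-9322-2d3530c01014"
live = provisioned_dir("default", "dynamic-pvc", pv_name)
print(live)
print(archived_dir(live))
```

Knowing the pattern makes it easy to find the backing directory of a claim on the NFS server, or to recover archived data after a claim was deleted by mistake.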

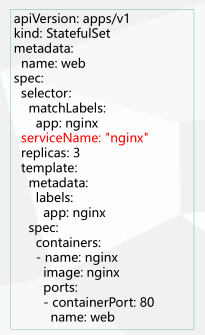

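8. Deploying stateful applications: the StatefulSet controller

StatefulSet:

• Deploys stateful applications

• Gives each Pod an independent lifecycle, preserving Pod startup order and uniqueness

1. Stable, unique network identifiers and persistent storage

2. Ordered, graceful deployment, scaling, deletion, and termination

3. Ordered rolling updates

Use case: databases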

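StatefulSet: stable network ID

Headless Service: also a Service, except that spec.clusterIP is set to None, meaning no ClusterIP is needed.

The StatefulSet spec has an additional serviceName: "nginx" field, which tells the StatefulSet controller to use the headless Service named nginx to guarantee each Pod's identity.

A record format for a ClusterIP Service: <service-name>.<namespace-name>.svc.cluster.local

A record format for a headless Service (ClusterIP=None): <statefulsetName-index>.<service-name>.<namespace-name>.svc.cluster.local

Example: web-0.nginx.default.svc.cluster.local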
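The two record formats translate directly into string templates. A minimal sketch, assuming the default cluster domain cluster.local; the function names are invented for illustration.

```python
def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """A record of a regular ClusterIP Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def statefulset_pod_dns_name(statefulset: str, ordinal: int, service: str, namespace: str,
                             cluster_domain: str = "cluster.local") -> str:
    """A record of one StatefulSet Pod behind a headless Service."""
    return f"{statefulset}-{ordinal}.{service_dns_name(service, namespace, cluster_domain)}"


print(statefulset_pod_dns_name("web", 0, "nginx", "default"))
# web-0.nginx.default.svc.cluster.local
```

Because the ordinal is part of the name, a client can address a specific replica (for example the first member of a database cluster) even after the Pod is rescheduled and receives a new IP.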

    [root@k8s-m1 chp8]# cat statefulset.yml
    apiVersion: v1
    kind: Service
    metadata:
      name: handless-nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "handless-nginx"
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: web

    [root@k8s-m1 chp8]# kubectl get po|grep web-
    web-0   1/1   Running   0   5h49m
    web-1   1/1   Running   0   5h56m
    web-2   1/1   Running   0   5h55m
    [root@k8s-m1 chp8]# kubectl get ep
    NAME             ENDPOINTS                                               AGE
    handless-nginx   10.244.111.218:80,10.244.111.226:80,10.244.111.227:80   64s
    [root@k8s-m1 chp8]# kubectl run dns-test -it --rm --image=busybox:1.28.4 -- sh
    If you don't see a command prompt, try pressing enter.
    / # nslookup handless-nginx
    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local
    Name:      handless-nginx
    Address 1: 10.244.111.226 web-2.handless-nginx.default.svc.cluster.local
    Address 2: 10.244.111.218 10-244-111-218.nginx.default.svc.cluster.local
    Address 3: 10.244.111.227 10-244-111-227.nginx.default.svc.cluster.local

StatefulSet: stable storage

    [root@k8s-m1 chp8]# cat statefulset.yml
    apiVersion: v1
    kind: Service
    metadata:
      name: statefulset-nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: statefulset-web
    spec:
      selector:
        matchLabels:
          app: nginx # has to match .spec.template.metadata.labels
      serviceName: "statefulset-nginx"
      replicas: 3 # by default is 1
      template:
        metadata:
          labels:
            app: nginx # has to match .spec.selector.matchLabels
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: web-statefulset
            volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: www
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: managed-nfs-storage
          resources:
            requests:
              storage: 1Gi

Here storageClassName: managed-nfs-storage refers to the StorageClass created earlier:

    [root@k8s-m1 chp8]# kubectl get sc
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  14h

Result of the creation:

    [root@k8s-m1 chp8]# kubectl get pv|grep state
    pvc-5fdecf86-1e71-4e5d-b9d8-be69ec0496a2   1Gi   RWO   Delete   Bound   default/www-statefulset-web-2   managed-nfs-storage   2m54s
    pvc-67cc7471-f012-4c17-90e2-82b9618cd33c   1Gi   RWO   Delete   Bound   default/www-statefulset-web-1   managed-nfs-storage   3m33s
    pvc-70268f3f-5053-411e-807a-68245b513f0a   1Gi   RWO   Delete   Bound   default/www-statefulset-web-0   managed-nfs-storage   3m51s
    [root@k8s-m1 chp8]# kubectl get pvc|grep state
    www-statefulset-web-0   Bound   pvc-70268f3f-5053-411e-807a-68245b513f0a   1Gi   RWO   managed-nfs-storage   3m58s
    www-statefulset-web-1   Bound   pvc-67cc7471-f012-4c17-90e2-82b9618cd33c   1Gi   RWO   managed-nfs-storage   3m40s
    www-statefulset-web-2   Bound   pvc-5fdecf86-1e71-4e5d-b9d8-be69ec0496a2   1Gi   RWO   managed-nfs-storage   3m1s

Each Pod's data is independent:

    [root@k8s-n2 k8s]# echo web-0 >default-www-statefulset-web-0-pvc-70268f3f-5053-411e-807a-68245b513f0a/index.html
    [root@k8s-n2 k8s]# echo web-1 >default-www-statefulset-web-1-pvc-67cc7471-f012-4c17-90e2-82b9618cd33c/index.html
    [root@k8s-n2 k8s]# echo web-2 >default-www-statefulset-web-2-pvc-5fdecf86-1e71-4e5d-b9d8-be69ec0496a2/index.html
    [root@k8s-m1 chp8]# curl 10.244.111.227
    web-0
    [root@k8s-m1 chp8]# curl 10.244.111.218
    web-1
    [root@k8s-m1 chp8]# curl 10.244.111.226
    web-2
    [root@k8s-m1 chp8]# kubectl run dns-test -it --rm --image=busybox:1.28.4 -- sh
    If you don't see a command prompt, try pressing enter.
    / # nslookup statefulset-nginx
    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local
    Name:      statefulset-nginx
    Address 1: 10.244.111.221 web-1.nginx.default.svc.cluster.local
    Address 2: 10.244.111.216 web-2.nginx.default.svc.cluster.local
    Address 3: 10.244.111.218 10-244-111-218.nginx.default.svc.cluster.local
    Address 4: 10.244.111.227 10-244-111-227.nginx.default.svc.cluster.local
    Address 5: 10.244.111.226 10-244-111-226.nginx.default.svc.cluster.local
    Address 6: 10.244.111.222 10-244-111-222.statefulset-nginx.default.svc.cluster.local

StatefulSet volumes are created from volumeClaimTemplates (volume claim templates). For every Pod, the StatefulSet creates a numbered PVC from the template, and each of those PVCs is bound to its own PersistentVolume.
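The numbered PVC names in the kubectl get pvc output are built from the template name, the StatefulSet name, and the Pod ordinal. A short sketch of that naming, with hypothetical function names:

```python
from typing import List


def statefulset_pvc_name(template: str, statefulset: str, ordinal: int) -> str:
    """Name of the PVC generated from a volumeClaimTemplate for one Pod."""
    return f"{template}-{statefulset}-{ordinal}"


def statefulset_pvc_names(template: str, statefulset: str, replicas: int) -> List[str]:
    """Names of all PVCs for a StatefulSet with the given replica count."""
    return [statefulset_pvc_name(template, statefulset, i) for i in range(replicas)]


print(statefulset_pvc_names("www", "statefulset-web", 3))
# ['www-statefulset-web-0', 'www-statefulset-web-1', 'www-statefulset-web-2']
```

Since the name depends only on the ordinal, a recreated Pod with the same ordinal picks up the same PVC, which is what keeps its data stable across restarts.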

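The difference between stateless and stateful applications comes down to two things: network and storage.

• Stateless applications, such as nginx, do not need to care about storage or network identity. They can be rescheduled anywhere, and every replica is equivalent.

• Stateful applications, such as etcd, ZooKeeper, or a MySQL primary/replica setup: the replicas are not equivalent.

What information is unique to each etcd node?

1. IP address and hostname (a headless Service maintains a fixed DNS name for each Pod)

2. Port

3. Node name, distinguished by hostname

The main problems to solve when deploying a stateful distributed application on Kubernetes:

1. How to automatically generate an independent configuration file for each instance from a single image

2. The topology of the application's deployment in Kubernetes

A user is identified by the user and groups contained in the client certificate. CN: user name; O: user group.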

StatefulSet: summary

How a StatefulSet differs from a Deployment: its Pods have an identity.

The three elements of identity:

• Domain name

• Hostname

• Storage (PVC)

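9. Storing application configuration: ConfigMap

A ConfigMap is an API object used to store non-confidential data in key-value pairs. It can be consumed as environment variables, command-line arguments, or configuration files in a volume.

A ConfigMap decouples environment-specific configuration from container images, which makes application configuration easy to change. When you need to store confidential information, use a Secret object.

A ConfigMap provides neither secrecy nor encryption. If the data you want to store is confidential, use a Secret, or another third-party tool that keeps your data private, rather than a ConfigMap.

After a ConfigMap is created, its data is stored in Kubernetes (in etcd) and is then referenced when a Pod is created.

Use case: application configuration

A Pod can consume ConfigMap data in two ways:

• Injection as environment variables

• Mounting as a volume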

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: game-demo
    data:
      # property-like keys; each key maps to a simple value
      player_initial_lives: "3"
      ui_properties_file_name: "user-interface.properties"
      #
      # file-like keys
      game.properties: |
        enemy.types=aliens,monsters
        player.maximum-lives=5
      user-interface.properties: |
        color.good=purple
        color.bad=yellow
        allow.textmode=true
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: configmap-demo-pod
    spec:
      containers:
        - name: configmap-demo
          image: nginx
          env:
            # Define the environment variables
            - name: PLAYER_INITIAL_LIVES # note that this name differs from the key name in the ConfigMap
              valueFrom:
                configMapKeyRef:
                  name: game-demo           # the ConfigMap this value comes from
                  key: player_initial_lives # the key to read
            - name: UI_PROPERTIES_FILE_NAME
              valueFrom:
                configMapKeyRef:
                  name: game-demo
                  key: ui_properties_file_name
          volumeMounts:
          - name: config
            mountPath: "/config"
            readOnly: true
      volumes:
        # Volumes are set at the Pod level and then mounted into containers inside the Pod
        - name: config
          configMap:
            # Name of the ConfigMap to mount
            name: game-demo

    # ConfigMap usage example
    [root@k8s-m1 chp8]# kubectl apply -f configmap.yml
    configmap/game-demo unchanged
    pod/configmap-demo-pod created
    [root@k8s-m1 chp8]# kubectl get po -o wide |grep config
    configmap-demo-pod   1/1   Running   0   85s   10.244.111.223   k8s-n2   <none>   <none>
    [root@k8s-m1 chp8]# kubectl get configmap
    NAME        DATA   AGE
    game-demo   4      6m49s
    # Inspect the ConfigMap data inside the Pod
    [root@k8s-m1 chp8]# kubectl exec configmap-demo-pod -it -- bash
    root@configmap-demo-pod:/# ls /config/
    game.properties  player_initial_lives  ui_properties_file_name  user-interface.properties
    root@configmap-demo-pod:/# cat /config/game.properties
    enemy.types=aliens,monsters
    player.maximum-lives=5
    root@configmap-demo-pod:/# cat /config/player_initial_lives
    3
    root@configmap-demo-pod:/# cat /config/ui_properties_file_name
    user-interface.properties
    root@configmap-demo-pod:/# cat /config/user-interface.properties
    color.good=purple
    color.bad=yellow
    allow.textmode=true
    root@configmap-demo-pod:/# echo $PLAYER_INITIAL_LIVES
    3
    root@configmap-demo-pod:/# echo $UI_PROPERTIES_FILE_NAME
    user-interface.properties
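From the application's point of view, both consumption paths are ordinary reads: one from the process environment, one from files under the mount path. The following is a rough sketch of an application-side loader, assuming the variable name PLAYER_INITIAL_LIVES and the /config mount path used in this chapter; the function names and the fallback value "3" are assumptions made for illustration.

```python
import os
from pathlib import Path
from typing import Mapping


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def load_settings(environ: Mapping[str, str] = os.environ, config_dir: str = "/config") -> dict:
    """Combine a value injected as an environment variable with a file mounted from the ConfigMap."""
    # Assumed fallback of "3" when the variable is not injected.
    settings = {"initial_lives": int(environ.get("PLAYER_INITIAL_LIVES", "3"))}
    game_file = Path(config_dir) / "game.properties"
    if game_file.exists():
        settings.update(parse_properties(game_file.read_text()))
    return settings


print(parse_properties("enemy.types=aliens,monsters\nplayer.maximum-lives=5\n"))
```

One practical difference between the two paths: environment variables are fixed when the container starts, whereas files in a mounted ConfigMap volume are refreshed by the kubelet after the ConfigMap changes, so an application that re-reads the file can pick up new values without a restart.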

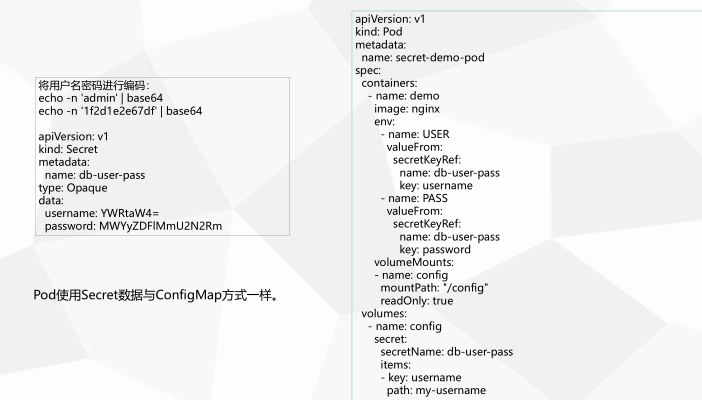

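10. Storing sensitive data: Secret

The Secret object type holds sensitive information such as passwords, OAuth tokens, and SSH keys. Putting this information in a Secret is safer and more flexible than putting it in a Pod definition or a container image.

A Secret is similar to a ConfigMap; the difference is that a Secret is meant for sensitive data, and every value in its data field must be base64-encoded.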
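Base64 is an encoding, not encryption: anyone who can read the Secret object can decode its values. The sketch below shows the round trip with Python's standard base64 module; the helper names are made up for this example.

```python
import base64


def encode_secret_value(plain: str) -> str:
    """Encode a value the way it appears in the data field of a Secret manifest."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Recover the original value from its base64 form."""
    return base64.b64decode(encoded).decode("utf-8")


print(encode_secret_value("admin"))      # YWRtaW4=
print(decode_secret_value("YWRtaW4="))   # admin
```

When a Secret is created with kubectl create secret, the encoding is done automatically; manual encoding is only needed when writing the data field of a manifest by hand.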

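Use case: credentials

kubectl create secret supports three types of data:

• docker-registry: stores image registry credentials

• generic: created from a file, a directory, or a literal string, e.g. to store a username and password

• tls: stores certificates, e.g. an HTTPS certificate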

    [root@k8s-m1 chp8]# vim secret.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: secret-env-pod
    spec:
      containers:
      - name: mycontainer
        image: nginx
        env:
          - name: USER
            valueFrom:
              secretKeyRef:
                name: db-user-pass
                key: username
          - name: PASSWORD
            valueFrom:
              secretKeyRef:
                name: db-user-pass
                key: password
        volumeMounts:
        - name: secret-volume
          readOnly: true
          mountPath: "/etc/secret-volume"
      volumes:
      - name: secret-volume
        secret:
          secretName: db-user-pass
      restartPolicy: Never

    # Secret usage example
    [root@k8s-m1 chp8]# echo -n 'admin' >./username.txt
    [root@k8s-m1 chp8]# echo -n '1f2dle2e67df' >./password.txt
    [root@k8s-m1 chp8]# kubectl create secret generic db-user-pass --from-file=username=./username.txt --from-file=password=./password.txt
    secret/db-user-pass created
    [root@k8s-m1 chp8]# kubectl apply -f secret.yml
    pod/secret-env-pod configured
    [root@k8s-m1 chp8]# kubectl get po|grep secret
    secret-env-pod   1/1   Running   0   64s
    [root@k8s-m1 chp8]# kubectl exec secret-env-pod -it -- bash
    root@secret-env-pod:/# echo $USER
    admin
    root@secret-env-pod:/# echo $PASSWORD
    1f2dle2e67df
    root@secret-env-pod:/# ls /etc/secret-volume/
    password  username
    root@secret-env-pod:/# cat /etc/secret-volume/password
    1f2dle2e67df

This article is from cnblogs (博客园), author: 元贞. When reposting, please cite the original link: https://www.cnblogs.com/yuleicoder/p/13526872.html
