1 import requests

2 import json

3 import csv

4

5

6 class DoubantvSpider:

7 def __init__(self):

8 # self.proxies = {"http":"http://125.123.152.81:3000"}

9 self.url = "https://movie.douban.com/j/search_subjects?type=tv&tag=%E5%9B%BD%E4%BA%A7%E5%89%A7&sort=rank&page_limit=20&page_start={}" # 手机模式下国产剧请求网址

10 self.headers = {"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"}

11

12 # 发送请求,获得json,转化为字典

13 def parse_url(self, url):

14 res = requests.get(url, headers=self.headers)

15 return json.loads(res.content.decode())

16

17 # 保存数据

18 def save(self, dic):

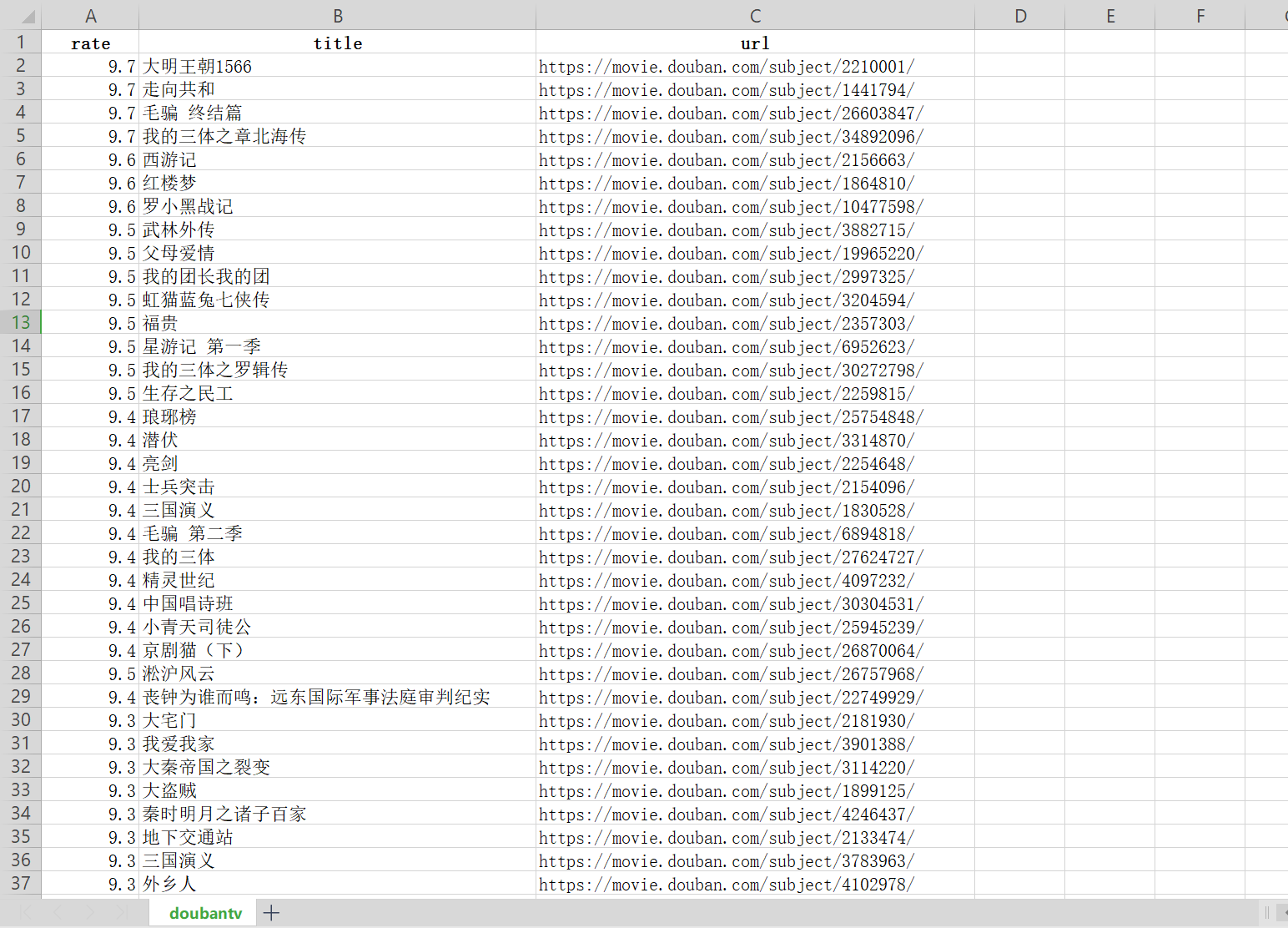

19 with open("doubantv.csv", "a", newline='', encoding="utf8") as f:

20 for data in dic["subjects"]:

21 writer = csv.writer(f, delimiter=',')

22 writer.writerow([data["rate"], data["title"], data["url"]])

23

24 # 实现主要逻辑

25 def run(self):

26 page_num = 0

27 while True:

28 # 构造url

29 url = self.url.format(page_num)

30 # 发送请求,获取响应

31 dic = self.parse_url(url)

32 # 因为动态加载,通过判断每页电视剧数量来确定是不是到了尾页

33 if len(dic["subjects"]) < 20:

34 break

35 self.save(dic)

36 page_num += 20

37 print("ok")

38

39

40 if __name__ == "__main__":

41 doubantv = DoubantvSpider()

42 doubantv.run()

![]()

浙公网安备 33010602011771号

浙公网安备 33010602011771号