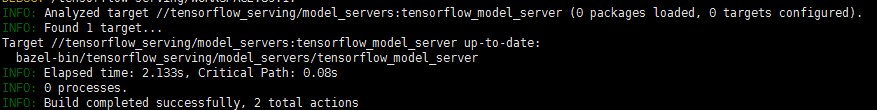

Building TVM and CUDA with Bazel

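I was building TVM with CUDA support inside a Docker container. The project is TensorFlow Serving, so it has to be built with Bazel. Inside the container both the GPU driver and the CUDA version were visible, but the build kept failing because it could not find the CUDA header files.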

In the end I solved it by creating a symbolic link that maps the CUDA include directory into the system include directory:

ln -s /usr/local/cuda-11.0/include /usr/include/cuda
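The result of `ln -s TARGET LINK` depends on whether `LINK` already exists. If `/usr/include/cuda` does not exist, the command above turns `/usr/include/cuda` itself into a link to `/usr/local/cuda-11.0/include`, so the header is reachable as `cuda/cuda.h`. If `/usr/include/cuda` is already a directory, the link is created inside it as `/usr/include/cuda/include`, so the header is reachable as `cuda/include/cuda.h`, which is the path used in the include changes that follow. Below is a minimal sketch that builds the second layout explicitly and can be re-run safely. It assumes the CUDA 11.0 location from the command above, and `link_cuda_headers` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: make <cuda/include/cuda.h> resolvable under a system include dir.
# Assumed defaults (not verified): CUDA in /usr/local/cuda-11.0, system
# headers in /usr/include.

# link_cuda_headers CUDA_HOME SYS_INCLUDE   (hypothetical helper)
link_cuda_headers() {
  local cuda_home="$1" sys_include="$2"
  # If SYS_INCLUDE/cuda is already a symlink (e.g. from the one-liner above),
  # creating a link "inside" it would write into the CUDA install; refuse.
  if [ -L "$sys_include/cuda" ]; then
    echo "$sys_include/cuda is a symlink; remove it first" >&2
    return 1
  fi
  # Ensure SYS_INCLUDE/cuda is a real directory that holds the link.
  mkdir -p "$sys_include/cuda" || return 1
  # -s: symbolic link; -f: replace an existing link; -n: do not descend into
  # an existing link-to-directory, so re-running never nests include/include.
  ln -sfn "$cuda_home/include" "$sys_include/cuda/include" || return 1
  # Verify that the header is actually reachable through the link.
  if [ ! -f "$sys_include/cuda/include/cuda.h" ]; then
    echo "cuda.h not found under $sys_include/cuda/include" >&2
    return 1
  fi
}
```

For example, run `link_cuda_headers /usr/local/cuda-11.0 /usr/include` as root inside the container. The `-n` flag matters on re-runs. Without it, GNU `ln` would follow the existing link and create a stray `include` link inside the CUDA directory.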

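Then, in every source file that includes the CUDA headers, change the include paths:

Change #include <cuda.h> to #include <cuda/include/cuda.h>

Change #include <cuda_runtime.h> to #include <cuda/include/cuda_runtime.h>

After that, the build went through.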

The BUILD file for the TVM runtime:

    # TVM (tvm.ai) library.
    # from https://github.com/apache/tvm.git

    package(
        default_visibility = ["//visibility:public"],
    )

    licenses(["notice"])  # Apache

    exports_files(["LICENSE"])

    cc_library(
        name = "tvm_runtime",
        srcs = [
            "src/runtime/c_runtime_api.cc",
            "src/runtime/cpu_device_api.cc",
            "src/runtime/file_utils.cc",
            "src/runtime/library_module.cc",
            "src/runtime/module.cc",
            "src/runtime/ndarray.cc",
            "src/runtime/object.cc",
            "src/runtime/registry.cc",
            "src/runtime/thread_pool.cc",
            "src/runtime/threading_backend.cc",
            "src/runtime/workspace_pool.cc",
            "src/runtime/dso_library.cc",
            "src/runtime/system_library.cc",
            "src/runtime/graph/graph_runtime.cc",
            "src/runtime/graph/graph_runtime_factory.cc",
            # CUDA backend (these sources need the CUDA headers)
            "src/runtime/cuda/cuda_device_api.cc",
            "src/runtime/cuda/cuda_module.cc",
        ],
        hdrs = glob([
            "3rdparty/dmlc-core/include/**/*.h",
            "include/**/*.h",
            "3rdparty/dlpack/include/**/*.h",
            "src/runtime/**/*.h",
        ]),
        includes = [
            "3rdparty/dmlc-core/include",
            "include",
            "3rdparty/dlpack/include",
            "src/runtime",
        ],
        alwayslink = 1,
    )
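The `srcs` list is tied to the layout of the TVM checkout it was written against. It names files such as `src/runtime/graph/graph_runtime.cc`, and file names under `src/runtime` may differ in other TVM versions. Below is a minimal sketch for checking the list against a checkout before building. `check_srcs` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: report which files named in the BUILD srcs list are missing from
# a TVM checkout, so a version mismatch shows up before Bazel fails on it.

# check_srcs TVM_ROOT FILE...   (hypothetical helper)
check_srcs() {
  local root="$1"
  shift
  local missing=0 f
  for f in "$@"; do
    if [ ! -f "$root/$f" ]; then
      echo "missing: $f"
      missing=$((missing + 1))
    fi
  done
  # Succeed only if every listed file exists.
  [ "$missing" -eq 0 ]
}
```

For example, run `check_srcs /path/to/tvm src/runtime/graph/graph_runtime.cc src/runtime/cuda/cuda_module.cc`, where `/path/to/tvm` is a placeholder for your checkout. The helper prints one `missing:` line per absent file and returns non-zero if any are missing.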

无情的摸鱼机器

浙公网安备 33010602011771号