wordcount-java:

pom.xml文件如下:

<dependencies> <dependency> <groupId>junit</groupId> <artifactId>junit</artifactId> <version>3.8.1</version> <scope>test</scope> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-core_2.10</artifactId> <version>1.3.0</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-sql_2.10</artifactId> <version>1.3.0</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-hive_2.10</artifactId> <version>1.3.0</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-streaming_2.10</artifactId> <version>1.3.0</version> </dependency> <dependency> <groupId>org.apache.hadoop</groupId> <artifactId>hadoop-client</artifactId> <version>2.4.1</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-streaming-kafka_2.10</artifactId> <version>1.3.0</version> </dependency> </dependencies>

package cn.spark.study.core;

import java.util.Arrays;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.api.java.function.VoidFunction;

import scala.Tuple2;

public class WordCount3 {

public static void main(String[] args) {

SparkConf conf=new SparkConf().setAppName("WorldCountLocal").setMaster("local");

JavaSparkContext sc=new JavaSparkContext(conf);

JavaRDD<String> lines=sc.textFile("C:\\Users\\wanglonglong\\Desktop\\word.txt");

JavaRDD<String> words=lines.flatMap(new FlatMapFunction<String, String>() {

@Override

public Iterable<String> call(String t) throws Exception {

// TODO Auto-generated method stub

return Arrays.asList(t.split(" "));

}

});

JavaPairRDD<String, Integer> pairs = words.mapToPair(new PairFunction<String, String, Integer>() {

private static final long serialVersionUID=1;

@Override

public Tuple2<String, Integer> call(String word) throws Exception {

return new Tuple2<String, Integer>(word,1);

}

});

JavaPairRDD<String, Integer> wordCounts = pairs.reduceByKey(

new Function2<Integer, Integer, Integer>() {

private static final long serialVersionUID = 1L;

public Integer call(Integer v1, Integer v2) throws Exception {

return v1 + v2;

}

});

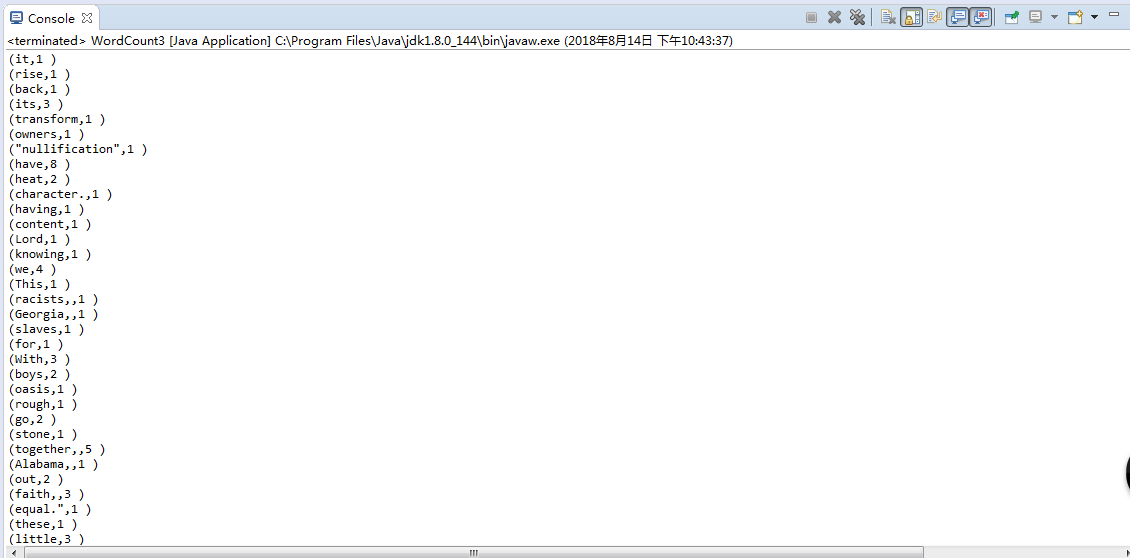

wordCounts.foreach(new VoidFunction<Tuple2<String,Integer>>() {

private static final long serialVersionUID = 1L;

public void call(Tuple2<String, Integer> wordCount) throws Exception {

System.out.println("("+wordCount._1 + "," + wordCount._2 + " )");

}

});

sc.close();

}

}

浙公网安备 33010602011771号

浙公网安备 33010602011771号