k8s Operator

K8s Operator 与 Controller

Kubernetes Operator 是一种软件扩展模式,用于基于自定义资源(Custom Resources)来管理应用程序及其组件。它遵循 Kubernetes 的核心原则,特别是控制循环(Control Loop)机制。

在 Kubernetes 中,用户通常希望通过自动化来处理重复性任务,而 Operator 正是为了在 Kubernetes 提供的基础能力之上,进一步扩展自动化管理的范围。

一句话描述:Operator 的工作核心就是通过 自定义资源(CRD) 和 自定义控制器(Controller) 实现对应用的自动化管理。

CRD(Custom Resource Definition):是 Kubernetes 中扩展 API 的方式,它允许定义一种新的资源类型,使其看起来就像是 Kubernetes 本身的原生资源(例如 Pods、Services 等)。每个 CRD 都是一个资源类型,它定义了要管理的应用程序或服务的状态、字段以及控制器的行为。CRD 对象本身是 Kubernetes 中的一种 API 对象,用于扩展 Kubernetes 的 API,使得你可以创建自定义的资源类型。

Controller: 控制器是 Kubernetes 中的核心概念之一,它负责不断地检查集群中的资源对象(如 Pod、Service、Deployment 等)是否符合预期状态。Kubernetes 内置的控制器包括 Deployment 控制器、ReplicaSet 控制器等。

[!NOTE]

在 Kubernetes 中,GVK(Group, Version, Kind)和 GVR(Group, Version, Resource)是用于标识和访问 Kubernetes 资源的两个重要概念。

- Group:API 组的名称。例如,

apps组包含 Deployment、StatefulSet 等资源,batch组包含 Job、CronJob 等资源。

- 注意;早期的

core组的资源(如 Pod、Service 等)是没有组名的,默认使用空字符串""。- Version:API 版本。每个资源在 Kubernetes 中可能会有多个版本(如

v1、v1beta1、v1alpha1等),每个版本可能会有不同的功能和行为。- Kind:资源的类型。通常是资源的单数形式,如

Pod、Deployment、Service。- Resource:资源的名称。通常是复数形式,如

pods、deployments、servicesGVK 和 GVR 区别在于:

- GVR 更侧重于资源的实际操作,特别是动态客户端和工具中使用,用于指定和操作特定类型的资源实例。

- GVK 更侧重于资源的定义和描述,特别是在控制器、操作器以及元数据操作中使用,用于唯一标识和处理特定类型的资源。

Operator-SDK开发Opeartor

Operator-SDK是一款用于Operator程序构建和发布的工具,即Operator开发框架,类似Java的SpringBoot。

KubeBuilder 是在

controller-runtime和controller-tools两个库的基础上开发的,而Operator-SDK 是使用 Kubebuilder 作为库的进一步开发的项目。

需求

目前一个应用部署+服务创建需要deployment和service两个资源,既需要两个yaml文件,因此创建一个新类型App,可以一次性创建应用和服务。

定义一个 crd ,spec 包含以下信息:

Replicas # 副本数

Image # 镜像

Resources # 资源限制

Envs # 环境变量

Ports # 服务端口

根据以上信息,controller 自动创建或者更新一个 deployment + service

初始化和创建GVK

创建 APP

$ mkdir -p $GOPATH/src/github.com/leffss/app

$ cd $GOPATH/src/github.com/leffss/app

$ operator-sdk init --domain=example.com --repo=github.com/leffss/app

创建 API

$ operator-sdk create api --group app --version v1 --kind App --resource=true --controller=true

CRD实现

/*

Copyright 2021.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

*/

package v1

import (

appsv1 "k8s.io/api/apps/v1"

corev1 "k8s.io/api/core/v1"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

)

/*

修改定义后需要使用 make generate 生成新的 zz_generated.deepcopy.go 文件

*/

// EDIT THIS FILE! THIS IS SCAFFOLDING FOR YOU TO OWN!

// NOTE: json tags are required. Any new fields you add must have json tags for the fields to be serialized.

// AppSpec defines the desired state of App

type AppSpec struct {

// INSERT ADDITIONAL SPEC FIELDS - desired state of cluster

// Important: Run "make" to regenerate code after modifying this file

Replicas *int32 `json:"replicas"` // 副本数

Image string `json:"image"` // 镜像

Resources corev1.ResourceRequirements `json:"resources,omitempty"` // 资源限制

Envs []corev1.EnvVar `json:"envs,omitempty"` // 环境变量

Ports []corev1.ServicePort `json:"ports,omitempty"` // 服务端口

}

// AppStatus defines the observed state of App

type AppStatus struct {

// INSERT ADDITIONAL STATUS FIELD - define observed state of cluster

// Important: Run "make" to regenerate code after modifying this file

//Conditions []AppCondition

//Phase string

appsv1.DeploymentStatus `json:",inline"` // 直接引用 DeploymentStatus

}

//type AppCondition struct {

// Type string

// Message string

// Reason string

// Ready bool

// LastUpdateTime metav1.Time

// LastTransitionTime metav1.Time

//}

//+kubebuilder:object:root=true

//+kubebuilder:subresource:status

// App is the Schema for the apps API

type App struct {

metav1.TypeMeta `json:",inline"`

metav1.ObjectMeta `json:"metadata,omitempty"`

Spec AppSpec `json:"spec,omitempty"`

Status AppStatus `json:"status,omitempty"`

}

//+kubebuilder:object:root=true

// AppList contains a list of App

type AppList struct {

metav1.TypeMeta `json:",inline"`

metav1.ListMeta `json:"metadata,omitempty"`

Items []App `json:"items"`

}

func init() {

SchemeBuilder.Register(&App{}, &AppList{})

}

增加创建deployment和service的业务逻辑

新增 resource/deployment/deployment.go和resource/service/service.go,这两个业务逻辑用于app_controller调用创建deployment和service。

package deployment

import (

appv1 "github.com/leffss/app/api/v1"

appsv1 "k8s.io/api/apps/v1"

corev1 "k8s.io/api/core/v1"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

"k8s.io/apimachinery/pkg/runtime/schema"

)

func New(app *appv1.App) *appsv1.Deployment {

labels := map[string]string{"app.example.com/v1": app.Name}

selector := &metav1.LabelSelector{MatchLabels: labels}

return &appsv1.Deployment{

TypeMeta: metav1.TypeMeta{

APIVersion: "apps/v1",

Kind: "Deployment",

},

ObjectMeta: metav1.ObjectMeta{

Name: app.Name,

Namespace: app.Namespace,

OwnerReferences: []metav1.OwnerReference{

*metav1.NewControllerRef(app, schema.GroupVersionKind{

Group: appv1.GroupVersion.Group,

Version: appv1.GroupVersion.Version,

Kind: "App",

}),

},

},

Spec: appsv1.DeploymentSpec{

Replicas: app.Spec.Replicas,

Selector: selector,

Template: corev1.PodTemplateSpec{

ObjectMeta: metav1.ObjectMeta{

Labels: labels,

},

Spec: corev1.PodSpec{

Containers: newContainers(app),

},

},

},

}

}

func newContainers(app *appv1.App) []corev1.Container {

var containerPorts []corev1.ContainerPort

for _, servicePort := range app.Spec.Ports {

var cport corev1.ContainerPort

cport.ContainerPort = servicePort.TargetPort.IntVal

containerPorts = append(containerPorts, cport)

}

return []corev1.Container{

{

Name: app.Name,

Image: app.Spec.Image,

Ports: containerPorts,

Env: app.Spec.Envs,

Resources: app.Spec.Resources,

ImagePullPolicy: corev1.PullIfNotPresent,

},

}

}

package service

import (

appv1 "github.com/leffss/app/api/v1"

corev1 "k8s.io/api/core/v1"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

"k8s.io/apimachinery/pkg/runtime/schema"

)

func New(app *appv1.App) *corev1.Service {

return &corev1.Service{

TypeMeta: metav1.TypeMeta{

Kind: "Service",

APIVersion: "v1",

},

ObjectMeta: metav1.ObjectMeta{

Name: app.Name,

Namespace: app.Namespace,

OwnerReferences: []metav1.OwnerReference{

*metav1.NewControllerRef(app, schema.GroupVersionKind{

Group: appv1.GroupVersion.Group,

Version: appv1.GroupVersion.Version,

Kind: "App",

}),

},

},

Spec: corev1.ServiceSpec{

Ports: app.Spec.Ports,

Selector: map[string]string{

"app.example.com/v1": app.Name,

},

},

}

}

修改 controller 代码 controllers/app_controller.go

controllers/app_controller.go中的Reconcile是Controller核心函数,根据deployment和service状态变化修正资源。

/*

Copyright 2021.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

*/

package controllers

import (

"context"

"encoding/json"

"reflect"

"github.com/leffss/app/resource/deployment"

"github.com/leffss/app/resource/service"

"k8s.io/apimachinery/pkg/api/errors"

"github.com/go-logr/logr"

"k8s.io/apimachinery/pkg/runtime"

ctrl "sigs.k8s.io/controller-runtime"

"sigs.k8s.io/controller-runtime/pkg/client"

appv1 "github.com/leffss/app/api/v1"

corev1 "k8s.io/api/core/v1"

appsv1 "k8s.io/api/apps/v1"

)

// AppReconciler reconciles a App object

type AppReconciler struct {

client.Client

Log logr.Logger

Scheme *runtime.Scheme

}

//+kubebuilder:rbac:groups=app.example.com,resources=apps,verbs=get;list;watch;create;update;patch;delete

//+kubebuilder:rbac:groups=app.example.com,resources=apps/status,verbs=get;update;patch

//+kubebuilder:rbac:groups=app.example.com,resources=apps/finalizers,verbs=update

// Reconcile is part of the main kubernetes reconciliation loop which aims to

// move the current state of the cluster closer to the desired state.

// TODO(user): Modify the Reconcile function to compare the state specified by

// the App object against the actual cluster state, and then

// perform operations to make the cluster state reflect the state specified by

// the user.

//

// For more details, check Reconcile and its Result here:

// - https://pkg.go.dev/sigs.k8s.io/controller-runtime@v0.7.2/pkg/reconcile

func (r *AppReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

_ = r.Log.WithValues("app", req.NamespacedName)

// your logic here

// 获取 crd 资源

instance := &appv1.App{}

if err := r.Client.Get(ctx, req.NamespacedName, instance); err != nil {

if errors.IsNotFound(err) {

return ctrl.Result{}, nil

}

return ctrl.Result{}, err

}

// crd 资源已经标记为删除

if instance.DeletionTimestamp != nil {

return ctrl.Result{}, nil

}

oldDeploy := &appsv1.Deployment{}

if err := r.Client.Get(ctx, req.NamespacedName, oldDeploy); err != nil {

// deployment 不存在,创建

if errors.IsNotFound(err) {

// 创建deployment

if err := r.Client.Create(ctx, deployment.New(instance)); err != nil {

return ctrl.Result{}, err

}

// 创建service

if err := r.Client.Create(ctx, service.New(instance)); err != nil {

return ctrl.Result{}, err

}

// 更新 crd 资源的 Annotations

data, _ := json.Marshal(instance.Spec)

if instance.Annotations != nil {

instance.Annotations["spec"] = string(data)

} else {

instance.Annotations = map[string]string{"spec": string(data)}

}

if err := r.Client.Update(ctx, instance); err != nil {

return ctrl.Result{}, err

}

} else {

return ctrl.Result{}, err

}

} else {

// deployment 存在,更新

oldSpec := appv1.AppSpec{}

if err := json.Unmarshal([]byte(instance.Annotations["spec"]), &oldSpec); err != nil {

return ctrl.Result{}, err

}

if !reflect.DeepEqual(instance.Spec, oldSpec) {

// 更新deployment

newDeploy := deployment.New(instance)

oldDeploy.Spec = newDeploy.Spec

if err := r.Client.Update(ctx, oldDeploy); err != nil {

return ctrl.Result{}, err

}

// 更新service

newService := service.New(instance)

oldService := &corev1.Service{}

if err := r.Client.Get(ctx, req.NamespacedName, oldService); err != nil {

return ctrl.Result{}, err

}

clusterIP := oldService.Spec.ClusterIP // 更新 service 必须设置老的 clusterIP

oldService.Spec = newService.Spec

oldService.Spec.ClusterIP = clusterIP

if err := r.Client.Update(ctx, oldService); err != nil {

return ctrl.Result{}, err

}

// 更新 crd 资源的 Annotations

data, _ := json.Marshal(instance.Spec)

if instance.Annotations != nil {

instance.Annotations["spec"] = string(data)

} else {

instance.Annotations = map[string]string{"spec": string(data)}

}

if err := r.Client.Update(ctx, instance); err != nil {

return ctrl.Result{}, err

}

}

}

return ctrl.Result{}, nil

}

// SetupWithManager sets up the controller with the Manager.

func (r *AppReconciler) SetupWithManager(mgr ctrl.Manager) error {

return ctrl.NewControllerManagedBy(mgr).

For(&appv1.App{}).

Complete(r)

}

修改 CRD 资源定义 config/samples/app_v1_app.yaml

资源定义模板如下

apiVersion: app.example.com/v1

kind: App

metadata:

name: app-sample

namespace: default

spec:

# Add fields here

replicas: 2

image: nginx:1.16.1

ports:

- targetPort: 80

port: 8080

envs:

- name: DEMO

value: app

- name: GOPATH

value: gopath

resources:

limits:

cpu: 500m

memory: 500Mi

requests:

cpu: 100m

memory: 100Mi

修改 Dockerfile

此Dockerfile是打包Controller镜像用的。

# Build the manager binary

FROM golang:1.15 as builder

WORKDIR /workspace

# Copy the Go Modules manifests

COPY go.mod go.mod

COPY go.sum go.sum

# cache deps before building and copying source so that we don't need to re-download as much

# and so that source changes don't invalidate our downloaded layer

# 添加了 goproxy 环境变量

ENV GOPROXY https://goproxy.cn,direct

RUN go mod download

# Copy the go source

COPY main.go main.go

COPY api/ api/

COPY controllers/ controllers/

# 新增 COPY 自定义的文件夹 resource

COPY resource/ resource/

# Build

RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 GO111MODULE=on go build -a -o manager main.go

# Use distroless as minimal base image to package the manager binary

# Refer to https://github.com/GoogleContainerTools/distroless for more details

#FROM gcr.io/distroless/static:nonroot

# gcr.io/distroless/static:nonroot 变更为 kubeimages/distroless-static:latest

FROM kubeimages/distroless-static:latest

WORKDIR /

COPY --from=builder /workspace/manager .

USER 65532:65532

ENTRYPOINT ["/manager"]

Mysql Operator逻辑

MHA方案

实现数据库在k8s上的运行是Operator的重要应用领域。

在Kubernetes内部,可以认为”万物”皆是对象,所有资源对象可以抽象成如下格式:

type Object struct {

metav1.TypeMeta

metav1.ObjectMeta

Spec ObjectSpec

Status ObjectStatus

}

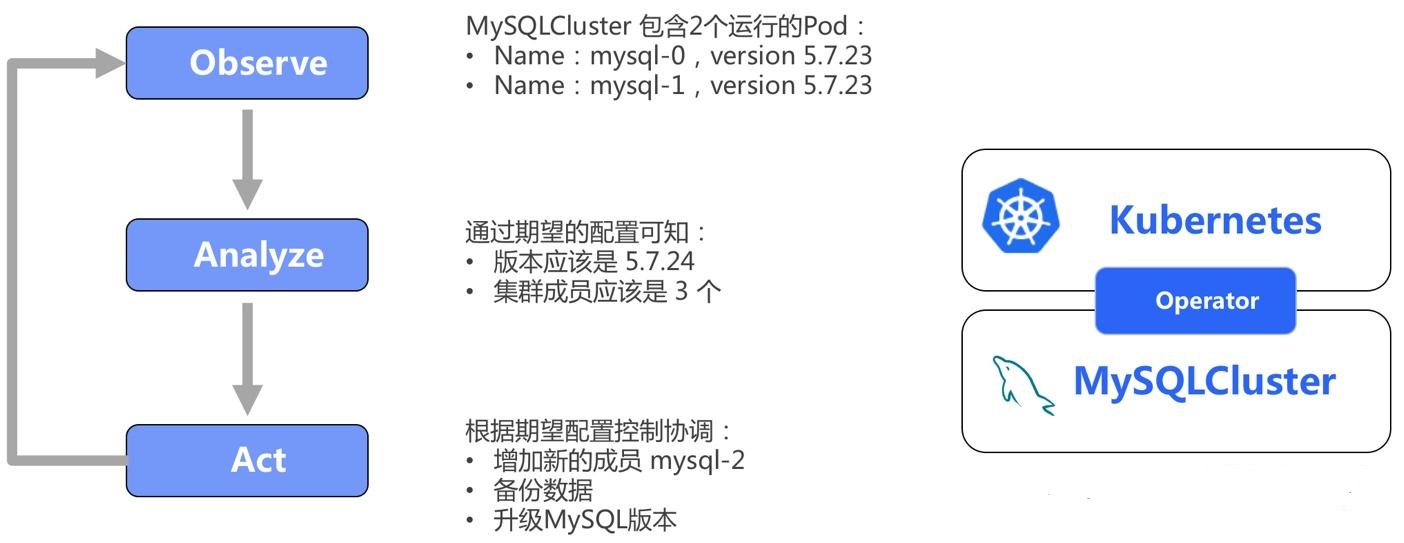

所有的资源对象均包含对象元数据,Spec(期望配置),Status(运行状态)。Operator 基于 CRD 扩展了新的资源对象,并通过控制器来保证应用处于预期状态。比如 MySQL Operator 通过下面的三个步骤模拟管理 MySQL集群的行为:

- 通过 Kubernetes API 观察集群的当前状态;

- 分析当前状态与期望状态的差别;

- 调用 MySQL集群管理API 或 Kubernetes API 消除这些差别。

首先,确定MySQL容器化目标:

- 快速部署MySQL主从集群

- 支持MySQL集群高可用,数据不丢失

- 支持MySQL集群弹性伸缩

- 支持MySQL 5.7 & 8.0

目标确定后,可分析出MySQL集群容器化的需求和解决措施:

- 保证每个MySQL服务启动Pod名固定且启动有序,可通过StatefulSet资源对象调度MySQL容器。

- 保证MySQL数据存储,可通过PV/PVC资源对象挂载LocalVolume(本地持久化存储)或者远端存储,比如Ceph、NFS、NAS等。使用LocalVolume好处是利用机器上的磁盘来存放业务需要持久化的数据,数据依然独立于 Pod 的生命周期,即使业务 Pod 被删除,数据也不会丢失。同时,和远端存储相比,本地存储可以避免网络 IO 开销,拥有更高的读写性能,所以分布式文件系统和分布式数据库这类对 IO 要求很高的应用非常适合本地存储,而远端存储更适合对读写IO要求不高的场景。

- MySQL集群扩容安全,通过Operator内部逻辑条件保证扩容安全。

- MySQL集群高可用方案,本文采用MHA方案,该方案是比较常用的高可用方案,支持大多数MySQL版本,使用MySQL半同步复制,有数据丢失的风险。

需求确定后,可分解出MySQL集群CRD的Spec配置包含:

- MySQL配置如端口、版本、存储信息、配置文件等字段;

- 集群配置包含高可用模式、副本数等字段;

- 调度信息包含资源套餐(CPU、内存、存储)、亲和性、NodeSelector等字段。

在controller中的协调函数Reconcile中添加实现Operator逻辑:

首先,实现MySQL集群的自动化部署和有序启动的功能,则需要在Reconcile逻辑中添加创建StatefulSet、Headless Service、PV/PVC和配置文件相关资源对象逻辑。StatefulSet能够保证每个Pod有唯一的网络标识,有序的、优雅的部署与伸缩,有序的、优雅的饿删除和停止,并且绑定了稳定的、持久化存储,该存储可以是本地持久化存储或远程存储,保证数据不丢失。Headless Service保证通过DNS RR解析到后端其中一个处于Running and Ready状态的Pod。

然后,添加高可用策略逻辑,本例中高可用方案选用成熟的MHA方案,MHA方案中包含MHA Manager和MHA Node。可将MHA Manager进程构建在Operator容器中,Operator会在集群成员都正常运行后,启动Manager进程,此时设置StatefulSet中最后的Pod为MySQL的Master,其他为Slave。MHA Node设置为Sidecar容器与MySQL Pod部署在一起,同时需要设置包含Manager进程的Operator容器与包含Node工具的Sidecar容器ssh免密登录,保证Manager脚本能够触发Node工具包的动作。高可用中故障迁移策略中包含主库故障和从库故障两种场景,Reconcile逻辑中需要判断MySQL Pod的状态是否正常。如果是从库故障,此时影响不大,会由kubelet负责拉起从库的Pod。如果是主库故障,则需要MHA Manager负责切换主库到StatefulSet中第一个Pod,并将原主库中对应的数据拷贝到第一个Pod,完成后删除原主库中数据,当kubelet将原主库的Pod拉起后数据恢复,MHA再重新调度切换到StatefulSet中第最后Pod为Master,此时故障恢复完毕。

最后,添加优雅扩缩容逻辑,该部分逻辑相对简单,如果是扩容MySQL集群,逻辑比较简单,扩容完成后,MHA再重新调度到StatefulSet中第最后Pod为Master即可。如果是缩容MySQL集群,则需要先MHA Manger提前切换Master到缩容后的的最后一个Pod,比如原来集群数是5个Pod,缩容为3个Pod,则Master角色切换到Pod-2中,然后执行StatefulSet缩容动作,完成整个过程。

Orchestrator方案

myql cluster cr 创建后operator会创建mysql statefulset,由statefulset负责创建并管理pod。

pod里的init容器负责从健康的节点备份数据,并执行prepare backup。如果是master节点第一次启动不需要备份数据,但是可能是从s3获取备份数据恢复mysql集群。

heartbeat容器负责发送心跳

sidecar容器是一个mysql数据备份和传输的容器,第一个从节点向master pod的sidecar容器发从backup请求,master pod的sidecar容器先执行“xtrabackup --backup”,然后把备份数据传给从pod。

mysql容器启动后,node controller会感知到,然后进行change master等操作。到此集群已经跑起来了

orch controller会将mysql节点加入到orchestrator中管理,并且通过orchestrator服务获取集群的健康状态,更新mysqlcluster资源对象

orchestrator服务负责故障恢复(master 挂了之后,从新选主)。

MGR方案源码分析

MHA和Orchestrator都需要借助第三方工具辅助,而MGR是MySQL官方方案,通过MySQL自身配置(内置MGR插件)即可实现主从切换、一致性等集群方案,其Operator也最简单。

自定义资源控制器

在mysql-operator启动时,首先会向k8s创建资源类型控制器,除了自定义Cluster类型,其他都是k8s中标准类型。

clusterController := cluster.NewController(

*s,

mysqlopClient,

kubeClient,

operatorInformerFactory.MySQL().V1alpha1().Clusters(), //自定义资源类型

kubeInformerFactory.Apps().V1beta1().StatefulSets(), //statefulsets

kubeInformerFactory.Core().V1().Pods(), //pods

kubeInformerFactory.Core().V1().Services(), //services

30*time.Second,

s.Namespace,

)

监听上面几种资源的状态变化事件。具体代码与相关处理函数在NewController函数内,如下:

func NewController(

opConfig operatoropts.MySQLOperatorOpts,

opClient clientset.Interface,

kubeClient kubernetes.Interface,

clusterInformer informersv1alpha1.ClusterInformer,

statefulSetInformer appsinformers.StatefulSetInformer,

podInformer coreinformers.PodInformer,

serviceInformer coreinformers.ServiceInformer,

resyncPeriod time.Duration,

namespace string,

) *MySQLController {

opscheme.AddToScheme(scheme.Scheme) // TODO: This shouldn't be done here I don't think.

// Create event broadcaster.

glog.V(4).Info("Creating event broadcaster")

eventBroadcaster := record.NewBroadcaster()

eventBroadcaster.StartLogging(glog.Infof)

eventBroadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{Interface: kubeClient.CoreV1().Events("")})

recorder := eventBroadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: controllerAgentName})

m := MySQLController{

opConfig: opConfig,

opClient: opClient,

kubeClient: kubeClient,

// 设置关注各种自定义类型的资源

clusterLister: clusterInformer.Lister(),

clusterListerSynced: clusterInformer.Informer().HasSynced,

clusterUpdater: newClusterUpdater(opClient, clusterInformer.Lister()),

serviceLister: serviceInformer.Lister(),

serviceListerSynced: serviceInformer.Informer().HasSynced,

serviceControl: NewRealServiceControl(kubeClient, serviceInformer.Lister()),

statefulSetLister: statefulSetInformer.Lister(),

statefulSetListerSynced: statefulSetInformer.Informer().HasSynced,

statefulSetControl: NewRealStatefulSetControl(kubeClient, statefulSetInformer.Lister()),

podLister: podInformer.Lister(),

podListerSynced: podInformer.Informer().HasSynced,

podControl: NewRealPodControl(kubeClient, podInformer.Lister()),

secretControl: NewRealSecretControl(kubeClient),

queue: workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "mysqlcluster"),

recorder: recorder,

}

/*

监控自定义类型mysqlcluster的变化(增加、更新、删除),

这里看一看m.enqueueCluster函数可以发现都只是把发生变化的自定义对象的名称放入工作队列中

*/

clusterInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{

AddFunc: m.enqueueCluster,

UpdateFunc: func(old, new interface{}) {

m.enqueueCluster(new)

},

DeleteFunc: func(obj interface{}) {

cluster, ok := obj.(*v1alpha1.Cluster)

if ok {

m.onClusterDeleted(cluster.Name)

}

},

})

//同样监控statefulset变化,handleObject首先查看书否属于哪个自定义类型cluster,然后加入到相应集群

statefulSetInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{

AddFunc: m.handleObject,

UpdateFunc: func(old, new interface{}) {

newStatefulSet := new.(*apps.StatefulSet)

oldStatefulSet := old.(*apps.StatefulSet)

if newStatefulSet.ResourceVersion == oldStatefulSet.ResourceVersion {

return

}

// If cluster is ready ...

if newStatefulSet.Status.ReadyReplicas == newStatefulSet.Status.Replicas {

clusterName, ok := newStatefulSet.Labels[constants.ClusterLabel]

if ok {

m.onClusterReady(clusterName)

}

}

m.handleObject(new)

},

DeleteFunc: m.handleObject,

})

return &m

}

Controller核心逻辑

controller主要维护services是否存在以及statefulset集群是否与期望的一致,故障迁移完全依赖statefulset自愈,扩缩容也是通过设置statefulset副本数来实现。因为只要保证数据库节点拉起,集群恢复和同步都可以由Agent和MySQL MGR自身实现。

// syncHandler compares the actual state with the desired, and attempts to

// converge the two. It then updates the Status block of the Cluster

// resource with the current status of the resource.

func (m *MySQLController) syncHandler(key string) error {

// Convert the namespace/name string into a distinct namespace and name.

// 获取资源的命名空间与资源名

namespace, name, err := cache.SplitMetaNamespaceKey(key)

if err != nil {

utilruntime.HandleError(fmt.Errorf("invalid resource key: %s", key))

return nil

}

nsName := types.NamespacedName{Namespace: namespace, Name: name}

// Get the Cluster resource with this namespace/name.

cluster, err := m.clusterLister.Clusters(namespace).Get(name)

if err != nil {

// The Cluster resource may no longer exist, in which case we stop processing.

if apierrors.IsNotFound(err) {

utilruntime.HandleError(fmt.Errorf("mysqlcluster '%s' in work queue no longer exists", key))

return nil

}

return err

}

cluster.EnsureDefaults()

// 校验自定义资源对象

if err = cluster.Validate(); err != nil {

return errors.Wrap(err, "validating Cluster")

}

/*

给自定义资源对象设置一些默认属性

*/

if cluster.Spec.Repository == "" {

cluster.Spec.Repository = m.opConfig.Images.DefaultMySQLServerImage

}

operatorVersion := buildversion.GetBuildVersion()

// Ensure that the required labels are set on the cluster.

sel := combineSelectors(SelectorForCluster(cluster), SelectorForClusterOperatorVersion(operatorVersion))

if !sel.Matches(labels.Set(cluster.Labels)) {

glog.V(2).Infof("Setting labels on cluster %s", SelectorForCluster(cluster).String())

if cluster.Labels == nil {

cluster.Labels = make(map[string]string)

}

cluster.Labels[constants.ClusterLabel] = cluster.Name

cluster.Labels[constants.MySQLOperatorVersionLabel] = buildversion.GetBuildVersion()

return m.clusterUpdater.UpdateClusterLabels(cluster.DeepCopy(), labels.Set(cluster.Labels))

}

// Create a MySQL root password secret for the cluster if required.

if cluster.RequiresSecret() {

err = m.secretControl.CreateSecret(secrets.NewMysqlRootPassword(cluster))

if err != nil && !apierrors.IsAlreadyExists(err) {

return errors.Wrap(err, "creating root password Secret")

}

}

// 检测services是否存在,不存在则新建重启

svc, err := m.serviceLister.Services(cluster.Namespace).Get(cluster.Name)

// If the resource doesn't exist, we'll create it

if apierrors.IsNotFound(err) {

glog.V(2).Infof("Creating a new Service for cluster %q", nsName)

svc = services.NewForCluster(cluster)

err = m.serviceControl.CreateService(svc)

}

// If an error occurs during Get/Create, we'll requeue the item so we can

// attempt processing again later. This could have been caused by a

// temporary network failure, or any other transient reason.

if err != nil {

return err

}

// If the Service is not controlled by this Cluster resource, we should

// log a warning to the event recorder and return.

if !metav1.IsControlledBy(svc, cluster) {

msg := fmt.Sprintf(MessageResourceExists, "Service", svc.Namespace, svc.Name)

m.recorder.Event(cluster, corev1.EventTypeWarning, ErrResourceExists, msg)

return errors.New(msg)

}

//同样如果自定义类型cluster中statefulset不存在,同样重启一个statefulset

ss, err := m.statefulSetLister.StatefulSets(cluster.Namespace).Get(cluster.Name)

// If the resource doesn't exist, we'll create it

if apierrors.IsNotFound(err) {

glog.V(2).Infof("Creating a new StatefulSet for cluster %q", nsName)

ss = statefulsets.NewForCluster(cluster, m.opConfig.Images, svc.Name)

err = m.statefulSetControl.CreateStatefulSet(ss)

}

// If an error occurs during Get/Create, we'll requeue the item so we can

// attempt processing again later. This could have been caused by a

// temporary network failure, or any other transient reason.

if err != nil {

return err

}

// If the StatefulSet is not controlled by this Cluster resource, we

// should log a warning to the event recorder and return.

if !metav1.IsControlledBy(ss, cluster) {

msg := fmt.Sprintf(MessageResourceExists, "StatefulSet", ss.Namespace, ss.Name)

m.recorder.Event(cluster, corev1.EventTypeWarning, ErrResourceExists, msg)

return fmt.Errorf(msg)

}

//检测mysql-operator版本

// Upgrade the required component resources the current MySQLOperator version.

if err := m.ensureMySQLOperatorVersion(cluster, ss, buildversion.GetBuildVersion()); err != nil {

return errors.Wrap(err, "ensuring MySQL Operator version")

}

//检测数据库版本

// Upgrade the MySQL server version if required.

if err := m.ensureMySQLVersion(cluster, ss); err != nil {

return errors.Wrap(err, "ensuring MySQL version")

}

// 如果cluster副本数与期望的不一致,则更新statefulset,从而消除差异

// If this number of the members on the Cluster does not equal the

// current desired replicas on the StatefulSet, we should update the

// StatefulSet resource.

if cluster.Spec.Members != *ss.Spec.Replicas {

glog.V(4).Infof("Updating %q: clusterMembers=%d statefulSetReplicas=%d",

nsName, cluster.Spec.Members, ss.Spec.Replicas)

old := ss.DeepCopy()

ss = statefulsets.NewForCluster(cluster, m.opConfig.Images, svc.Name)

if err := m.statefulSetControl.Patch(old, ss); err != nil {

// Requeue the item so we can attempt processing again later.

// This could have been caused by a temporary network failure etc.

return err

}

}

//更新自定义类型状态

// Finally, we update the status block of the Cluster resource to

// reflect the current state of the world.

err = m.updateClusterStatus(cluster, ss)

if err != nil {

return err

}

m.recorder.Event(cluster, corev1.EventTypeNormal, SuccessSynced, MessageResourceSynced)

return nil

}

MySQL-Agent

作为mysql服务器的sidecar,与mysql服务器部署在同一个pod中,主要的作用有:

1、用于实时监控数据库状态,以及操作数据库以完成集群搭建;并且通过节点是否可读写来区分和判断集群主节点,然后进行新节点或者旧节点的加入。

2、通过探针,从而保证当mysql集群搭建完成之前或者mysql mgr故障时,设置pod不可用;

v1.Container{

Name: MySQLAgentName, //容器名

Image: fmt.Sprintf("%s:%s", mysqlAgentImage, agentVersion), //相应镜像

Args: []string{"--v=4"},

VolumeMounts: volumeMounts(cluster), //挂载卷

Env: []v1.EnvVar{ //传入的相关环境变量,主要是连接同pod下mysql的相关配置

clusterNameEnvVar(cluster),

namespaceEnvVar(),

replicationGroupSeedsEnvVar(replicationGroupSeeds),

multiMasterEnvVar(cluster.Spec.MultiMaster),

rootPassword,

{

Name: "MY_POD_IP",

ValueFrom: &v1.EnvVarSource{

FieldRef: &v1.ObjectFieldSelector{

FieldPath: "status.podIP",

},

},

},

},

// 设置Livesness探针,用于k8s检测pod存活情况

LivenessProbe: &v1.Probe{

Handler: v1.Handler{

HTTPGet: &v1.HTTPGetAction{

Path: "/live",

Port: intstr.FromInt(int(agentopts.DefaultMySQLAgentHeathcheckPort)),

},

},

},

// 设置Readiness探针,用于k8s检测该pod是否可以对外提供服务

ReadinessProbe: &v1.Probe{

Handler: v1.Handler{

HTTPGet: &v1.HTTPGetAction{

Path: "/ready",

Port: intstr.FromInt(int(agentopts.DefaultMySQLAgentHeathcheckPort)),

},

},

},

Resources: resourceLimits,

}

agent监控数据库同步逻辑主要在Sync函数中,在agent主函数中的调用逻辑如下:

// 首先调用Sync函数,通过返回值检测数据库是否启动成功,如果成功,则进入后续逻辑

for !manager.Sync(ctx) {

time.Sleep(10 * time.Second)

}

wg.Add(1)

go func() {

defer wg.Done()

manager.Run(ctx) //run函数启动一个协程,每隔一定的时间运行Sync函数

}()

// Run函数调用wait.Until,启动一个协程,每个15秒运行Sync函数

func (m *ClusterManager) Run(ctx context.Context) {

wait.Until(func() { m.Sync(ctx) }, time.Second*pollingIntervalSeconds, ctx.Done())

<-ctx.Done()

}

下面来具体分析Sync函数的实现

/*

检测数据库是否运行,并监控器其在集群中的运行状态,进行相应的操作

*/

func (m *ClusterManager) Sync(ctx context.Context) bool {

//通过mysqladmin连接数据库,检测数据库是否运行

if !isDatabaseRunning(ctx) {

glog.V(2).Infof("Database not yet running. Waiting...")

return false

}

// 通过mysqlsh连接数据库获取集群状态信息,dba.get_cluster('%s').status()

clusterStatus, err := m.getClusterStatus(ctx)

if err != nil {

myshErr, ok := errors.Cause(err).(*mysqlsh.Error)

if !ok {

glog.Errorf("Failed to get the cluster status: %+v", err)

return false

}

/*

如果没有想过信息,说明集群还没有建立,并且本pod编号为0,说明是整个集群第一个启动的数据库

则调用bootstrap自己建立集群

*/

if m.Instance.Ordinal == 0 {

// mysqlsh dba.create_cluster('%s', %s).status()创建集群

clusterStatus, err = m.bootstrap(ctx, myshErr)

if err != nil {

glog.Errorf("Error bootstrapping cluster: %v", err)

metrics.IncEventCounter(clusterCreateErrorCount)

return false

}

metrics.IncEventCounter(clusterCreateCount)

} else {

glog.V(2).Info("Cluster not yet present. Waiting...")

return false

}

}

// 到这一步,说明以及获得集群状态信息,设置集群状态信息,以便healthckeck协程进行检查

cluster.SetStatus(clusterStatus)

if clusterStatus.DefaultReplicaSet.Status == innodb.ReplicaSetStatusNoQuorum {

glog.V(4).Info("Cluster as seen from this instance is in NO_QUORUM state")

metrics.IncEventCounter(clusterNoQuorumCount)

}

online := false

// 获取状态信息中本节点的状态

instanceStatus := clusterStatus.GetInstanceStatus(m.Instance.Name())

// 下面是根据整体进行相应的处理

switch instanceStatus {

case innodb.InstanceStatusOnline:

metrics.IncStatusCounter(instanceStatusCount, innodb.InstanceStatusOnline)

glog.V(4).Info("MySQL instance is online")

online = true

case innodb.InstanceStatusRecovering:

metrics.IncStatusCounter(instanceStatusCount, innodb.InstanceStatusRecovering)

glog.V(4).Info("MySQL instance is recovering")

/*

如果状态为missing

1、首先获得主节点信息GetPrimaryAddr(其是通过节点是否可以读写来判断哪个节点是主);

2、调用handleInstanceMissing函数,

检查是否可以重新加入,主要是查看主节点是否存活

然后重新加入集群dba.get_cluster('%s').rejoin_instance('%s', %s)

*/

case innodb.InstanceStatusMissing:

metrics.IncStatusCounter(instanceStatusCount, innodb.InstanceStatusMissing)

primaryAddr, err := clusterStatus.GetPrimaryAddr()

if err != nil {

glog.Errorf("%v", err)

return false

}

online = m.handleInstanceMissing(ctx, primaryAddr)

if online {

metrics.IncEventCounter(instanceRejoinCount)

} else {

metrics.IncEventCounter(instanceRejoinErrorCount)

}

/*

如果状态为notfound,也就说明该实例还没有加入集群,

获取主节点信息,然后调用handleInstanceNotFound添加到集群

dba.get_cluster('%s').add_instance('%s', %s)

*/

case innodb.InstanceStatusNotFound:

metrics.IncStatusCounter(instanceStatusCount, innodb.InstanceStatusNotFound)

primaryAddr, err := clusterStatus.GetPrimaryAddr()

if err != nil {

glog.Errorf("%v", err)

return false

}

online = m.handleInstanceNotFound(ctx, primaryAddr)

if online {

metrics.IncEventCounter(instanceAddCount)

} else {

metrics.IncEventCounter(instanceAddErrorCount)

}

case innodb.InstanceStatusUnreachable:

metrics.IncStatusCounter(instanceStatusCount, innodb.InstanceStatusUnreachable)

default:

metrics.IncStatusCounter(instanceStatusCount, innodb.InstanceStatusUnknown)

glog.Errorf("Received unrecognised cluster membership status: %q", instanceStatus)

}

if online && !m.Instance.MultiMaster {

m.ensurePrimaryControllerState(ctx, clusterStatus)

}

return online

}

浙公网安备 33010602011771号

浙公网安备 33010602011771号