Spark Study Notes: Using PySpark

1. Start the pyspark shell

2. Read a text file from HDFS

>>> from pyspark.sql import SparkSession
>>> spark = SparkSession.builder.appName("myjob").getOrCreate()
>>> df = spark.read.text("hdfs:///user/lintong/logs/test")
>>> df.show()
+-----+
|value|
+-----+
|    1|
|    2|
|    3|
|    4|
+-----+

3. Exit pyspark with exit()

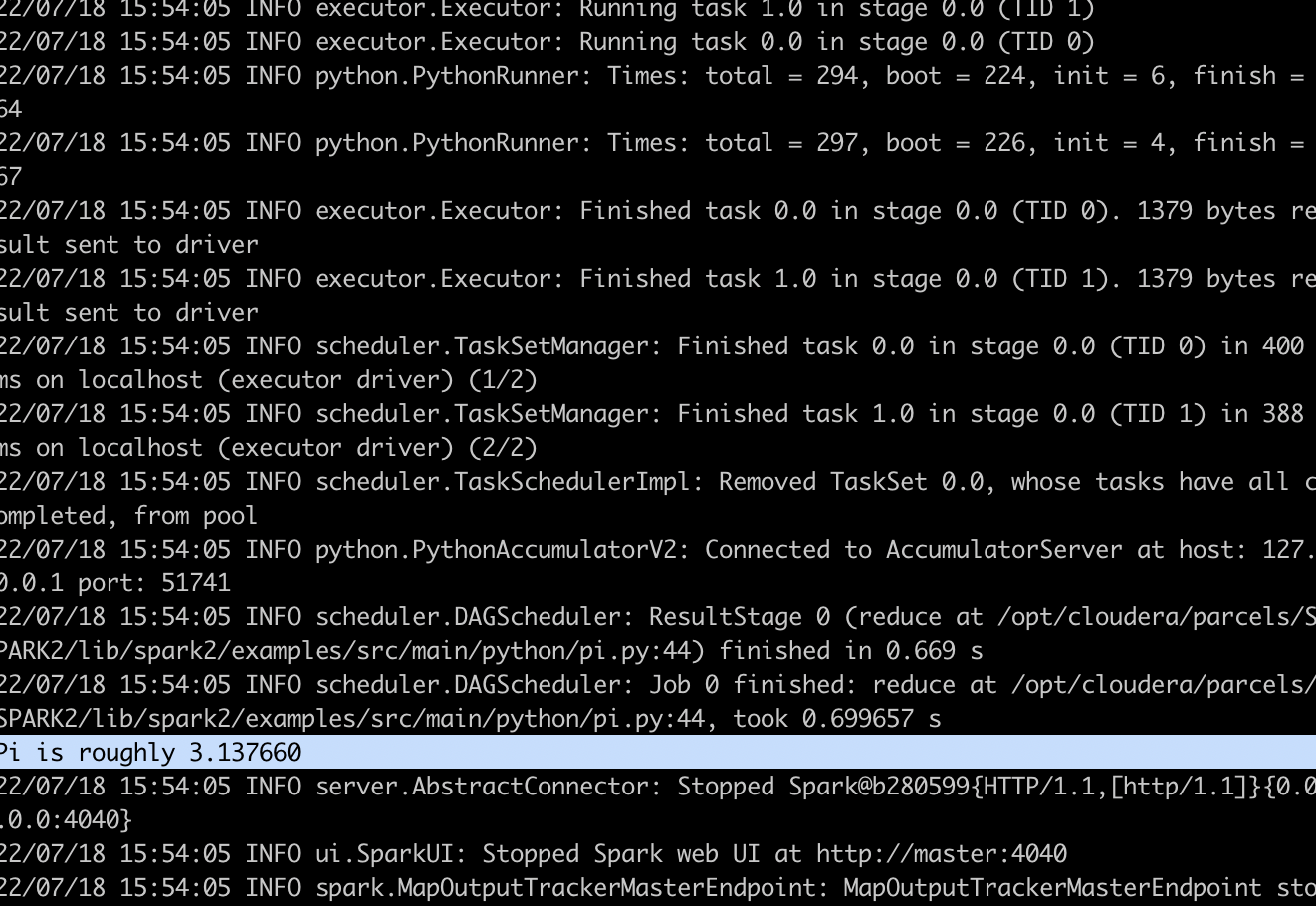

4. Submit the PySpark job pi.py with spark-submit (on this Cloudera cluster, the Spark 2 launcher is named spark2-submit)

spark2-submit --master local[*] /opt/cloudera/parcels/SPARK2/lib/spark2/examples/src/main/python/pi.py
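pi.py is one of the examples shipped with Spark. As far as I know, it estimates π by Monte Carlo sampling. It draws random points in the square [-1, 1] × [-1, 1] and counts how many land inside the unit circle. The number of partitions comes from an optional first argument (default 2), with 100000 samples per partition. The sketch below reproduces that map/reduce logic in plain Python with no Spark dependency, only to show what the job computes. The names `inside` and `estimate_pi` and the `seed` parameter are hypothetical.

```python
import random
from functools import reduce
from operator import add


def inside(_):
    """Return 1 if a random point in [-1, 1] x [-1, 1] lies in the unit circle."""
    x = random.random() * 2 - 1
    y = random.random() * 2 - 1
    return 1 if x ** 2 + y ** 2 <= 1 else 0


def estimate_pi(partitions=2, seed=None):
    """Plain-Python analogue of the map/reduce in pi.py (no Spark involved)."""
    if seed is not None:
        random.seed(seed)
    n = 100000 * partitions
    chunk = n // partitions
    # Split 1..n into equal chunks, like parallelize(range(1, n + 1), partitions).
    counts = []
    for p in range(partitions):
        part = range(p * chunk + 1, (p + 1) * chunk + 1)
        counts.append(reduce(add, map(inside, part), 0))  # per-partition count
    count = reduce(add, counts, 0)  # combine partition results
    # Circle area / square area = pi / 4, so scale the hit ratio by 4.
    return 4.0 * count / n


if __name__ == "__main__":
    print("Pi is roughly %f" % estimate_pi(partitions=2, seed=42))
```

In the Spark version, the per-partition counting runs on executors through `parallelize(...).map(...).reduce(add)`. Passing a number after pi.py on the spark2-submit command line should raise the sample count accordingly.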

This article is published only on cnblogs and on tonglin0325's blog. Author: tonglin0325. When reposting, please credit the original link: https://www.cnblogs.com/tonglin0325/p/5463816.html

Zhejiang Public Security Network Filing No. 33010602011771
