根据druid将慢sql通过钉钉的方式进行告警功能记录

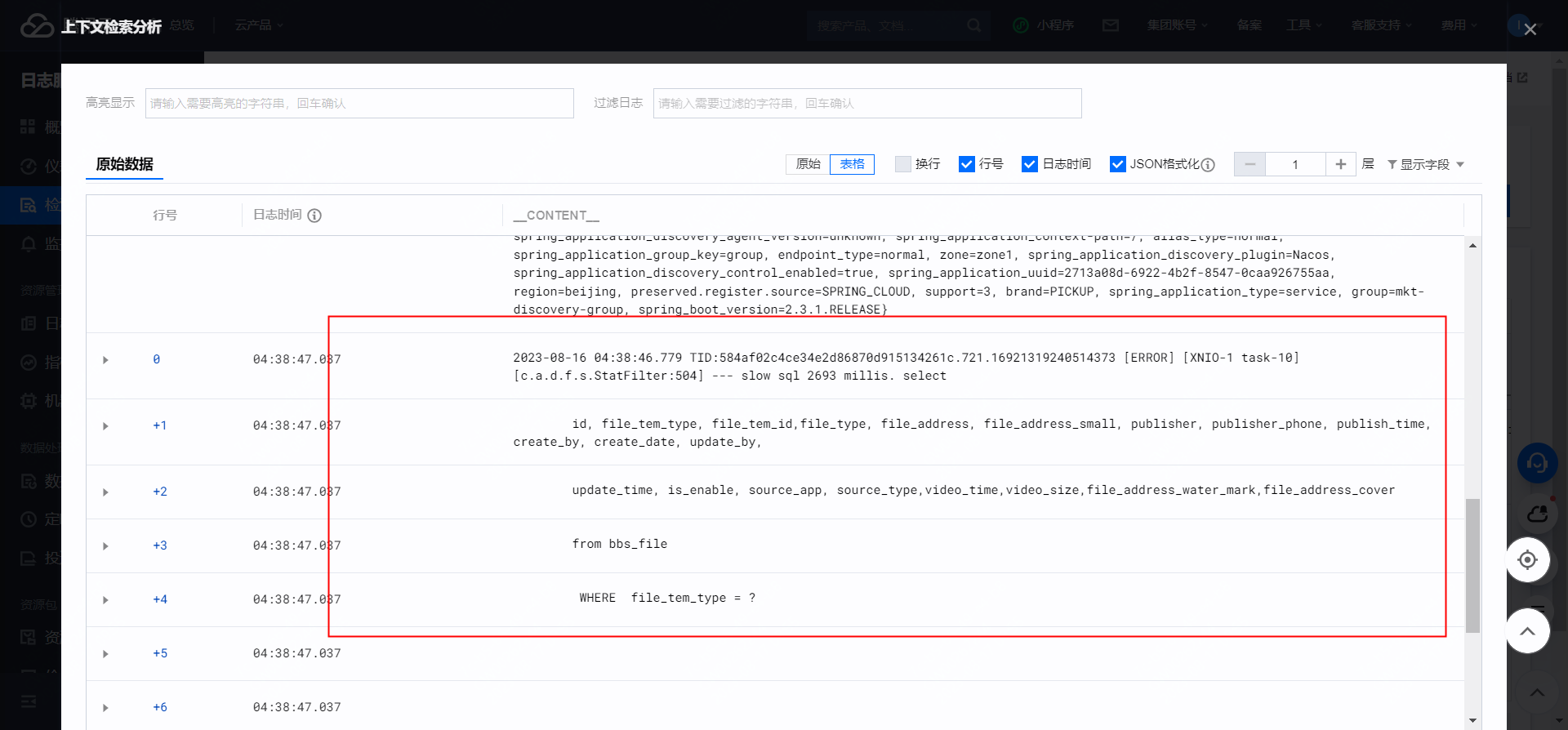

想要借助接入的druid把日志里面输入的慢sql通过钉钉的方式进行告警,由于项目里面之前接入了druid,格式大概如下:

这个是接入druid并且配置了slow sql为true的情况下,日志里面打印的slow sql。刚开始我的想法是通过重写log4j的日志来进行记录,然后看了druid的源码,看到这个日志是怎么打印的;最终发现不行,自己重写子类发现dubug不进自己的重写的方法里面;

然后又去github上面,看到有人在issue里面问这个问题,作者也给出了方式:参考(https://github.com/alibaba/druid/wiki/%E6%80%8E%E4%B9%88%E4%BF%9D%E5%AD%98Druid%E7%9A%84%E7%9B%91%E6%8E%A7%E8%AE%B0%E5%BD%95)

但是这个方法我试了,也不行。

刚开始因为对druid不是很熟悉,看到有人在issue里面提到一种方案是:通过获取druid的日志,来定时轮询分析里面的慢sql。获取druid sql 日志 可以通过durid提供的接口来实现,其接口为:

List<Map<String, Object>> mapList = DruidStatManagerFacade.getInstance().getDataSourceStatDataList();

这个就是接入druid后在控制台看到的那些sql信息,刚开始我以为是慢sql的记录,就想如果能够通过定时每次拉取这些慢sql,然后去定时告警不就行了? 但是如果每次都堆积慢sql,那随着堆积越来越多,会有问题啊。于是这个方案自己给推翻了。

网上看到在druid里面可以设置一个属性,timeBetweenLogStatsMillis。可以设置日志的删除间隔时间,那么是不是可以通过设置该属性,然后去定时获取呢?

于是按照这个思路:定时去拉取里面的sql信息,对里面的sql进行解析;然后去进行告警打印。(次方案有问题,后文会再说)先把一些用到的代码贴出来:

package com.gwm.marketing.filter.slowsql; @Data @Builder @AllArgsConstructor @NoArgsConstructor @ToString public class DruidSlowSqlDto { /**druid慢sql的id值*/ @JsonProperty(value = "ID") private Long id; /**慢sql语句*/ @JsonProperty(value = "SQL") private String sql; /**耗时分布直方图,用于跟踪查询执行所花费的时间以及保持结果的时间 比如[0, 0, 2, 0, 0, 0, 0, 0], * 第一个索引位置表示小于1毫秒的时间范围,第二个索引位置表示1毫秒到10毫秒的时间范围,以此类推. * 这个表示2条时间范围在10到100毫秒的数据*/ @JsonProperty(value = "ExecuteAndResultHoldTimeHistogram") private String[] executeAndResultHoldTimeHistogram; /**effectedRowCountHistogram表示查询执行期间受影响的行数的直方图。第一个索引位置表示受影响行数为1到10的查询次数, * 第二个索引位置表示受影响行数为10到100的查询次数,以此类推*/ @JsonProperty(value = "EffectedRowCountHistogram") private String[] effectedRowCountHistogram; /**Druid中表示从查询结果中获取的行数的直方图,跟踪查询结果中行数的分布情况。第一个索引位置表示受影响行数为1到10的查询次数, * 第二个索引位置表示受影响行数为10到100的查询次数,以此类推*/ @JsonProperty(value = "FetchRowCountHistogram") private String[] fetchRowCountHistogram; /**耗时最久的sql执行的时间*/ @JsonProperty(value = "MaxTimespanOccurTime") private Long maxTimespanOccurTime; /**最近一次的慢sql执行时间*/ @JsonProperty(value = "LastTime") private Long lastTime; /**最后一次慢sql的where条件参数*/ @JsonProperty(value = "LastSlowParameters") private String lastSlowParameters; /**执行次数*/ @JsonProperty(value = "ExecuteCount") private Long executeCount; /**最大执行耗时 单位:毫秒*/ @JsonProperty(value = "MaxTimespan") private Long maxTimespan; /**当前并发数*/ @JsonProperty(value = "ConcurrentMax") private Long concurrentMax; /**正在执行的SQL数量*/ @JsonProperty(value = "RunningCount") private Long runningCount; /** * 根据id做分组统计次数 * @param id */ public DruidSlowSqlDto(Long id) { this.id = id; } }

package com.gwm.marketing.filter.slowsql; @Component public class SlowSqlBufferTrigger { private Logger logger = LoggerFactory.getLogger(this.getClass()); @Resource private SlowSqlConfig slowSqlConfig; private static String[] colors = { // 蓝色 "#0000FF", // 红色 "#FF0000", // 绿色 "#00FF00", // 紫色 "#FF00FF", // 青色 "#00FFFF", // 橙色 "#FFA500", // 粉色 "#FFC0CB", // 灰色 "#808080", // 黑色 "#000000" }; BufferTrigger<DruidSlowSqlDto> stringBufferTrigger = BufferTrigger.<DruidSlowSqlDto, List<DruidSlowSqlDto>>simple() .maxBufferCount(1000) .interval(50, TimeUnit.SECONDS) .setContainer(() -> synchronizedList(new ArrayList<>()), List::add) .consumer(this::slowSqlSendAlarm) .build(); private void slowSqlSendAlarm(List<DruidSlowSqlDto> list) { if (CollectionUtils.isNotEmpty(list)) { //根据druid ID做分组,统计每个慢sql次数 Map<DruidSlowSqlDto, Long> druidMap = list.stream().collect(Collectors.groupingBy(t -> t, Collectors.mapping(m -> new DruidSlowSqlDto(m.getId()), Collectors.counting()))); if (druidMap.size() > 0) { long sum = druidMap.values().stream().mapToLong(Long::longValue).sum(); String slowSqlStr = druidMap.entrySet().stream().map(m -> buildSql(m.getKey()) + "执行次数:" + m.getValue() + "次;").collect(Collectors.joining()); Tag tag = Tag.builder().applicationName(DingdingAlarmUtil.applicationName).env(DingdingAlarmUtil.env).ip(IpUtil.initIp()).build(); DingdingAlarmUtil.sendAlarm(slowSqlStr, tag, sum); } } } private String buildSql(DruidSlowSqlDto dto) { Random random = new Random(); String randomColor = colors[random.nextInt(colors.length)]; String randomTimeColor = colors[random.nextInt(colors.length)]; StringBuilder builder = new StringBuilder(); builder.append("").append("\n\n**sql语句:** <font color=").append(randomColor).append(" size=6>" + dto.getSql() + "</font>\n" + "\n\n**参数:**" + dto.getLastSlowParameters() + "\n" + "\n\n**maxTime <font color=" + randomTimeColor + " face=\"黑体\">**:" + dto.getMaxTimespan() + "毫秒</font>;\n"); return builder.toString(); } public void enqueue(DruidSlowSqlDto druidSlowSqlDto) { stringBufferTrigger.enqueue(druidSlowSqlDto); } /** * 定时扫描慢sql * * @param param * @return * @throws Exception */ @XxlJob("getSlowSqlEveryMinutesJobHandler") public ReturnT<String> getSlowSqlEveryMinutesJobHandler(String param) throws Exception { XxlJobLogger.log("get slow sql every minutes"); try { List<Map<String, Object>> mapList = DruidStatManagerFacade.getInstance().getDataSourceStatDataList(); if (mapList != null && mapList.size() > 0) { for (Map<String, Object> map : mapList) { Integer dataSourceId = (Integer) map.get("Identity"); List<Map<String, Object>> lists = DruidStatManagerFacade.getInstance().getSqlStatDataList(dataSourceId); if (lists != null && lists.size() > 0) { logger.info("存储的sql数据量:" + lists.size() + "条"); lists.forEach(t -> { //todo 获取ExecuteAndResultSetHoldTime当前sql的执行时间,只有sql时间大于慢sql时间在进行打印 if(t.get("MaxTimespan") != null && Long.valueOf(t.get("MaxTimespan").toString()) > slowSqlConfig.getSlowSqlMillis()){ logger.info("druid慢sql信息:" + JSONObject.toJSON(t)); DruidSlowSqlDto dto = new DruidSlowSqlDto(); try { ObjectMapper mapper = new ObjectMapper(); mapper.configure(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES, false); try { dto = mapper.readValue(JSONObject.toJSON(t).toString(), DruidSlowSqlDto.class); } catch (Exception e) { logger.error("转换异常"); } this.enqueue(dto); } catch (Exception e) { logger.info("定时获取慢sql异常", e); dto.setSql(t.get("SQL").toString()); dto.setLastSlowParameters(t.get("LastSlowParameters").toString()); this.enqueue(dto); } } }); } } } } catch (Exception e) { logger.error("定时获取慢sql任务异常",e); } return ReturnT.SUCCESS; } }

其大概思路就是上文说的,通过xxljob定时拉取慢sql信息,然后将其放到内存中。(其实此处直接去进行钉钉告警就行了)。但是自己本机测试时候发现个问题:通过 DruidStatManagerFacade.getInstance().getSqlStatDataList(dataSourceId); 获取的是

这段时间全量的sql,而不仅仅是慢sql。 那如果发到线上的话,会有问题的。段时间内大量的sql,直接给程序oom了。。。。

这样想来想去,这个方案还是不行。这时候参考了知乎上看到别人写的,重写druid默认的过滤器不就行了(参考:druid集中监控所有SQL执行情况? - 罗愿的回答 - 知乎 https://www.zhihu.com/question/56749781/answer/2730085892)。durid默认的过滤器

是druid源码自带的statFilter.用处是在这里:

package com.gwm.marketing.config;

@Configuration

public class DruidMysqlConfig {

@Value("${spring.druid.slowSqlMillis}")

private Long slowSqlMillis;

@Value("${spring.datasource.druid.stat-view-servlet.login-username}")

private String druidUserName;

@Value("${spring.datasource.druid.stat-view-servlet.login-password}")

private String druidPassWord;

@Value("${spring.datasource.druid.timeBetweenLogStatsMillis}")

private Long timeBetweenLogStatsMillis;

/**

* 默认会扫描application.properties文件的以spring.druid开头的数据注入;此处用的是spring的数据库配置

* @return

*/

@ConfigurationProperties(prefix = "spring.datasource")

@Bean(initMethod = "init",destroyMethod = "close")

public DruidDataSource dataSource(){

DruidDataSource dataSource = new DruidDataSource();

//todo使用自定义的拦截器,不使用默认的

// dataSource.setProxyFilters(Lists.newArrayList(statGwmFilter()));

dataSource.setProxyFilters(Collections.singletonList(slowSqlFilter()));

//设置druid的重置间隔

dataSource.setTimeBetweenLogStatsMillis(timeBetweenLogStatsMillis);

return dataSource;

}

/**

* alibaba监听器 打印慢sql

* @return

*/

@Bean

public Filter statGwmFilter(){

StatFilter filter = new StatFilter();

filter.setSlowSqlMillis(slowSqlMillis);

filter.setLogSlowSql(true);

filter.setMergeSql(true);

return filter;

}

@Bean

public Filter slowSqlFilter(){

SqlMonitor sqlMonitor = new SqlMonitor();

return sqlMonitor;

}

/**

* 因为Springboot内置了servlet容器,所以没有web.xml,替代方法就是将ServletRegistrationBean注册进去

* 加入后台监控.这里其实就相当于servlet的web.xml

* @return

*/

@Bean

public ServletRegistrationBean servletRegistrationBean(){

ServletRegistrationBean<StatViewServlet> druidServlet = new ServletRegistrationBean<StatViewServlet>(new StatViewServlet(),"/druid/*");

druidServlet.addUrlMappings();

//后台需要有人登录,进行配置

//设置一些初始化参数

Map<String, String> initParas = new HashMap<String, String>(8);

initParas.put("loginUsername", druidUserName);

initParas.put("loginPassword", druidPassWord);

//允许谁能访问

initParas.put("allow", "");//这个值为空或没有就允许所有人访问,ip白名单

//initParas.put("allow","localhost");//只允许本机访问,多个ip用逗号,隔开

//initParas.put("deny","");//ip黑名单,拒绝谁访问 deny和allow同时存在优先deny

//禁用HTML页面的Reset按钮

initParas.put("resetEnable", "false");

druidServlet.setInitParameters(initParas);

return druidServlet;

}

}

直接重写该filter,重写后在这里指定使用自己的过滤器就行了。按照这个思路,重写过滤器:

package com.gwm.marketing.filter.slowsql;

@Component

public class SqlMonitor extends FilterEventAdapter {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Resource

private SlowSqlConfig slowSqlConfig;

@Resource

private SlowSqlBufferTrigger slowSqlBufferTrigger;

private static final String IGNORE_SQL = "SELECT 1";

@Override

protected void statementExecuteBefore(StatementProxy statement, String sql) {

super.statementExecuteBefore(statement, sql);

statement.setLastExecuteStartNano();

}

@Override

protected void statementExecuteAfter(StatementProxy statement, String sql, boolean result) {

if (IGNORE_SQL.equals(sql)) {

return;

}

final long nowNano = System.nanoTime();

final long nanos = nowNano - statement.getLastExecuteStartNano();

long millis = nanos / (1000 * 1000);

if (millis >= slowSqlConfig.getSlowSqlMillis()) {

String slowParameters = buildSlowParameters(statement);

DruidSlowSqlDto dto = DruidSlowSqlDto.builder()

.sql(sql).maxTimespan(millis).lastSlowParameters(slowParameters).build();

logger.info("slow sql " + millis + " millis. " + sql + "" + slowParameters);

slowSqlBufferTrigger.enqueue(dto);

}

}

@Override

protected void statementExecuteUpdateBefore(StatementProxy statement, String sql) {

super.statementExecuteUpdateBefore(statement, sql);

statement.setLastExecuteStartNano();

}

@Override

protected void statementExecuteBatchBefore(StatementProxy statement) {

super.statementExecuteBatchBefore(statement);

final String sql = statement.getBatchSql();

statement.setLastExecuteStartNano();

}

@Override

protected void statementExecuteBatchAfter(StatementProxy statement, int[] result) {

final long nowNano = System.nanoTime();

final long nanos = nowNano - statement.getLastExecuteStartNano();

long millis = nanos / (1000 * 1000);

if (millis >= slowSqlConfig.getSlowSqlMillis()) {

String batchSql = statement.getBatchSql();

String slowParameters = buildSlowParameters(statement);

DruidSlowSqlDto dto = DruidSlowSqlDto.builder()

.sql(batchSql).maxTimespan(millis).lastSlowParameters(slowParameters).build();

logger.info("slow sql " + millis + " millis. " + batchSql + "" + slowParameters);

slowSqlBufferTrigger.enqueue(dto);

}

}

@Override

protected void statementExecuteUpdateAfter(StatementProxy statement, String sql, int updateCount) {

if (IGNORE_SQL.equals(sql)) {

return;

}

final long nowNano = System.nanoTime();

final long nanos = nowNano - statement.getLastExecuteStartNano();

long millis = nanos / (1000 * 1000);

if (millis >= slowSqlConfig.getSlowSqlMillis()) {

String slowParameters = buildSlowParameters(statement);

DruidSlowSqlDto dto = DruidSlowSqlDto.builder()

.sql(sql).maxTimespan(millis).lastSlowParameters(slowParameters).build();

logger.info("slow sql " + millis + " millis. " + sql + "" + slowParameters);

slowSqlBufferTrigger.enqueue(dto);

}

}

@Override

protected void statementExecuteQueryBefore(StatementProxy statement, String sql) {

super.statementExecuteQueryBefore(statement, sql);

statement.setLastExecuteStartNano();

}

@Override

protected void statementExecuteQueryAfter(StatementProxy statement, String sql, ResultSetProxy resultSet) {

if (IGNORE_SQL.equals(sql)) {

return;

}

final long nowNano = System.nanoTime();

final long nanos = nowNano - statement.getLastExecuteStartNano();

long millis = nanos / (1000 * 1000);

if (millis >= slowSqlConfig.getSlowSqlMillis()) {

String slowParameters = buildSlowParameters(statement);

DruidSlowSqlDto dto = DruidSlowSqlDto.builder()

.sql(sql).maxTimespan(millis).lastSlowParameters(slowParameters).build();

logger.info("slow sql " + millis + " millis. " + sql + "" + slowParameters);

slowSqlBufferTrigger.enqueue(dto);

}

}

protected String buildSlowParameters(StatementProxy statement) {

JSONWriter out = new JSONWriter();

out.writeArrayStart();

for (int i = 0, parametersSize = statement.getParametersSize(); i < parametersSize; ++i) {

JdbcParameter parameter = statement.getParameter(i);

if (i != 0) {

out.writeComma();

}

if (parameter == null) {

continue;

}

Object value = parameter.getValue();

if (value == null) {

out.writeNull();

} else if (value instanceof String) {

String text = (String) value;

if (text.length() > 100) {

out.writeString(text.substring(0, 97) + "...");

} else {

out.writeString(text);

}

} else if (value instanceof Number) {

out.writeObject(value);

} else if (value instanceof java.util.Date) {

out.writeObject(value);

} else if (value instanceof Boolean) {

out.writeObject(value);

} else if (value instanceof InputStream) {

out.writeString("<InputStream>");

} else if (value instanceof NClob) {

out.writeString("<NClob>");

} else if (value instanceof Clob) {

out.writeString("<Clob>");

} else if (value instanceof Blob) {

out.writeString("<Blob>");

} else {

out.writeString('<' + value.getClass().getName() + '>');

}

}

out.writeArrayEnd();

return out.toString();

}

}

重写的时候参考了druid的过滤器,然后在sql查询执行前、更新执行前、批量查询执行前等记录当前时间,然后在查询执行后、更新执行后、批量操作执行后又记录执行后的时间,通过这个时间戳的差值计算执行的慢sql时间。如果sql时间大于设置的慢sql时间,则进行告警功能。

通过这样的方式就不用每次轮训了,而且也不用去定期删除了。 这个其实就是自己刚开始想要通过重写日志的方式来获取原理是一样的,重写过滤器的方式来达到这种效果。 只是刚开始对druid这块不熟悉。

针对告警这块,自己总结:可以通过重写日志(比如feign的日志)、也可以自定义一些过滤器或者监听器来重写,但是在重写过滤器或者监听器时候,要指定地方使用。

浙公网安备 33010602011771号

浙公网安备 33010602011771号