[Java] My Web Crawler

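Overview: a not very smart, slow crawler written purely for fun. To use it, inspect the pages of the target site and adjust the regular expressions so that they capture the data you want.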
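Since the whole approach hinges on adjusting a regular expression to the target site, here is a minimal sketch of that extraction step on its own. The class `LinkExtractor` and the method `extractLinks` are hypothetical names, not part of the crawler below; the sample href is the one quoted in a comment of the main class further down.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of the original crawler.
class LinkExtractor {

    // Returns every substring of html that matches regex, in order of appearance.
    static List<String> extractLinks(String html, String regex) {
        List<String> links = new ArrayList<String>();
        Matcher m = Pattern.compile(regex).matcher(html);
        while (m.find()) {
            links.add(m.group());
        }
        return links;
    }

    public static void main(String[] args) {
        // Sample markup built around the href quoted in the main class.
        String html = "<a href=\"/html/20170904/2/7002870.html\">case</a>"
                + "<a href=\"/about.html\">about</a>";
        // Forward slashes need no escaping in Java regexes;
        // the dot is escaped so that it matches only a literal '.'.
        String regex = "/html/[0-9]+/2/[0-9_]+\\.html";
        System.out.println(extractLinks(html, regex));
    }
}
```

Trying the pattern against a saved copy of one page like this is usually quicker than rerunning the whole crawl after every change.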

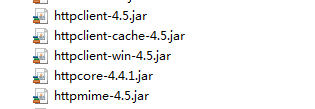

Environment: the Apache HttpClient jars are required. They can be downloaded from my link below:

https://download.csdn.net/download/the_fool_/10046597

Update: the same dependency as a Maven declaration:

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5</version>

</dependency>

Utility class 1: fetches the HTML source of a page

package com.zzt.spider;

import java.io.IOException;

import java.util.Random;

import org.apache.http.HttpEntity;

import org.apache.http.HttpResponse;

import org.apache.http.client.ClientProtocolException;

import org.apache.http.client.HttpClient;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.impl.client.DefaultHttpClient;

import org.apache.http.util.EntityUtils;

/**

* Fetches an entire page and returns it as a string

* @author Administrator

*

*/

public class SpiderChild {

public static void main(String[] args) {

Random r =new Random();

int nextInt = r.nextInt(8)+1;

System.out.println(nextInt);

// String stringHtml = getStringHtml("http://www.163.com");

// System.out.println(stringHtml);

// String[] contents = stringHtml.split("<a href=\"");

// for(String s :contents){

// System.out.println(s);

// }

}

// Fetch an entire page as a string

public static String getStringHtml(String url){

// Create the client (DefaultHttpClient is deprecated since HttpClient 4.3 but still available in 4.5)

HttpClient client = new DefaultHttpClient();

HttpGet getHttp = new HttpGet(url);

// The whole page as text

String content = null;

HttpResponse response;

try {

response = client.execute(getHttp);

// Get the response entity

HttpEntity entity = response.getEntity();

if(entity!=null){

content = EntityUtils.toString(entity, "UTF-8"); // UTF-8 applies only when the response declares no charset

//System.out.println(content);

}

} catch (ClientProtocolException e) {

// TODO Auto-generated catch block

e.printStackTrace();

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}finally{

client.getConnectionManager().shutdown();

}

return content;

}

}
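A note on encodings: when a response declares no charset, HttpClient 4.x's single-argument `EntityUtils.toString(entity)` falls back to ISO-8859-1, which garbles Chinese pages. The sketch below shows one way to pick a charset from a `Content-Type` value with an explicit fallback. `CharsetPicker` and `fromContentType` are hypothetical names, and the parsing is deliberately simple (it only looks for a `charset=` parameter).

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical helper for illustration; not part of the original crawler.
class CharsetPicker {

    // Returns the charset named in a Content-Type value such as
    // "text/html; charset=utf-8", or fallback when none is usable.
    static Charset fromContentType(String contentType, Charset fallback) {
        if (contentType == null) {
            return fallback;
        }
        for (String part : contentType.split(";")) {
            String p = part.trim();
            if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                String name = p.substring("charset=".length()).trim().replace("\"", "");
                try {
                    return Charset.forName(name);
                } catch (IllegalArgumentException e) {
                    // Malformed or unsupported charset name
                    return fallback;
                }
            }
        }
        return fallback;
    }

    public static void main(String[] args) {
        System.out.println(fromContentType("text/html; charset=utf-8", StandardCharsets.ISO_8859_1));
        System.out.println(fromContentType("text/html", StandardCharsets.UTF_8));
    }
}
```

With HttpClient itself, the two-argument `EntityUtils.toString(entity, "UTF-8")` supplies such a fallback directly. A helper like this is more useful on the plain `URLConnection` path, where `getContentType()` returns the raw header value.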

Main class:

package com.zzt.spider;

import java.io.BufferedReader;

import java.io.File;

import java.io.FileOutputStream;

import java.io.FileWriter;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.OutputStream;

import java.io.PrintWriter;

import java.net.MalformedURLException;

import java.net.URL;

import java.net.URLConnection;

import java.util.ArrayList;

import java.util.List;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

/**

* ZX 2017.7.26

* A crawler customized for each target site; not comprehensive

* @author Administrator

*

*/

public class BigBugSpiderSu {

public static void main(String[] args) throws Exception {

File file = new File("E:\\htmlfile");

if(!file.exists()){

boolean createNewFile = file.mkdir();

System.out.println(createNewFile);

}

crawl();

}

public static void crawl() {

System.out.println("begin+++++++++++++++++++++++++++++++++++++++++++");

String url = "http://www.xxxx.cn/html/";

for(int i=0; i<=30000; i++){

String URLS = url + i+".html";

System.out.println("30000 pages in total, current="+i+".html");

try {

spider(URLS);

for(int j=0;j<urlUeue.size();j++){

String u = urlUeue.get(j); System.out.println("page: "+u);

String stringHtml = SpiderChild.getStringHtml(u);

String fileName = u.substring(u.lastIndexOf('/') + 1); // last path segment, e.g. 7002870.html; these URLs contain no '=', so indexOf("=") returned -1 and substring threw

System.out.println(u);

try {

Thread.sleep(1000);

writetoFile(stringHtml,fileName);

} catch (Exception e) {

e.printStackTrace();

continue;

}

}

urlUeue.clear();

} catch (Exception e) {

e.printStackTrace();

continue;

}

try {

Thread.sleep(0);

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

System.out.println("end+++++++++++++++++++++++++++++++++++++++++++");

System.out.println("end+++++++++++++++++++++++++++++++++++++++++++");

System.out.println("end+++++++++++++++++++++++++++++++++++++++++++");

}

// Container for the collected links

static List<String> urlUeue = new ArrayList<String>();

// Collect all matching links from one page

private static void spider(String URLS) throws Exception{

URL url = null;

URLConnection urlconn = null;

BufferedReader br = null;

PrintWriter pw = null;

//http://www.ajxxgk.jcy.cn/html/[0-9_/]+.html

//href="/html/20170904/2/7002870.html">

String regex = "/html/[0-9]+/2/[0-9_]+\\.html"; // forward slashes need no escaping; "//" would only match two literal slashes

Pattern p = Pattern.compile(regex);

try {

url = new URL(URLS);

urlconn = url.openConnection(); //X-Forward-For

urlconn.setRequestProperty("Accept","text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8");

urlconn.setRequestProperty("Accept-Encoding","identity"); // URLConnection does not decompress gzip bodies itself, so do not ask for them

urlconn.setRequestProperty("Accept-Language","zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3");

urlconn.setRequestProperty("Connection","keep-alive");

urlconn.setRequestProperty("Cookie", "__jsluid=053c2fe045bbf28d2215a0aa0aa713e5; Hm_lvt_2e64cf4f6ff9f8ccbe097650c83d719e=1502258037,1504571969; Hm_lpvt_2e64cf4f6ff9f8ccbe097650c83d719e=1504574596; sYQDUGqqzHpid=page_0; sYQDUGqqzHtid=tab_0; PHPSESSID=7ktaqicdremii959o4d0p2rgm6; __jsl_clearance=1504575799.118|0|cwzSt6rKCXJZrf5ZOVGhco1TpWw%3");

urlconn.setRequestProperty("User-Agent", "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:47.0) Gecko/20100101 Firefox/47.0");

urlconn.setRequestProperty("Host","www.ajxxgk.jcy.cn");

urlconn.setRequestProperty("Content-Type","text/html; charset=UTF-8");

urlconn.setRequestProperty("Referer","http://www.ajxxgk.jcy.cn/html/zjxflws/2.html");

pw = new PrintWriter(new FileWriter("e:/url.txt"), true);

br = new BufferedReader(new InputStreamReader(

urlconn.getInputStream(), "UTF-8")); // assumes the site serves UTF-8; without a charset the platform default is used

String buf = null;

while ((buf = br.readLine()) != null) {

System.out.println(buf);

// String string = new String(buf.getBytes(), "utf-8");

// System.out.println(string);

Matcher buf_m = p.matcher(buf);

while (buf_m.find()) {

urlUeue.add("http://www.xxxx.cn"+buf_m.group()); // the match already starts with '/'

}

}

//System.out.println("fetched successfully");

} catch (MalformedURLException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} finally {

try {

if (br != null) br.close(); // br stays null when the connection fails

} catch (IOException e) {

e.printStackTrace();

}

if (pw != null) pw.close();

}

}

public static void writetoFile(String context,String fileName)throws Exception{

// Build the target file

File file = new File("E:" + File.separator + "htmlfile"+File.separator+fileName);

OutputStream out = null;

try {

// Open an output stream for the file

out = new FileOutputStream(file);

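// Convert the content to a byte array (explicit UTF-8 rather than the platform default)

byte[] data = context.getBytes("UTF-8");

// Write the content to the file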

out.write(data);

} catch (Exception e) {

e.printStackTrace();

} finally {

try {

// Close the output stream (it is null if the file could not be opened)

if (out != null) out.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}
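The storage step can also be written more compactly with `java.nio.file`. This is a minimal sketch, assuming the file name should come from the URL's last path segment and that pages are always written as UTF-8. `PageStore`, `fileNameFor`, and `save` are hypothetical names; note that two URLs with the same last segment would overwrite each other.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper for illustration; not part of the original crawler.
class PageStore {

    // Derives a file name from the last path segment of the URL.
    // The query string is dropped and any character outside
    // [A-Za-z0-9._-] is replaced with '_'.
    static String fileNameFor(String url) {
        String s = url;
        int q = s.indexOf('?');
        if (q >= 0) {
            s = s.substring(0, q);
        }
        String name = s.substring(s.lastIndexOf('/') + 1);
        name = name.replaceAll("[^A-Za-z0-9._-]", "_");
        return name.isEmpty() ? "index.html" : name;
    }

    // Writes html into dir (created if missing) and returns the file path.
    static Path save(Path dir, String url, String html) throws IOException {
        Files.createDirectories(dir);
        Path target = dir.resolve(fileNameFor(url));
        Files.write(target, html.getBytes(StandardCharsets.UTF_8));
        return target;
    }

    public static void main(String[] args) throws IOException {
        // A temporary directory keeps the demo independent of any drive letter.
        Path dir = Files.createTempDirectory("htmlfile");
        Path saved = save(dir, "http://www.xxxx.cn/html/20170904/2/7002870.html", "<html></html>");
        System.out.println(saved.getFileName());
    }
}
```

`Files.write` opens, writes, and closes the file in one call, so there is no stream left to close in a `finally` block.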

//class Dog implements Runnable{

// public List<String> urlUeue;

// @Override

// public void run() {

// for(String u:urlUeue){

//

// String stringHtml = SpiderChild.getStringHtml(u);

// String fileName=u.substring(u.indexOf("xiangqing-"));

// System.out.println("fileName"+fileName);

// writetoFile(stringHtml,fileName);

// }

//

// }

// public void writetoFile(String context,String fileName){

// // Build the target file

// File file = new File("E:" + File.separator + "htmlfile"+File.separator+fileName);

// OutputStream out = null;

// try {

// // Open an output stream for the file

// out = new FileOutputStream(file);

// // Convert the content to a byte array

// byte[] data = context.getBytes();

// // Write the content to the file

// out.write(data);

// } catch (Exception e) {

// e.printStackTrace();

// } finally {

// try {

// // Close the output stream

// out.close();

// } catch (Exception e) {

// e.printStackTrace();

// }

// }

// }

// public Dog(List<String> urlUeue) {

// this.urlUeue = urlUeue;

// }

// public Dog() {

// super();

// }

//

//}
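The commented-out `Dog` class above looks like an attempt at downloading pages on a separate thread. One possible way to do that with the JDK's `ExecutorService` is sketched below. This is an assumption about the intent, not the author's finished design. `ParallelFetcher` and `fetchAll` are hypothetical names, and the fetch function is passed in so the example runs offline with a stub.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

// Hypothetical helper for illustration; not part of the original crawler.
class ParallelFetcher {

    // Fetches every URL on a fixed-size thread pool and returns url -> page
    // text in input order. URLs whose fetch throws or returns null are left out.
    static Map<String, String> fetchAll(List<String> urls,
                                        Function<String, String> fetcher,
                                        int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (String url : urls) {
                Callable<String> task = () -> fetcher.apply(url);
                futures.add(pool.submit(task));
            }
            Map<String, String> pages = new LinkedHashMap<>();
            for (int i = 0; i < urls.size(); i++) {
                String url = urls.get(i);
                try {
                    String html = futures.get(i).get();
                    if (html != null) {
                        pages.put(url, html);
                    }
                } catch (ExecutionException e) {
                    System.err.println("failed: " + url + " (" + e.getCause() + ")");
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;
                }
            }
            return pages;
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        // Stub fetcher so the example needs no network access.
        Function<String, String> stub = url -> "<html>" + url + "</html>";
        Map<String, String> pages = fetchAll(
                Arrays.asList("http://www.xxxx.cn/html/1.html", "http://www.xxxx.cn/html/2.html"),
                stub, 2);
        System.out.println(pages.keySet());
    }
}
```

In the crawler, the fetcher argument could be `SpiderChild::getStringHtml`. Keep in mind that every extra thread multiplies the request rate, so the one-second pause used in `crawl()` would need an equivalent here to stay polite to the target site.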