k8s-v1.19.4 Cluster Deployment Lab Notes

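I. Preparation

1.1 Deployment Notes

Kubernetes is deployed from binaries: download the release binary packages from GitHub and deploy each component by hand to form a Kubernetes cluster.

Before you start, the machines for the Kubernetes cluster must meet the following requirements:

- One or more machines running CentOS 7.x x86_64.

- Hardware: 2 GB RAM or more, 2 or more CPUs, 30 GB of disk or more.

- Internet access for pulling images. If the servers cannot reach the internet, download the images in advance and import them on the nodes.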

1.2 Software and Sources

- Main software and sources

| Software and version | Binary package | Download link | Source |
|---|---|---|---|
| CentOS Linux release 7.4.1708 (Core) | CentOS-7-x86_64-Minimal-1708.iso | https://vault.centos.org/7.4.1708/isos/x86_64/CentOS-7-x86_64-Minimal-1708.iso | https://www.centos.org/ |
| kubernetes v1.19.4 | kubernetes-server-linux-amd64.tar.gz | https://dl.k8s.io/v1.19.4/kubernetes-server-linux-amd64.tar.gz | https://github.com/ |
| Etcd v3.4.13 | etcd-v3.4.13-linux-amd64.tar.gz | https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz | https://github.com/ |
| Docker 19.03.13 | docker-19.03.13.tgz | https://download.docker.com/linux/static/stable/x86_64/docker-19.03.13.tgz | https://www.docker.com/ |

- Images and YAML manifest files used in the deployment

| REPOSITORY | TAG | SIZE |
|---|---|---|
| nginx | latest | 133MB |
| kubernetesui/dashboard | v2.0.4 | 225MB |
| coredns/coredns | 1.7.0 | 45.2MB |
| kubernetesui/metrics-scraper | v1.0.4 | 36.9MB |
| quay.io/coreos/flannel | v0.12.0-amd64 | 52.8MB |
| busybox | 1.28.3 | 1.15MB |
| kubernetes/pause | latest | 240kB |

apiserver-to-kubelet-rbac.yaml

kube-flannel.yaml

kubernetes-dashboard.yaml

coredns-1.7.0.yaml

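- Download link for the software and resources: https://pan.baidu.com/s/1oasap9txommunSNM2syYBQ (extraction code: ak8s)

1.3 Server Plan

| Host | IP | Node | Spec | Components |
|---|---|---|---|---|
| vms31 | 192.168.26.31 | k8s-master | 2 CPU cores, 4 GB RAM | kube-apiserver,kube-controller-manager,kube-scheduler,kubelet,kube-proxy,docker,etcd |
| vms32 | 192.168.26.32 | k8s-node1 | 2 CPU cores, 4 GB RAM | kubelet,kube-proxy,docker |

- This is the quickest setup: a 2-node cluster with a single master, where the master node also acts as a worker node.

- Worker (compute) nodes can easily be added later without affecting the running cluster.

- The setup can be extended to a multi-master high-availability cluster, which requires a load balancer.

- Set up DNS, a manifest server and Harbor separately as needed.

- Network planning and storage planning are not covered here.

- More references: https://gitee.com/cloudlove2007/k8s-center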

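1.4 Node Initialization

1. On all nodes, add or update /etc/hosts:

192.168.26.31 vms31.example.com vms31 k8s-master

192.168.26.32 vms32.example.com vms32 k8s-node1

2. Install dependencies

Run on all machines (install any of the following packages that are missing):

yum install -y epel-release conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget unzip net-tools

Other packages can be installed later as they are needed.

3. Pass bridged IPv4 traffic to the iptables chains

Configure and apply on all nodes: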

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

If the following errors appear:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

the br_netfilter kernel module is not loaded. Load it with modprobe and apply the settings again:

~]# modprobe br_netfilter

~]# sysctl -p /etc/sysctl.d/k8s.conf

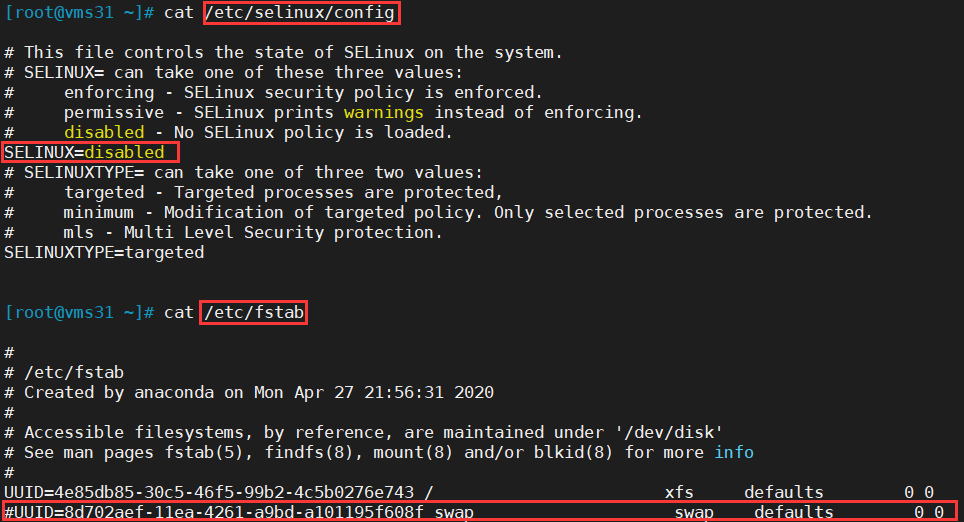
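The settings can be confirmed by reading the values back from /proc/sys. The function below is a minimal sketch that is not part of the original procedure: check_k8s_sysctls is a hypothetical helper name, and its optional argument (an alternate /proc/sys root) exists only so it can be tried without touching the host.

```shell
#!/usr/bin/env bash
# Sketch: verify that the three sysctls from /etc/sysctl.d/k8s.conf are active.
# Optional $1 = alternate /proc/sys root (hypothetical, for testing only).
check_k8s_sysctls() {
  local proc="${1:-/proc/sys}" key file rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> <proc>/net/ipv4/ip_forward
    file="${proc}/${key//.//}"
    if [ ! -r "$file" ]; then
      echo "missing: ${key} (is br_netfilter loaded?)" >&2
      rc=1
    elif [ "$(cat "$file")" != "1" ]; then
      echo "not enabled: ${key}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Called without arguments it checks the live host. Keep in mind that modprobe only loads br_netfilter until the next reboot (and the kernel upgrade below reboots the nodes); on systemd-based CentOS 7, a file such as /etc/modules-load.d/br_netfilter.conf containing the single line br_netfilter is a common way to load it at boot.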

4. Disable the firewall, SELinux and swap

On all nodes:

# Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

iptables -P FORWARD ACCEPT

# Disable SELinux

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Disable swap

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Example on vms31:

[root@vms31 ~]# firewall-cmd --state

running

[root@vms31 ~]# systemctl stop firewalld

[root@vms31 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@vms31 ~]# firewall-cmd --state

not running

[root@vms31 ~]# iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

[root@vms31 ~]# iptables -P FORWARD ACCEPT
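The sed expression used above for /etc/fstab only matches swap entries whose fields are separated by single spaces (the pattern is " swap "), so an fstab that uses tabs is left unchanged. The sketch below, with the hypothetical helper name comment_out_swap, runs the same sed on a given fstab file, keeps a .bak copy, and then prints any swap entry that is still active so the result can be checked.

```shell
#!/usr/bin/env bash
# Sketch: comment out swap entries in an fstab-format file and report leftovers.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "${fstab}.bak"                # keep a backup first
  sed -i '/ swap / s/^\(.*\)$/#\1/g' "$fstab"  # same expression as above
  # Print every uncommented line whose 3rd field (filesystem type) is swap;
  # no output means no swap entry will be activated at boot.
  awk '$1 !~ /^#/ && $3 == "swap"' "$fstab"
}
```

Typical use is swapoff -a followed by comment_out_swap /etc/fstab; if it prints a line, that entry still needs to be commented out by hand, and free -m should report 0 swap afterwards.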

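5. Configure time synchronization

On all nodes:

ntpdate ntp1.aliyun.com

You can also set up your own time server for synchronization.

6. Upgrade the kernel

On all nodes:

The stock 3.10.x kernel in CentOS 7.x has bugs that make Docker and Kubernetes unstable, for example:

- Newer Docker versions (after 1.13) enable kernel memory accounting, which the 3.10 kernel supports only experimentally and which cannot be turned off. When a node is under heavy load, for example when containers are started and stopped frequently, this leads to cgroup memory leaks.

- A network device reference-count leak causes errors such as "kernel:unregister_netdevice: waiting for eth0 to become free. Usage count = 1".

The fix is as follows: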

(1) Upgrade the kernel to 4.4.x or later. First check the current kernel and the GRUB menu entries:

[root@vms31 kernel]# uname -sr

Linux 3.10.0-693.el7.x86_64

[root@vms31 ~]# ls -l /boot/config*

-rw-r--r--. 1 root root 140894 Aug 23 2017 /boot/config-3.10.0-693.el7.x86_64

[root@vms31 ~]# awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

0 : CentOS Linux (3.10.0-693.el7.x86_64) 7 (Core)

1 : CentOS Linux (0-rescue-2d7c33c76c954ad28141d7ce515d455a) 7 (Core)

(2) Download and install

wget https://mirror.rc.usf.edu/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-5.9.8-1.el7.elrepo.x86_64.rpm --no-check-certificate

wget https://mirror.rc.usf.edu/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-5.9.8-1.el7.elrepo.x86_64.rpm --no-check-certificate

yum -y install kernel-ml-5.9.8-1.el7.elrepo.x86_64.rpm && yum -y install kernel-ml-devel-5.9.8-1.el7.elrepo.x86_64.rpm

(3) Change the default kernel in GRUB, reboot, and check the kernel

On vms31:

[root@vms31 ~]# ls -l /boot/config*

-rw-r--r--. 1 root root 140894 Aug 23 2017 /boot/config-3.10.0-693.el7.x86_64

-rw-r--r-- 1 root root 217537 Nov 11 23:10 /boot/config-5.9.8-1.el7.elrepo.x86_64

[root@vms31 ~]# awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

0 : CentOS Linux 7 Rescue 8940117247304f4c871693a09002e1d9 (5.9.8-1.el7.elrepo.x86_64)

1 : CentOS Linux (5.9.8-1.el7.elrepo.x86_64) 7 (Core)

2 : CentOS Linux (3.10.0-693.el7.x86_64) 7 (Core)

3 : CentOS Linux (0-rescue-2d7c33c76c954ad28141d7ce515d455a) 7 (Core)

[root@vms31 ~]# grub2-set-default 0

[root@vms31 ~]# reboot

...

[root@vms31 ~]# uname -sr

Linux 5.9.8-1.el7.elrepo.x86_64

On vms32:

[root@vms32 ~]# uname -sr

Linux 3.10.0-693.el7.x86_64

[root@vms32 ~]# awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

0 : CentOS Linux 7 Rescue 74b76b31501d48fd8c66ab518d23a0bf (5.9.8-1.el7.elrepo.x86_64)

1 : CentOS Linux (5.9.8-1.el7.elrepo.x86_64) 7 (Core)

2 : CentOS Linux (3.10.0-693.el7.x86_64) 7 (Core)

3 : CentOS Linux (0-rescue-2d7c33c76c954ad28141d7ce515d455a) 7 (Core)

[root@vms32 ~]# grub2-set-default 0

[root@vms32 ~]# reboot

...

[root@vms32 ~]# uname -sr

Linux 5.9.8-1.el7.elrepo.x86_64
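In both menu listings above, index 0 is the Rescue entry generated for the new kernel and index 1 is the regular CentOS Linux (5.9.8-1.el7.elrepo.x86_64) entry, so grub2-set-default 0 selects the rescue entry (which also runs a 5.9.8 kernel, so uname -sr looks the same). Rather than reading the index by eye, a small helper can reuse the same awk split on single quotes. This is a sketch: grub_index_for is a hypothetical name, and it assumes the top-level menuentry layout of /etc/grub2.cfg shown above.

```shell
#!/usr/bin/env bash
# Sketch: print the GRUB menu index of the first non-rescue entry whose title
# contains the given kernel version (same counting as the awk one-liner above).
# Exit status 1 if no such entry exists.
grub_index_for() {
  local ver="$1" cfg="${2:-/etc/grub2.cfg}"
  awk -F\' -v ver="$ver" '
    BEGIN { i = 0 }
    $1 == "menuentry " {
      if (index($2, ver) > 0 && $2 !~ /[Rr]escue/) { print i; found = 1; exit }
      i++
    }
    END { if (!found) exit 1 }' "$cfg"
}
```

For example, grub2-set-default "$(grub_index_for 5.9.8-1.el7.elrepo.x86_64)" selects the regular entry, and grub2-editenv list shows the saved_entry that will be used on the next boot.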

7. Download the cfssl certificate tools

On vms31:

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

II. Deploy etcd

2.1 etcd Node

| Host | IP | Spec | Cluster member |
|---|---|---|---|
| vms31 k8s-master | 192.168.26.31 | 2 CPU cores, 4 GB RAM | etcd-1 |

Only a single etcd member is deployed here. It can later be expanded to a multi-member cluster without disrupting the running cluster.

2.2 Download etcd

On vms31:

GitHub: https://github.com

wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

Huawei Cloud mirror: https://mirrors.huaweicloud.com

wget https://mirrors.huaweicloud.com/etcd/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

2.3 Generate etcd Certificates

On vms31:

- Create the working directories:

mkdir -p ~/TLS/{etcd,k8s}

- Self-signed certificate authority (CA)

[root@vms31 ~]# cd TLS/etcd

[root@vms31 etcd]# vi ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

[root@vms31 etcd]# vi ca-csr.json

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

- Generate the CA certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

- Create the server certificate signing request (CSR) file

[root@vms31 etcd]# vi server-csr.json

{

"CN": "etcd",

"hosts": [

"192.168.26.31",

"192.168.26.32",

"192.168.26.33"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

- Generate the server certificate

[root@vms31 etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

[root@vms31 etcd]# ls server*pem

server-key.pem server.pem

2.4 Create the Working Directories and Extract the Binaries

On vms31:

cd ~/

[root@vms31 ~]# mkdir /opt/etcd/{bin,cfg,ssl} -p

[root@vms31 ~]# tar zxvf etcd-v3.4.13-linux-amd64.tar.gz

[root@vms31 ~]# mv etcd-v3.4.13-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

2.5 Create the etcd Configuration File

On vms31:

[root@vms31 ~]# vi /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.26.31:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.26.31:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.26.31:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.26.31:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.26.31:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

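Notes:

- ETCD_NAME: member name, unique within the cluster

- ETCD_DATA_DIR: data directory

- ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) communication

- ETCD_LISTEN_CLIENT_URLS: listen address for client access

- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to the cluster

- ETCD_INITIAL_CLUSTER: addresses of the cluster members

- ETCD_INITIAL_CLUSTER_TOKEN: cluster token

- ETCD_INITIAL_CLUSTER_STATE: state when joining: new for a new cluster, existing to join an existing cluster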
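When etcd is later grown to several members, only the member name, its IP, the ETCD_INITIAL_CLUSTER list and the cluster state differ between nodes. A minimal sketch, using the hypothetical function render_etcd_conf, prints the file above for one member; the data directory and cluster token are copied from this document.

```shell
#!/usr/bin/env bash
# Sketch: render /opt/etcd/cfg/etcd.conf for one member.
# render_etcd_conf NAME IP INITIAL_CLUSTER [STATE]   (STATE defaults to "new")
render_etcd_conf() {
  local name="$1" ip="$2" cluster="$3" state="${4:-new}"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="${state}"
EOF
}
```

render_etcd_conf etcd-1 192.168.26.31 "etcd-1=https://192.168.26.31:2380" > /opt/etcd/cfg/etcd.conf reproduces the single-member file. For a hypothetical second member etcd-2 on 192.168.26.32 joining the running cluster, the state would be existing (after registering it with etcdctl member add), and its IP must be in the hosts list of the etcd server certificate, which already includes 192.168.26.32 and 192.168.26.33.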

2.6 Create the etcd systemd Unit

On vms31:

[root@vms31 ~]# vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem --trusted-ca-file=/opt/etcd/ssl/ca.pem --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem --logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2.7 Copy the Generated Certificates

On vms31, copy the generated certificates to the paths used in the configuration:

[root@vms31 ~]# ls ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem

/root/TLS/etcd/ca-key.pem /root/TLS/etcd/ca.pem /root/TLS/etcd/server-key.pem /root/TLS/etcd/server.pem

[root@vms31 ~]# cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

[root@vms31 ~]# ls /opt/etcd/ssl/

ca-key.pem ca.pem server-key.pem server.pem

2.8 Start etcd and Enable It at Boot

On vms31:

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

Check that etcd is listening:

[root@vms31 ~]# netstat -luntp|grep etcd

tcp 0 0 192.168.26.31:2379 0.0.0.0:* LISTEN 984/etcd

tcp 0 0 192.168.26.31:2380 0.0.0.0:* LISTEN 984/etcd

Check cluster health (there is only one member so far):

[root@vms31 ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.26.31:2379" endpoint health

https://192.168.26.31:2379 is healthy: successfully committed proposal: took = 7.725129ms

Check the current leader (with only one member, vms31 is the leader; the fifth field, true, is the IS LEADER column):

[root@vms31 ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.26.31:2379" endpoint status

https://192.168.26.31:2379, 19bd5330bf0cc330, 3.4.13, 4.5 MB, true, false, 10, 248562, 248562,
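The two etcdctl commands above repeat the same TLS flags. A small shell function can hold them once. This is a sketch: etcdctl_tls is a hypothetical name, and the ETCDCTL_BIN, ETCD_SSL_DIR and ETCD_ENDPOINTS overrides are assumptions added for flexibility, defaulting to the paths and endpoint used in this document.

```shell
#!/usr/bin/env bash
# Sketch: etcdctl v3 with this document's TLS flags filled in.
ETCD_SSL_DIR="${ETCD_SSL_DIR:-/opt/etcd/ssl}"
etcdctl_tls() {
  ETCDCTL_API=3 "${ETCDCTL_BIN:-/opt/etcd/bin/etcdctl}" \
    --cacert="${ETCD_SSL_DIR}/ca.pem" \
    --cert="${ETCD_SSL_DIR}/server.pem" \
    --key="${ETCD_SSL_DIR}/server-key.pem" \
    --endpoints="${ETCD_ENDPOINTS:-https://192.168.26.31:2379}" \
    "$@"
}
```

With it, the checks become etcdctl_tls endpoint health and etcdctl_tls endpoint status --write-out=table; the table output labels each column, including IS LEADER. Setting ETCD_ENDPOINTS to a comma-separated list queries several members once the cluster grows.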

III. Deploy Docker

On all nodes:

3.1 Download Docker

wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.13.tgz

3.2 Install Docker

tar zxvf docker-19.03.13.tgz

mv docker/* /usr/bin

3.3 Create the Configuration File

mkdir /etc/docker

mkdir /mnt/docker-lib

vi /etc/docker/daemon.json

{

"registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"]

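}

"registry-mirrors": configures a registry mirror, such as an Alibaba Cloud image accelerator. The address above is tied to one account; replace it with your own accelerator URL.

3.4 Create the Docker systemd Unit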

# vi /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

3.5 Start Docker and Enable It at Boot

systemctl daemon-reload

systemctl start docker

systemctl enable docker

Check that Docker has started:

docker version

docker info

[root@vms31 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:64:35:7b brd ff:ff:ff:ff:ff:ff

inet 192.168.26.31/24 brd 192.168.26.255 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe64:357b/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:37:9c:63:26 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

IV. Deploy the Master Components

On vms31:

4.1 Generate the kube-apiserver Certificate

1. Self-signed certificate authority (CA)

[root@vms31 ~]# cd ~/TLS/k8s/

[root@vms31 k8s]# vi ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

[root@vms31 k8s]# vi ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

Generate the CA certificate:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

2. Issue the kube-apiserver HTTPS certificate with the self-signed CA

Create the CSR file:

[root@vms31 k8s]# vi server-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.26.31",

"192.168.26.32",

"192.168.26.33",

"192.168.26.34",

"192.168.26.35",

"192.168.26.81",

"192.168.26.82",

"192.168.26.88",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

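Note: the IPs in the hosts field above are all the Master, load balancer (LB) and VIP IPs. To make later scale-out easier, list a few reserved IPs as well.

Generate the certificate: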

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

ls server*pem

server-key.pem server.pem
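The fixed part of this hosts list is 10.0.0.1 (the first address of the --service-cluster-ip-range=10.0.0.0/24 configured for kube-apiserver below, which becomes the ClusterIP of the kubernetes Service), 127.0.0.1 and the kubernetes.default.* DNS names; only the node, LB and VIP IPs vary. A minimal sketch, with the hypothetical function write_apiserver_csr, generates server-csr.json from a list of IPs so that the fixed entries cannot be forgotten. The names block is copied from the CSR above.

```shell
#!/usr/bin/env bash
# Sketch: write a cfssl CSR for kube-apiserver. Fixed SAN entries are
# 10.0.0.1 (first IP of the Service CIDR 10.0.0.0/24), 127.0.0.1 and the
# in-cluster DNS names of the "kubernetes" Service; node/LB/VIP IPs come from "$@".
write_apiserver_csr() {
  local out="$1"; shift
  local entries=(10.0.0.1 127.0.0.1 "$@"
    kubernetes kubernetes.default kubernetes.default.svc
    kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local)
  local hosts="" h
  for h in "${entries[@]}"; do
    hosts+="${hosts:+, }\"${h}\""   # quoted, comma-separated JSON strings
  done
  cat > "$out" <<EOF
{
  "CN": "kubernetes",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF
}
```

For example, write_apiserver_csr server-csr.json 192.168.26.31 192.168.26.32 192.168.26.33 192.168.26.34 192.168.26.35 192.168.26.81 192.168.26.82 192.168.26.88 produces the same hosts list as the file above, after which the cfssl gencert command is run unchanged.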

4.2 Download and Extract the Binary Package from GitHub

[root@vms31 k8s]# cd ~

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

ls kube-apiserver kube-scheduler kube-controller-manager

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

4.3 Deploy kube-apiserver

1. Create the configuration file

[root@vms31 ~]# vi /opt/kubernetes/cfg/kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--etcd-servers=https://192.168.26.31:2379 \
--bind-address=192.168.26.31 \
--secure-port=6443 \
--advertise-address=192.168.26.31 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth=true \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=6000-32767 \
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

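- --logtostderr: false writes logs to files under --log-dir instead of stderr

- --v: log verbosity level

- --log-dir: log directory

- --etcd-servers: etcd cluster endpoints

- --bind-address: listen address

- --secure-port: HTTPS (secure) port

- --advertise-address: address advertised to the rest of the cluster

- --allow-privileged: allow privileged containers

- --service-cluster-ip-range: virtual IP range for Services

- --enable-admission-plugins: admission control plugins

- --authorization-mode: authorization modes; enables RBAC authorization and Node authorization (kubelets manage their own node objects)

- --enable-bootstrap-token-auth: enable bootstrap token authentication for the TLS bootstrapping mechanism

- --token-auth-file: bootstrap token file

- --service-node-port-range: default port range allocated to NodePort Services

- --kubelet-client-xxx: client certificate the apiserver uses to access the kubelet

- --tls-xxx-file: apiserver HTTPS certificate

- --etcd-xxxfile: certificates for connecting to the etcd cluster

- --audit-log-xxx: audit log settings

2. Copy the generated certificates

Copy the generated certificates to the paths used in the configuration file: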

[root@vms31 ~]# ls ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem

/root/TLS/k8s/ca-key.pem /root/TLS/k8s/ca.pem /root/TLS/k8s/server-key.pem /root/TLS/k8s/server.pem

[root@vms31 ~]# cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

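3. Enable the TLS Bootstrapping mechanism

TLS bootstrapping: once TLS authentication is enabled on the master's apiserver, the kubelet and kube-proxy on each node need a valid certificate signed by the CA to talk to kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes the cluster harder to scale. To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet authenticates as a low-privilege user and requests a certificate from the apiserver, and once the request is approved the certificate is signed dynamically on the control plane (by kube-controller-manager, with the CA configured in section 4.4). This approach is strongly recommended for nodes. Here it is used only for the kubelet; kube-proxy still gets a single certificate that we issue ourselves.

Create the token file referenced in the configuration above: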

cat > /opt/kubernetes/cfg/token.csv << EOF

7edfdd626b76c341a002477b8a3d3fe2,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

Format: token,user name,UID,user group

You can also generate your own token to replace it:

head -c 16 /dev/urandom | od -An -t x | tr -d ' '
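The token in token.csv must be identical to the TOKEN used later when generating bootstrap.kubeconfig. A minimal sketch, with the hypothetical function names gen_bootstrap_token and write_token_csv, wraps the command above, checks that the result is 32 lowercase hex characters, and writes token.csv in the token,user,UID,group format; the file path is an argument so it can be written somewhere other than /opt/kubernetes/cfg.

```shell
#!/usr/bin/env bash
# Sketch: generate a random bootstrap token (16 random bytes as 32 hex chars).
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# write_token_csv FILE [TOKEN] -> writes FILE and prints the token it used
write_token_csv() {
  local out="$1" token="${2:-$(gen_bootstrap_token)}"
  if [[ ! "$token" =~ ^[0-9a-f]{32}$ ]]; then
    echo "invalid token: ${token}" >&2
    return 1
  fi
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token" > "$out"
  echo "$token"
}
```

For example, TOKEN="$(write_token_csv /opt/kubernetes/cfg/token.csv)" keeps the value in a shell variable for the bootstrap.kubeconfig step. kube-apiserver reads --token-auth-file when it starts, so restart it if token.csv changes after it is running.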

4. Manage kube-apiserver with systemd

[root@vms31 ~]# vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

5. Start kube-apiserver and enable it at boot

systemctl daemon-reload

systemctl start kube-apiserver

systemctl enable kube-apiserver

6. Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

4.4 Deploy kube-controller-manager

1. Create the configuration file

[root@vms31 ~]# vi /opt/kubernetes/cfg/kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect=true \
--master=127.0.0.1:8080 \
--bind-address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s"

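- --master: connect to the apiserver through the local insecure port 8080

- --leader-elect: automatic leader election when several instances of the component run (HA)

- --cluster-signing-cert-file/--cluster-signing-key-file: CA used to sign kubelet certificates automatically; must be the same CA as the apiserver's

- --experimental-cluster-signing-duration: validity of the signed certificates (87600h, about 10 years); deprecated in v1.19 in favor of --cluster-signing-duration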
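Three IPv4 ranges appear in this deployment: the Pod CIDR (--cluster-cidr=10.244.0.0/16, which must match flannel's Network), the Service CIDR (--service-cluster-ip-range=10.0.0.0/24) and the node network (192.168.26.0/24). They must not overlap. Two CIDR blocks overlap exactly when they agree on the shorter of their two prefixes, which the sketch below checks with shell arithmetic. ip_to_int and cidrs_overlap are hypothetical helper names, and the sketch handles IPv4 only.

```shell
#!/usr/bin/env bash
# Sketch (IPv4 only): dotted quad -> 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidrs_overlap A/len B/len -> exit status 0 if the two blocks overlap.
cidrs_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  local len=$(( len1 < len2 ? len1 : len2 ))           # shorter prefix
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local a b
  a=$(ip_to_int "$net1")
  b=$(ip_to_int "$net2")
  (( (a & mask) == (b & mask) ))   # same network bits under the shorter mask
}
```

For example, cidrs_overlap 10.244.0.0/16 10.0.0.0/24 && echo overlap prints nothing for this deployment. Because a /32 is a single address, cidrs_overlap 10.0.0.2/32 10.0.0.0/24 also works as a containment check, e.g. that the kubelet clusterDNS address lies inside the Service CIDR.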

2. Manage kube-controller-manager with systemd

[root@vms31 ~]# vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

3. Start kube-controller-manager and enable it at boot

systemctl daemon-reload

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

4.5 Deploy kube-scheduler

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect \
--master=127.0.0.1:8080 \
--bind-address=127.0.0.1"
EOF

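- --master: connect to the apiserver through the local insecure port 8080

- --leader-elect: automatic leader election when several instances of the component run (HA)

2. Manage kube-scheduler with systemd

[root@vms31 ~]# vi /usr/lib/systemd/system/kube-scheduler.service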

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

3. Start kube-scheduler and enable it at boot

systemctl daemon-reload

systemctl start kube-scheduler

systemctl enable kube-scheduler

4. Check cluster status

All components have started. Check the current status of the cluster components with kubectl:

[root@vms31 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

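The output above shows that the Master components are running normally.

V. Deploy the Worker Components

The following steps are still performed on the Master Node (vms31), which also acts as a Worker Node.

5.1 Create the Working Directories and Copy the Binaries

Create the working directories on every worker node:

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

On the master node, copy the binaries from the extracted package:

cd ~/kubernetes/server/bin

cp kubelet kube-proxy /opt/kubernetes/bin

5.2 Deploy kubelet

1. Create the configuration file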

[root@vms31 ~]# vi /opt/kubernetes/cfg/kubelet.conf

KUBELET_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--hostname-override=k8s-master \
--network-plugin=cni \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet-config.yml \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=docker.io/kubernetes/pause:latest"

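Pull the image:

docker pull docker.io/kubernetes/pause

docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 # alternative image; if you use it, set --pod-infra-container-image to this name

Notes:

- --hostname-override: display name, unique within the cluster

- --network-plugin: enable CNI

- --kubeconfig: this file does not exist yet; it is generated automatically once the certificate is approved and is then used to connect to the apiserver

- --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver

- --config: configuration parameter file

- --cert-dir: directory where the kubelet certificates are generated

- --pod-infra-container-image: image of the container that holds each Pod's network namespace (the pause container)

2. Create the parameter file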

[root@vms31 ~]# vi /opt/kubernetes/cfg/kubelet-config.yml

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
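The kubelet and Docker must use the same cgroup driver. kubelet-config.yml sets cgroupDriver: cgroupfs, which is also Docker's default unless daemon.json sets native.cgroupdriver through exec-opts; when the two differ, the kubelet typically fails to start. A minimal sketch, with the hypothetical function check_cgroup_driver, compares the two values; its optional second argument replaces the docker info query so the function can be tried without Docker.

```shell
#!/usr/bin/env bash
# Sketch: compare kubelet's cgroupDriver with Docker's cgroup driver.
# check_cgroup_driver [KUBELET_CONFIG] [DOCKER_DRIVER]
check_cgroup_driver() {
  local cfg="${1:-/opt/kubernetes/cfg/kubelet-config.yml}"
  local kubelet docker
  # YAML line "cgroupDriver: cgroupfs" -> cgroupfs
  kubelet="$(awk -F': *' '$1 == "cgroupDriver" { print $2 }' "$cfg")"
  # Only query Docker when no value was passed in.
  docker="${2:-$(docker info --format '{{.CgroupDriver}}')}"
  if [ -n "$kubelet" ] && [ "$kubelet" = "$docker" ]; then
    echo "ok: both use ${kubelet}"
  else
    echo "mismatch: kubelet=${kubelet} docker=${docker}" >&2
    return 1
  fi
}
```

Run as check_cgroup_driver on each node after Docker is up; an empty kubelet value in the mismatch message usually means the path to kubelet-config.yml is wrong.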

3. Generate the bootstrap.kubeconfig file

KUBE_APISERVER="https://192.168.26.31:6443" # apiserver IP:PORT
TOKEN="7edfdd626b76c341a002477b8a3d3fe2" # must match the token in token.csv

# Generate the kubelet bootstrap kubeconfig file
kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

kubectl config set-credentials "kubelet-bootstrap" \
--token=${TOKEN} \
--kubeconfig=bootstrap.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user="kubelet-bootstrap" \
--kubeconfig=bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Paste the commands above directly into the shell; they create bootstrap.kubeconfig in the current directory. Then copy it to the configuration path:

ls -lt

cp bootstrap.kubeconfig /opt/kubernetes/cfg
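If the TOKEN used for bootstrap.kubeconfig does not match the first field of token.csv, the kubelet's request is rejected as unauthorized and no CSR shows up in kubectl get csr. A minimal sketch, with the hypothetical function check_bootstrap_token, compares the two; it assumes the layout that kubectl config set-credentials --token writes (a token: line under the user entry).

```shell
#!/usr/bin/env bash
# Sketch: check that bootstrap.kubeconfig and token.csv carry the same token.
check_bootstrap_token() {
  local kc="${1:-/opt/kubernetes/cfg/bootstrap.kubeconfig}"
  local csv="${2:-/opt/kubernetes/cfg/token.csv}"
  local kc_token csv_token
  kc_token="$(awk '$1 == "token:" { print $2; exit }' "$kc")"  # user token
  csv_token="$(head -n 1 "$csv" | cut -d, -f1)"                # 1st field
  if [ -n "$kc_token" ] && [ "$kc_token" = "$csv_token" ]; then
    echo "token matches"
  else
    echo "token mismatch: kubeconfig='${kc_token}' token.csv='${csv_token}'" >&2
    return 1
  fi
}
```

On the master both files are local, so check_bootstrap_token with no arguments is enough; on a worker node, token.csv can be copied over temporarily and its path passed as the second argument.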

4. Manage kubelet with systemd

[root@vms31 ~]# vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

5. Start kubelet and enable it at boot

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

5.3 Approve the kubelet Certificate Request and Join the Cluster

- View the kubelet certificate requests

[root@vms31 bin]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-xxxxxxxx 75s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-xxxxxxxx is only a placeholder; use the actual name shown by kubectl get csr.

- Approve the request

[root@vms31 bin]# kubectl certificate approve node-csr-xxxxxxxx

certificatesigningrequest.certificates.k8s.io/node-csr-xxxxxxxx approved

- View the nodes

[root@vms31 bin]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady <none> 11s v1.19.4

Note: the network plugin has not been deployed yet, so the node is NotReady.
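When several nodes join at once, the pending requests can be picked out of kubectl get csr instead of being copied by hand. A minimal sketch, with the hypothetical function pending_csrs, reads that table on stdin and prints the names whose CONDITION column is Pending, optionally only for one REQUESTOR. Approving a CSR grants a node identity, so check who requested it before approving in bulk.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get csr` output on stdin and print the names of
# requests whose last column (CONDITION) is "Pending".
# Optional $1: only keep rows whose REQUESTOR (second-to-last column) matches.
pending_csrs() {
  local requestor="${1:-}"
  awk -v who="$requestor" '
    NR > 1 && $NF == "Pending" && (who == "" || $(NF-1) == who) { print $1 }'
}
```

For example, kubectl get csr | pending_csrs kubelet-bootstrap | xargs -r kubectl certificate approve approves only the requests made with the bootstrap token.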

5.4 Deploy kube-proxy

1. Create the configuration file

[root@vms31 ~]# vi /opt/kubernetes/cfg/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

2. Create the parameter file

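[root@vms31 ~]# vi /opt/kubernetes/cfg/kube-proxy-config.yml

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-master
clusterCIDR: 10.244.0.0/16

clusterCIDR is the Pod network range: the same value as kube-controller-manager --cluster-cidr and the flannel Network (10.244.0.0/16), not the Service range 10.0.0.0/24.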
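Because kube-proxy-config.yml and kube-controller-manager.conf are edited separately, their Pod CIDR settings can drift apart. The sketch below, with the hypothetical function check_cluster_cidr, reads clusterCIDR from the former and --cluster-cidr from the latter and reports whether they agree; the file paths default to the ones used in this document.

```shell
#!/usr/bin/env bash
# Sketch: compare kube-proxy clusterCIDR with kube-controller-manager --cluster-cidr.
check_cluster_cidr() {
  local proxy_cfg="${1:-/opt/kubernetes/cfg/kube-proxy-config.yml}"
  local cm_conf="${2:-/opt/kubernetes/cfg/kube-controller-manager.conf}"
  local proxy_cidr cm_cidr
  # YAML line "clusterCIDR: 10.244.0.0/16" -> 10.244.0.0/16
  proxy_cidr="$(awk -F': *' '$1 == "clusterCIDR" { print $2 }' "$proxy_cfg")"
  # Flag "--cluster-cidr=10.244.0.0/16" -> 10.244.0.0/16
  cm_cidr="$(grep -o -- '--cluster-cidr=[^ "]*' "$cm_conf" | cut -d= -f2)"
  if [ -n "$cm_cidr" ] && [ "$proxy_cidr" = "$cm_cidr" ]; then
    echo "ok: ${cm_cidr}"
  else
    echo "mismatch: kube-proxy=${proxy_cidr} controller-manager=${cm_cidr}" >&2
    return 1
  fi
}
```

Running check_cluster_cidr on each node after copying the configuration files catches a stale value before kube-proxy is started.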

3. Generate the kube-proxy.kubeconfig file

Generate the kube-proxy certificate:

- Change to the working directory

[root@vms31 ~]# cd ~/TLS/k8s/

- Create the CSR file

[root@vms31 k8s]# vi kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ls kube-proxy*pem

kube-proxy-key.pem kube-proxy.pem

- Generate the kubeconfig file (run this in ~/TLS/k8s/, because the client certificate paths below are relative)

KUBE_APISERVER="https://192.168.26.31:6443"

kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
--client-certificate=./kube-proxy.pem \
--client-key=./kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Paste the commands above directly into the shell, then copy the file to the path set in the configuration:

cp kube-proxy.kubeconfig /opt/kubernetes/cfg/

4. Manage kube-proxy with systemd

[root@vms31 ~]# vi /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

5. Start kube-proxy and enable it at boot

systemctl daemon-reload

systemctl start kube-proxy

systemctl enable kube-proxy

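VI. Deploy the Core Network Plugin

The following steps are still performed on the Master Node (vms31).

6.1 Deploy the CNI Network Plugin

1. Prepare the CNI plugin binaries (cni-plugins-linux-amd64-v0.8.7.tgz, available from https://github.com/containernetworking/plugins/releases/download/v0.8.7/cni-plugins-linux-amd64-v0.8.7.tgz)

2. Extract the package into the default working directory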

mkdir -p /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.7.tgz -C /opt/cni/bin

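3. Deploy the CNI network

- Get kube-flannel.yml

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

mv kube-flannel.yml kube-flannel.yaml

If kube-flannel.yml cannot be downloaded this way, search for the file online and download it from elsewhere. The project has since moved from coreos/flannel to flannel-io/flannel, and the current file there differs from the v0.12.0 version shown below.

- Modify kube-flannel.yaml

[root@vms31 ~]# vi kube-flannel.yaml

Keep only the DaemonSet named kube-flannel-ds-amd64 (delete the DaemonSets for the other architectures).

Change its image to: image: quay.io/coreos/flannel:v0.12.0-amd64

Set the pull policy to: imagePullPolicy: IfNotPresent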

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-amd64
        imagePullPolicy: IfNotPresent
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-amd64
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

Pull the image referenced in the YAML file:

docker pull quay.io/coreos/flannel:v0.12.0-amd64

- Apply kube-flannel.yaml

kubectl apply -f kube-flannel.yaml

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-amd64-qsp5g 1/1 Running 0 11m

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready <none> 8h v1.19.4

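With the network plugin deployed, the node is Ready.

6.2 Authorize the apiserver to Access the kubelet

The apiserver reaches the kubelet with the client certificate set by --kubelet-client-certificate (server.pem, whose CN is kubernetes). The binding below grants that user access to the kubelet API, which commands such as kubectl logs and kubectl exec rely on.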

[root@vms31 ~]# cat apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

kubectl apply -f apiserver-to-kubelet-rbac.yaml

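VII. Add a New Worker Node

Unless otherwise noted, the steps in this section run on the worker node vms32.

7.1 Copy the Deployed Node Files to the New Node

On the Master (vms31), copy the files used by the Worker Node to the new node 192.168.26.32: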

scp -r /opt/kubernetes root@192.168.26.32:/opt/

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.26.32:/usr/lib/systemd/system

scp -r /opt/cni/ root@192.168.26.32:/opt/

scp /opt/kubernetes/ssl/ca.pem root@192.168.26.32:/opt/kubernetes/ssl

7.2 Delete the kubelet certificates and kubeconfig file

rm -f /opt/kubernetes/cfg/kubelet.kubeconfig

rm -f /opt/kubernetes/ssl/kubelet*

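Note: these files are generated automatically once the node's certificate signing request is approved. They are unique to each node, so they must be deleted and regenerated.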
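If you add several nodes, steps 7.1 and 7.2 can be combined into one function that runs on vms31. This is a sketch. It assumes root SSH access from vms31 to the new node and the same paths as above. `push_node_files` is a hypothetical name. Deleting the kubelet files remotely has the same effect as running 7.2 on the node itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper, run on the master (vms31): steps 7.1 and 7.2 for one new node.
# The kubelet kubeconfig and certificates are issued per node, so the copies taken from
# the master are deleted on the target; they are regenerated after CSR approval.
push_node_files() {
  local node="$1"
  scp -r /opt/kubernetes "root@${node}:/opt/" &&
    scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service "root@${node}:/usr/lib/systemd/system/" &&
    scp -r /opt/cni "root@${node}:/opt/" &&
    ssh "root@${node}" 'rm -f /opt/kubernetes/cfg/kubelet.kubeconfig /opt/kubernetes/ssl/kubelet*'
}

# Example: push_node_files 192.168.26.32
```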

7.3 Change the hostname in the configuration files

vi /opt/kubernetes/cfg/kubelet.conf

--hostname-override=k8s-node1

vi /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: k8s-node1
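The two `vi` edits can also be made non-interactively with `sed`. This is a sketch. It assumes `--hostname-override=...` in kubelet.conf is followed by a space or a closing quote, and that `hostnameOverride:` is a top-level key in kube-proxy-config.yml, as in the files copied from the master. `set_node_name` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper, run on the new node: set the node name in the configs copied
# from the master instead of editing them in vi. Requires GNU sed (-i without suffix).
set_node_name() {
  local name="$1" cfg_dir="${2:-/opt/kubernetes/cfg}"
  # kubelet.conf: replace the value of --hostname-override (stops at a space or quote)
  sed -i 's/--hostname-override=[^ "]*/--hostname-override='"$name"'/' "$cfg_dir/kubelet.conf"
  # kube-proxy-config.yml: replace the top-level hostnameOverride key
  sed -i 's/^hostnameOverride:.*/hostnameOverride: '"$name"'/' "$cfg_dir/kube-proxy-config.yml"
}

# Example: set_node_name k8s-node1
```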

7.4 Start the services and enable them at boot

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

systemctl start kube-proxy

systemctl enable kube-proxy

7.5 Approve the new node's kubelet certificate request on the master

On vms31:

[root@vms31 soft]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-xxxxxxxx 97s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-33FAfRfrbV9H1NO1AZ58Oby6kf3Ypb0Upu4qvxmZP2c 8h kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

[root@vms31 soft]# kubectl certificate approve node-csr-xxxxxxxx

certificatesigningrequest.certificates.k8s.io/node-csr-xxxxxxxx approved

Here node-csr-xxxxxxxx is only a placeholder. Replace it with the actual CSR name shown by kubectl get csr.
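When several nodes join at once, you can select the pending requests from the `kubectl get csr` output instead of copying names by hand. This is a sketch. It assumes the v1.19 column layout shown above (NAME AGE SIGNERNAME REQUESTOR CONDITION). To avoid approving unrelated requests, it keeps only CSRs submitted by `kubelet-bootstrap`. `pending_bootstrap_csrs` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: from `kubectl get csr` output (header included) on stdin, print the
# names of CSRs that are still Pending AND were requested by kubelet-bootstrap.
# CONDITION is the last column and REQUESTOR the one before it.
pending_bootstrap_csrs() {
  awk 'NR > 1 && $NF == "Pending" && $(NF-1) == "kubelet-bootstrap" { print $1 }'
}

# Example (on vms31), approving every matching request in one pass:
#   kubectl get csr | pending_bootstrap_csrs | xargs -r kubectl certificate approve
```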

7.6 Check node status

On vms31:

[root@vms31 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready <none> 39m v1.19.4

k8s-node1 Ready <none> 17m v1.19.4

To add more nodes, repeat the steps above. Remember to change the hostname and the related initialization settings!

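VIII. Deploying the Core Add-ons: Dashboard and CoreDNS

Unless otherwise noted, all commands in this section are run on the master node vms31.

8.1 Deploy the Dashboard

1. Download the deployment YAML and pull the images

Release page: https://github.com/kubernetes/dashboard/releases/tag/v2.0.4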

[root@vms31 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml

[root@vms31 ~]# cp recommended.yaml kubernetes-dashboard.yaml

[root@vms31 ~]# docker pull kubernetesui/dashboard:v2.0.4

[root@vms31 ~]# docker pull kubernetesui/metrics-scraper:v1.0.4

If recommended.yaml cannot be downloaded, find a copy of kubernetes-dashboard.yaml online and adapt it as described below.

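2. Modify kubernetes-dashboard.yaml

By default the Dashboard is reachable only from inside the cluster. Make the following changes:

- Change the Service to type NodePort with nodePort: 30123 to expose it outside the cluster.

- Set the image pull policy to imagePullPolicy: IfNotPresent.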

[root@vms31 ~]# vi kubernetes-dashboard.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30123
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.4
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            - --token-ttl=43200
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.4
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

3. Apply the configuration file

[root@vms31 ~]# kubectl apply -f kubernetes-dashboard.yaml

[root@vms31 ~]# kubectl get pods,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-7b59f7d4df-wxrtl 1/1 Running 1 32h

pod/kubernetes-dashboard-588849694c-q5d98 1/1 Running 1 31h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.0.0.204 <none> 8000/TCP 32h

service/kubernetes-dashboard NodePort 10.0.0.201 <none> 443:30123/TCP 32h

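Access URL: https://NodeIP:30123, i.e. https://192.168.26.31:30123 or https://192.168.26.32:30123. The Dashboard serves a self-signed certificate generated by --auto-generate-certificates, so the browser will show a certificate warning.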
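If you applied an unmodified recommended.yaml, its Service is ClusterIP. You can then expose it after the fact instead of editing the file. This is a sketch that relies on the default strategic merge patch of `kubectl patch`: Service `ports` are merged on their `port` key, so only the 443 entry changes. `expose_dashboard` is a hypothetical name, and 30123 is this document's choice of node port.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch the Dashboard Service to NodePort after it was created.
# The strategic merge patch updates the ports entry whose "port" is 443.
expose_dashboard() {
  local node_port="${1:-30123}"
  kubectl -n kubernetes-dashboard patch service kubernetes-dashboard \
    -p "{\"spec\":{\"type\":\"NodePort\",\"ports\":[{\"port\":443,\"targetPort\":8443,\"nodePort\":${node_port}}]}}"
}

# Example (on vms31): expose_dashboard 30123 && kubectl -n kubernetes-dashboard get svc
```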

4. Create a service account and bind it to the built-in cluster-admin ClusterRole

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

5. Get the login token

[root@vms31 ~]# kubectl -n kube-system get secret | grep dashboard

dashboard-admin-token-psj97 kubernetes.io/service-account-token 3 32h

[root@vms31 ~]# kubectl describe secrets -n kube-system dashboard-admin-token-psj97

Name: dashboard-admin-token-psj97

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 5dad7413-2249-4ea2-ac72-76d584a760e7

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkE4Y0dscnRRa3d1OWdfdEVzRmkySEdyS1BoRXEwR0x4MFo3Um5pUVJOZG8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcHNqOTciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNWRhZDc0MTMtMjI0OS00ZWEyLWFjNzItNzZkNTg0YTc2MGU3Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.FaDkfOhR1a8ZxkxZVUKNSLsswXXO62ny7doT-Vx-IHiTKj22CFVOejrzEvAGJnRUeop3wOWFBOk_94EDCwDB-4tnOzhTAIXKZ6RrZrttBjq3fM9Lj_dWt604fsOLEdCLN6_P2j_heEslOLjuWgWYF-6ubLVO9n_N4GlBQ2_85FXnvZWrjp5M_EWCFkJwbJ3z6-qaclArXX8aHGQ8tR8dYZlG5YBdNg5yXbu2qpUk8uYiLmJ_wo2KCrmJOboee1yVCXJlRxFPXNfo38NpKYZBW7dg7NHe8Bxc0h5uU0uruph7ESpVcedTggzf2QBq43AfQP65wu6aqYAJHdosnnnhsg

ca.crt: 1359 bytes

namespace: 11 bytes

Or do it with a single command:

[root@vms31 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-psj97

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 5dad7413-2249-4ea2-ac72-76d584a760e7

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkE4Y0dscnRRa3d1OWdfdEVzRmkySEdyS1BoRXEwR0x4MFo3Um5pUVJOZG8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcHNqOTciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNWRhZDc0MTMtMjI0OS00ZWEyLWFjNzItNzZkNTg0YTc2MGU3Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.FaDkfOhR1a8ZxkxZVUKNSLsswXXO62ny7doT-Vx-IHiTKj22CFVOejrzEvAGJnRUeop3wOWFBOk_94EDCwDB-4tnOzhTAIXKZ6RrZrttBjq3fM9Lj_dWt604fsOLEdCLN6_P2j_heEslOLjuWgWYF-6ubLVO9n_N4GlBQ2_85FXnvZWrjp5M_EWCFkJwbJ3z6-qaclArXX8aHGQ8tR8dYZlG5YBdNg5yXbu2qpUk8uYiLmJ_wo2KCrmJOboee1yVCXJlRxFPXNfo38NpKYZBW7dg7NHe8Bxc0h5uU0uruph7ESpVcedTggzf2QBq43AfQP65wu6aqYAJHdosnnnhsg
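Both commands above print the whole Secret, so the token still has to be copied out of it. The sketch below prints only the decoded token. It finds the account's first token Secret (on v1.19 this Secret is created automatically for each service account) and decodes its `token` field. `sa_token` is a hypothetical name, and its defaults are the namespace and account used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded token of a service account by following
# the account's first token Secret and base64-decoding its .data.token field.
sa_token() {
  local ns="${1:-kube-system}" sa="${2:-dashboard-admin}" secret
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  [ -n "$secret" ] || return 1
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}

# Example (on vms31): sa_token kube-system dashboard-admin
```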

6. Log in to the Dashboard with the token

https://192.168.26.31:30123

8.2 部署CoreDNS

CoreDNS用于集群内部Service名称解析。DNS服务监视Kubernetes API,为每一个Service创建DNS记录用于域名解析。

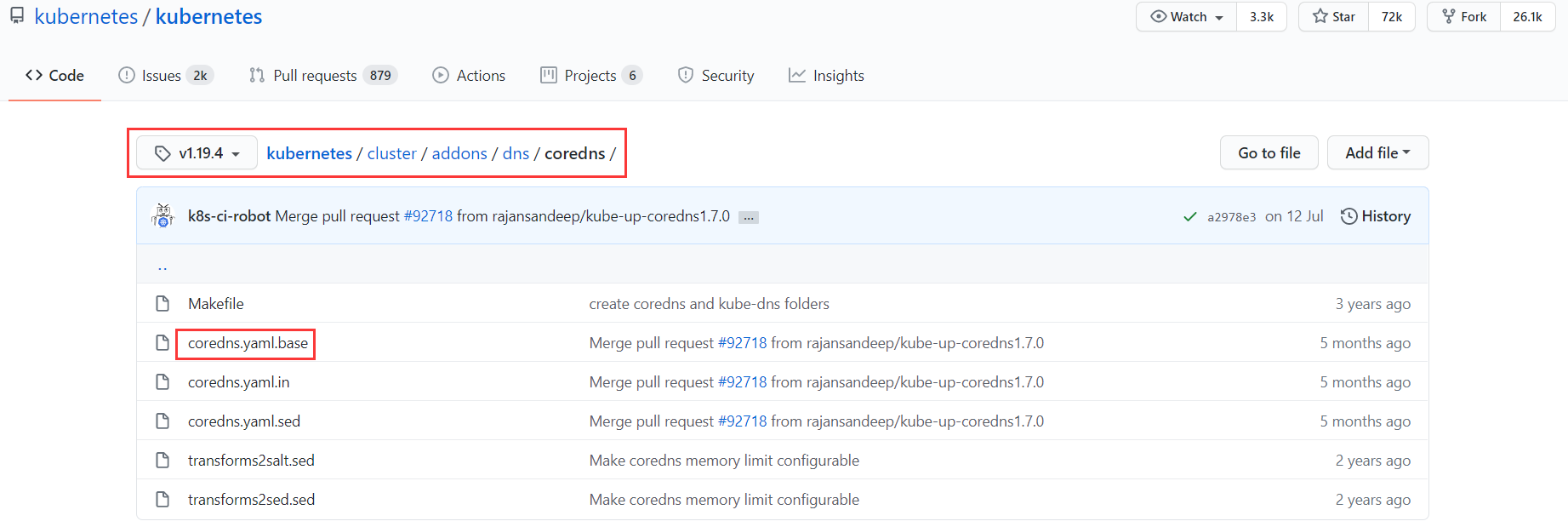

githueb地址:https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns

1. 下载部署yaml文件及拉取镜像

很难下载,点击https://github.com/kubernetes/kubernetes/blob/v1.19.4/cluster/addons/dns/coredns/coredns.yaml.base

- 打开后进行复制。

[root@vms31 ~]# vi coredns-1.7.0.yaml

# __MACHINE_GENERATED_WARNING__
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        # kubernetes __PILLAR__DNS__DOMAIN__ in-addr.arpa ip6.arpa {
        #     pods insecure
        #     fallthrough in-addr.arpa ip6.arpa
        #     ttl 30
        # }
        kubernetes cluster.local 10.0.0.0/24
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: coredns
        #image: k8s.gcr.io/coredns:1.7.0
        image: coredns/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            # memory: __PILLAR__DNS__MEMORY__LIMIT__
            memory: 150Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  #clusterIP: __PILLAR__DNS__SERVER__
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

The listing above is the YAML after modification. The changes are explained below.

- Pull the required image:

[root@vms31 ~]# grep image coredns-1.7.0.yaml

#image: k8s.gcr.io/coredns:1.7.0

image: coredns/coredns:1.7.0

imagePullPolicy: IfNotPresent

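k8s.gcr.io/coredns:1.7.0 is hard to pull (the first attempt below times out), so pull the image from another registry instead. Either of the two registries below works.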

[root@vms31 ~]# docker pull k8s.gcr.io/coredns:1.7.0

Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

[root@vms31 ~]# docker pull coredns/coredns:1.7.0

1.7.0: Pulling from coredns/coredns

c6568d217a00: Pull complete

6937ebe10f02: Pull complete

Digest: sha256:73ca82b4ce829766d4f1f10947c3a338888f876fbed0540dc849c89ff256e90c

Status: Downloaded newer image for coredns/coredns:1.7.0

docker.io/coredns/coredns:1.7.0

[root@vms31 ~]# docker pull registry.aliyuncs.com/google_containers/coredns:1.7.0

1.7.0: Pulling from google_containers/coredns

Digest: sha256:242d440e3192ffbcecd40e9536891f4d9be46a650363f3a004497c2070f96f5a

Status: Downloaded newer image for registry.aliyuncs.com/google_containers/coredns:1.7.0

registry.aliyuncs.com/google_containers/coredns:1.7.0

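2. Modify coredns-1.7.0.yaml

- Substitute the three template variables:

`__PILLAR__DNS__DOMAIN__` → `cluster.local`

`__PILLAR__DNS__MEMORY__LIMIT__` → `70Mi` (the listing above actually sets the limit to `150Mi`; whichever you use, the limit must not be lower than the `70Mi` memory request)

`__PILLAR__DNS__SERVER__` → `10.0.0.2`, which must match the cluster DNS address (clusterDNS) configured in /opt/kubernetes/cfg/kubelet-config.yml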
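The three substitutions can be applied to coredns.yaml.base in one `sed` pass instead of by hand. This is a sketch. The default memory limit follows the listing above (`150Mi`), and values must not contain `/` or `&` because they are inserted into sed replacements. The image change and the author's custom `kubernetes cluster.local 10.0.0.0/24` line in the Corefile are still manual edits. `render_coredns` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fill in the three placeholders of coredns.yaml.base (read on stdin)
# and write the result to stdout.
render_coredns() {
  local domain="${1:-cluster.local}" mem_limit="${2:-150Mi}" dns_ip="${3:-10.0.0.2}"
  sed -e "s/__PILLAR__DNS__DOMAIN__/${domain}/g" \
      -e "s/__PILLAR__DNS__MEMORY__LIMIT__/${mem_limit}/g" \
      -e "s/__PILLAR__DNS__SERVER__/${dns_ip}/g"
}

# Example: render_coredns cluster.local 150Mi 10.0.0.2 < coredns.yaml.base > coredns-1.7.0.yaml
```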

- Change the image and pull policy:

image: coredns/coredns:1.7.0

imagePullPolicy: IfNotPresent

- Copy the image to vms32 (the pod may be scheduled there, and this avoids pulling the image on vms32):

[root@vms31 ~]# docker save coredns/coredns:1.7.0 > coredns-1.7.0.tar

[root@vms31 ~]# scp coredns-1.7.0.tar vms32:~/

Load the image on vms32:

[root@vms32 ~]# docker load -i coredns-1.7.0.tar
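The save/scp/load sequence can also be done in a single step by streaming `docker save` straight into `docker load` over SSH, which avoids the intermediate tar file. This is a sketch. It assumes root SSH access from vms31 to the target node. `copy_images_to_node` is a hypothetical name, and it also accepts several images, such as the other images listed in section 1.2.

```shell
#!/usr/bin/env bash
# Hypothetical helper, run on vms31: copy local images to another node without writing
# a tar file, by piping `docker save` into `docker load` on the remote side via ssh.
copy_images_to_node() {
  local node="$1" img
  shift
  for img in "$@"; do
    echo "==> ${img} -> ${node}"
    docker save "$img" | ssh "root@${node}" docker load || return 1
  done
}

# Example: copy_images_to_node 192.168.26.32 coredns/coredns:1.7.0
```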

3. Apply the configuration file

[root@vms31 ~]# kubectl apply -f coredns-1.7.0.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

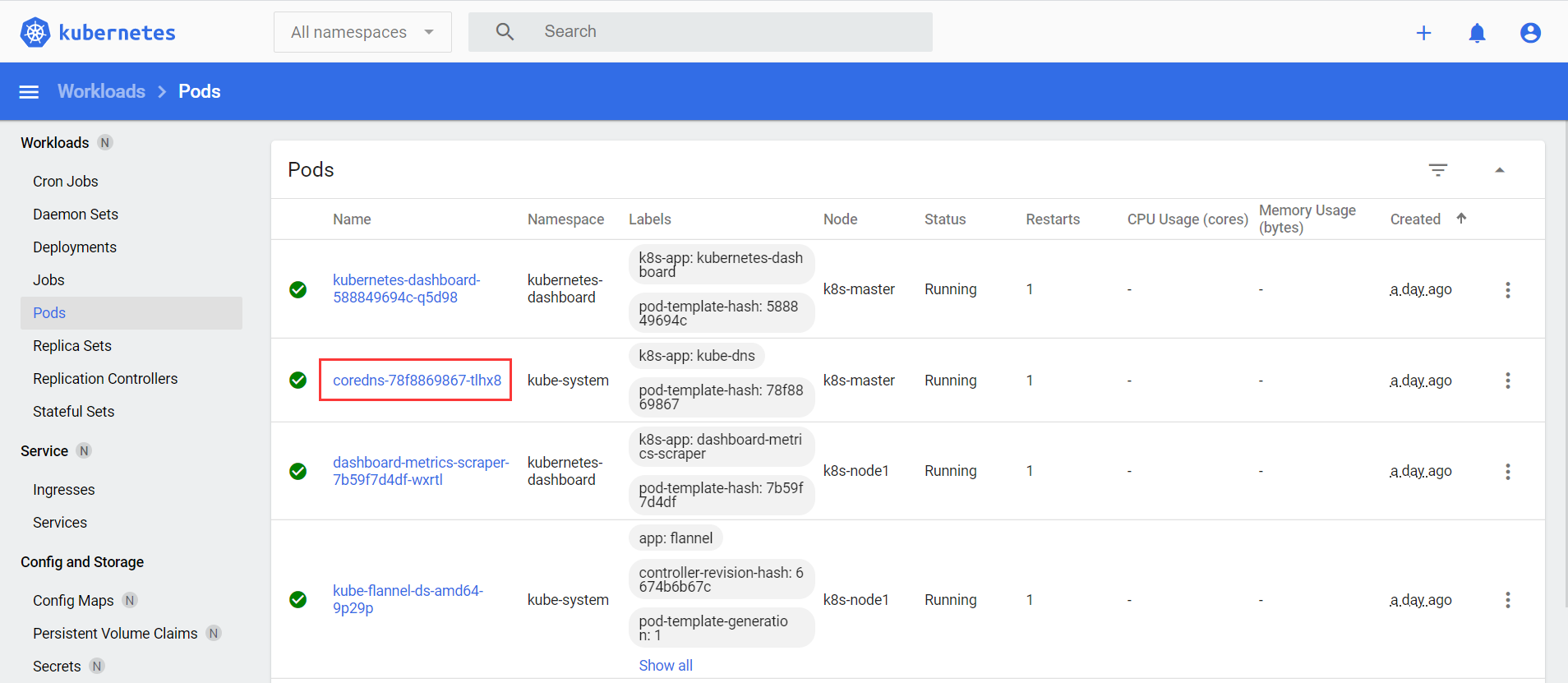

4. 检查与测试

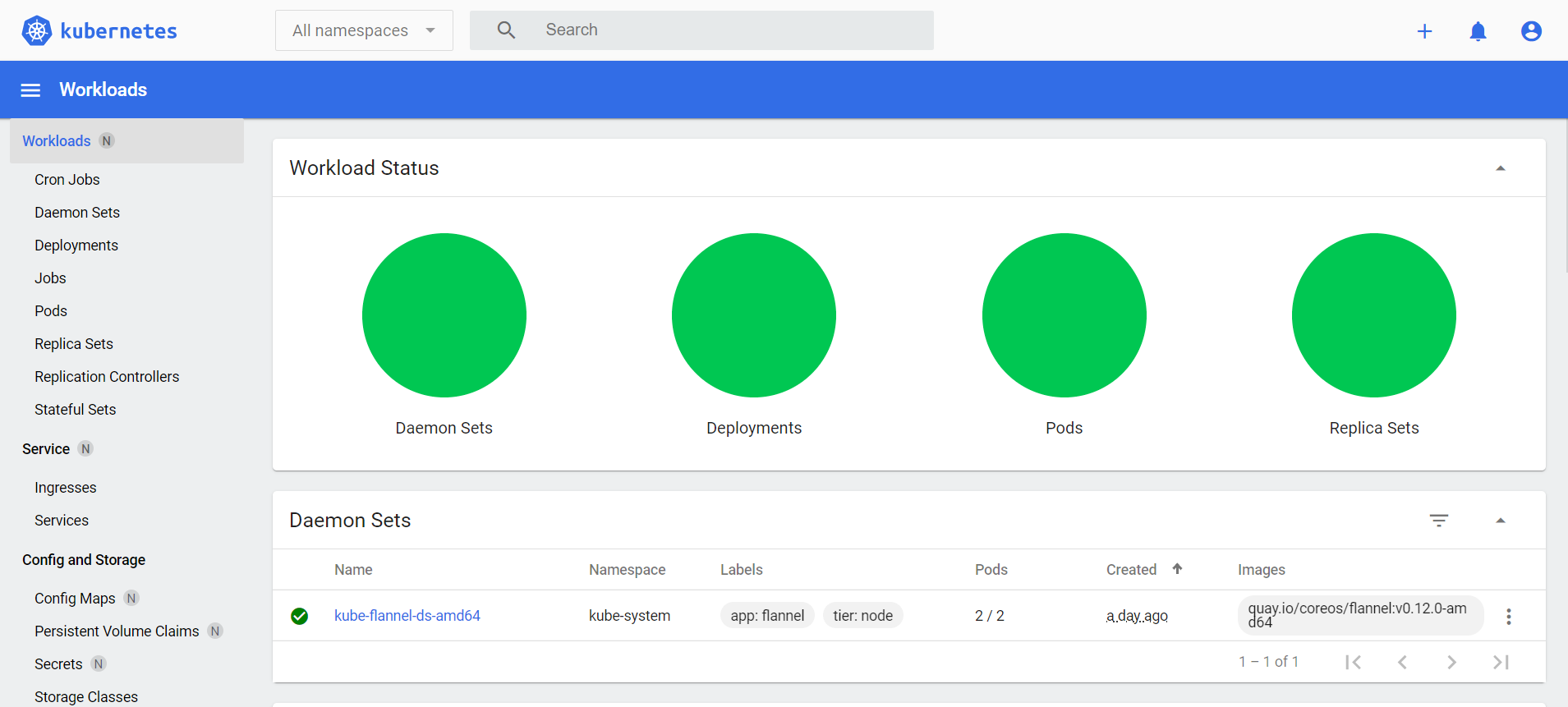

- 查看pod(也可以在dashboard上进行查看)

[root@vms31 ~]# kubectl get pods -n kube-system | grep coredns

coredns-78f8869867-tlhx8 1/1 Running 1 41h

- Test DNS resolution:

[root@vms31 ~]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

Name resolution works.
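The interactive test can also be run as a one-shot pod that resolves a name and is deleted afterwards, which is handy in scripts. This is a sketch. `--rm` needs an attached session, hence `-i`. The default image matches the command above. Note that the image list in section 1.2 shows busybox 1.28.3, so pass whichever tag you pre-loaded. `dns_check` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: resolve a name from inside the cluster in a throwaway busybox pod.
# --restart=Never creates a bare Pod; --rm deletes it once nslookup exits.
dns_check() {
  local name="${1:-kubernetes.default.svc.cluster.local}" image="${2:-busybox:1.28.4}"
  kubectl run "dns-check-$$" --rm -i --restart=Never --image="$image" -- nslookup "$name"
}

# Example (on vms31): dns_check kube-dns.kube-system.svc.cluster.local
```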

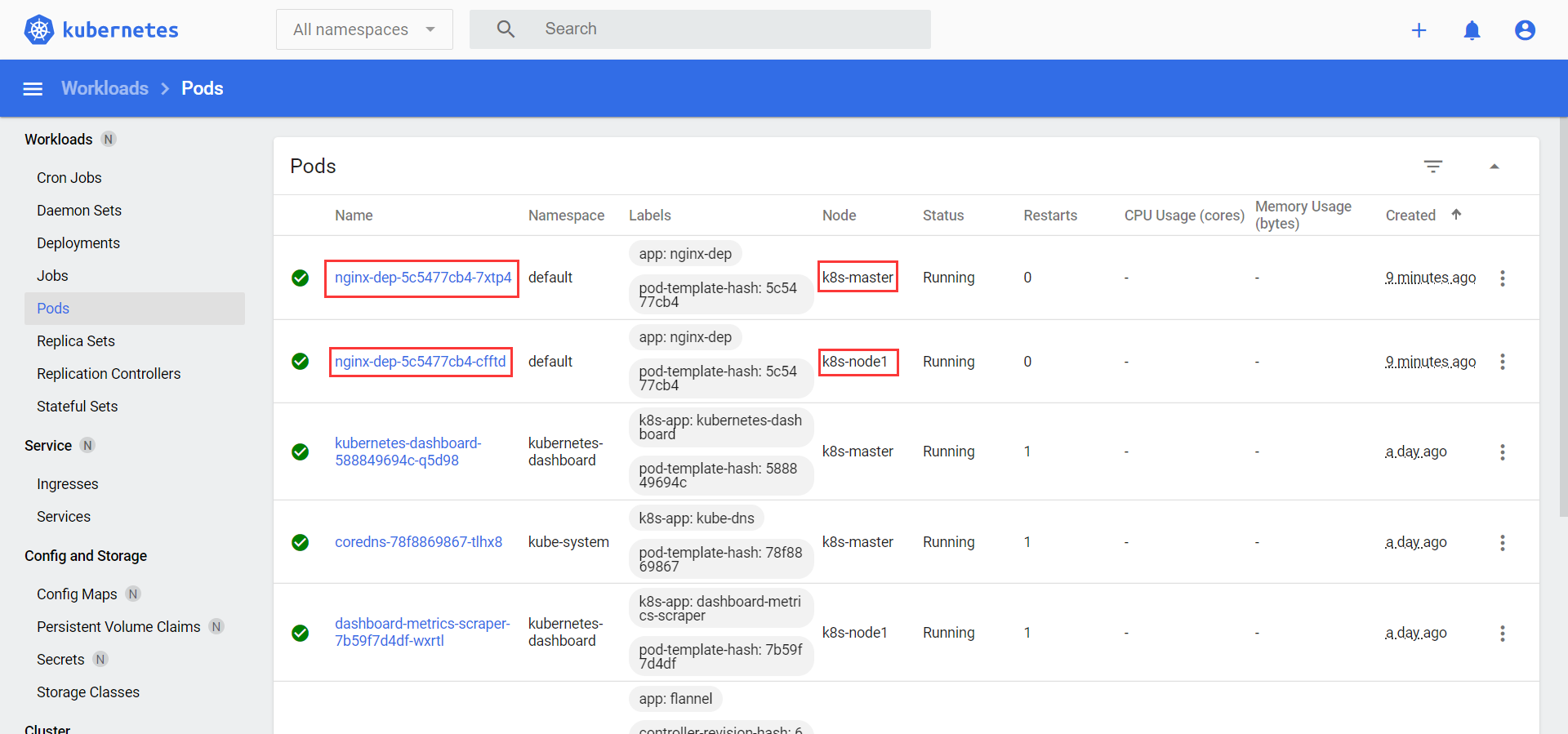

IX. Deploying an nginx Service

- Create the deployment, pods and service:

[root@vms31 ~]# kubectl create deployment nginx-dep --image=nginx --replicas=2

deployment.apps/nginx-dep created

[root@vms31 ~]# kubectl expose deployment nginx-dep --port=80 --target-port=80 --type=NodePort

service/nginx-dep exposed

[root@vms31 ~]# kubectl get svc | grep nginx

nginx-dep NodePort 10.0.0.153 <none> 80:7049/TCP 7m57s

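In the svc output, 7049 is the node port, so the service is reachable at 192.168.26.31:7049 or 192.168.26.32:7049. (7049 lies outside the default NodePort range 30000-32767, which suggests kube-apiserver was started with a wider --service-node-port-range in this setup.)

- View the deployment, pods and service in the Dashboard

- In the Dashboard, use Exec to open a shell in each pod and modify the home page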

root@nginx-dep-5c5477cb4-7xtp4:/# ls

bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@nginx-dep-5c5477cb4-7xtp4:/# cd usr/share/nginx/html/

root@nginx-dep-5c5477cb4-7xtp4:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-dep-5c5477cb4-7xtp4:/usr/share/nginx/html# cat index.html

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

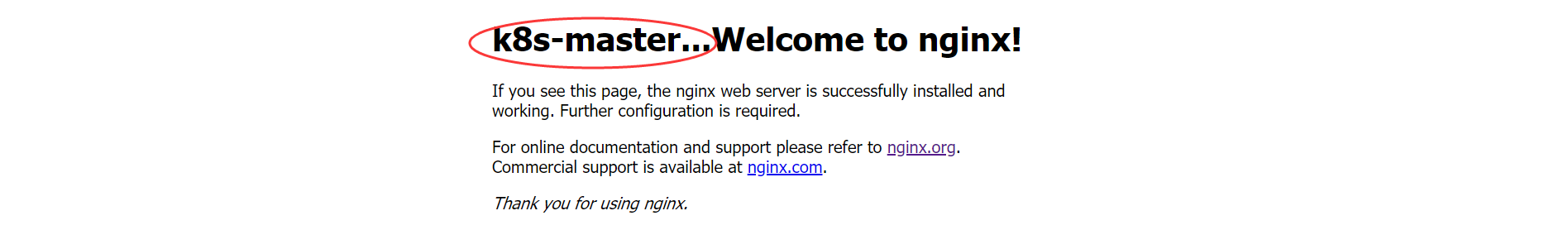

In the pod on k8s-master, change `<h1>Welcome to nginx!</h1>` in the home page to `<h1>k8s-master...Welcome to nginx!</h1>`:

cd /usr/share/nginx/html

sed -e 's/<h1>Welcome to nginx!<\/h1>/<h1>k8s-master...Welcome to nginx!<\/h1>/g' index.html > index2.html

mv index2.html index.html

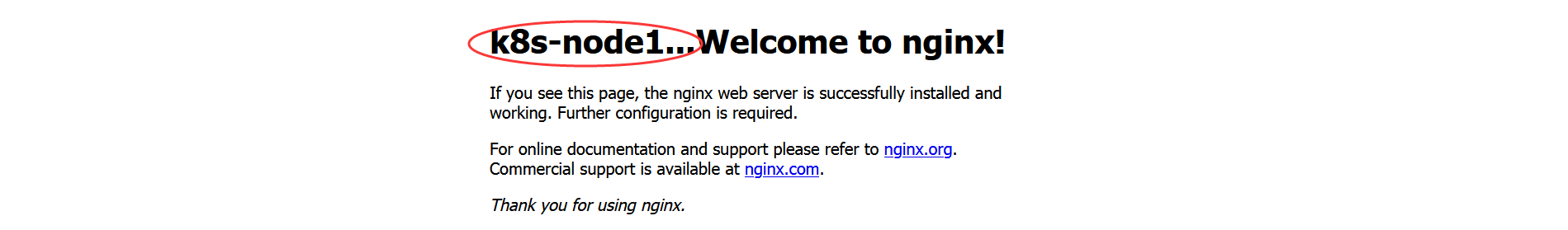

In the pod on k8s-node1, change `<h1>Welcome to nginx!</h1>` in the home page to `<h1>k8s-node1...Welcome to nginx!</h1>`:

cd /usr/share/nginx/html

sed -e 's/<h1>Welcome to nginx!<\/h1>/<h1>k8s-node1...Welcome to nginx!<\/h1>/g' index.html > index2.html

mv index2.html index.html

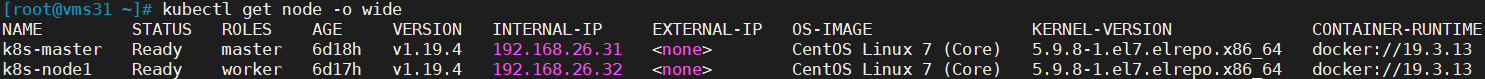
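With the two pages now different, you can watch kube-proxy spread requests across both pods from the command line. This is a sketch. It requests the NodePort URL several times and counts which `<h1>` heading answered. Both headings should normally appear, but the split is not guaranteed to be even. `probe_nginx` is a hypothetical name, and its default URL is the node port shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: hit the NodePort URL `count` times and tally the <h1> lines,
# which identify the pod (k8s-master or k8s-node1) that served each request.
probe_nginx() {
  local url="${1:-http://192.168.26.31:7049}" count="${2:-6}" i
  for ((i = 0; i < count; i++)); do
    curl -s "$url" | grep -o '<h1>.*</h1>'
  done | sort | uniq -c
}

# Example: probe_nginx http://192.168.26.32:7049 10
```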

At this point, the k8s v1.19.4 lab cluster has been deployed successfully!

[root@vms31 ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready master 6d18h v1.19.4 192.168.26.31 <none> CentOS Linux 7 (Core) 5.9.8-1.el7.elrepo.x86_64 docker://19.3.13

k8s-node1 Ready worker 6d17h v1.19.4 192.168.26.32 <none> CentOS Linux 7 (Core) 5.9.8-1.el7.elrepo.x86_64 docker://19.3.13

--2020.12.5 Guangzhou--

Zhejiang Public Security Network Registration No. 33010602011771
