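Envoy Observability

Observability

Statistics

Categories of statistics

- Listener-related (downstream): listeners, the HTTP connection manager, the TCP proxy filter

- Cluster-related (upstream): connection pools, the router filter, the TCP proxy filter

- Envoy itself (server): figures about the Envoy process, such as its memory usage

Envoy statistic types

- Counter: an unsigned integer that only increases, for example the total number of requests

- Gauge: an unsigned integer that can increase and decrease, for example the number of active connections

- Histogram: records the distribution of a stream of values, for example request durations

stats sink

A built-in Envoy feature

Statistics are exposed to storage outside Envoy through sinks, such as statsd and Prometheus. Custom sinks are also supported.

Data formats

- statsd: metric:value|type

- statsd_exporter: converts statsd metrics into Prometheus time series names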
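
The statsd line format above can be illustrated with a minimal Go sketch. The function name statsdLine and its prefix argument are hypothetical helpers made up for this illustration; they are not part of Envoy or of any statsd library.

```go
package main

import "fmt"

// statsdLine renders one metric in the statsd wire format "name:value|type".
// kind is the statsd type code: "c" for a counter, "g" for a gauge,
// "ms" for a timer (commonly used to carry histogram samples).
// prefix is a hypothetical stand-in for a sink-level prefix option.
func statsdLine(prefix, name string, value int64, kind string) string {
	if prefix != "" {
		name = prefix + "." + name
	}
	return fmt.Sprintf("%s:%d|%s", name, value, kind)
}

func main() {
	// Prints: front-envoy.cluster.colord.upstream_rq_total:42|c
	fmt.Println(statsdLine("front-envoy", "cluster.colord.upstream_rq_total", 42, "c"))
	// Prints: front-envoy.server.memory_allocated:4194304|g
	fmt.Println(statsdLine("front-envoy", "server.memory_allocated", 4194304, "g"))
}
```

A statsd_exporter that receives such lines would typically expose them to Prometheus under a name derived from the dotted metric name.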

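Configuration

    stats_sinks: # list of stats sinks; by default statistics are not sent anywhere, so configure this to export them
    - name: envoy.stat_sinks.statsd # name of the sink to instantiate; must match a built-in sink: envoy.stat_sinks.dog_statsd, envoy.stat_sinks.graphite_statsd, envoy.stat_sinks.hystrix, envoy.stat_sinks.metrics_service, envoy.stat_sinks.statsd, envoy.stat_sinks.wasm. Their role is similar to that of a Prometheus exporter
      typed_config: # sink configuration; each sink is configured differently, the fields below are for statsd
        address: {} # access endpoint of the statsd service; alternatively use tcp_cluster_name to point at a sink server cluster defined in Envoy
        tcp_cluster_name: str # cluster name, mutually exclusive with address
        prefix: # custom prefix
    stats_config: # internal stats processing
    stats_flush_interval: # how often stats are flushed to the sinks; a duration, defaults to 5s (5000ms)
    stats_flush_on_admin: # flush stats only when a query arrives on the admin interface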

Example

Envoy configuration

    stats_sinks:
    - name: envoy.stat_sinks.statsd
      typed_config:
        "@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink
        tcp_cluster_name: statsd_exporter
        prefix: front-envoy

    static_resources:
      clusters:
      - name: statsd_exporter
        connect_timeout: 0.25s
        type: STRICT_DNS
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: statsd_exporter
          endpoints:
          - lb_endpoints:
            - endpoint:
                address: { socket_address: { address: statsd_exporter, port_value: 9125 } }

Partial Prometheus configuration

    ...
    scrape_configs:
    - job_name: 'statsd'
      scrape_interval: 5s
      static_configs:
      - targets:
        - 'statsd_exporter:9102'
        labels:
          group: 'service'

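Access logs

Envoy's TCP proxy and HTTP connection manager filters support access logging through dedicated extensions. The log format can be customized, and both requests and responses can be logged.

As with statistics, access logs are written to a backend (sink). Currently supported sinks:

- File: asynchronous I/O architecture; the access log format can be customized

- gRPC: sends access logs to a gRPC access logging service

- stdout

- stderr

Log format

Format rules

- format strings

- format dictionaries

Command operators

Used to extract data and insert it into the log

Some operators have different meanings for TCP and HTTP

Examples

%REQ(X?Y):Z% # logs the value of HTTP request header X; Y is the fallback header and Z is an optional parameter that truncates the value to at most Z characters. If neither X nor Y is present, "-" is logged. Not supported for TCP

%RESP(X?Y):Z% # logs the value of an HTTP response header, with the same rules as %REQ%. Not supported for TCP

%DURATION% # for HTTP, the total duration of the request in milliseconds, from the start time to the last byte sent out; for TCP, the total duration of the client connection in milliseconds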

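Format strings

Line breaks must be specified explicitly

Default format:

[%START_TIME%] "%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%" %RESPONSE_CODE% %RESPONSE_FLAGS% %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% "%REQ(X-FORWARDED-FOR)%" "%REQ(USER-AGENT)%" "%REQ(X-REQUEST-ID)%" "%REQ(:AUTHORITY)%" "%UPSTREAM_HOST%"\n

Format string fields

- %START_TIME% timestamp at which the request started

- %REQ(X?Y):Z% value of a request header, as described above

- %RESP(X?Y):Z% value of a response header, as described above

- %PROTOCOL% HTTP protocol version

- %RESPONSE_CODE% HTTP response code

- %RESPONSE_FLAGS% response flags that give additional detail about the response or connection

- %BYTES_RECEIVED% size of the received body; for the TCP proxy, the number of bytes received from the client

- %BYTES_SENT% size of the sent body; for the TCP proxy, the number of bytes sent to the client

- %DURATION% time from receiving the request to the last byte of the response

- %UPSTREAM_HOST% URL of the upstream host; for the TCP proxy it has the form tcp://ip:port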

Configuration

    ...
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type":
          stat_prefix: ingress_http
          codec_type: AUTO
          access_log:
          - name: str # access logger name; must match a statically registered access logger, one logger per entry. Built-in loggers:
            # envoy.access_loggers.file
            # envoy.access_loggers.http_grpc
            # envoy.access_loggers.open_telemetry
            # envoy.access_loggers.stream
            # envoy.access_loggers.tcp_grpc
            # envoy.access_loggers.wasm
            filter: # filter that decides which requests are logged; only one of the following can be set
              status_code_filter: {}
              duration_filter: {}
              not_health_check_filter: {}
              traceable_filter: {}
              runtime_filter: {}
              and_filter: {}
              or_filter: {}
              header_filter: {}
              response_flag_filter: {}
              grpc_status_filter: {}
              extension_filter: {}
              metadata_filter: {}
            typed_config: # logger-specific configuration; the file logger is shown here
              "@type": type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog
              path: str # log file path
              log_format: # format definition; the default format is used when unset
                text_format: # format string built from command operators; only one of text_format, json_format and text_format_source can be set
                json_format: # JSON format dictionary
                text_format_source: # format string loaded from a data source: filename, inline_bytes or inline_string
                omit_empty_values: bool # when true, empty values are omitted instead of being rendered as "-"
                content_type: str # content type; defaults to text/plain, or application/json for json_format
                formatters: [] # formatter extensions to invoke

Example

    static_resources:
      ...
          access_log:
          - name: envoy.access_loggers.file
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog
              path: '/dev/stdout'
              log_format:
                text_format: "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %RESPONSE_FLAGS% %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% \"%REQ(X-FORWARDED-FOR)%\" \"%REQ(USER-AGENT)%\" \"%REQ(X-REQUEST-ID)%\" \"%REQ(:AUTHORITY)%\" \"%UPSTREAM_HOST%\"\n"
                #json_format: {"start": "[%START_TIME%] ", "method": "%REQ(:METHOD)%", "url": "%REQ(X-ENVOY-ORIGINAL-PATH?:PATH)%", "protocol": "%PROTOCOL%", "status": "%RESPONSE_CODE%", "respflags": "%RESPONSE_FLAGS%", "bytes-received": "%BYTES_RECEIVED%", "bytes-sent": "%BYTES_SENT%", "duration": "%DURATION%", "upstream-service-time": "%RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)%", "x-forwarded-for": "%REQ(X-FORWARDED-FOR)%", "user-agent": "%REQ(USER-AGENT)%", "request-id": "%REQ(X-REQUEST-ID)%", "authority": "%REQ(:AUTHORITY)%", "upstream-host": "%UPSTREAM_HOST%", "remote-ip": "%DOWNSTREAM_REMOTE_ADDRESS_WITHOUT_PORT%"}
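
On the consuming side, a log line produced with a json_format like the one above can be decoded with a few lines of Go. This is a minimal sketch: the sample line and its values are made up, parseAccessLog is a hypothetical helper, and a generic map is used on the assumption that the value types (string or number) depend on how the proxy renders each operator.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// parseAccessLog decodes one JSON access-log line into a generic map.
// A map is used instead of a struct so the sketch does not depend on
// whether a field such as the status arrives as a number or a string.
func parseAccessLog(line string) (map[string]interface{}, error) {
	var entry map[string]interface{}
	if err := json.Unmarshal([]byte(line), &entry); err != nil {
		return nil, err
	}
	return entry, nil
}

func main() {
	// Hypothetical log line using a few of the keys from the json_format example.
	line := `{"method":"GET","url":"/","status":200,"duration":3,"upstream-host":"172.31.76.11:80"}`
	entry, err := parseAccessLog(line)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Printf("%v %v -> %v\n", entry["method"], entry["url"], entry["status"])
}
```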

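Distributed tracing

Tracing systems with built-in support:

- zipkin

- jaeger

- datadog

Built-in distributed tracing mechanisms

- Request ID generation: Envoy generates a UUID when needed and populates the x-request-id header; applications can forward this header for unified logging and tracing

- Integration with external tracing services: Envoy can report to external trace visualization services such as lightstep, zipkin and jaeger

- Client trace ID joining: the x-client-trace-id header can be used to join an untrusted request ID to the trusted x-request-id header

Tracing a request

envoy-ingress-->application-->envoy-egress

The application code itself must be able to recognize the trace ID. Under concurrency, many requests are routed from Envoy into the application at the same time, and Envoy cannot tell which outbound call belongs to which inbound request. The application therefore has to copy the tracing data from the inbound request onto its outbound requests, usually with the help of a dedicated tracing library.

Context propagation

Envoy can report tracing information about communication between services in the mesh. To correlate the trace information generated along a request, each service must propagate the context from inbound to outbound requests. Whichever tracing system is used, the x-request-id header should always be propagated.

How the HTTP connection manager selects requests to trace

- External clients: the x-client-trace-id header

- Internal services: the x-envoy-force-trace header

- Random sampling: the random_sampling runtime setting

How the router filter contributes to tracing

- start_child_span creates a child span for egress calls

Manual context propagation

- zipkin tracer: propagate the B3 HTTP headers (x-b3-traceid, x-b3-spanid, x-b3-parentspanid, x-b3-sampled, x-b3-flags)

- datadog tracer: propagate the datadog-specific headers (x-datadog-trace-id, x-datadog-parent-id, x-datadog-sampling-priority)

- lightstep tracer: propagate the x-ot-span-context header when sending HTTP requests to other services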

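Span information generated by Envoy

- Originating service cluster, set with the --service-cluster option

- Start time and duration of the request

- Originating host, set with the --service-node option

- Downstream cluster, set with the x-envoy-downstream-service-cluster header

- HTTP URL, method and response code

- Metadata specific to the tracing system

Configuration

Only tracers for the HTTP protocol are supported

The configuration consists of three parts:

- The cluster for the tracing backend: services such as zipkin or jaeger must be defined as a cluster that Envoy knows, and it must be statically configured

- The tracing configuration: sets the global tracer and is defined in the bootstrap

- The tracing section of the HTTP connection manager filter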

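    static_resources:
      listeners:
      - name: xx
        address: {}
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              stat_prefix: ..
              route_config: {}
              generate_request_id: true
              tracing: # sends trace data to the tracing provider
                client_sampling: # sampling for requests that the client asks to trace with the x-client-trace-id header; defaults to 100%
                random_sampling: # random sampling; defaults to 100%
                overall_sampling: # overall sampling; defaults to 100%
                verbose: # whether to annotate spans with extra information; when enabled, spans include logs of stream events
                max_path_tag_length: # maximum length of the request path recorded in the URL tag
                custom_tags: [] # list of custom tags; each tag is applied to the active span and its name must be unique
                provider: {} # the external tracer to use
      clusters:
      - name: zipkin|jaeger|...
    tracing: # global tracer definition (tracing provider); a global tracer sends data for everything to the tracing system, which is not always wanted, so the provider can instead be defined only on an HTTP connection manager to configure tracing per application
      http: # HTTP tracer
        name: xx # one of the built-in tracers:
        # envoy.tracers.datadog
        # envoy.tracers.dynamic_ot
        # envoy.tracers.lightstep
        # envoy.tracers.opencensus
        # envoy.tracers.skywalking
        # envoy.tracers.xray
        # envoy.tracers.zipkin
        typed_config: # typed configuration
          "@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig # zipkin as an example
          collector_cluster: str # name of the cluster hosting the zipkin collector; must be defined in the static cluster resources of the bootstrap
          collector_endpoint: str # API endpoint of the zipkin service that receives span data, typically /api/v2/spans
          trace_id_128bit: bool # whether to create 128-bit trace IDs; defaults to false, i.e. 64-bit IDs
          shared_span_context: bool # whether client and server spans share the same span ID; defaults to true
          collector_endpoint_version: str # version of the collector endpoint
          collector_hostname: str # optional hostname to use when sending spans to the collector cluster; defaults to the name set in collector_cluster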
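
To make the difference between 64-bit and 128-bit trace IDs concrete, here is a minimal Go sketch that produces both sizes as lower-case hex strings (16 and 32 characters). It only illustrates the two ID widths; newTraceID is a hypothetical helper and says nothing about how Envoy generates IDs internally.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
)

// newTraceID returns a random trace ID as lower-case hex:
// 16 characters (64 bits) by default, 32 characters (128 bits) when wide is true.
func newTraceID(wide bool) (string, error) {
	n := 8
	if wide {
		n = 16
	}
	buf := make([]byte, n)
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	return hex.EncodeToString(buf), nil
}

func main() {
	for _, wide := range []bool{false, true} {
		id, err := newTraceID(wide)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		// Prints the length (16 or 32) followed by the random ID.
		fmt.Println(len(id), id)
	}
}
```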

Example:

The zipkin and jaeger configurations are identical; jaeger simply reuses the zipkin tracer type

    static_resources:
      listeners:
      - name: xx
        address: {}
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              generate_request_id: true
              tracing:
                provider:
                  name: envoy.tracers.zipkin
                  typed_config:
                    "@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig
                    collector_cluster: zipkin
                    #collector_cluster: jaeger
                    #shared_span_context: false
                    collector_endpoint: '/api/v2/spans'
                    collector_endpoint_version: HTTP_JSON
      clusters:
      - name: zipkin
        type: STRICT_DNS
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: zipkin
          endpoints:
          - lb_endpoints:
            - endpoint:
                address: { socket_address: { address: zipkin, port_value: 9411 }}

Worked examples

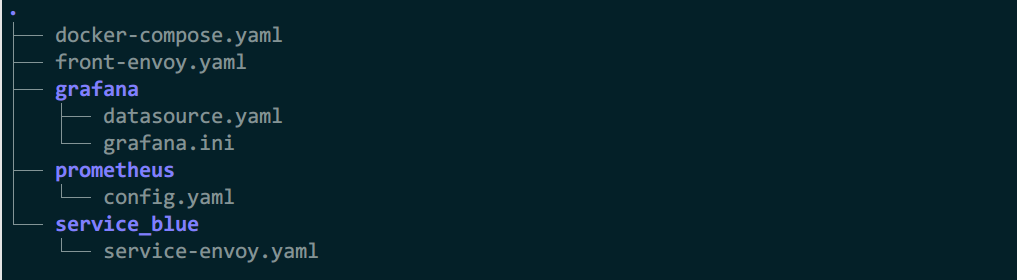

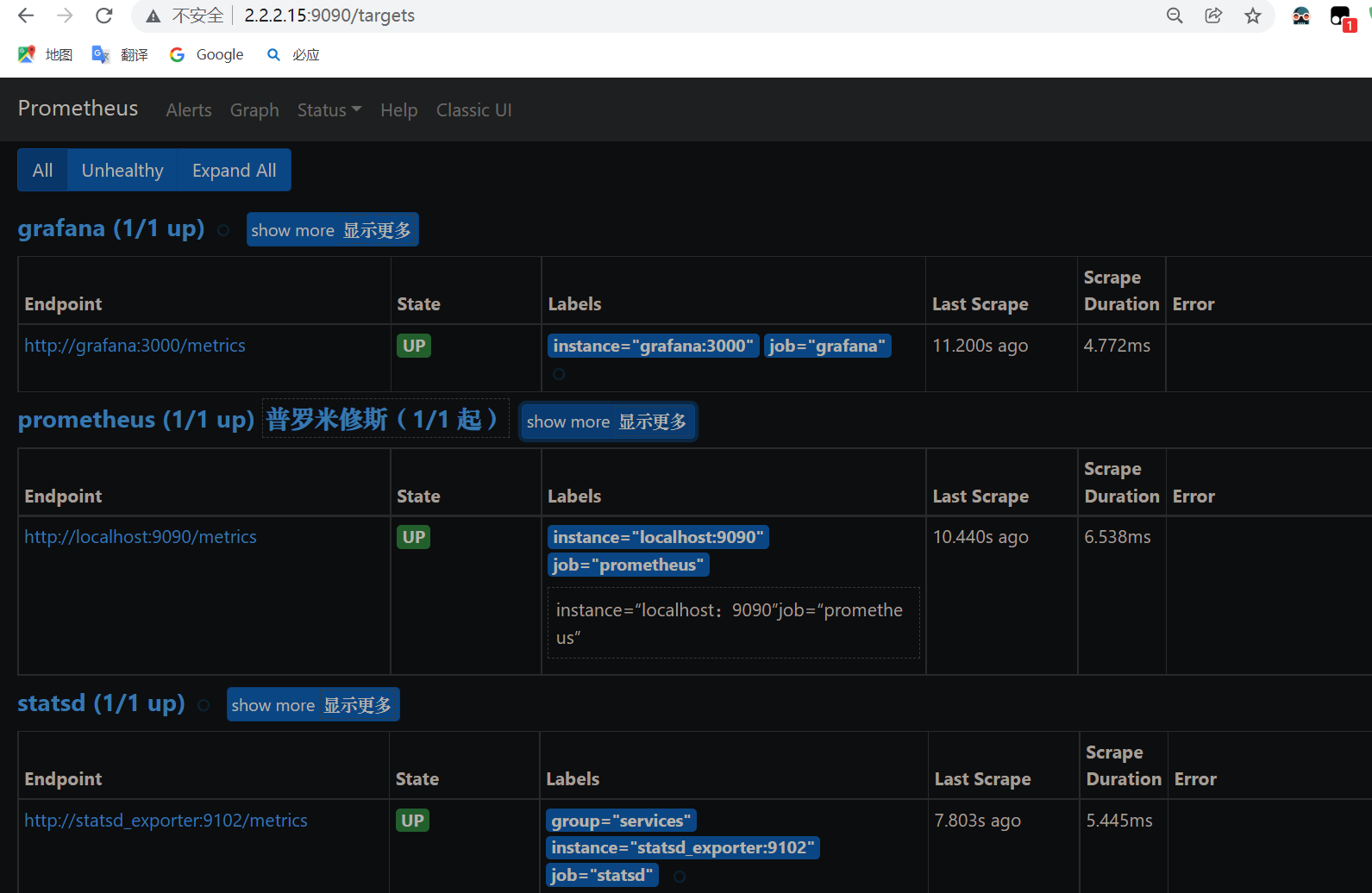

Example 1: collecting Envoy metrics with Prometheus

1)front-envoy.yaml

node:

id: front-envoy

cluster: mycluster

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

stats_sinks:

- name: envoy.stat_sinks.statsd

typed_config:

"@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink

tcp_cluster_name: statsd_exporter

prefix: front-envoy

static_resources:

listeners:

- name: listener_0

address:

socket_address: { address: 0.0.0.0, port_value: 80 }

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

codec_type: AUTO

route_config:

name: local_route

virtual_hosts:

- name: service

domains: ["*"]

routes:

- match:

prefix: "/"

route:

cluster: colord

retry_policy:

retry_on: "5xx"

num_retries: 3

timeout: 1s

http_filters:

- name: envoy.filters.http.router

typed_config: {}

clusters:

- name: colord

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: colord

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: myservice

port_value: 80

- name: statsd_exporter

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: statsd_exporter

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: statsd_exporter

port_value: 9125

2)service_blue/service-envoy.yaml

node:

id: service_blue

cluster: mycluster

stats_sinks:

- name: envoy.stat_sinks.statsd

typed_config:

"@type": type.googleapis.com/envoy.config.metrics.v3.StatsdSink

tcp_cluster_name: statsd_exporter

prefix: service_blue

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

static_resources:

listeners:

- name: listener_0

address:

socket_address: { address: 0.0.0.0, port_value: 80 }

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

codec_type: AUTO

route_config:

name: local_route

virtual_hosts:

- name: service

domains: ["*"]

routes:

- match:

prefix: "/"

route:

cluster: local_service

http_filters:

- name: envoy.filters.http.fault

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault

max_active_faults: 100

abort:

http_status: 503

percentage:

numerator: 10

denominator: HUNDRED

- name: envoy.filters.http.router

typed_config: {}

clusters:

- name: local_service

connect_timeout: 0.25s

type: strict_dns

lb_policy: round_robin

load_assignment:

cluster_name: local_service

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: 127.0.0.1

port_value: 8080

- name: statsd_exporter

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: statsd_exporter

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: statsd_exporter

port_value: 9125

3)prometheus/config.yaml

    global:
      scrape_interval: 15s
      evaluation_interval: 15s

    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']

    - job_name: 'grafana'
      static_configs:
      - targets: ['grafana:3000']

    - job_name: 'statsd'
      scrape_interval: 5s
      static_configs:
      - targets: ['statsd_exporter:9102']
        labels:
          group: 'services'

4)grafana/datasource.yaml

apiVersion: 1

datasources:

- name: prometheus

type: prometheus

access: proxy

url: http://prometheus:9090

editable: true

isDefault:

5)grafana/grafana.ini

instance_name = "grafana"

[security]

admin_user = admin

admin_password = admin

6)docker-compose.yaml

version: '3.3'

services:

envoy:

image: envoyproxy/envoy-alpine:v1.20.0

volumes:

- ./front-envoy.yaml:/etc/envoy/envoy.yaml

networks:

envoymesh:

ipv4_address: 172.31.68.10

aliases:

- front-proxy

expose:

# Expose ports 80 (for general traffic) and 9901 (for the admin server)

- "80"

- "9901"

service_blue:

image: ikubernetes/servicemesh-app:latest

volumes:

- ./service_blue/service-envoy.yaml:/etc/envoy/envoy.yaml

networks:

envoymesh:

ipv4_address: 172.31.68.11

aliases:

- myservice

- blue

environment:

- SERVICE_NAME=blue

expose:

- "80"

statsd_exporter:

image: prom/statsd-exporter:v0.22.3

networks:

envoymesh:

ipv4_address: 172.31.68.6

aliases:

- statsd_exporter

ports:

- 9125:9125

- 9102:9102

prometheus:

image: prom/prometheus:v2.30.3

volumes:

- "./prometheus/config.yaml:/etc/prometheus.yaml"

networks:

envoymesh:

ipv4_address: 172.31.68.7

aliases:

- prometheus

ports:

- 9090:9090

command: "--config.file=/etc/prometheus.yaml"

grafana:

image: grafana/grafana:8.2.2

volumes:

- "./grafana/grafana.ini:/etc/grafana/grafana.ini"

- "./grafana/datasource.yaml:/etc/grafana/provisioning/datasources/datasource.yaml"

networks:

envoymesh:

ipv4_address: 172.31.68.8

aliases:

- grafana

ports:

- 3000:3000

networks:

envoymesh:

driver: bridge

ipam:

config:

- subnet: 172.31.68.0/24

7)Test

curl <host IP>:9090

curl <host IP>:3000

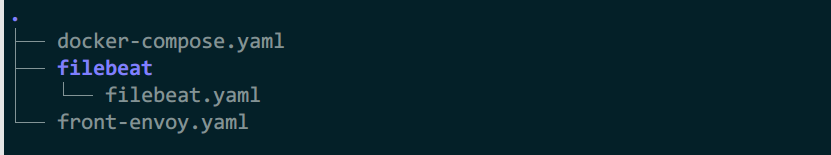

Example 2: log collection with EFK

1)front-envoy.yaml

node:

id: front-envoy

cluster: mycluster

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

static_resources:

listeners:

- address:

socket_address:

address: 0.0.0.0

port_value: 80

name: listener_http

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

codec_type: AUTO

access_log:

- name: envoy.access_loggers.file

typed_config:

"@type": type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog

path: "/dev/stdout"

log_format:

json_format: {"start": "[%START_TIME%] ", "method": "%REQ(:METHOD)%", "url": "%REQ(X-ENVOY-ORIGINAL-PATH?:PATH)%", "protocol": "%PROTOCOL%", "status": "%RESPONSE_CODE%", "respflags": "%RESPONSE_FLAGS%", "bytes-received": "%BYTES_RECEIVED%", "bytes-sent": "%BYTES_SENT%", "duration": "%DURATION%", "upstream-service-time": "%RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)%", "x-forwarded-for": "%REQ(X-FORWARDED-FOR)%", "user-agent": "%REQ(USER-AGENT)%", "request-id": "%REQ(X-REQUEST-ID)%", "authority": "%REQ(:AUTHORITY)%", "upstream-host": "%UPSTREAM_HOST%", "remote-ip": "%DOWNSTREAM_REMOTE_ADDRESS_WITHOUT_PORT%"}

#text_format: "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %RESPONSE_FLAGS% %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% \"%REQ(X-FORWARDED-FOR)%\" \"%REQ(USER-AGENT)%\" \"%REQ(X-REQUEST-ID)%\" \"%REQ(:AUTHORITY)%\" \"%UPSTREAM_HOST%\" \"%DOWNSTREAM_REMOTE_ADDRESS_WITHOUT_PORT%\"\n"

stat_prefix: ingress_http

route_config:

name: local_route

virtual_hosts:

- name: vh_001

domains: ["*"]

routes:

- match:

prefix: "/"

route:

cluster: mycluster

http_filters:

- name: envoy.filters.http.router

clusters:

- name: mycluster

connect_timeout: 0.25s

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: mycluster

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: colored

port_value: 80

2)filebeat/filebeat.yaml

    filebeat.inputs:
    - type: container
      paths:
        - '/var/lib/docker/containers/*/*.log'

    processors:
    - add_docker_metadata:
        host: "unix:///var/run/docker.sock"
    - decode_json_fields:
        fields: ["message"]
        target: "json"
        overwrite_keys: true

    output.elasticsearch:
      hosts: ["elasticsearch:9200"]
      indices:
        - index: "filebeat-%{+yyyy.MM.dd}"

    logging.json: true
    logging.metrics.enabled: false

3)docker-compose.yaml

version: '3.3'

services:

front-envoy:

image: envoyproxy/envoy-alpine:v1.20.0

volumes:

- ./front-envoy.yaml:/etc/envoy/envoy.yaml

networks:

envoymesh:

ipv4_address: 172.31.76.10

aliases:

- front-envoy

expose:

# Expose ports 80 (for general traffic) and 9901 (for the admin server)

- "80"

- "9901"

service_blue:

image: ikubernetes/servicemesh-app:latest

networks:

envoymesh:

aliases:

- colored

- blue

environment:

- SERVICE_NAME=blue

expose:

- "80"

service_green:

image: ikubernetes/servicemesh-app:latest

networks:

envoymesh:

aliases:

- colored

- green

environment:

- SERVICE_NAME=green

expose:

- "80"

service_red:

image: ikubernetes/servicemesh-app:latest

networks:

envoymesh:

aliases:

- colored

- red

environment:

- SERVICE_NAME=red

expose:

- "80"

elasticsearch:

image: "docker.elastic.co/elasticsearch/elasticsearch:7.14.2"

environment:

- "ES_JAVA_OPTS=-Xms1g -Xmx1g"

- "discovery.type=single-node"

- "cluster.name=myes"

- "node.name=myes01"

ulimits:

memlock:

soft: -1

hard: -1

networks:

envoymesh:

ipv4_address: 172.31.76.15

aliases:

- es

- myes01

ports:

- "9200:9200"

volumes:

- elasticsearch_data:/usr/share/elasticsearch/data

kibana:

image: "docker.elastic.co/kibana/kibana:7.14.2"

environment:

ELASTICSEARCH_URL: http://myes01:9200

ELASTICSEARCH_HOSTS: '["http://myes01:9200"]'

networks:

envoymesh:

ipv4_address: 172.31.76.16

aliases:

- kibana

- kib

ports:

- "5601:5601"

filebeat:

image: "docker.elastic.co/beats/filebeat:7.14.2"

networks:

envoymesh:

ipv4_address: 172.31.76.17

aliases:

- filebeat

- fb

user: root

command: ["--strict.perms=false"]

volumes:

- ./filebeat/filebeat.yaml:/usr/share/filebeat/filebeat.yml

- /var/lib/docker:/var/lib/docker:ro

- /var/run/docker.sock:/var/run/docker.sock

volumes:

elasticsearch_data:

networks:

envoymesh:

driver: bridge

ipam:

config:

- subnet: 172.31.76.0/24

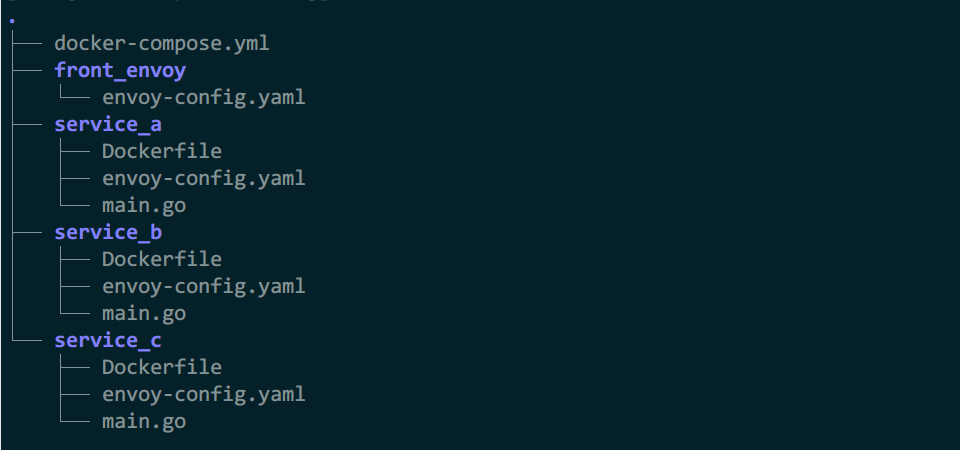

Example 3: tracing with zipkin

1)front_envoy/envoy-config.yaml

node:

id: front-envoy

cluster: front-envoy

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

static_resources:

listeners:

- name: http_listener-service_a

address:

socket_address:

address: 0.0.0.0

port_value: 80

traffic_direction: OUTBOUND

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

generate_request_id: true

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

codec_type: AUTO

stat_prefix: ingress_http

route_config:

name: local_route

virtual_hosts:

- name: backend

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: service_a

decorator:

operation: checkAvailability

response_headers_to_add:

- header:

key: "x-b3-traceid"

value: "%REQ(x-b3-traceid)%"

- header:

key: "x-request-id"

value: "%REQ(x-request-id)%"

http_filters:

- name: envoy.filters.http.router

clusters:

- name: zipkin

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: zipkin

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: zipkin

port_value: 9411

- name: service_a

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: service_a

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: service_a_envoy

port_value: 8786

2)Dockerfile

Shared by all three services

    FROM golang:alpine
    COPY main.go main.go
    CMD go run main.go

3)service_a directory

main.go

    package main

    import (
    	"fmt"
    	"io"
    	"log"
    	"net/http"
    )

    // traceHeaders are copied from the inbound request to every outbound call
    // so that Envoy can attach the new spans to the same trace.
    var traceHeaders = []string{
    	"x-request-id",
    	"x-b3-traceid",
    	"x-b3-spanid",
    	"x-b3-parentspanid",
    	"x-b3-sampled",
    	"x-b3-flags",
    	"x-ot-span-context",
    }

    // callService sends a GET to url through the local Envoy egress listener,
    // propagating the trace headers of r, and writes the response body to w.
    func callService(w http.ResponseWriter, r *http.Request, url string) {
    	req, err := http.NewRequest("GET", url, nil)
    	if err != nil {
    		log.Printf("building request for %s: %v", url, err)
    		return
    	}
    	for _, h := range traceHeaders {
    		if v := r.Header.Get(h); v != "" {
    			req.Header.Set(h, v)
    		}
    	}
    	resp, err := http.DefaultClient.Do(req)
    	if err != nil {
    		// resp is nil on error, so return before touching resp.Body.
    		log.Printf("calling %s: %v", url, err)
    		return
    	}
    	defer resp.Body.Close()
    	if _, err := io.Copy(w, resp.Body); err != nil {
    		log.Printf("reading response from %s: %v", url, err)
    	}
    }

    func handler(w http.ResponseWriter, r *http.Request) {
    	fmt.Fprint(w, "Calling Service B: ")
    	callService(w, r, "http://localhost:8788/") // egress listener for service B
    	fmt.Fprint(w, "Hello from service A.\n")
    	callService(w, r, "http://localhost:8791/") // egress listener for service C
    }

    func main() {
    	http.HandleFunc("/", handler)
    	log.Fatal(http.ListenAndServe(":8081", nil))
    }

envoy-config.yaml

node:

id: service-a

cluster: service-a

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

static_resources:

listeners:

- name: service-a-svc-http-listener

address:

socket_address:

address: 0.0.0.0

port_value: 8786

traffic_direction: INBOUND

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

codec_type: AUTO

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

route_config:

name: service-a-svc-http-route

virtual_hosts:

- name: service-a-svc-http-route

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: local_service

decorator:

operation: checkAvailability

http_filters:

- name: envoy.filters.http.router

- name: service-b-svc-http-listener

address:

socket_address:

address: 0.0.0.0

port_value: 8788

traffic_direction: OUTBOUND

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: egress_http_to_service_b

codec_type: AUTO

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

route_config:

name: service-b-svc-http-route

virtual_hosts:

- name: service-b-svc-http-route

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: service_b

decorator:

operation: checkStock

http_filters:

- name: envoy.filters.http.router

- name: service-c-svc-http-listener

address:

socket_address:

address: 0.0.0.0

port_value: 8791

traffic_direction: OUTBOUND

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: egress_http_to_service_c

codec_type: AUTO

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

route_config:

name: service-c-svc-http-route

virtual_hosts:

- name: service-c-svc-http-route

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: service_c

decorator:

operation: checkStock

http_filters:

- name: envoy.filters.http.router

clusters:

- name: zipkin

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: zipkin

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: zipkin

port_value: 9411

- name: local_service

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: local_service

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: 127.0.0.1

port_value: 8081

- name: service_b

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: service_b

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: service_b_envoy

port_value: 8789

- name: service_c

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: service_c

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: service_c_envoy

port_value: 8790

4)service_b directory

main.go

    package main

    import (
    	"fmt"
    	"log"
    	"net/http"
    )

    func handler(w http.ResponseWriter, r *http.Request) {
    	fmt.Fprintf(w, "Hello from service B.\n")
    }

    func main() {
    	http.HandleFunc("/", handler)
    	log.Fatal(http.ListenAndServe(":8082", nil))
    }

envoy-config.yaml

node:

id: service-b

cluster: service-b

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

static_resources:

listeners:

- name: service-b-svc-http-listener

address:

socket_address:

address: 0.0.0.0

port_value: 8789

traffic_direction: INBOUND

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

codec_type: AUTO

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

route_config:

name: service-b-svc-http-route

virtual_hosts:

- name: service-b-svc-http-route

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: local_service

decorator:

operation: checkAvailability

http_filters:

- name: envoy.filters.http.fault

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault

max_active_faults: 100

abort:

http_status: 503

percentage:

numerator: 15

denominator: HUNDRED

- name: envoy.filters.http.router

typed_config: {}

clusters:

- name: zipkin

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: zipkin

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: zipkin

port_value: 9411

- name: local_service

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: local_service

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: 127.0.0.1

port_value: 8082

5)service_c directory

main.go

    package main

    import (
    	"fmt"
    	"log"
    	"net/http"
    )

    func handler(w http.ResponseWriter, r *http.Request) {
    	fmt.Fprintf(w, "Hello from service C.\n")
    }

    func main() {
    	http.HandleFunc("/", handler)
    	log.Fatal(http.ListenAndServe(":8083", nil))
    }

envoy-config.yaml

node:

id: service-c

cluster: service-c

admin:

profile_path: /tmp/envoy.prof

access_log_path: /tmp/admin_access.log

address:

socket_address:

address: 0.0.0.0

port_value: 9901

layered_runtime:

layers:

- name: admin

admin_layer: {}

static_resources:

listeners:

- name: service-c-svc-http-listener

address:

socket_address:

address: 0.0.0.0

port_value: 8790

traffic_direction: INBOUND

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

codec_type: AUTO

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

route_config:

name: service-c-svc-http-route

virtual_hosts:

- name: service-c-svc-http-route

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: local_service

decorator:

operation: checkAvailability

http_filters:

- name: envoy.filters.http.fault

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault

max_active_faults: 100

delay:

fixed_delay: 3s

percentage:

numerator: 10

denominator: HUNDRED

- name: envoy.filters.http.router

typed_config: {}

clusters:

- name: zipkin

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: zipkin

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: zipkin

port_value: 9411

- name: local_service

connect_timeout: 0.25s

type: strict_dns

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: local_service

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: 127.0.0.1

port_value: 8083

6)docker-compose.yml

version: '3.3'

services:

front-envoy:

image: envoyproxy/envoy-alpine:v1.20.0

volumes:

- "./front_envoy/envoy-config.yaml:/etc/envoy/envoy.yaml"

networks:

envoymesh:

ipv4_address: 172.31.85.10

aliases:

- front-envoy

- front

ports:

- 8080:80

- 9901:9901

service_a_envoy:

image: envoyproxy/envoy-alpine:v1.20.0

volumes:

- "./service_a/envoy-config.yaml:/etc/envoy/envoy.yaml"

networks:

envoymesh:

aliases:

- service_a_envoy

- service-a-envoy

ports:

- 8786

- 8788

- 8791

service_a:

build: service_a/

network_mode: "service:service_a_envoy"

#ports:

#- 8081

depends_on:

- service_a_envoy

service_b_envoy:

image: envoyproxy/envoy-alpine:v1.20.0

volumes:

- "./service_b/envoy-config.yaml:/etc/envoy/envoy.yaml"

networks:

envoymesh:

aliases:

- service_b_envoy

- service-b-envoy

ports:

- 8789

service_b:

build: service_b/

network_mode: "service:service_b_envoy"

#ports:

#- 8082

depends_on:

- service_b_envoy

service_c_envoy:

image: envoyproxy/envoy-alpine:v1.20.0

volumes:

- "./service_c/envoy-config.yaml:/etc/envoy/envoy.yaml"

networks:

envoymesh:

aliases:

- service_c_envoy

- service-c-envoy

ports:

- 8790

service_c:

build: service_c/

network_mode: "service:service_c_envoy"

#ports:

#- 8083

depends_on:

- service_c_envoy

zipkin:

image: openzipkin/zipkin:2

networks:

envoymesh:

ipv4_address: 172.31.85.15

aliases:

- zipkin

ports:

- "9411:9411"

networks:

envoymesh:

driver: bridge

ipam:

config:

- subnet: 172.31.85.0/24

7)Test

docker-compose build

docker-compose up

curl -vv 172.31.85.10

Then open the zipkin UI at <host IP>:9411 to view the traces

Example 4: tracing with skywalking

See the official example: https://github.com/envoyproxy/envoy/tree/main/examples/skywalking
