Envoy xDS API dynamic configuration

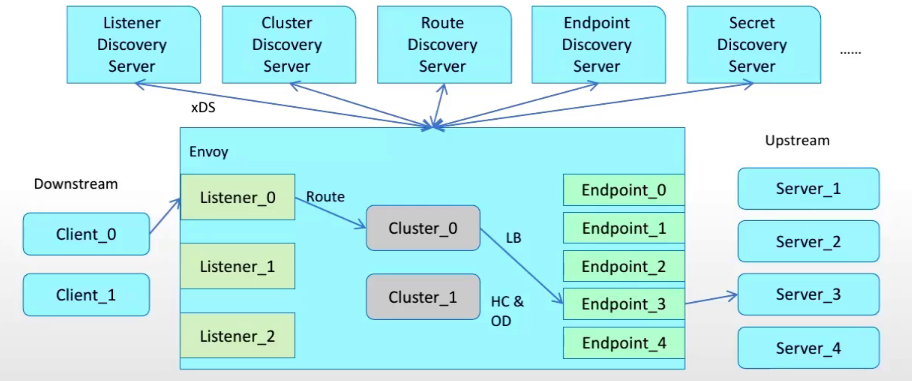

xDS API dynamic configuration

xDS is the mechanism for configuring Envoy's resources dynamically. It is also called the data plane API.

Together these APIs make up the xDS API. Each of them is eventually consistent, and they operate independently of one another.

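Some higher-level operations (for example an A/B deployment of a service) need their updates to be sequenced to avoid dropping traffic. So when a single management server provides several API types, the Aggregated Discovery Service (ADS) API should also be used: it lets all the other APIs be marshalled over a single bidirectional gRPC stream to a single management server, which makes it possible to order the API updates.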
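The xDS protocol documentation describes a "make before break" order for such updates: CDS first, then EDS for those clusters, then LDS, then RDS, then VHDS, and only afterwards the removal of clusters and endpoints that nothing references anymore. A minimal Python sketch of a management server sorting one batch of pending updates by that rule; the `(api, name, is_removal)` tuple format and the function name are hypothetical.

```python
# Hypothetical sketch: order pending xDS updates so each resource is pushed
# only after the resources it depends on ("make before break").
PUSH_ORDER = ["CDS", "EDS", "LDS", "RDS", "VHDS"]


def order_updates(updates):
    """updates: list of (api, resource_name, is_removal) tuples.

    Additions/changes are sorted by PUSH_ORDER; removals of stale
    resources are deferred until everything else has been pushed.
    """
    rank = {api: i for i, api in enumerate(PUSH_ORDER)}
    adds = [u for u in updates if not u[2]]
    removals = [u for u in updates if u[2]]
    # sorted() is stable: updates of the same type keep their input order
    return sorted(adds, key=lambda u: rank[u[0]]) + removals
```

With ADS this order is enforceable because every type travels on the same stream; with separate streams per type the server cannot control arrival order across streams.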

Every xDS API, ADS included, supports incremental (delta) transport.
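In the incremental variant the server sends only what changed: a DeltaDiscoveryResponse carries new or modified resources in `resources` and the names of deleted ones in `removed_resources`. A small Python sketch of computing those two lists from two snapshots; representing a snapshot as a name-to-version dict is an assumption made for illustration.

```python
def delta(old, new):
    """old/new: hypothetical snapshots, dict of resource name -> version.

    Returns (changed, removed): names to send in `resources` and names to
    list in `removed_resources` of a DeltaDiscoveryResponse.
    """
    changed = sorted(name for name, ver in new.items() if old.get(name) != ver)
    removed = sorted(name for name in old if name not in new)
    return changed, removed
```

Unchanged resources are simply not sent, which is what makes delta xDS cheaper than resending the full state for large configurations.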

Three versions of the API currently coexist, so the protocol version must be specified explicitly.

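There are three kinds of dynamic discovery configuration:

- Filesystem-based discovery: watch a path on the filesystem.
- API config source: a cluster made up of the management server(s) must be defined statically in advance.
  - gRPC API: opens a gRPC stream.
  - Delta gRPC: gRPC-based, but incremental.
  - REST-JSON API: polls a REST-JSON URL.
- ADS: discover every type of dynamic configuration through one management service.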
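To make the mechanisms concrete, here is a Python sketch that emits the ConfigSource fragment each one corresponds to, as a dict that can be dumped to JSON (Envoy also accepts JSON config files). The helper name and its `kind` labels are hypothetical; the field names are the ones used in the configurations later in this article.

```python
import json


def config_source(kind, *, path=None, cluster=None, refresh_delay="1s"):
    """Return a ConfigSource dict for one discovery mechanism.

    kind: "path", "grpc", "delta_grpc", "rest" or "ads" (hypothetical labels).
    """
    src = {"resource_api_version": "V3"}
    if kind == "path":
        src["path"] = path  # filesystem discovery
    elif kind in ("grpc", "delta_grpc"):
        src["api_config_source"] = {
            "api_type": "GRPC" if kind == "grpc" else "DELTA_GRPC",
            "transport_api_version": "V3",
            "grpc_services": [{"envoy_grpc": {"cluster_name": cluster}}],
        }
    elif kind == "rest":
        src["api_config_source"] = {
            "api_type": "REST",
            "transport_api_version": "V3",
            "cluster_names": [cluster],  # REST takes cluster names directly
            "refresh_delay": refresh_delay,
        }
    elif kind == "ads":
        src["ads"] = {}  # resources arrive over the shared ADS stream
    else:
        raise ValueError(f"unknown discovery kind: {kind}")
    return src


# Example: an LDS source fetched over gRPC from the xds_cluster cluster
print(json.dumps({"lds_config": config_source("grpc", cluster="xds_cluster")}, indent=2))
```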

Common resource types supported by xDS v3:

- envoy.config.listener.v3.Listener(lds)

- envoy.config.route.v3.RouteConfiguration(rds)

- envoy.config.route.v3.ScopedRouteConfiguration(srds)

- envoy.config.route.v3.VirtualHost(vhds)

- envoy.config.cluster.v3.Cluster(cds)

- envoy.config.endpoint.v3.ClusterLoadAssignment(eds)

- envoy.extensions.transport_sockets.tls.v3.Secret(sds)

- envoy.service.runtime.v3.Runtime(rtds)
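The `@type` field in discovery files and the `type_url` field in xDS messages carry these names with a `type.googleapis.com/` prefix. A lookup-table sketch in Python; the dict and helper names are hypothetical.

```python
# Prefix shared by every xDS type URL
TYPE_PREFIX = "type.googleapis.com/"

XDS_TYPES = {
    "LDS": "envoy.config.listener.v3.Listener",
    "RDS": "envoy.config.route.v3.RouteConfiguration",
    "SRDS": "envoy.config.route.v3.ScopedRouteConfiguration",
    "VHDS": "envoy.config.route.v3.VirtualHost",
    "CDS": "envoy.config.cluster.v3.Cluster",
    "EDS": "envoy.config.endpoint.v3.ClusterLoadAssignment",
    "SDS": "envoy.extensions.transport_sockets.tls.v3.Secret",
    "RTDS": "envoy.service.runtime.v3.Runtime",
}


def type_url(api):
    """Short API name (e.g. "eds") -> full type URL."""
    return TYPE_PREFIX + XDS_TYPES[api.upper()]


def api_for(url):
    """Full type URL -> short API name, or None if it is not in the table."""
    if not url.startswith(TYPE_PREFIX):
        return None
    name = url[len(TYPE_PREFIX):]
    for api, full in XDS_TYPES.items():
        if full == name:
            return api
    return None
```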

Bootstrap configuration

A bootstrap configuration must be provided when dynamic configuration is used.

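The top-level `node` section: whenever Envoy sends a request to the management server, it must report its own identity, i.e. id, cluster, metadata, locality and so on.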

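```yaml
node:
  id: str
  cluster:
  metadata: {}
  locality:                    # region/zone the host belongs to
    region:
    zone:
    sub_zone:
  user_agent_name: str         # custom user agent name
  user_agent_version:          # custom UA version (mutually exclusive with user_agent_build_version)
  user_agent_build_version:    # custom UA build version
    version:
    metadata: {}
  extensions: []
  client_features: []
  listening_addresses: []
```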
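A Python sketch that assembles the node section as a dict, dropping unset optional fields and enforcing the one-of between `user_agent_version` and `user_agent_build_version`. Treating `id` and `cluster` as mandatory is this sketch's own assumption. It is a sensible one here: the management server in the gRPC examples below is started with `NODE_ID=envoy_front_proxy`, which matches the front proxy's `node.id`, presumably so it knows which Envoy to serve.

```python
def make_node(node_id, cluster, *, region=None, zone=None, sub_zone=None,
              metadata=None, user_agent_version=None, user_agent_build_version=None):
    """Build a bootstrap `node` dict (hypothetical helper)."""
    if not node_id or not cluster:
        raise ValueError("node id and cluster must both be set")
    if user_agent_version and user_agent_build_version:
        raise ValueError("user_agent_version and user_agent_build_version are mutually exclusive")
    node = {"id": node_id, "cluster": cluster}
    # Only emit locality keys that were actually given
    locality = {k: v for k, v in (("region", region), ("zone", zone), ("sub_zone", sub_zone)) if v}
    if locality:
        node["locality"] = locality
    if metadata:
        node["metadata"] = metadata
    if user_agent_version:
        node["user_agent_version"] = user_agent_version
    if user_agent_build_version:
        node["user_agent_build_version"] = user_agent_build_version
    return node
```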

How dynamic configuration is loaded:

Envoy (proxy) clients:

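- The core resources xDS has to configure for Envoy are Listener (LDS), RouteConfiguration (RDS), Cluster (CDS) and ClusterLoadAssignment (EDS).

- Each listener can reference 1 to n route configurations, a route configuration can point to multiple clusters, and each cluster can have 1 to n endpoints (delivered via EDS).

- At startup Envoy first fetches all Listener and Cluster resources, then fetches in parallel the RouteConfiguration and ClusterLoadAssignment resources those listeners and clusters depend on. In effect, every Listener and every Cluster is the root of part of Envoy's configuration tree.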

gRPC (non-proxy) clients:

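- At startup the client can fetch only the Listener resources it is interested in, then the route configurations those listeners need, then the clusters referenced by those routes, and finally the corresponding endpoints. Resources are loaded in dependency order, down to the endpoints.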

- The Listener resources are the roots of the client's whole configuration tree.
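The listener-rooted walk a non-proxy client performs can be sketched in Python as follows. The three dicts are hypothetical, simplified stand-ins for Listener, RouteConfiguration and Cluster resources; a real client reads these references out of the resources it receives.

```python
def fetch_plan(wanted_listeners, listeners, routes, clusters):
    """Return, per xDS type, the resource names a non-proxy client requests.

    listeners: listener name -> route config name (RDS)
    routes:    route config name -> list of cluster names
    clusters:  cluster name -> EDS service name
    """
    plan = {"LDS": [], "RDS": [], "CDS": [], "EDS": []}

    def add(kind, name):
        if name not in plan[kind]:
            plan[kind].append(name)

    for lis in wanted_listeners:      # roots of the configuration tree
        add("LDS", lis)
        add("RDS", listeners[lis])
    for rc in plan["RDS"]:            # clusters referenced by the routes
        for c in routes[rc]:
            add("CDS", c)
    for c in plan["CDS"]:             # endpoints of those clusters
        add("EDS", clusters[c])
    return plan
```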

Filesystem-based dynamic discovery

Envoy uses inotify to watch the file for changes and, when it is updated, parses the DiscoveryResponse contained in the file.

Binary protobuf, JSON, YAML and proto text are all supported formats for the discovery response.
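Envoy's ConfigSource documentation notes that, for a plain `path` without a watched directory, Envoy watches for the file being moved into place, because in general only moves are atomic. A Python sketch of updating a discovery file that way: write a temporary file in the same directory, then rename it over the target. The function name is hypothetical; JSON output is used because it needs no third-party YAML library.

```python
import json
import os
import tempfile


def atomic_write_json(path, doc):
    """Replace `path` with `doc` serialized as JSON via temp file + rename."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must be on the same filesystem for the rename to be atomic
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".tmp-", suffix=".json")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(doc, f, indent=2)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp, path)  # atomic rename on POSIX
    except BaseException:
        if os.path.exists(tmp):
            os.unlink(tmp)
        raise
```

One caveat for containers: a single file bind-mounted into a container (as in Example 1 below) is bound to the original inode, so a rename on the host is not seen inside the container; mounting the whole directory avoids this.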

Configuration

EDS (cluster side):

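```yaml
clusters:
- name: target_cluster
  connect_timeout: 0.25s
  lb_policy: ROUND_ROBIN
  type: EDS
  eds_cluster_config:
    service_name: webcluster
    eds_config:
      path: <file>            # .conf extension: JSON content; .yaml extension: YAML content
      # api_config_source:    # alternatives to path; set exactly one of
      # ads:                  #   path, api_config_source or ads
```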

Contents of the discovery file:

```yaml
resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webcluster
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            address:
            port_value:
```
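The `cluster_name` in the discovery file has to equal the `service_name` in the cluster's `eds_cluster_config` (`webcluster` in the fragment above). A Python sketch that builds this discovery document from a list of `(ip, port)` pairs; the function name and the sample address are hypothetical.

```python
import json

CLA_TYPE = "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"


def eds_discovery_doc(cluster_name, endpoints):
    """Build a file-based EDS discovery document.

    endpoints: iterable of (ip, port) pairs.
    """
    lb_endpoints = [
        {"endpoint": {"address": {"socket_address": {"address": ip, "port_value": port}}}}
        for ip, port in endpoints
    ]
    return {"resources": [{
        "@type": CLA_TYPE,
        "cluster_name": cluster_name,  # must match eds_cluster_config.service_name
        "endpoints": [{"lb_endpoints": lb_endpoints}],
    }]}


print(json.dumps(eds_discovery_doc("webcluster", [("10.0.0.1", 8080)]), indent=2))
```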

Examples

Example 1: EDS via filesystem discovery

1) Main configuration file: envoy.yml

```yaml
node:
  id: envoy_001
  cluster: testcluster

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: web_service_1
              domains:
              - "*"
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: EDS
    lb_policy: ROUND_ROBIN
    eds_cluster_config:
      service_name: webservice
      eds_config:
        path: '/etc/envoy/file.yaml'
```

2) Discovery file: file.yml

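```yaml
resources:
- "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
  cluster_name: webservice
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            # webserver01 shares Envoy's network namespace and binds only to
            # 127.0.0.1 (HOST=127.0.0.1), so it is reached via loopback
            address: 127.0.0.1
            port_value: 8080
```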

3) docker-compose.yml

```yaml
version: '3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    volumes:
    - ./envoy.yml:/etc/envoy/envoy.yaml
    - ./file.yml:/etc/envoy/file.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.3.2
        aliases:
        - ingress

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:envoy"
    depends_on:
    - envoy

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.3.0/24
```

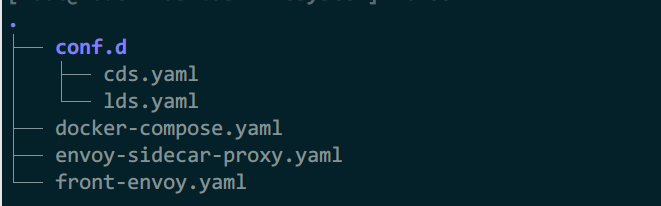

Example 2: filesystem-based subscription (LDS and CDS)

1) Main configuration of the gateway Envoy: front-envoy.yaml

```yaml
node:
  id: front_proxy
  cluster: test_cluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /dev/null
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

dynamic_resources:
  lds_config:
    path: /etc/envoy/conf.d/lds.yaml   # ./conf.d/ is mounted at /etc/envoy/conf.d/
  cds_config:
    path: /etc/envoy/conf.d/cds.yaml
```

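2) Sidecar container configuration: envoy-sidecar-proxy.yaml

This Envoy is the mesh proxy for the application container; the sidecar and the application container share the same network namespace.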

```yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 8080 }
```

3) lds.yaml (create the conf.d directory first with `mkdir -p conf.d`; lds.yaml and cds.yaml both go in it)

```yaml
resources:
- "@type": type.googleapis.com/envoy.config.listener.v3.Listener
  name: listener_http
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 80
  filter_chains:
  - filters:
    - name: envoy.filters.network.http_connection_manager
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        stat_prefix: ingress_http
        route_config:
          name: local_route
          virtual_hosts:
          - name: local_service
            domains:
            - '*'
            routes:
            - match:
                prefix: '/'
              route:
                cluster: webcluster
        http_filters:
        - name: envoy.filters.http.router
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
```

4) cds.yaml

```yaml
resources:
- "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
  name: webcluster
  connect_timeout: 1s
  type: STRICT_DNS
  load_assignment:
    cluster_name: webcluster
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: webserver01   # compose service name, resolved via STRICT_DNS
              port_value: 80         # the sidecar Envoy's listener; the app itself binds 127.0.0.1:8080
      - endpoint:
          address:
            socket_address:
              address: webserver02
              port_value: 80
```
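Because LDS and CDS come from separate files here, a route can easily name a cluster that cds.yaml never defines; Envoy then answers with the route's `cluster_not_found_response_code` (503 by default). A Python sketch that cross-checks the two parsed files; it only inspects inline `route_config` blocks shaped like lds.yaml above, and the function name is hypothetical.

```python
def missing_clusters(lds_doc, cds_doc):
    """Route target clusters in lds_doc that no resource in cds_doc defines.

    Both arguments are parsed discovery files: dicts with a "resources" list.
    """
    defined = {r["name"] for r in cds_doc["resources"]}
    referenced = set()
    for listener in lds_doc["resources"]:
        for chain in listener.get("filter_chains", []):
            for flt in chain.get("filters", []):
                rc = flt.get("typed_config", {}).get("route_config", {})
                for vh in rc.get("virtual_hosts", []):
                    for route in vh.get("routes", []):
                        cluster = route.get("route", {}).get("cluster")
                        if cluster:
                            referenced.add(cluster)
    return sorted(referenced - defined)
```

Loading the YAML files into dicts needs a YAML parser such as PyYAML, which is not part of the standard library.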

5) docker-compose configuration

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    - ./conf.d/:/etc/envoy/conf.d/
    networks:
      envoymesh:
        ipv4_address: 172.31.12.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver01-app
    - webserver02
    - webserver02-app

  webserver01:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.12.11
        aliases:
        - webserver01-sidecar

  webserver01-app:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:webserver01"
    depends_on:
    - webserver01

  webserver02:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.12.12
        aliases:
        - webserver02-sidecar

  webserver02-app:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    network_mode: "service:webserver02"
    depends_on:
    - webserver02

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.12.0/24
```

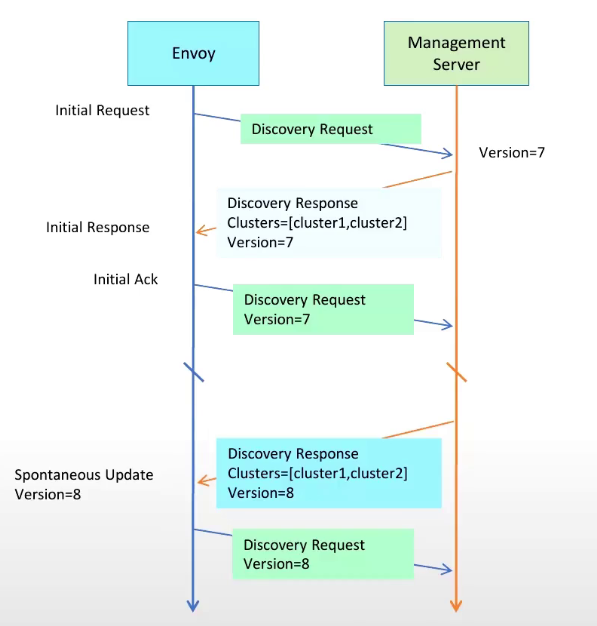

API-based dynamic discovery:

gRPC API:

Each xDS API is given its own gRPC configuration, which points to an upstream cluster corresponding to the management server.

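Envoy opens a separate bidirectional gRPC stream for each xDS resource type, and these streams may go to different management servers. Each stream maintains its own resource version independently; there is no mechanism for sharing a version across resource types.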

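Without ADS, each resource type may therefore be at a different version, because the Envoy API allows distinct EDS and RDS resources to point at different config sources.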
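On each of these streams the client acknowledges every DiscoveryResponse with a DiscoveryRequest whose `response_nonce` is the response's nonce: an ACK carries the new `version_info`, while a NACK keeps the last accepted `version_info` and fills in `error_detail`. A Python sketch of that per-type bookkeeping, one state object per stream; the class name and the `apply` callback are hypothetical.

```python
class XdsStreamState:
    """Version/ACK bookkeeping for one state-of-the-world xDS stream."""

    def __init__(self, type_url):
        self.type_url = type_url
        self.accepted_version = ""  # empty until a response is accepted

    def on_response(self, response, apply):
        """Apply a DiscoveryResponse dict and return the ACK/NACK request.

        apply(resources) must raise ValueError to reject the configuration.
        """
        try:
            apply(response["resources"])
        except ValueError as err:
            # NACK: report the error, keep the previously accepted version
            return {
                "type_url": self.type_url,
                "version_info": self.accepted_version,
                "response_nonce": response["nonce"],
                "error_detail": {"message": str(err)},
            }
        self.accepted_version = response["version_info"]
        # ACK: echo the new version together with the nonce
        return {
            "type_url": self.type_url,
            "version_info": self.accepted_version,
            "response_nonce": response["nonce"],
        }
```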

Configuration:

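LDS is used as the example below; CDS is configured the same way. Once dynamic discovery is configured, a listener's routes can either be provided inline by the listener or configured separately through RDS.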

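Note: the management server that serves the gRPC API must itself be defined as a cluster in Envoy, and Envoy then sends its xDS requests to that cluster. In other words, the management server's cluster is defined as a static resource in Envoy's own configuration.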

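```yaml
dynamic_resources:
  lds_config:
    resource_api_version: <version>     # xDS resource API version; Envoy 1.19+ uses V3
    api_config_source:
      api_type: <type>                  # how the API is fetched: REST, GRPC or DELTA_GRPC
      transport_api_version: <version>  # API version of the xDS transport protocol
      rate_limit_settings: {}           # rate limiting of discovery requests
      grpc_services:                    # one or more gRPC service sources
      - envoy_grpc:                     # Envoy's built-in gRPC client
          cluster_name: <name>          # cluster pointing at the management server
        # google_grpc: {}               # alternative to envoy_grpc: Google's C++ gRPC client
        timeout: <timeout>
```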

Examples

Example 1: LDS and CDS discovered via gRPC

1)front-envoy.yaml

```yaml
node:
  id: envoy_front_proxy
  cluster: webcluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

dynamic_resources:
  lds_config:
    resource_api_version: V3
    api_config_source:
      api_type: GRPC
      transport_api_version: V3
      grpc_services:
      - envoy_grpc:
          cluster_name: xds_cluster
  cds_config:
    resource_api_version: V3
    api_config_source:
      api_type: GRPC
      transport_api_version: V3
      grpc_services:
      - envoy_grpc:
          cluster_name: xds_cluster

static_resources:
  clusters:
  - name: xds_cluster
    connect_timeout: 0.25s
    type: STRICT_DNS
    # gRPC runs over HTTP/2, so HTTP/2 must be enabled for this cluster
    typed_extension_protocol_options:
      envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
        "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
        explicit_http_config:
          http2_protocol_options: {}
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: xds_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: xdsserver
                port_value: 18000
```

2)envoy-sidecar-proxy.yaml

```yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 8080 }
```

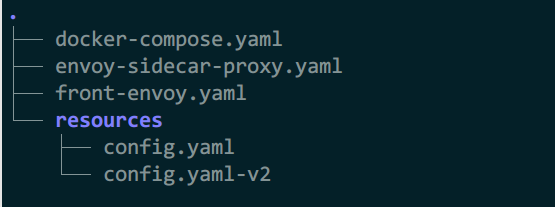

3)resources/config.yaml

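```yaml
# Input format of the ikubernetes/envoy-xds-server image (not Envoy configuration)
name: myconfig
spec:
  listeners:
  - name: listener_http
    address: 0.0.0.0
    port: 80
    routes:
    - name: local_route
      prefix: /
      clusters:
      - webcluster
  clusters:
  - name: webcluster
    endpoints:
    - address: 172.31.15.11
      port: 80    # webserver01's sidecar listener; the app itself binds 127.0.0.1:8080
```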

4)docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.15.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02
    - xdsserver

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.15.11

  webserver01-sidecar:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    network_mode: "service:webserver01"
    depends_on:
    - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.15.12

  webserver02-sidecar:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    network_mode: "service:webserver02"
    depends_on:
    - webserver02

  xdsserver:
    image: ikubernetes/envoy-xds-server:v0.1
    environment:
    - SERVER_PORT=18000
    - NODE_ID=envoy_front_proxy
    - RESOURCES_FILE=/etc/envoy-xds-server/config/config.yaml
    volumes:
    - ./resources:/etc/envoy-xds-server/config/
    networks:
      envoymesh:
        ipv4_address: 172.31.15.5
        aliases:
        - xdsserver
        - xds-service
    expose:
    - "18000"

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.15.0/24
```

5) Test: list the clusters the front proxy has learned, through its admin interface:

```bash
curl 172.31.15.2:9901/clusters
```

REST API:

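Uses HTTP with JSON payloads. Because it performs worse than gRPC it is rarely used; it was mostly seen before the v3 API (Envoy v1.19 and later support only V3).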

Configuration (similar to gRPC):

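```yaml
dynamic_resources:
  lds_config:
    resource_api_version: <version>     # xDS resource API version; Envoy 1.19+ uses V3
    api_config_source:
      api_type: REST                    # how the API is fetched: REST, GRPC or DELTA_GRPC
      transport_api_version: <version>  # API version of the xDS transport protocol; only V3 since v1.19
      cluster_names: []                 # clusters serving the API; REST only, cycled through on failure
      refresh_delay: <duration>         # polling interval, e.g. 1s
      request_timeout: <duration>       # request timeout, default 1s
```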
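With REST, Envoy polls every `refresh_delay` by POSTing a JSON DiscoveryRequest; for the v3 transport the paths are `/v3/discovery:listeners`, `/v3/discovery:routes`, `/v3/discovery:clusters` and `/v3/discovery:endpoints`. A Python sketch that builds (but does not send) one such poll with `urllib`, to show what goes over the wire; the server URL in the usage note is a hypothetical REST management server, and the helper name is made up.

```python
import json
import urllib.request

# v3 REST-JSON discovery paths and the matching resource type URLs
REST_ENDPOINTS = {
    "LDS": ("/v3/discovery:listeners", "type.googleapis.com/envoy.config.listener.v3.Listener"),
    "RDS": ("/v3/discovery:routes", "type.googleapis.com/envoy.config.route.v3.RouteConfiguration"),
    "CDS": ("/v3/discovery:clusters", "type.googleapis.com/envoy.config.cluster.v3.Cluster"),
    "EDS": ("/v3/discovery:endpoints", "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"),
}


def build_poll_request(base_url, api, node_id, cluster, version_info="", resource_names=()):
    """Build one REST-JSON xDS poll as an unsent urllib Request."""
    path, type_url = REST_ENDPOINTS[api]
    body = {
        "version_info": version_info,            # last accepted version, "" on first poll
        "node": {"id": node_id, "cluster": cluster},
        "resource_names": list(resource_names),  # empty = all resources for LDS/CDS
        "type_url": type_url,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is then `urllib.request.urlopen(req, timeout=1)`, the 1s matching the default `request_timeout`.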

ADS

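A single control plane delivers all API updates over a single gRPC stream (the order of updates must be planned so that no traffic is lost while they are applied).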

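With ADS, multiple independent DiscoveryRequest/DiscoveryResponse sequences are multiplexed over the single stream and told apart by their type URL.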
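A Python sketch of that multiplexing: the initial requests for several types share one stream, each tagged with its `type_url`, and incoming responses are dispatched by the same field. It also mirrors `set_node_on_first_message_only` (used in the example below), which makes Envoy send the node identity only in the first request on the stream. The function names and the handler table are hypothetical.

```python
def ads_requests(node, type_urls, set_node_on_first_message_only=True):
    """Initial DiscoveryRequests sent on one ADS stream, one per type URL."""
    requests = []
    for i, type_url in enumerate(type_urls):
        req = {"type_url": type_url, "version_info": "", "response_nonce": ""}
        if i == 0 or not set_node_on_first_message_only:
            req["node"] = node  # identity only once when the option is on
        requests.append(req)
    return requests


def dispatch(responses, handlers):
    """Route each DiscoveryResponse on the shared stream by its type_url."""
    for resp in responses:
        handlers[resp["type_url"]](resp)
```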

Examples

Example 1: LDS and CDS via ADS

1)front-envoy.yaml

```yaml
node:
  id: envoy_front_proxy
  cluster: webcluster

admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

dynamic_resources:
  ads_config:
    api_type: GRPC
    transport_api_version: V3
    grpc_services:
    - envoy_grpc:
        cluster_name: xds_cluster
    set_node_on_first_message_only: true
  cds_config:
    resource_api_version: V3
    ads: {}
  lds_config:
    resource_api_version: V3
    ads: {}

static_resources:
  clusters:
  - name: xds_cluster
    connect_timeout: 0.25s
    type: STRICT_DNS
    typed_extension_protocol_options:
      envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
        "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
        explicit_http_config:
          http2_protocol_options: {}
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: xds_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: xdsserver
                port_value: 18000
```

2)envoy-sidecar-proxy.yaml

```yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: local_cluster }
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

  clusters:
  - name: local_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: local_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 8080 }
```

3)resources/config.yaml

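```yaml
# Input format of the ikubernetes/envoy-xds-server image (not Envoy configuration)
name: myconfig
spec:
  listeners:
  - name: listener_http
    address: 0.0.0.0
    port: 80
    routes:
    - name: local_route
      prefix: /
      clusters:
      - webcluster
  clusters:
  - name: webcluster
    endpoints:
    - address: 172.31.16.11
      port: 80    # webserver01's sidecar listener; the app itself binds 127.0.0.1:8080
```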

4)docker-compose.yaml

```yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.16.2
        aliases:
        - front-proxy
    depends_on:
    - webserver01
    - webserver02
    - xdsserver

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    hostname: webserver01
    networks:
      envoymesh:
        ipv4_address: 172.31.16.11

  webserver01-sidecar:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    network_mode: "service:webserver01"
    depends_on:
    - webserver01

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
    - PORT=8080
    - HOST=127.0.0.1
    hostname: webserver02
    networks:
      envoymesh:
        ipv4_address: 172.31.16.12

  webserver02-sidecar:
    image: envoyproxy/envoy-alpine:v1.18-latest
    volumes:
    - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    network_mode: "service:webserver02"
    depends_on:
    - webserver02

  xdsserver:
    image: ikubernetes/envoy-xds-server:v0.1
    environment:
    - SERVER_PORT=18000
    - NODE_ID=envoy_front_proxy
    - RESOURCES_FILE=/etc/envoy-xds-server/config/config.yaml
    volumes:
    - ./resources:/etc/envoy-xds-server/config/
    networks:
      envoymesh:
        ipv4_address: 172.31.16.5
        aliases:
        - xdsserver
        - xds-service
    expose:
    - "18000"

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.16.0/24
```

浙公网安备 33010602011771号
