Kafka deployment

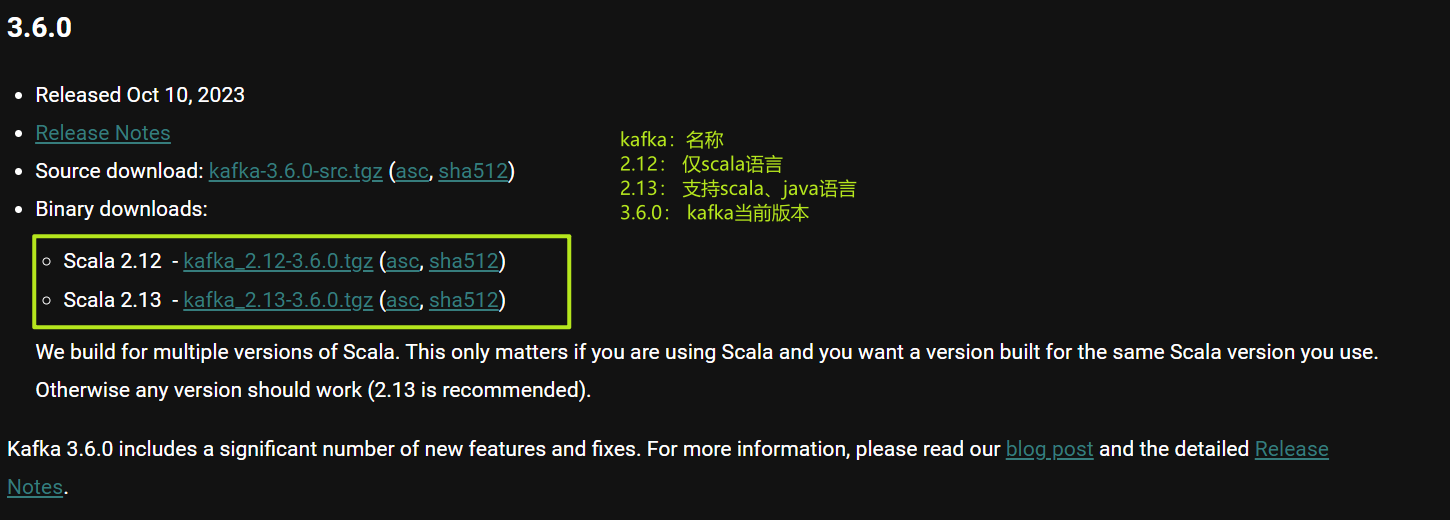

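Official download: https://kafka.apache.org/downloads

Aliyun mirror: http://mirrors.aliyun.com/apache/kafka/

Note: pay attention to the Kafka version and to which client languages support it.

Kafka depends on a ZooKeeper service at runtime. For installing ZooKeeper, see: zk部署 (ZooKeeper deployment)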

Single-node deployment:

1) Download the files

cd /opt

wget https://archive.apache.org/dist/kafka/2.8.1/kafka_2.13-2.8.1.tgz

tar xf kafka_2.13-2.8.1.tgz

ln -s kafka_2.13-2.8.1 kafka
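
The archive name encodes two versions: `kafka_2.13-2.8.1.tgz` is Kafka 2.8.1 built against Scala 2.13. As a small sketch of that naming scheme, the helper below (the function name `kafka_archive_url` is made up for illustration) assembles the archive URL used above from the two version numbers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the archive.apache.org URL for a Kafka release.
# Usage: kafka_archive_url SCALA_VERSION KAFKA_VERSION
kafka_archive_url() {
  local scala_version="$1" kafka_version="$2"
  # File name pattern: kafka_<scala version>-<kafka version>.tgz
  printf 'https://archive.apache.org/dist/kafka/%s/kafka_%s-%s.tgz\n' \
    "$kafka_version" "$scala_version" "$kafka_version"
}

# Prints the URL downloaded in this step.
kafka_archive_url 2.13 2.8.1
```

Changing only the second argument is usually enough when moving to another Kafka release, provided that release was published for the same Scala version.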

2) Edit the configuration file

mkdir -p /opt/kafka/data

cd /opt/kafka/config

vim server.properties

broker.id=1

listeners=PLAINTEXT://2.2.2.12:9092

num.network.threads=16

num.io.threads=16

log.dirs=/opt/kafka/data

num.partitions=3

zookeeper.connect=2.2.2.12:2181,2.2.2.22:2181,2.2.2.32:2181
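
If the edits should be scripted instead of made in vim, something along these lines could work. It is only a sketch: `set_prop` is a hypothetical helper, it assumes GNU sed, and it assumes values contain neither `|` nor `&`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in a properties file.
# Replaces an existing (possibly commented-out) "KEY=" line, otherwise appends.
# Usage: set_prop FILE KEY VALUE
set_prop() {
  local file="$1" key="$2" value="$3"
  # Escape the dots in the key so they match literally in the regex.
  local key_re="${key//./\\.}"
  if grep -qE "^#?${key_re}=" "$file"; then
    sed -i -E "s|^#?${key_re}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example calls for the values listed above (not executed here):
#   set_prop /opt/kafka/config/server.properties broker.id 1
#   set_prop /opt/kafka/config/server.properties listeners PLAINTEXT://2.2.2.12:9092
#   set_prop /opt/kafka/config/server.properties log.dirs /opt/kafka/data
```

Keeping a backup copy of `server.properties` before running it is a sensible precaution, since `sed -i` rewrites the file in place.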

3) Write the systemd service file

cat > /etc/systemd/system/kafka.service <<eof

[Unit]

After=network.target

[Service]

Type=forking

ExecStart=/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

ExecStop=/opt/kafka/bin/kafka-server-stop.sh

[Install]

WantedBy=multi-user.target

eof

systemctl daemon-reload

systemctl enable --now kafka
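
Because the broker is started with `-daemon`, `systemctl` returns as soon as the start script has forked, which can be before the broker accepts connections. A rough way to wait for the listener is sketched below. `wait_for_port` is a hypothetical helper, it relies on bash's `/dev/tcp` feature, and a connection attempt to an unreachable host may block longer than the stated timeout.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a TCP port until it accepts connections.
# Usage: wait_for_port HOST PORT [TIMEOUT_SECONDS]
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # bash-only: opening /dev/tcp/HOST/PORT attempts a TCP connection.
    # The subshell closes the descriptor again when it exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Example for the listener configured above (not executed here):
#   wait_for_port 2.2.2.12 9092 60 && echo "broker is listening"
```

An open port only shows that the process is listening. It does not prove that the broker has registered with ZooKeeper.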

Cluster deployment

| IP | Node role |
|---|---|
| 2.2.2.12 | Node A: ZooKeeper, Kafka |
| 2.2.2.22 | Node B: ZooKeeper, Kafka |
| 2.2.2.32 | Node C: ZooKeeper, Kafka |

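Note: the socket buffer sizes can be left to the operating system kernel (in Kafka, by setting socket.send.buffer.bytes and socket.receive.buffer.bytes to -1). If the kernel's buffers are used, the kernel parameters need to be tuned as well.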

1) Install ZooKeeper and Kafka on all nodes

2) On host A, edit the Kafka configuration file

# Add the kernel parameters

cat > /etc/sysctl.d/sock.conf <<eof

net.core.wmem_default=8388608

net.core.rmem_default=8388608

net.core.rmem_max=16777216

net.core.wmem_max=16777216

net.ipv4.tcp_mem=786432 1048576 1572864

net.ipv4.tcp_rmem=10240 87380 12582912

net.ipv4.tcp_wmem=10240 87380 12582912

eof

sysctl -p /etc/sysctl.d/sock.conf

mkdir -p /data/kafka-logs

cat > /opt/kafka/config/server.properties <<eof

broker.id=0

listeners=PLAINTEXT://:9092

zookeeper.connect=2.2.2.12:2181,2.2.2.22:2181,2.2.2.32:2181

zookeeper.connection.timeout.ms=18000

log.dirs=/data/kafka-logs

num.network.threads=8

num.io.threads=12

num.partitions=3

num.recovery.threads.per.data.dir=2

num.replica.alter.log.dirs.threads=8

num.replica.fetchers=3

background.threads=15

message.max.bytes=1048588

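# max.message.bytes (topic-level) and max.request.size (producer-side) are not broker properties; set them on the topic or in the producer configuration instead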

socket.send.buffer.bytes=-1

socket.receive.buffer.bytes=-1

socket.request.max.bytes=104857600

offsets.topic.replication.factor=3

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=2

replica.fetch.max.bytes=1048588

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

group.initial.rebalance.delay.ms=0

auto.create.topics.enable=true

delete.topic.enable=true

eof

systemctl start kafka
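
To confirm that the values written to /etc/sysctl.d/sock.conf are actually in effect, the file can be compared against what the kernel reports under /proc/sys. The sketch below does that. `check_sysctl_file` and its optional second argument are hypothetical, and it assumes simple `key=value` lines like the ones above.

```bash
#!/usr/bin/env bash
# Collapse runs of whitespace (tabs, newlines) to single spaces and trim the ends.
_norm() { tr -s '[:space:]' ' ' | sed -e 's/^ //' -e 's/ $//'; }

# Hypothetical helper: compare key=value lines in a sysctl file with live values.
# Usage: check_sysctl_file CONF [PROC_ROOT]   (PROC_ROOT defaults to /proc/sys)
# Returns non-zero if any value differs or is missing.
check_sysctl_file() {
  local conf="$1" root="${2:-/proc/sys}" rc=0 key want have path
  while IFS='=' read -r key want || [ -n "$key" ]; do
    # Skip blank lines and comments.
    case "$key" in ''|'#'*|';'*) continue ;; esac
    key="$(printf '%s' "$key" | tr -d '[:space:]')"
    want="$(printf '%s' "$want" | _norm)"
    # net.core.rmem_max -> <root>/net/core/rmem_max
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ -r "$path" ]; then have="$(_norm < "$path")"; else have="<missing>"; fi
    if [ "$want" != "$have" ]; then
      echo "MISMATCH $key: want '$want', have '$have'"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}

# Example (not executed here):
#   check_sysctl_file /etc/sysctl.d/sock.conf && echo "kernel parameters applied"
```

The second argument exists mainly so the function can be tried against a copy of the tree without touching the live system.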

3) On host B, edit the Kafka configuration file

# Add the kernel parameters

cat > /etc/sysctl.d/sock.conf <<eof

net.core.wmem_default=8388608

net.core.rmem_default=8388608

net.core.rmem_max=16777216

net.core.wmem_max=16777216

net.ipv4.tcp_mem=786432 1048576 1572864

net.ipv4.tcp_rmem=10240 87380 12582912

net.ipv4.tcp_wmem=10240 87380 12582912

eof

sysctl -p /etc/sysctl.d/sock.conf

mkdir -p /data/kafka-logs

cat > /opt/kafka/config/server.properties <<eof

broker.id=1

listeners=PLAINTEXT://:9092

zookeeper.connect=2.2.2.12:2181,2.2.2.22:2181,2.2.2.32:2181

zookeeper.connection.timeout.ms=18000

log.dirs=/data/kafka-logs

num.network.threads=8

num.io.threads=12

num.partitions=3

num.recovery.threads.per.data.dir=2

num.replica.alter.log.dirs.threads=8

num.replica.fetchers=3

background.threads=15

message.max.bytes=1048588

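# max.message.bytes (topic-level) and max.request.size (producer-side) are not broker properties; set them on the topic or in the producer configuration instead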

socket.send.buffer.bytes=-1

socket.receive.buffer.bytes=-1

socket.request.max.bytes=104857600

offsets.topic.replication.factor=3

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=2

replica.fetch.max.bytes=1048588

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

group.initial.rebalance.delay.ms=0

auto.create.topics.enable=true

delete.topic.enable=true

eof

systemctl start kafka

4) On host C, edit the Kafka configuration file

# Add the kernel parameters

cat > /etc/sysctl.d/sock.conf <<eof

net.core.wmem_default=8388608

net.core.rmem_default=8388608

net.core.rmem_max=16777216

net.core.wmem_max=16777216

net.ipv4.tcp_mem=786432 1048576 1572864

net.ipv4.tcp_rmem=10240 87380 12582912

net.ipv4.tcp_wmem=10240 87380 12582912

eof

sysctl -p /etc/sysctl.d/sock.conf

mkdir -p /data/kafka-logs

cat > /opt/kafka/config/server.properties <<eof

broker.id=2

listeners=PLAINTEXT://:9092

zookeeper.connect=2.2.2.12:2181,2.2.2.22:2181,2.2.2.32:2181

zookeeper.connection.timeout.ms=18000

log.dirs=/data/kafka-logs

num.network.threads=8

num.io.threads=12

num.partitions=3

num.recovery.threads.per.data.dir=2

num.replica.alter.log.dirs.threads=8

num.replica.fetchers=3

background.threads=15

message.max.bytes=1048588

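# max.message.bytes (topic-level) and max.request.size (producer-side) are not broker properties; set them on the topic or in the producer configuration instead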

socket.send.buffer.bytes=-1

socket.receive.buffer.bytes=-1

socket.request.max.bytes=104857600

offsets.topic.replication.factor=3

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=2

replica.fetch.max.bytes=1048588

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

group.initial.rebalance.delay.ms=0

auto.create.topics.enable=true

delete.topic.enable=true

eof

systemctl start kafka
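
The server.properties files for hosts A, B and C differ only in `broker.id`. One way to avoid keeping three copies in sync is to hold the shared settings in a single file and derive each host's configuration from it. The sketch below assumes that approach. `render_broker_config` and the file name `common.properties` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write OUT as "broker.id=ID" followed by the shared settings.
# Any broker.id line already present in the shared file is dropped.
# Usage: render_broker_config BROKER_ID COMMON_FILE OUT_FILE
render_broker_config() {
  local broker_id="$1" common="$2" out="$3"
  [ -r "$common" ] || { echo "cannot read $common" >&2; return 1; }
  {
    printf 'broker.id=%s\n' "$broker_id"
    # grep exits 1 when nothing is left to print; that is not an error here.
    grep -v '^broker\.id=' "$common" || true
  } > "$out"
}

# Example, one call per host (not executed here):
#   host A: render_broker_config 0 common.properties /opt/kafka/config/server.properties
#   host B: render_broker_config 1 common.properties /opt/kafka/config/server.properties
#   host C: render_broker_config 2 common.properties /opt/kafka/config/server.properties
```

Each `broker.id` must be unique within the cluster, so the ID is the one argument that has to change per host.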

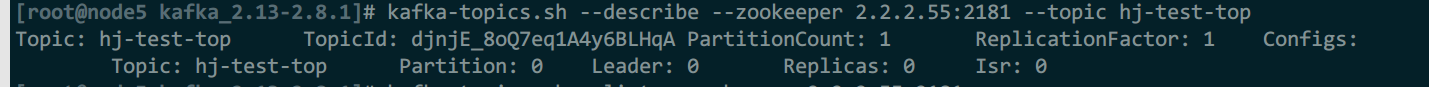

5) Test

# Create a topic through a Kafka broker; its metadata is written to ZooKeeper right away

kafka-topics.sh --create --topic hj-test-top --bootstrap-server 2.2.2.12:9092

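# Create a topic through ZooKeeper, with 3 partitions and 3 replicas stored across the Kafka brokers (the --zookeeper option is deprecated and was removed from kafka-topics.sh in Kafka 3.0)

kafka-topics.sh --create --zookeeper 2.2.2.12:2181,2.2.2.22:2181,2.2.2.32:2181 --partitions 3 --replication-factor 3 --topic hj-test2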

# Verify the topic information stored in ZooKeeper

kafka-topics.sh --describe --zookeeper 2.2.2.12:2181 --topic hj-test-top

# List all topics

kafka-topics.sh --list --zookeeper 2.2.2.12:2181

# Test sending messages

kafka-console-producer.sh --broker-list 2.2.2.12:9092 --topic hj-test2

# Delete the topics

kafka-topics.sh --delete --zookeeper 2.2.2.12:2181 --topic hj-test2

kafka-topics.sh --delete --zookeeper 2.2.2.12:2181 --topic hj-test-top
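
The producer test above only sends messages. A round-trip check, run before the topics are deleted, would also read the message back. The sketch below is one way to do it. `kafka_roundtrip` and the `KAFKA_BIN` variable are hypothetical, and `/opt/kafka/bin` is assumed from the installation path used earlier.

```bash
#!/usr/bin/env bash
# Assumed location of the Kafka scripts; override KAFKA_BIN if yours differ.
KAFKA_BIN="${KAFKA_BIN:-/opt/kafka/bin}"

# Hypothetical helper: send one unique message and check it can be consumed.
# Usage: kafka_roundtrip BOOTSTRAP_SERVER TOPIC
# Returns 0 if the message was read back, non-zero otherwise.
kafka_roundtrip() {
  local bootstrap="$1" topic="$2"
  local msg="roundtrip-$$-$(date +%s)"
  # Produce a single line read from stdin.
  printf '%s\n' "$msg" \
    | "$KAFKA_BIN/kafka-console-producer.sh" \
        --bootstrap-server "$bootstrap" --topic "$topic" >/dev/null || return 1
  # Read the topic from the beginning; stop after 10 s without new messages.
  "$KAFKA_BIN/kafka-console-consumer.sh" \
      --bootstrap-server "$bootstrap" --topic "$topic" \
      --from-beginning --timeout-ms 10000 2>/dev/null \
    | grep -qxF "$msg"
}

# Example (not executed here):
#   kafka_roundtrip 2.2.2.12:9092 hj-test2 && echo "round trip OK"
```

Reading from the beginning keeps the check independent of consumer group offsets, at the cost of scanning every message already in the topic.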

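Explanation of the fields in the --describe output:

Topic: the topic name

Partition: the partition number

Leader: the broker.id of the broker that currently leads the partition

Replicas: the broker.id values of the brokers that hold a replica of the partition, in order; the first one is the preferred leader

Isr: the in-sync replicas, that is, the replicas currently eligible to be elected leader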
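
Comparing the Replicas and Isr columns shows whether a partition is fully replicated: if Isr lists fewer brokers than Replicas, some replica has fallen behind or its broker is down. The sketch below applies that comparison to `--describe` output piped in on stdin. `under_replicated` is a hypothetical helper, and the line layout it parses (`Topic: ... Partition: ... Leader: ... Replicas: ... Isr: ...`) is assumed from the Kafka 2.8 output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kafka-topics.sh --describe" output on stdin and
# print every partition whose Isr list is shorter than its Replicas list.
# Returns 1 if at least one such partition was found, 0 otherwise.
under_replicated() {
  awk '
    {
      topic = ""; part = ""; replicas = ""; isr = ""; has_rep = 0
      for (i = 1; i <= NF; i++) {
        # The value is the next token, unless that token is itself a label.
        val = (i < NF && $(i + 1) !~ /:$/) ? $(i + 1) : ""
        if ($i == "Topic:")     topic = val
        if ($i == "Partition:") part = val
        if ($i == "Replicas:")  { replicas = val; has_rep = 1 }
        if ($i == "Isr:")       isr = val
      }
      if (!has_rep) next            # summary line, not a partition line
      n_rep = split(replicas, r, ",")
      n_isr = split(isr, s, ",")
      if (n_isr < n_rep) {
        print topic, part, "isr=" isr, "replicas=" replicas
        found = 1
      }
    }
    END { exit found ? 1 : 0 }
  '
}

# Example (not executed here):
#   kafka-topics.sh --describe --bootstrap-server 2.2.2.12:9092 | under_replicated
```

`kafka-topics.sh --describe` also accepts an `--under-replicated-partitions` option that filters on the broker side; the helper is mainly meant to make the relationship between the two columns concrete.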
