Ansible exercise: building a software RAID 5 array

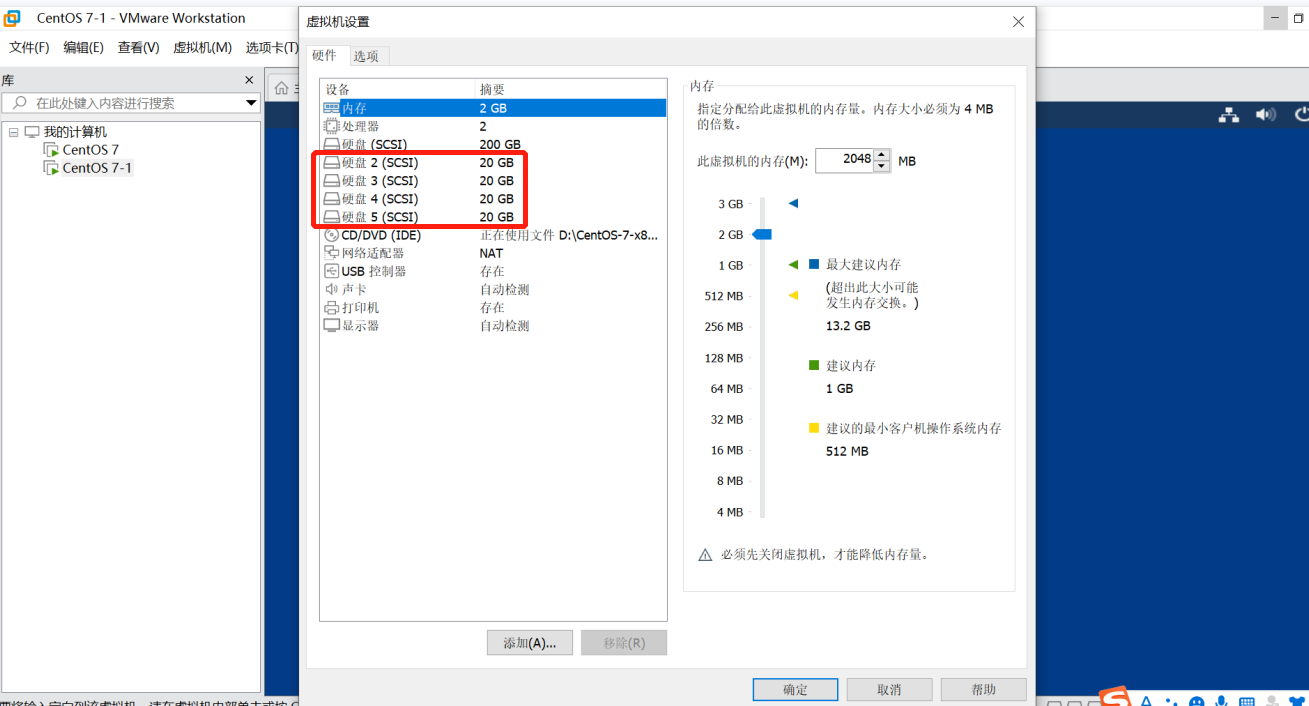

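Step 1: Prepare the environment. RAID 5 needs at least three disks, so add four disks to the virtual machine: three as active members and one as a hot spare.

Step 2: Create the RAID array directly on the four disks, with one of them acting as the hot spare.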

[root@node02 ~]# mdadm -C /dev/md5 -a yes -l 5 -n 3 -x 1 /dev/sd[b,c,d,e]

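Notes: -C: create mode;

-a {yes|no}: automatically create the corresponding device node (here /dev/md5);

-l: the RAID level to create;

-n: the number (#) of active block devices used to build the array;

-x: the number (#) of hot spare disks in the array.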
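The short options above map onto mdadm's long options. The following is a minimal sketch that only prints the long-option form of the create command instead of running it; `build_create_cmd` is a hypothetical helper introduced here for illustration, and the member devices are written out explicitly rather than globbed.

```shell
#!/bin/sh
# Sketch: print (do not run) the long-option form of the create command.
# build_create_cmd is a hypothetical helper, not part of mdadm.
build_create_cmd() {
    md_dev=$1 level=$2 active=$3 spares=$4
    shift 4
    # The remaining arguments are the member devices.
    echo "mdadm --create $md_dev --auto=yes --level=$level" \
         "--raid-devices=$active --spare-devices=$spares $*"
}

build_create_cmd /dev/md5 5 3 1 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

Printing the command first makes it easy to review which disks will be used before anything is written to them.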

Check the RAID 5 status:

[root@node02 ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd[4] sde[3](S) sdc[1] sdb[0]

41910272 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>
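In the output above, `[3/3] [UUU]` means that all three active members are up; a `_` in that field would mark a missing or failed member. As a rough sketch, a script could check this field before continuing. The helper name `md_all_up` is an assumption made for this example, and the second argument exists only so the function can be tried against a saved copy of /proc/mdstat.

```shell
#!/bin/sh
# Sketch: succeed only if every member of the named md array is up.
# md_all_up is a hypothetical helper; $1 = array name, $2 = mdstat file.
md_all_up() {
    awk -v md="$1" '
        $1 == md { found = 1; next }                  # header line of the array
        found && /\[[U_]+\]/ { status = $NF; exit }   # status field, e.g. [UUU]
        END { exit ((status ~ /^\[U+\]$/) ? 0 : 1) }
    ' "${2:-/proc/mdstat}"
}

# Example call on the live system (commented out because it needs the array):
# md_all_up md5 && echo "md5 is healthy"
```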

[root@node02 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Tue Aug 17 16:44:14 2021

Raid Level : raid5

Array Size : 41910272 (39.97 GiB 42.92 GB)

Used Dev Size : 20955136 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Aug 17 16:46:00 2021

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : node02:5 (local to host node02)

UUID : c42a9c5f:415080f9:e2c7cc23:ab17f6e1

Events : 28

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

4 8 48 2 active sync /dev/sdd

3 8 64 - spare /dev/sde
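The sizes reported above follow from the RAID 5 layout: one member's worth of space holds parity, so the usable capacity is (active members - 1) × per-device size, and the hot spare adds nothing. A small sketch of that arithmetic, using the values from the output above (`raid5_capacity_kib` is a hypothetical helper name):

```shell
#!/bin/sh
# Sketch: usable RAID 5 capacity = (active members - 1) * per-device size.
# $1 = number of active members, $2 = Used Dev Size in KiB.
raid5_capacity_kib() {
    echo $(( ($1 - 1) * $2 ))
}

raid5_capacity_kib 3 20955136   # prints 41910272, the Array Size shown above
```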

Step 3: Record the RAID 5 array in the RAID configuration file /etc/mdadm.conf.

[root@node02 ~]# echo DEVICE /dev/sd[b,c,d,e] >> /etc/mdadm.conf

[root@node02 ~]# mdadm -Ds >> /etc/mdadm.conf

[root@node02 ~]# cat /etc/mdadm.conf

DEVICE /dev/sdb /dev/sdc /dev/sdd /dev/sde

ARRAY /dev/md5 metadata=1.2 spares=1 name=node02:5 UUID=8881bf3f:f72936c3:49de2bfd:7b807327
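After writing the file it can be worth confirming that the recorded UUID matches the one reported by `mdadm -D`. The listings in this post show different UUIDs in the two places, presumably because they were captured from different runs. A minimal sketch for pulling the UUID out of an ARRAY line follows; `conf_uuid` is a hypothetical helper, and the file argument is there so it can be tried on a copy of the configuration.

```shell
#!/bin/sh
# Sketch: print the UUID recorded for an md device in an mdadm.conf-style file.
# conf_uuid is a hypothetical helper; $1 = md device, $2 = config file.
conf_uuid() {
    awk -v dev="$1" '
        $1 == "ARRAY" && $2 == dev {
            for (i = 3; i <= NF; i++)
                if ($i ~ /^UUID=/) { sub(/^UUID=/, "", $i); print $i }
        }
    ' "${2:-/etc/mdadm.conf}"
}

# Possible comparison on the live system (commented out, needs the array):
# [ "$(conf_uuid /dev/md5)" = "$(mdadm -D /dev/md5 | awk '$1 == "UUID" {print $3}')" ]
```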

Step 4: Create a file system on the array.

[root@node02 ~]# mkfs.ext4 /dev/md5

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=256 blocks

2621440 inodes, 10477568 blocks

523878 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2157969408

320 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done
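The `Stride=128 blocks, Stripe width=256 blocks` line in the output above can be derived from the array geometry: stride is the chunk size divided by the file system block size, and stripe width is the stride multiplied by the number of data disks (active members minus one for RAID 5). Here mke2fs detected the values on its own; the sketch below only reproduces the arithmetic, and `ext4_raid_geometry` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: derive ext4 stride and stripe width from the RAID geometry.
# $1 = chunk size in KiB, $2 = block size in bytes, $3 = number of data disks.
ext4_raid_geometry() {
    chunk_kib=$1 block_bytes=$2 data_disks=$3
    stride=$(( chunk_kib * 1024 / block_bytes ))
    stripe_width=$(( stride * data_disks ))
    echo "stride=$stride stripe_width=$stripe_width"
}

# 512K chunk, 4096-byte blocks, 3 active members - 1 parity = 2 data disks
ext4_raid_geometry 512 4096 2   # prints stride=128 stripe_width=256
```

If the values ever need to be set by hand, mke2fs accepts them as extended options, for example `-E stride=128,stripe_width=256`.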

Step 5: Create the mount point and mount the array.

[root@node02 ~]# mkdir /raid5

[root@node02 ~]# mount /dev/md5 /raid5/

[root@node02 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda2 xfs 100G 4.5G 96G 5% /

devtmpfs devtmpfs 898M 0 898M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 9.1M 903M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda6 xfs 50G 33M 50G 1% /data

/dev/sda5 xfs 2.0G 37M 2.0G 2% /home

/dev/sda1 ext4 976M 145M 765M 16% /boot

tmpfs tmpfs 183M 28K 183M 1% /run/user/0

/dev/sr0 iso9660 8.1G 8.1G 0 100% /run/media/root/CentOS 7 x86_64

/dev/md5 ext4 40G 49M 38G 1% /raid5

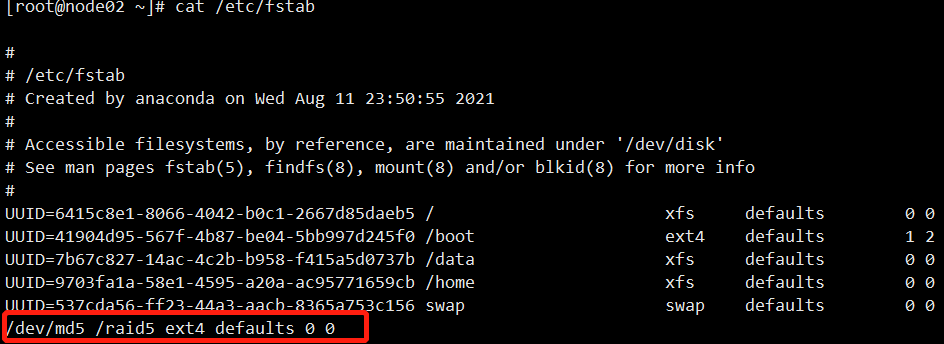

Step 6: Enable automatic mounting at boot.

[root@node02 ~]# echo "/dev/md5 /raid5 ext4 defaults 0 0" >> /etc/fstab
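Because `>>` appends unconditionally, running the command above twice leaves two identical entries in /etc/fstab. One way around that, sketched here with a hypothetical helper `append_once`, is to append the line only when an identical line is not already present. The demonstration runs against a temporary file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Sketch: append a line to a file only if that exact line is not there yet.
# append_once is a hypothetical helper; $1 = line, $2 = file.
append_once() {
    line=$1 file=$2
    # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
    grep -qxF -e "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a scratch file: the second call changes nothing.
fstab_copy=$(mktemp)
append_once "/dev/md5 /raid5 ext4 defaults 0 0" "$fstab_copy"
append_once "/dev/md5 /raid5 ext4 defaults 0 0" "$fstab_copy"
cat "$fstab_copy"
rm -f "$fstab_copy"
```

After editing the real file, `mount -a` is a quick way to catch a malformed entry before rebooting.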

Reboot, then check that the array is mounted automatically at boot and that RAID 5 works correctly.

Roles:

mkdir -p mdadm_raid5/{tasks,files,templates}

tasks/main.yml:

- name: create raid5
  shell: mdadm -C /dev/md5 -a yes -l 5 -n 3 -x 1 /dev/sd[b,c,d,e]

- name: set raid5
  template: src=mdadm.sh dest=~/mdadm.sh

- name: add raid5
  shell: sh mdadm.sh

- name: format md5
  shell: mkfs.ext4 /dev/md5

- name: mount md5
  mount:
    path: /raid5/
    src: /dev/md5
    fstype: ext4
    state: mounted

- name: set add_fstab
  template: src=add_fstab.sh dest=~/add_fstab.sh

- name: add_fstab
  shell: sh add_fstab.sh
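The shell tasks above run every time the playbook runs, so a second run would try to create and format the array again. Ansible's shell and command modules accept a `creates` argument for this situation; the same idea can be sketched in plain shell as a guard that skips a command when a marker path already exists. `run_unless_exists` is a hypothetical helper used only for illustration.

```shell
#!/bin/sh
# Sketch: run a command only if a marker path does not exist yet.
# run_unless_exists is a hypothetical helper; $1 = marker path, rest = command.
run_unless_exists() {
    marker=$1
    shift
    if [ -e "$marker" ]; then
        echo "skip: $marker already exists"
        return 0
    fi
    "$@"
}

# Intended use on the RAID host (commented out because it needs root and disks):
# run_unless_exists /dev/md5 mdadm -C /dev/md5 -a yes -l 5 -n 3 -x 1 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

The guard only tests that the path exists, not that the array is healthy, so it is a coarse check.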

Write templates/mdadm.sh and templates/add_fstab.sh:

vim templates/mdadm.sh

#!/bin/bash

echo DEVICE /dev/sd[b,c,d,e] >> /etc/mdadm.conf

mdadm -Ds >> /etc/mdadm.conf

vim templates/add_fstab.sh

#!/bin/bash

echo "/dev/md5 /raid5 ext4 defaults 0 0" >> /etc/fstab

Write mdadm_raid5.yml:

vi mdadm_raid5.yml

- hosts: websrvs
  remote_user: root
  roles:
    - mdadm_raid5

Run:

ansible-playbook mdadm_raid5.yml

Appendix: how to remove the RAID 5 array correctly:

[root@node02 ~]# umount /dev/md5 # unmount the array first

[root@node02 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 104806400 4701492 100104908 5% /

devtmpfs 918572 0 918572 0% /dev

tmpfs 933524 0 933524 0% /dev/shm

tmpfs 933524 9292 924232 1% /run

tmpfs 933524 0 933524 0% /sys/fs/cgroup

/dev/sda6 52403200 32944 52370256 1% /data

/dev/sda5 2086912 36948 2049964 2% /home

/dev/sda1 999320 148160 782348 16% /boot

tmpfs 186708 28 186680 1% /run/user/0

/dev/sr0 8490330 8490330 0 100% /run/media/root/CentOS 7 x86_64

[root@node02 ~]# mdadm -S /dev/md5 # stop the RAID array

mdadm: stopped /dev/md5

[root@node02 ~]# mdadm --misc --zero-superblock /dev/sd[bcde] # wipe the RAID superblock from each member disk

[root@node02 ~]# rm -f /etc/mdadm.conf # delete the configuration file

The automatic mount entry in /etc/fstab must be removed as well.
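One way to drop the fstab entry without opening an editor is sketched below. `remove_mount_entry` is a hypothetical helper; it removes every line whose first field is the given device and leaves comments and blank lines alone. It is safest to try it on a copy of the file first.

```shell
#!/bin/sh
# Sketch: remove all fstab-style entries whose first field is the given device.
# remove_mount_entry is a hypothetical helper; $1 = device, $2 = file.
remove_mount_entry() {
    dev=$1 file=$2
    tmp=$(mktemp) || return 1
    # Keep every line whose first field differs from the device.
    if awk -v dev="$dev" '$1 != dev' "$file" > "$tmp"; then
        cat "$tmp" > "$file"   # rewrite in place, keeping owner and mode
        status=$?
    else
        status=1
    fi
    rm -f "$tmp"
    return "$status"
}

# Intended use on the RAID host (commented out because it edits /etc/fstab):
# cp /etc/fstab /etc/fstab.bak && remove_mount_entry /dev/md5 /etc/fstab
```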

