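Java Microservices: Quick Environment Setup with Docker Compose (Mac)

Background

This is a collection of docker-compose.yml files for most of the common services and middleware: Kafka, RabbitMQ, RocketMQ, Nacos, Redis, Elasticsearch (all single-node), MySQL (master/replica), Nginx, MinIO and Maxwell. To make it easy to prepare the environment before starting the project, I keep them in the project's root directory. I am also recording them here for learning and reference.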

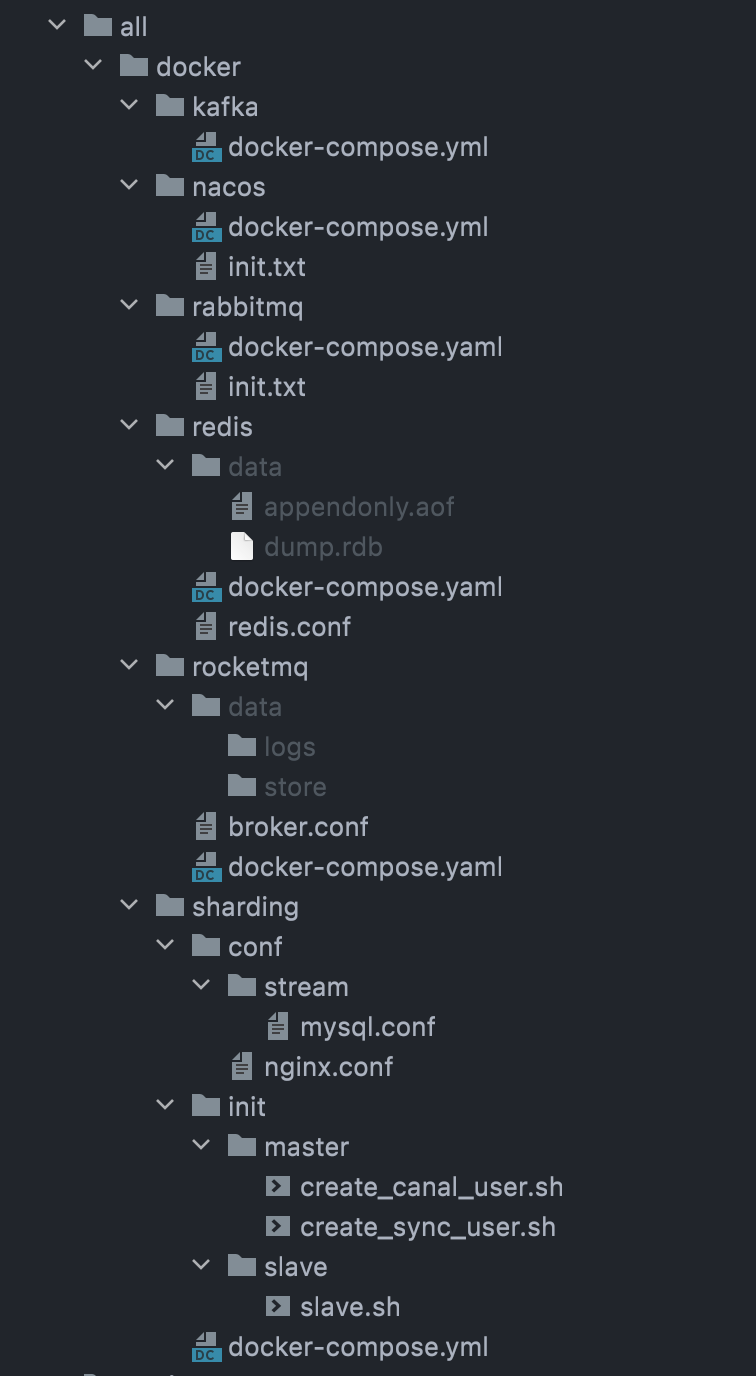

Project structure

Code snippets

1.kafka

docker-compose.yaml

```yaml
version: '3'

services:
  zookeeper:
    image: wurstmeister/zookeeper # source image `wurstmeister/zookeeper`
    container_name: zookeeper # container named 'zookeeper'
    volumes: # volume mounts: map host paths into the container
      - "/etc/localtime:/etc/localtime"
    ports: # port mapping
      - "2181:2181"

  kafka:
    image: wurstmeister/kafka # source image `wurstmeister/kafka`
    container_name: kafka # container named 'kafka'
    volumes: # volume mounts: map host paths into the container
      - "/etc/localtime:/etc/localtime"
    environment: # environment variables, equivalent to -e in `docker run`
      KAFKA_BROKER_ID: 0 # every broker in a Kafka cluster has its own unique BROKER_ID
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://ip:9092 # TODO: replace `ip` with the host machine's IP address
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092 # address and port Kafka listens on
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_CREATE_TOPICS: "hello_world"
      KAFKA_HEAP_OPTS: -Xmx1G -Xms256M
    ports: # port mapping
      - "9092:9092"
    depends_on: # start order only; does not wait until the dependency is ready
      - zookeeper

  kafka-manager:
    image: sheepkiller/kafka-manager # source image `sheepkiller/kafka-manager`
    container_name: kafka-manager # container named 'kafka-manager'
    environment: # environment variables, equivalent to -e in `docker run`
      ZK_HOSTS: zookeeper:2181
      APPLICATION_SECRET: xxxxx
      KAFKA_MANAGER_AUTH_ENABLED: "true" # enable kafka-manager login
      KAFKA_MANAGER_USERNAME: admin # login username
      KAFKA_MANAGER_PASSWORD: 123456 # login password
    ports: # port mapping
      - "9000:9000"
    depends_on: # start order only; does not wait until the dependency is ready
      - kafka
```
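`depends_on` only controls the order in which containers are started. It does not wait until ZooKeeper or Kafka actually accepts connections, so an application started right after `docker compose up -d` can still fail to connect. Below is a minimal host-side readiness check. It assumes bash, because it relies on bash's `/dev/tcp/HOST/PORT` redirection. `wait_for_port` is a hypothetical helper name and is not part of Docker Compose:

```bash
#!/usr/bin/env bash
# wait_for_port HOST PORT [MAX_TRIES]
# Tries to open a TCP connection up to MAX_TRIES times (default 30), one
# second apart. Returns 0 as soon as a connection succeeds, 1 otherwise.
wait_for_port() {
  local host=$1 port=$2 max_tries=${3:-30} i
  for ((i = 1; i <= max_tries; i++)); do
    # bash-only: redirecting to /dev/tcp/HOST/PORT opens a TCP connection;
    # the subshell closes it again immediately, and errors are discarded.
    if (: >"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    if ((i < max_tries)); then sleep 1; fi
  done
  return 1
}
```

For example: `wait_for_port localhost 9092 60 && echo "Kafka port is open"`. An open port only shows that the broker is listening. It does not show that topics such as `hello_world` have been created yet. A connection attempt to an unreachable host (as opposed to one that refuses the connection) can block until the TCP connect timeout, so the total wait can exceed MAX_TRIES seconds.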

2.nacos

docker-compose.yaml

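```yaml
version: "3"

services:
  nacos-server-m1: # service name
    image: 'zhusaidong/nacos-server-m1:2.0.3'
    environment:
      - MODE=standalone # standalone mode
    container_name: nacos
    ports:
      - '8848:8848' # HTTP API and web console
      - '9848:9848' # gRPC port for Nacos 2.x clients (8848 + 1000)
      - '9849:9849' # gRPC port for server-to-server requests (8848 + 1001)
    restart: on-failure
```

init.txt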

```text
# nacos
docker pull zhusaidong/nacos-server-m1:2.0.3
docker run --env MODE=standalone --name nacos -d -p 8848:8848 -p 9848:9848 -p 9849:9849 zhusaidong/nacos-server-m1:2.0.3

# Nacos default username / password: nacos / nacos
http://127.0.0.1:8848/nacos/index.html

# Convert a docker command line into docker-compose format
https://www.composerize.com/

# docker-compose
https://docs.docker.com/compose/

# Show the full (untruncated) start command of every container
docker ps -a --no-trunc
```
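Besides the console, Nacos exposes an HTTP Open API. The Nacos quick start uses `/nacos/v1/cs/configs` to publish and read a configuration item. Below is a sketch of those two calls against the standalone server above. It assumes authentication is left disabled; if it is enabled, an access token is also required. `NACOS_BASE` and the function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the Nacos v1 Open API (config endpoints only).
NACOS_BASE=${NACOS_BASE:-http://127.0.0.1:8848}

# nacos_config_url DATA_ID GROUP
# dataId and group are inserted without URL-encoding, so keep them URL-safe.
nacos_config_url() {
  printf '%s/nacos/v1/cs/configs?dataId=%s&group=%s\n' "$NACOS_BASE" "$1" "$2"
}

# nacos_publish DATA_ID GROUP CONTENT: create or overwrite a config item.
# CONTENT is sent URL-encoded in the form body.
nacos_publish() {
  curl -s -X POST "$(nacos_config_url "$1" "$2")" --data-urlencode "content=$3"
}

# nacos_get DATA_ID GROUP: print the stored content of a config item.
nacos_get() {
  curl -s "$(nacos_config_url "$1" "$2")"
}
```

For example, `nacos_publish demo.yaml DEFAULT_GROUP 'greeting: hello'` should print `true`. After that, `nacos_get demo.yaml DEFAULT_GROUP` prints the stored content, which is also visible in the console under Configurations.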

3.rabbitmq

docker-compose.yaml

```yaml
version: '3'

services:
  rabbitmq:
    image: rabbitmq:management # image: rabbitmq:latest has no web management UI
    container_name: rabbitmq
    restart: always
    hostname: rabbitmq_host
    ports:
      - "15672:15672" # web management UI
      - "5672:5672" # AMQP port used by producers and consumers
```

init.txt

```text
# Web UI default username / password: guest / guest
http://localhost:15672
```

The `management` tag differs from the plain tag in that it ships with the web management UI.

```bash
# Images without the management tag have the web UI disabled by default; enable it:
docker exec -it rabbitmq /bin/bash -c "/opt/rabbitmq/sbin/rabbitmq-plugins enable rabbitmq_management"

# If the UI reports "Stats in management UI are disabled on this node", re-enable metrics collection:
docker exec -it rabbitmq /bin/bash -c "cd /etc/rabbitmq/conf.d/ && echo management_agent.disable_metrics_collector = false > management_agent.disable_metrics_collector.conf"

docker restart rabbitmq
```
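The two `docker exec -it` commands above need an interactive terminal, because `-t` allocates a TTY. As a result, they fail when run from a script or a CI job. Below is a sketch that wraps the same steps for non-interactive use. It assumes the container name and paths used above, and `enable_rabbitmq_ui` is a hypothetical name:

```bash
#!/usr/bin/env bash
# enable_rabbitmq_ui [CONTAINER]
# Same steps as the commands above, without -it, so no terminal is required:
#   1. enable the management plugin,
#   2. turn management metrics collection back on,
#   3. restart the container so the setting takes effect.
# Stops at the first failing step and returns that step's exit status.
enable_rabbitmq_ui() {
  local container=${1:-rabbitmq}
  docker exec "$container" /opt/rabbitmq/sbin/rabbitmq-plugins enable rabbitmq_management || return
  docker exec "$container" /bin/bash -c \
    'echo "management_agent.disable_metrics_collector = false" > /etc/rabbitmq/conf.d/management_agent.disable_metrics_collector.conf' \
    || return
  docker restart "$container"
}
```

Usage: `enable_rabbitmq_ui` (or `enable_rabbitmq_ui my-rabbit` for a differently named container), then open http://localhost:15672.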

4.redis

docker-compose.yaml

```yaml
version: '3'

services:
  redis:
    image: redis:latest
    container_name: redis
    restart: always
    ports:
      - "6379:6379"
    volumes:
      - ./redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
```

redis.conf

```conf
protected-mode no
port 6379
timeout 0
save 900 1
save 300 10
save 60 10000
rdbcompression yes
dbfilename dump.rdb
dir /data
appendonly yes
appendfsync everysec
```
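Each `save <seconds> <changes>` line above is an independent RDB snapshot rule. Redis snapshots when at least `<seconds>` have passed since the last save and at least `<changes>` writes have happened, and any single rule is enough. With this file, for example, one write is snapshotted after 900 seconds. Once 60 seconds have passed since the last save, 10,000 writes are enough to trigger a snapshot. The helper below only evaluates those rules for given numbers, to make the semantics concrete. `rdb_save_due` is a hypothetical name and does not talk to Redis:

```bash
#!/usr/bin/env bash
# rdb_save_due ELAPSED_SECONDS CHANGES < redis.conf
# Reads redis.conf-style text on stdin and prints "yes" if any
# `save <seconds> <changes>` rule is satisfied (at least <seconds> since the
# last snapshot AND at least <changes> writes), otherwise "no".
# All other config lines are ignored.
rdb_save_due() {
  local elapsed=$1 changes=$2 key secs min_changes rest
  # `|| [ -n "$key" ]` also processes a last line without a trailing newline
  while read -r key secs min_changes rest || [ -n "$key" ]; do
    [ "$key" = "save" ] || continue
    # skip `save ""` (snapshots disabled) and malformed rules
    [[ $secs =~ ^[0-9]+$ && $min_changes =~ ^[0-9]+$ ]] || continue
    if (( elapsed >= secs && changes >= min_changes )); then
      echo yes
      return 0
    fi
  done
  echo no
}
```

For example, `rdb_save_due 100 5 < redis.conf` prints `no`, because no rule has both thresholds met yet. `rdb_save_due 301 10 < redis.conf` prints `yes` through the `save 300 10` rule.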

5.rocketmq

docker-compose.yaml

```yaml
version: '3.5'

services:
  rmqnamesrv:
    image: foxiswho/rocketmq:server
    container_name: rmqnamesrv
    ports:
      - "9876:9876"
    volumes:
      - ./data/logs:/opt/logs
      - ./data/store:/opt/store
    networks:
      rmq:
        aliases:
          - rmqnamesrv

  rmqbroker:
    image: foxiswho/rocketmq:broker
    container_name: rmqbroker
    ports:
      - "10909:10909" # VIP channel port (listenPort - 2)
      - "10911:10911" # listenPort from broker.conf
    volumes:
      - ./data/logs:/opt/logs
      - ./data/store:/opt/store
      - ./broker.conf:/etc/rocketmq/broker.conf
    environment:
      NAMESRV_ADDR: "rmqnamesrv:9876"
      JAVA_OPTS: " -Duser.home=/opt"
      JAVA_OPT_EXT: "-server -Xms128m -Xmx128m -Xmn128m"
    command: mqbroker -c /etc/rocketmq/broker.conf
    depends_on:
      - rmqnamesrv
    networks:
      rmq:
        aliases:
          - rmqbroker

  rmqconsole:
    image: styletang/rocketmq-console-ng # localhost:8080
    volumes:
      - /etc/localtime:/etc/localtime
    container_name: rmqconsole
    ports:
      - "8080:8080"
    environment:
      JAVA_OPTS: "-Drocketmq.namesrv.addr=rmqnamesrv:9876 -Dcom.rocketmq.sendMessageWithVIPChannel=false -Duser.timezone='Asia/Shanghai'"
    depends_on:
      - rmqnamesrv
    networks:
      rmq:
        aliases:
          - rmqconsole

networks:
  rmq:
    name: rmq
    driver: bridge
```

broker.conf

```properties
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Name of the cluster this broker belongs to
brokerClusterName=DefaultCluster
# Broker name; it differs between config files: use broker-a in broker-a.properties
# and broker-b in broker-b.properties
brokerName=broker-a
# 0 means Master, > 0 means Slave
brokerId=0
# NameServer addresses, separated by semicolons
# namesrvAddr=rocketmq-nameserver1:9876;rocketmq-nameserver2:9876
# IP the broker advertises. If the client fails with
# com.alibaba.rocketmq.remoting.exception.RemotingConnectException: connect to <192.168.0.120:10909> failed
# fix 1: call producer.setVipChannelEnabled(false); on the client
# fix 2: set brokerIP1 to the host machine's IP, not the Docker-internal IP
brokerIP1=192.168.8.101
# Number of queues for a topic that is auto-created when a message is sent to a topic that does not exist
defaultTopicQueueNums=4
# Whether the broker may auto-create topics: enable in dev/test, disable in production. !!! In production this must be false
autoCreateTopicEnable=true
# Whether the broker may auto-create subscription groups: enable in dev/test, disable in production
autoCreateSubscriptionGroup=true
# Port on which the broker serves clients
listenPort=10911
# Time of day at which expired files are deleted; default 04 (4 a.m.)
deleteWhen=04
# File retention time in hours; default 48
fileReservedTime=120
# Size of each commitLog file; default 1 GB
mapedFileSizeCommitLog=1073741824
# ConsumeQueue file size, by default 300,000 entries per file; adjust to your workload
# (the value is in bytes and each entry takes 20 bytes, so 300000 here means 15,000 entries)
mapedFileSizeConsumeQueue=300000
# destroyMapedFileIntervalForcibly=120000
# redeleteHangedFileInterval=120000
# Disk usage limit (percent) checked for the store's physical files
diskMaxUsedSpaceRatio=88
# Store root path
# storePathRootDir=/home/ztztdata/rocketmq-all-4.1.0-incubating/store
# commitLog store path
# storePathCommitLog=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/commitlog
# ConsumeQueue store path
# storePathConsumeQueue=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/consumequeue
# Message index store path
# storePathIndex=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/index
# checkpoint file path
# storeCheckpoint=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/checkpoint
# abort file path
# abortFile=/home/ztztdata/rocketmq-all-4.1.0-incubating/store/abort
# Maximum message size (bytes)
maxMessageSize=65536
# flushCommitLogLeastPages=4
# flushConsumeQueueLeastPages=2
# flushCommitLogThoroughInterval=10000
# flushConsumeQueueThoroughInterval=60000
# Broker role
# - ASYNC_MASTER  master with asynchronous replication
# - SYNC_MASTER   master with synchronous double-write
# - SLAVE
brokerRole=ASYNC_MASTER
# Disk flush mode
# - ASYNC_FLUSH  asynchronous flush
# - SYNC_FLUSH   synchronous flush
flushDiskType=ASYNC_FLUSH
# Size of the send-message thread pool
# sendMessageThreadPoolNums=128
# Size of the pull-message thread pool
# pullMessageThreadPoolNums=128
```
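`brokerIP1` must be the host's IP rather than a Docker-internal one, so it is worth checking what the mounted broker.conf actually contains before starting the broker. Below is a minimal reader for the `key=value` lines of this file. `prop_get` is a hypothetical helper. It deliberately ignores the rarer `.properties` syntax: `:` separators, escapes and line continuations.

```bash
#!/usr/bin/env bash
# prop_get KEY < broker.conf
# Prints the value of KEY from simple key=value lines (surrounding blanks trimmed).
# Lines starting with '#' are comments; if KEY occurs more than once, the last
# occurrence wins. Prints nothing when KEY is absent.
prop_get() {
  awk -v key="$1" '
    /^[ \t]*#/ { next }
    {
      eq = index($0, "=")
      if (eq == 0) next
      k = substr($0, 1, eq - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      if (k != key) next
      v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      found = v
      have = 1
    }
    END { if (have) print found }
  '
}
```

With the file above, `prop_get brokerIP1 < broker.conf` should print `192.168.8.101`. `echo $(( $(prop_get mapedFileSizeCommitLog < broker.conf) / 1024 / 1024 ))` prints `1024`, so each commitLog file is 1024 MiB, i.e. 1 GiB (1073741824 = 1024³ bytes).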

6.sharding

docker-compose.yml

```yaml
version: '3'

services:
  mysql-slave-lb:
    image: nginx:latest
    container_name: mysql-slave-lb
    ports:
      - "3305:3305"
    volumes:
      - ./conf/stream:/opt/nginx/stream/conf.d
      - ./conf/nginx.conf:/etc/nginx/nginx.conf
    networks:
      - mysql
    depends_on:
      - mysql-master
      - mysql-slave1
      - mysql-slave2

  mysql-master:
    image: mysql/mysql-server
    container_name: mysql-master
    ports:
      - "3307:3306"
    environment:
      MYSQL_ROOT_PASSWORD: "123456"
      MASTER_SYNC_USER: "sync_admin" # replication account defined in the init script
      MASTER_SYNC_PASSWORD: "123456" # replication password defined in the init script
      ADMIN_USER: "root" # account in this container that is allowed to create other accounts
      ADMIN_PASSWORD: "123456"
      ALLOW_HOST: "10.10.%.%" # hosts the replication account may connect from
      TZ: "Asia/Shanghai" # fix the time zone
    networks:
      mysql:
        ipv4_address: 10.10.10.10 # fixed IP, because the replicas need the master's host to connect to it
    volumes:
      - ./init/master:/docker-entrypoint-initdb.d # mount the master init scripts
    command:
      - "--default-authentication-plugin=mysql_native_password"
      - "--server-id=1"
      - "--binlog-ignore-db=mysql"
      - "--character-set-server=utf8mb4"
      - "--collation-server=utf8mb4_unicode_ci"
      - "--log-bin=mysql-bin"
      - "--sync_binlog=1"

  mysql-slave1:
    image: mysql/mysql-server
    container_name: mysql-slave1
    environment:
      MYSQL_ROOT_PASSWORD: "123456"
      SLAVE_SYNC_USER: "sync_admin" # replication account, created on the master
      SLAVE_SYNC_PASSWORD: "123456"
      ADMIN_USER: "root"
      ADMIN_PASSWORD: "123456"
      MASTER_HOST: "10.10.10.10" # master address; replication connects to the master
      TZ: "Asia/Shanghai" # set the time zone
    networks:
      mysql:
        ipv4_address: 10.10.10.20 # fixed IP
    volumes:
      - ./init/slave:/docker-entrypoint-initdb.d # mount the replica init script
    command:
      - "--default-authentication-plugin=mysql_native_password"
      - "--server-id=2"
      - "--replicate-ignore-db=mysql"
      - "--character-set-server=utf8mb4"
      - "--collation-server=utf8mb4_unicode_ci"

  mysql-slave2:
    image: mysql/mysql-server
    container_name: mysql-slave2
    environment:
      MYSQL_ROOT_PASSWORD: "123456"
      SLAVE_SYNC_USER: "sync_admin"
      SLAVE_SYNC_PASSWORD: "123456"
      ADMIN_USER: "root"
      ADMIN_PASSWORD: "123456"
      MASTER_HOST: "10.10.10.10"
      TZ: "Asia/Shanghai"
    networks:
      mysql:
        ipv4_address: 10.10.10.30 # fixed IP
    volumes:
      - ./init/slave:/docker-entrypoint-initdb.d
    command: # change server-id here: every MySQL container needs a different server-id
      - "--default-authentication-plugin=mysql_native_password"
      - "--replicate-ignore-db=mysql"
      - "--server-id=3"
      - "--character-set-server=utf8mb4"
      - "--collation-server=utf8mb4_unicode_ci"

networks:
  mysql:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 10.10.0.0/16
```
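Replication requires every MySQL server in the topology to have a distinct `--server-id` (1, 2 and 3 above). Below is a quick check of a compose file for accidental duplicates, for example after copying a replica service. `duplicate_server_ids` is a hypothetical helper that only looks at `--server-id=N` strings:

```bash
#!/usr/bin/env bash
# duplicate_server_ids < docker-compose.yml
# Prints every server-id that appears more than once (one per line);
# prints nothing when all ids are unique.
duplicate_server_ids() {
  grep -oE -e '--server-id=[0-9]+' | cut -d= -f2 | sort | uniq -d
}
```

Usage: `duplicate_server_ids < docker-compose.yml` should print nothing for the file above.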

conf/stream/mysql.conf

```nginx
proxy_timeout 30m;

upstream mysql-slave-cluster {
    # docker-compose.yml gives the mysql-slave containers fixed IPs, so those fixed IPs are used here
    server 10.10.10.20:3306 weight=1;
    # backup server: used only when the server above is unavailable;
    # as long as the server above is up, all traffic is forwarded to it
    server 10.10.10.30:3306 weight=1 backup;
}

server {
    listen 0.0.0.0:3305;
    proxy_pass mysql-slave-cluster;
}

# role    host       host-port  container address
# master  localhost  3307       10.10.10.10:3306
# slave1  localhost  3305       10.10.10.20:3306
# slave2  localhost  3305       10.10.10.30:3306
```

conf/nginx.conf

```nginx
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

# stream module for TCP reverse proxying
stream {
    include /opt/nginx/stream/conf.d/*.conf; # load every config file in /opt/nginx/stream/conf.d
}
```
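With this setup, reads sent to localhost:3305 go to mysql-slave1. nginx switches to the `backup` server mysql-slave2 only when it cannot use mysql-slave1. One way to observe this is to ask the server behind the proxy for its `server_id` (1, 2 and 3 in docker-compose.yml). The sketch assumes a `mysql` client on the host and the root password from the compose file. The function names are hypothetical:

```bash
#!/usr/bin/env bash
# mysql_node_name SERVER_ID
# Maps the --server-id values from docker-compose.yml to container names.
mysql_node_name() {
  case "$1" in
    1) echo mysql-master ;;
    2) echo mysql-slave1 ;;
    3) echo mysql-slave2 ;;
    *) echo unknown; return 1 ;;
  esac
}

# which_node_behind_proxy [HOST_PORT]
# Connects through the given host port (default 3305, the nginx proxy) and
# reports which container answered, based on @@server_id.
# Assumes the host mysql client and root/123456 from the compose file.
which_node_behind_proxy() {
  local port=${1:-3305} id
  id=$(mysql -h127.0.0.1 -P"$port" -uroot -p123456 -N -e 'SELECT @@server_id') || return 1
  mysql_node_name "$id"
}
```

Run `which_node_behind_proxy` first; it should report `mysql-slave1`. Then run `docker stop mysql-slave1` and call it again; it should now report `mysql-slave2`. `which_node_behind_proxy 3307` bypasses nginx and should report `mysql-master`.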

init/master/create_canal_user.sh

```bash
#!/bin/bash
# Create a database account (despite the file name, the SQL below creates a
# `nacos` user with all privileges on the `nacos` schema)
# MySQL login user
ADMIN_USER=${ADMIN_USER:-root}
# MySQL login password
ADMIN_PASSWORD=${ADMIN_PASSWORD:-123456}
# SQL that creates the account and grants its privileges
CREATE_USER_SQL="CREATE USER 'nacos'@'%' IDENTIFIED BY '123456';"
GRANT_PRIVILEGES_SQL="grant all on nacos.* to 'nacos'@'%' with grant option;"
# SQL that reloads the privilege tables
FLUSH_PRIVILEGES_SQL="FLUSH PRIVILEGES;"
# Run the SQL
mysql -u"$ADMIN_USER" -p"$ADMIN_PASSWORD" -e "$CREATE_USER_SQL $GRANT_PRIVILEGES_SQL $FLUSH_PRIVILEGES_SQL"
```

init/master/create_sync_user.sh

```bash
#!/bin/bash
# Create the MySQL master/replica replication account
# Replication username
MASTER_SYNC_USER=${MASTER_SYNC_USER:-sync_admin}
# Replication password
MASTER_SYNC_PASSWORD=${MASTER_SYNC_PASSWORD:-123456}
# MySQL login user
ADMIN_USER=${ADMIN_USER:-root}
# MySQL login password
ADMIN_PASSWORD=${ADMIN_PASSWORD:-123456}
# Host(s) the replication account may log in from
ALLOW_HOST=${ALLOW_HOST:-%}
# SQL that creates the account
CREATE_USER_SQL="CREATE USER '$MASTER_SYNC_USER'@'$ALLOW_HOST' IDENTIFIED BY '$MASTER_SYNC_PASSWORD';"
# SQL that grants the replication account two privileges: REPLICATION SLAVE, needed by
# replicas to read the binlog, and REPLICATION CLIENT, which allows SHOW MASTER STATUS
GRANT_PRIVILEGES_SQL="GRANT REPLICATION SLAVE,REPLICATION CLIENT ON *.* TO '$MASTER_SYNC_USER'@'$ALLOW_HOST';"
# SQL that reloads the privilege tables
FLUSH_PRIVILEGES_SQL="FLUSH PRIVILEGES;"
# Fix remote-connection errors: let root log in from any host
ORIGIN_HOST_SQL="update mysql.user set host='%' where user='root';"
# Run the SQL
mysql -u"$ADMIN_USER" -p"$ADMIN_PASSWORD" -e "$CREATE_USER_SQL $GRANT_PRIVILEGES_SQL $ORIGIN_HOST_SQL $FLUSH_PRIVILEGES_SQL"
```

init/slave/slave.sh

```bash
#!/bin/bash
# Replication account used to connect to the master
SLAVE_SYNC_USER="${SLAVE_SYNC_USER:-sync_admin}"
# Password of the replication account
SLAVE_SYNC_PASSWORD="${SLAVE_SYNC_PASSWORD:-123456}"
# Replica database account
ADMIN_USER="${ADMIN_USER:-root}"
# Replica database password
ADMIN_PASSWORD="${ADMIN_PASSWORD:-123456}"
# Master host to connect to
MASTER_HOST="${MASTER_HOST:-%}"
# Wait 10 s so the master has finished starting; otherwise the connection fails
sleep 10
# Query the master's binary log status and extract the log file and position.
# The replication user needs REPLICATION CLIENT to run SHOW MASTER STATUS.
RESULT=$(mysql -u"$SLAVE_SYNC_USER" -h"$MASTER_HOST" -p"$SLAVE_SYNC_PASSWORD" -e "SHOW MASTER STATUS;" | tail -n +2 | awk '{print $1,$2}')
# Extract the log file name
LOG_FILE_NAME=$(echo "$RESULT" | awk '{print $1}')
# Extract the log position
LOG_FILE_POS=$(echo "$RESULT" | awk '{print $2}')
# Replication settings for connecting to the master
SYNC_SQL="change master to master_host='$MASTER_HOST',master_user='$SLAVE_SYNC_USER',master_password='$SLAVE_SYNC_PASSWORD',master_log_file='$LOG_FILE_NAME',master_log_pos=$LOG_FILE_POS;"
# Start replication
START_SYNC_SQL="start slave;"
# Show replication status (\G already ends the statement, so no trailing semicolon)
STATUS_SQL="show slave status\G"
# Fix remote-connection errors: let root log in from any host
ORIGIN_HOST_SQL="update mysql.user set host='%' where user='root';"
# SQL that reloads the privilege tables
FLUSH_PRIVILEGES_SQL="FLUSH PRIVILEGES;"

mysql -u"$ADMIN_USER" -p"$ADMIN_PASSWORD" -e "$ORIGIN_HOST_SQL $FLUSH_PRIVILEGES_SQL $SYNC_SQL $START_SYNC_SQL $STATUS_SQL"
```
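The script ends with `show slave status\G`, whose output is easy to miss in the container log. Replication is working only when both `Slave_IO_Running` and `Slave_SQL_Running` are `Yes`. Below is a small checker for that output. `replica_running` is a hypothetical helper, and it assumes the `Slave_*` field names printed by `show slave status`:

```bash
#!/usr/bin/env bash
# replica_running < output of `show slave status\G`
# Prints any non-empty Last_IO_Error / Last_SQL_Error, then a one-line summary.
# Returns 0 only if both Slave_IO_Running and Slave_SQL_Running are "Yes".
replica_running() {
  awk '
    {
      sep = index($0, ": ")
      if (sep == 0) next                 # e.g. the "*** 1. row ***" header
      name = substr($0, 1, sep - 1)
      gsub(/^[ \t]+/, "", name)          # vertical output right-aligns field names
      value = substr($0, sep + 2)
    }
    name == "Slave_IO_Running"  { io = value }
    name == "Slave_SQL_Running" { sql = value }
    (name == "Last_IO_Error" || name == "Last_SQL_Error") && value != "" { print name ": " value }
    END {
      print "Slave_IO_Running=" io ", Slave_SQL_Running=" sql
      exit !(io == "Yes" && sql == "Yes")
    }
  '
}
```

For example: `docker exec mysql-slave1 mysql -uroot -p123456 -e 'show slave status\G' | replica_running`.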

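Added later: more service definitions to add under `services:`. maxwell joins the `mysql` network from the sharding setup above (10.10.10.10 is mysql-master).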

```yaml
  minio:
    image: minio/minio
    container_name: minio
    hostname: "minio"
    ports:
      - "9000:9000" # API port (kafka-manager also publishes 9000; remap one if both run)
      - "9001:9001" # console port
    environment:
      MINIO_ACCESS_KEY: username # admin console username
      MINIO_SECRET_KEY: password # admin console password, at least 8 characters
    volumes:
      - ./minio/data:/data # map ./minio/data to /data in the container
      - ./minio/config:/root/.minio # map the config directory
    command: server --console-address ':9001' /data # serve data from /data in the container
    privileged: true
    restart: always

  elasticsearch:
    image: 'elasticsearch:7.9.2'
    container_name: elasticsearch
    ports:
      - '9200:9200'
      - '9300:9300'
    environment:
      - discovery.type=single-node
      - 'ES_JAVA_OPTS=-Xms128m -Xmx256m'
    # `command` replaces the image's default command, so as written this container only runs
    # the plugin installer and does not start Elasticsearch; pre-installing the IK plugin in
    # a custom image is one way to get both
    command:
      bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.9.2/elasticsearch-analysis-ik-7.9.2.zip

  maxwell:
    image: zendesk/maxwell
    container_name: maxwell
    restart: always
    # --host: fixed IP of mysql-master; --rabbitmq_host: the host machine's IP, where RabbitMQ publishes 5672
    command:
      bin/maxwell --user=root --password=123456 --host=10.10.10.10 --producer=rabbitmq --rabbitmq_host='192.168.8.101' --rabbitmq_port='5672' --rabbitmq_exchange='maxwell_exchange' --rabbitmq_exchange_type='fanout' --rabbitmq_exchange_durable='true' --filter='exclude:*.*,include:blog.tb_article.article_title=*,include:blog.tb_article.article_content=*,include:blog.tb_article.is_delete=*,include:blog.tb_article.status=*'
    networks:
      mysql:
        ipv4_address: 10.10.10.40 # fixed IP
```
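Once Elasticsearch is running with the IK plugin, the `_analyze` API shows whether the plugin's analyzers (`ik_smart`, `ik_max_word`) are available. Below is a minimal sketch. It assumes the 9200 port mapping above and curl on the host. `es_analyze` and `ES_URL` are hypothetical names:

```bash
#!/usr/bin/env bash
ES_URL=${ES_URL:-http://localhost:9200}

# es_analyze ANALYZER TEXT
# Runs the Elasticsearch _analyze API and prints the JSON token list.
# TEXT is pasted into the JSON body without escaping, so it must not
# contain double quotes or backslashes.
es_analyze() {
  curl -s -X POST "$ES_URL/_analyze" \
    -H 'Content-Type: application/json' \
    -d "{\"analyzer\":\"$1\",\"text\":\"$2\"}"
}
```

For example, `es_analyze ik_max_word '中华人民共和国'` should return the IK tokens as JSON. If the plugin is not installed, Elasticsearch answers with an error instead of tokens.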

Zhejiang Public Security Network Record No. 33010602011771
