sb-deepseek-OllamaChatModel 20250715

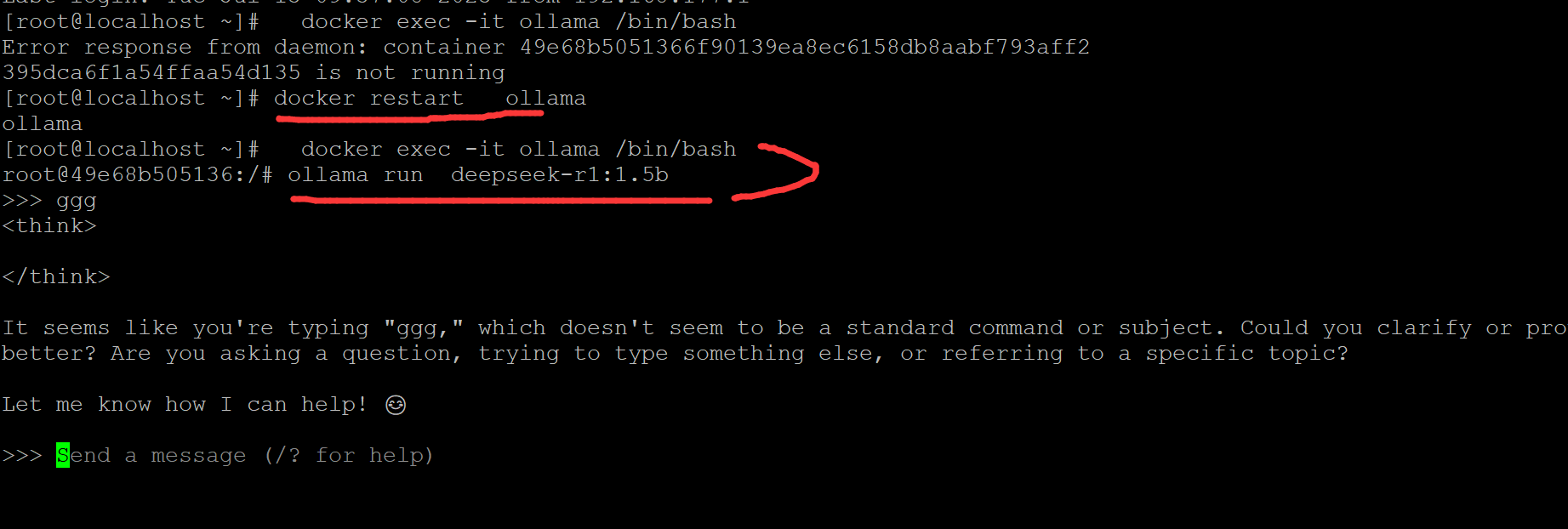

1. Run Ollama in Docker, then start the model with `ollama run deepseek-r1:1.5b`.

Host machine: 192.168.177.128

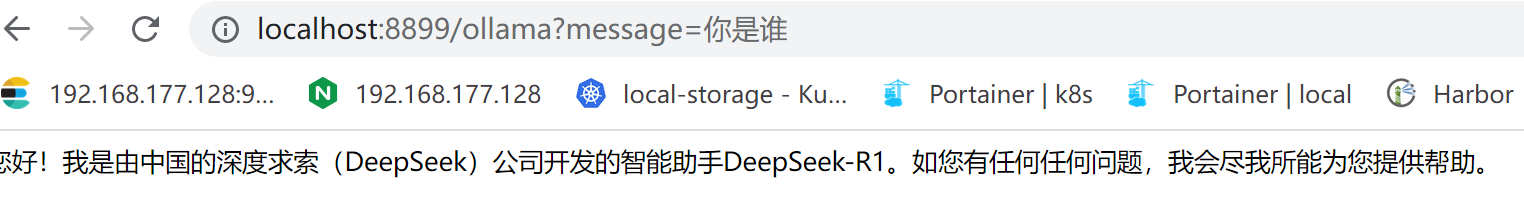
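Before you wire up Spring Boot, you can confirm two things: the Ollama container is reachable from the machine that will run the application, and the model has been pulled. The sketch below makes two assumptions. First, the container publishes Ollama's default port 11434 on the host, which is the port section 2.1 uses. Second, it queries Ollama's `GET /api/tags` endpoint, which lists the locally available models. The class and method names (`OllamaHealthCheck`, `tagsUri`, `listsModel`) are hypothetical. The model check is a naive string match, not real JSON parsing.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper: checks that Ollama answers on the host and lists the model.
public class OllamaHealthCheck {

    // Builds the tags endpoint from a base URL such as http://192.168.177.128:11434,
    // tolerating a trailing slash.
    static URI tagsUri(String baseUrl) {
        String trimmed = baseUrl.endsWith("/")
                ? baseUrl.substring(0, baseUrl.length() - 1)
                : baseUrl;
        return URI.create(trimmed + "/api/tags");
    }

    // Naive check: looks for the quoted model name in the raw JSON body.
    // A real client would parse the JSON (for example with Jackson).
    static boolean listsModel(String tagsJson, String model) {
        return tagsJson.contains("\"" + model + "\"");
    }

    public static void main(String[] args) throws Exception {
        String baseUrl = args.length > 0 ? args[0] : "http://192.168.177.128:11434";
        String model = "deepseek-r1:1.5b";

        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(tagsUri(baseUrl)).GET().build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString());

        System.out.println("HTTP " + response.statusCode());
        System.out.println(model + " available: " + listsModel(response.body(), model));
    }
}
```

A connection error here usually points to a port mapping or firewall problem on the host, not to the Spring configuration.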

2. Spring Boot example

2.1 application.properties

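```properties
spring.application.name=ai-ollama
server.port=8899

# Ollama running in Docker on the host machine
spring.ai.ollama.base-url=http://192.168.177.128:11434
# Model and sampling options are bound under spring.ai.ollama.chat.options.*
spring.ai.ollama.chat.options.model=deepseek-r1:1.5b
spring.ai.ollama.chat.options.temperature=0.7
```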
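The following hypothetical sketch shows what these settings control. It bypasses Spring and sends approximately the kind of request that Spring AI's `OllamaChatModel` is assumed to make: a `POST` to Ollama's `/api/chat` endpoint under the base URL, with the model name in `model` and the temperature under `options`. The sketch uses only `java.net.http` (Java 11+) and hand-builds the JSON. It illustrates the mapping and is not the library's actual implementation. `OllamaChatRequestSketch`, `chatUri`, `jsonString` and `chatBody` are illustrative names.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical illustration of base-url / model / temperature -> Ollama /api/chat request.
public class OllamaChatRequestSketch {

    // base-url -> the chat endpoint the chat model is assumed to call
    static URI chatUri(String baseUrl) {
        return URI.create(baseUrl + "/api/chat");
    }

    // Escapes backslashes, quotes and newlines so the text is a valid JSON string.
    static String jsonString(String s) {
        return "\"" + s.replace("\\", "\\\\")
                       .replace("\"", "\\\"")
                       .replace("\n", "\\n") + "\"";
    }

    // model -> "model", temperature -> "options.temperature".
    // stream=false asks Ollama for one JSON response instead of streamed chunks.
    static String chatBody(String model, double temperature, String message) {
        return "{\"model\":" + jsonString(model)
                + ",\"messages\":[{\"role\":\"user\",\"content\":" + jsonString(message) + "}]"
                + ",\"stream\":false"
                + ",\"options\":{\"temperature\":" + temperature + "}}";
    }

    public static void main(String[] args) throws Exception {
        String body = chatBody("deepseek-r1:1.5b", 0.7, "hello");
        HttpRequest request = HttpRequest.newBuilder(chatUri("http://192.168.177.128:11434"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The reply text is in the "message.content" field of the returned JSON.
        System.out.println(response.body());
    }
}
```

A lower temperature makes the answers more deterministic. A higher one makes them more varied.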

2.2 OllamaController

```java
package com.ds.aiollama.controller;

import org.springframework.ai.ollama.OllamaChatModel;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class OllamaController {

    // Auto-configured by Spring AI from the spring.ai.ollama.* properties
    private final OllamaChatModel chatModel;

    // Constructor injection; a single constructor needs no @Autowired
    public OllamaController(OllamaChatModel chatModel) {
        this.chatModel = chatModel;
    }

    // GET /ollama?message=... sends the message to the model and returns its reply as text
    @GetMapping("/ollama")
    public String ollama(@RequestParam(value = "message", defaultValue = "hello") String message) {
        return chatModel.call(message);
    }
}
```
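Once the application is running on port 8899, you can call the endpoint from a browser (`http://localhost:8899/ollama?message=hello`) or from code. The client below is a minimal sketch that assumes the app runs on `localhost`. `OllamaEndpointClient` and `endpoint` are hypothetical names. The client URL-encodes the message so that spaces, punctuation and Chinese text survive the query string. If you leave the parameter out, the controller falls back to its default, `hello`.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical client for the /ollama endpoint of the Spring Boot app.
public class OllamaEndpointClient {

    // Builds http://host:port/ollama?message=<UTF-8 URL-encoded message>
    static URI endpoint(String host, int port, String message) {
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8);
        return URI.create("http://" + host + ":" + port + "/ollama?message=" + encoded);
    }

    public static void main(String[] args) throws Exception {
        String message = args.length > 0 ? String.join(" ", args) : "hello";
        HttpRequest request = HttpRequest.newBuilder(endpoint("localhost", 8899, message))
                .GET()
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString(StandardCharsets.UTF_8));
        System.out.println(response.body());
    }
}
```

`URLEncoder` encodes spaces as `+`. Spring decodes them back to spaces when it binds the `message` request parameter.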

2.3

浙公网安备 33010602011771号
